Scalable Socket Server

4.89/5 (36 votes)

How fast can your PC serve up data?

Introduction

This project is the result of a blindingly fast Node.js demonstration. I became interested in the question: Just how fast can my PC serve up networked content? What is the best way to structure the code to get maximum performance?

I decided to find out.

Kegel's article "The C10K problem" suggests it was not unreasonable in 2001 for a web server to support 10,000 simultaneous clients requesting 4K from disk.

I will ignore that fact that there are those on the Internet who consider C10K "so last decade" and now target 1 million concurrent transactions using commodity hardware. That sort of performance/scalability requires specialist programming including replacing the network driver.

There is a reasonable amount of literature in the Linux world on how to go about writing networked servers and clients. The Windows world is less well served. There is a reasonable amount of documentation for WCF but that is not directly relevant to C++. The Windows Socket 2.0 API is well documented but perhaps not the best way to use it. The plan is to produce a number of separate articles covering:

- Windows socket programming.

- Linux socket programming.

Please note:

- The article is not meant to provide an exhaustive treatment of the socket programming.

- The article is not meant to provide an exhaustive analysis of server architecture performance.

- The article does not consider "keep-alive" connections, jumbo frames or mechanisms to prevent denial-of-service attacks.

I do not have access to high-speed network at home so client and server applications were tested on the same machine. Anti-virus software was turned off and the machine disconnected from the Internet. IIS and all unnecessary desktop applications were closed down. The assumption is that this will be enough to reproduce the real-world relative performance.

Part 1 - Windows Sockets

Architecture

What should an "efficient" socket server look like? There are two "extreme" approaches:

-

The Threading Model

Each time a server receives a request, a thread is created to service the request ("one-thread-per-request"). The threads wait for blocking operations such as file I/O and run until requests are fully processed.

The obvious problem with this is that the total number of threads required is unbounded. Threading also requires locking or other strategies to coordinate access to shared resources. Thread creation and locks are expensive. The up-side of this approach is that development can be much easier than using the event model.

-

The Event Model

Incoming requests are added to a queue. A finite state machine (FSM) running on a single thread processes the requests on the queue. No blocking is allowed. Operations such as file I/O are implemented using asynchronous calls. If an asynchronous call is made during the request processing then processing on the current request stops, the current state is saved and processing starts on the next request in the queue. When the asynchronous call returns, the original request is re-added to the queue, or possibly another prioity queue.

Event based systems do not have the overhead associated with threads and locks. However as only a single thread is used, they do not utilise the full power of modern processors. FSM are also hard to understand and modify. Frameworks such as Node.js provides a more user-friendly and flexible callback mechanism which allows developers to implicitly define their own FSM.

Many hybrid architectures attempt to combine the best of both approaches. Even so, it is fair to say that in the IT community generally, "threads are bad, events are good".

In 1978 Lauer and Needhams published the paper "On the duality of Operating System Structures" which showed that threads and events are "dual": what can be done with one model can be done with the other.

The process of creating an "efficient" socket server therefore comes down to using an architecture that minimises overheads (thread context switching, locking, memory paging etc).

Environment

Tests were conducted on a Dell Vostro running Intel(R) Core(TM) i5-3450 CPU @3.10 GHz, 8.00 GB Ram. The operating system(s) is Windows 8.1 Pro 64-bit (and Mint 17). The network card is an integrated Realtek PCIe GBE which is in theory capable of gigabits per second. The machine was bought in 2012. It has also become polluted with various applications that insist on running in the background. Task Manager shows a steady 5% of CPU consumed by background tasks after some optimisation.

Tools

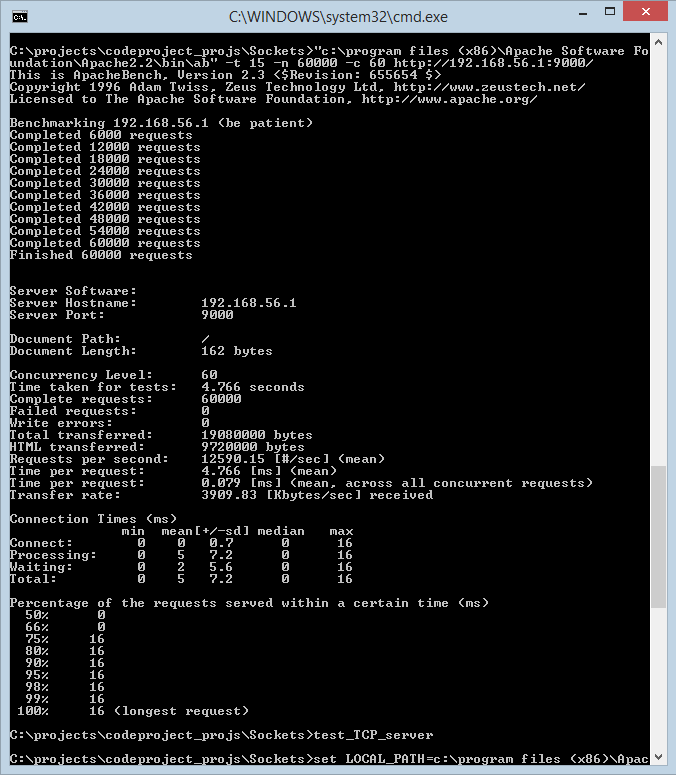

The Apache Benchmarking tool ab was used to test the TCP servers and generate statistics. A typical output from ab is shown below.

The base test configuration is 60,000 requests with 10-10,000 concurrent connections. Each test configuration was run at least 4 times to get representative performance figures.

Code

The code was compiled using the Community version of Visual Studio 2013. The project comes with the Visual Studio solution and project files used to build the projects on my machine. You will need to ensure that:

- boost is installed and the libraries built (using bootstrap).

- The VC++ Include directory includes the boost library.

- The VC++ Library directory includes the directory containing the built boost libraries (stage/lib at the time of writing).

The sample uses the following classes:

socket- a thin wrapper around the classic socket API. Not all of the socket API is wrapped. No attempt is made to be elegant. The motivation is to provide RAII management of sockets. If you want fully functional socket classes, there are plenty on the web. E.g. SocketCC, CSocket, PracticalSocket, SocketCC, SimpleSockets, RudeSocket, ...dedicated_heap- a dedicated heap used to manage buffer memory; used with overloaded versions ofnewanddelete. The class can be compiled to usestd::vectororVirtualAlloc.thread_pool- a lightweight thread pool class used to to process HTML requests.

None of the code has undergone commercial levels of testing. Please report bugs and give me time to fix them rather than down-voting me!

Base Configuration

The base configuration used for performance testing is:

- Default HTML response (4K)

- Windows Firewall off. Windows Defender off.

- Anti-virus software removed, and computer disconnected from Internet.

The HTML response from the server can be examined by typing the server url into a web browser. (The server url is printed to std::cout during initialisation)

The project is not particularly concerned with the efficiency of any code needed to process a HTML request; once started, the server will always the return the same HTML. The HTML returned by the server can be customised by passing the name of a file containing the new HTML on the command line.

Content

The following patterns have been implemented and considered:

- UDP client and server.

- Multi-threaded TCP server using

listenandaccept. - Single-threaded TCP select server using

selectandaccept. - Windows I/O completion port socket server.

- asio sync and async servers.

UDP client and server

The purpose of these applications is to see how fast packets can be generated and processed. The simple UDP client + server were able to produce and consume 230,000 messages per second. No effort was made to optimise the code. Since both client and server application were running on the same machine, it seems likely that a ballpark figure of up to 460,000 packets/sec might be possible on a dedicated server with a good network card.

Estimate of TCP/IP performance based on UDP performance.

The following packets are generated to service a simple single-packet HTML request with a simple single-packet HTML response.

- SYN

- SYN + ACK

- ACK

- HTML request

- ACK

- HTML response

- ACK

- FIN

- ACK

- FIN

- ACK

A single HTML request results in at least 11 TCP/IP packets moving across the wire. Assuming the packet size is actually about 1500 bytes, a request for 4K generates 3 data packets and 3 low-level ACK packets giving a total of 15 packets. If we assume that the UDP is efficient (it has none of the complexity of a TCP/IP server), and we were able to implement our own super-efficient TCP/IP protocol using raw sockets, then we could expect 230,000/15 = 15,333 requests per second (rps). (Windows does not support raw sockets except on Windows servers to preventing malicious desktop code misusing the facility).

Multi-threaded TCP server

This server uses a thread-pool to minimise the cost of thread creation. Incoming requests are added to a task/request queue where they are then processed in order of arrival. In that sense it could be argued that the server is an hybrid which uses aspects of both threading and event models.

Without error handling the sequence of calls looks like:

thread_pool.start_up()

bind() // to local end point.

listen() // for incoming connections.

for(;;)

{

incoming_socket = accept() // wait on/accept incoming connections

create task to process incoming connection.

Task is:

{

recv();

... // process the request

send(); // the appropriate response

}

}

Thread pools tend to work best when the number of threads is roughly the same as the number of processor cores (4 on my machine).

There is a limit to the number of threads supported by Windows. By default, each thread is allocated 1MB for stack space, which puts an upper limit on the number of threads somewhere below 2048. It is possible to change the stack size, but Windows will always allocate at least 64K - which gives a limit of less than 31,250 threads. In practice the limit for a server would be considerably less than this since the memory would need to be allocated for the heap to perform the normal functions of the server.

The maximum number of threads can be increased beyond the Windows limit by using a user threading package which can also avoids the cost of context switching by using techniques such as co-routines. However such libraries are not common and a multi-threaded server will always use more resources than equivalent event driven server simply because each thread must have its own stack space. A real-world server using a large number of threads would also face other limitations such as restrictions on the maximum number of open files, limits on the number of open database connections etc.

This pattern is simple to implement and is suitable for computed content such as charts and mathematical calculations. The addition of blocking I/O would severely degrade performance.

The single-threaded TCP select Server

This server follows pattern often seen in Linux socket programming literature:

bind() // to local end point.

listen() // for incoming connections.

fd_set known_sockets;

add listen_socket to known_sockets

for(;;)

{

fd_set ready_to_read;

select(known_sockets, ready_to_read); // find which sockets are ready to read.

foreach(socket in ready_to_read)

{

if(socket == listening_socket)

{

incoming_socket = accept() // wait on/accept incoming connections

add incoming_socket to known_sockets

}

else

{

recv();

... // process the request

send(); // the appropriate response

}

}

}

The pattern can be implemented as blocking/non-blocking.

Although Microsoft supports the familiar socket API, the Microsoft implementation differs from "standard" Unix/Linux implementation. For example, the first parameter to select is ignored. The FD_SET, FD_CLR macros uses a (slow) loop to search for the required socket in the socket set; Linux implementations use the file descriptors as indices.

Microsoft states that "Mechanisms like the WSAAsyncSelect and select functions are provided for easier porting from Windows 3.1 and Unix respectively, but are not designed to scale" [1] so it would be expected that the performance would not be as good as other architectures. Microsoft have another pattern they would prefer you to use for high performance applications.

IO Completion Port (IOCP) Server

IO completion port servers are recommended by Microsoft for building scalable hi-performance server applications and dates back to the mid-1990s. IOCP servers are considerably more complex than the previous servers. Pseudo-code follows:

class connection

{

enum completion_key { none, IO, shutdown }

enum connection_state { recv, send, accept, reset } operation;

...

};

IOCP = CreateIoCompletionPort()

bind() // to local end point.

listen() // for incoming connections.

CreateIoCompletionPort(list_socket, IOCP) // Associate listen socket with IOCP.

Load AcceptEx function

create WSAOVERLAPPED and buffer pool.

create threads.

foreach(thread):

{

for (int i=0; i<max_connection; i++)

create_connection()

while(!stopping())

{

get completion port status // via GetQueuedCompletionStatusEx()

key = OVERLAPPED_ENTRY.lpCompletionKey

if(key == shutdown)

close connection;

else

{

connection = key;

app_state_machine.on_IO_complete(...) // Do whatever should be done next.

// Inside the state machine...

switch(operation)

{

case accept:

on_accept(); // also includes recv operation.

break;

case recv:

on_recv_complete(); // calls WSASend()

break;

case send:

on_send_complete(); // finished.

break;

}

if(finished)

{

delete_connection()

call create_connection()

}

}

}

}

create_connection()

{

// Create connection object and block until AcceptEx returns.

create a connection object.

create accept socket for connection.

CreateIoCompletionPort(accept_socket, IOCP, key = pointer to connection) // Associate accept socket with IOCP.

Call AcceptEx(...) passing in the connection (derived from WSAOVERLAPPED) and a completion key = IO

}

The code follows the pattern of socket servers in that new connections are created by listening on a accept socket with AcceptEx. The pattern differs in that (i) a new socket is created up front (before the call to AcceptEx), (ii) the socket is associated with a ULONG_PTR key value via CreateIOCompletionPort, and (iii) AcceptEx is treated like any asynchronous function and does not block, rather execution blocks on GetQueuedCompletionStatusEx which will notify the calling thread of any new connection.

Once a connection is established, each I/O completion invokes the application specific state machine to determine what action should be taken next (send/receive data or close socket). All calls to WSASend and WSARecv are asynchronous - they return immediately. Threads check the completion port status by calling GetQueuedCompletionStatusEx which returns when I/O is complete (or there is an error condition). GetQueuedCompletionStatusEx supplies the key associated with the send/receive socket - in our case the key is used to determine whether the server should shutdown.

The implementation uses GetQueuedCompletionStatusEx since it is capable of de-queuing multiple completion packets in one go, therefore reducing the number of context switches and potentially improving performance.

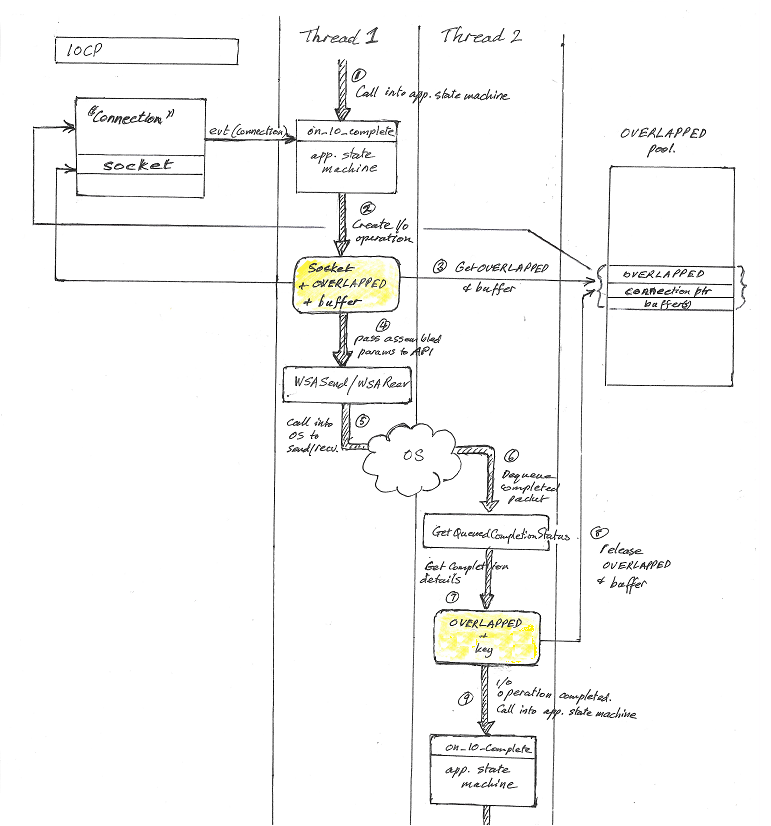

The diagram below shows the typical steps that take place during processing:

-

Thread 1 calls into the application state machine to determine what should be done next.

-

The state machine decides to perform an I/O operation and assembles the required data (socket +

WSAOVERLAPPED+ data buffer) -

The

WSAOVERLAPPEDstructure and data buffer are obtained from theWSAOVERLAPPEDpool. TheWSAOVERLAPPEDpool is a heap like structure containing multiple pre-allocated triplets (WSAOVERLAPPED+ data buffer + connection pointer). The triplet is updated so that the connection pointer points to the associated connection. -

The assembled data is passed to

WSASendorWSARecvwhich return immediately. Thread 1 moves onto processing other work. -

Windows schedules the data for transmission (

WSASend) or waits for data to arrive from the network (WSARecv). -

The I/O operation completes, a completion packet is queued and then retrieved by

GetQueuedCompletionStatusExon thread 2. -

The

GetQueuedCompletionStatusExfunction returns a pointer to the originalWSAOVERLAPPEDstructure and the key associated with the send/recv socket. -

The

WSAOVERLAPPEDstructures and buffers may be released back to the OVERLAPPED pool if they are no longer needed. Alternatively they are immediately reused. -

Thread 2 calls into the application state machine to determine what should be done next.

The server uses VirtualAlloc to allocate memory and VirtualLock to lock the virtual memory into physical memory. The amount of required memory required for buffers and WSAOVERLAPPED structures is calculated during initialisation and the working set size adjusted to ensure the VirtualAlloc and VirtualLock functions do not fail. In early testing, using VirtualAlloc and VirtualLock resulted in about a 10% increase in throughput.

It should be noted that the use of virtual memory in the current code is not efficient or complete. The project uses code I wrote years ago. I may rewrite the code in the future. (These articles are a major undertaking, mostly because of the amount of checking and code testing that has to be done)

Design Considerations

The server does not use the ability of WSASend/WSARecv to send/receive data from multiple buffers in a single call. If that functionality is required it would be necessary to have separate WSAOVERLAPPED and buffer pools since they would no longer be in 1:1 correspondence.

The current design allows the application logic to have multiple overlapped WSASend and WSARead operations on a socket (connection) so the WSAOVERLAPPED/buffer and sockets (connections) are no longer in 1:1 correspondence with each other and cannot be combined into a single structure.

A simple Echo server would only ever have a single I/O operation pending per connection so in that case it would be possible to do away with the WSAOVERLAPPED pool and combine the WSAOVERLAPPED + data buffer + connection object into the same structure (typically an extended WSAOVERLAPPED).

It is possible to pass the address of the connection object as the completion port key associated with the accept socket. That means that when GetQueuedCompletionStatusEx returns the key can be cast to a connection object from which all the other data structures can be found. However that does not work for AcceptEx as GetQueuedCompletionStatusEx returns the key associated with the listen socket, not the accept socket.

The server cannot detect if the client suddenly dies or otherwise fails to correctly close down the connection. "Dead" connections can be a problem as they consume resources and eventually (sometimes quite quickly) cause the server to run out of memory. They can be used to implement a denial-of-service attack. The suggested way to handle this is to kill a connection if it has not received a data packet within a certain length of time. Windows will do this anyway but the default time-out is 10 minutes which is not appropriate for high performance/capacity servers. The solution to dead connections is application specific - in our case the problem is partially avoided by closing the connection after sending the response.

Asio

Performance

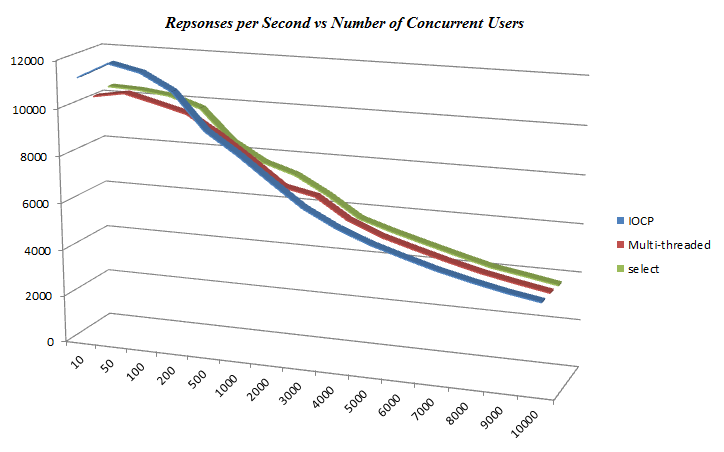

So which server architecture delivers the best perforamance? The server was tested using the standard configurations with 10 to 10,000 concurrent users. Reponses per second are shown in the figure below.

The IOCP server delivers the best performance below 200 concurrent users. Beyond 200 concurrent users, performance of all architectures is pretty much the same. Time per request (across all concurrent requests) shows a similar pattern starting around 0.09 ms and converging across architectures to 0.267 ms.

That's just not right.

It seems that the performance is actually being limited by something other than the socket server software itself. The obvious possibilities are:

-

There is a fundamental limit on how fast Windows can process socket requests and our applications are bumping up against that limit. Although such a limit exists, it seems likely that the limit would be affected by the server architectures since they use the kernel in very different ways, so this possibility seems unlikely.

-

ab.exe (a socket application itself) is not up to the job of serving up the required number of packets and beyond 200 users is really reporting on its own performance; that assertion is supported by various posts on the Internet.

Our original assumption that we could do reliable performance comparisons on a single machine has proved not to be correct. The testing regime was inadequate.

I need to more powerful machine anyway (I have been developing software using the Android emulator). When that happens, I will/may review this article and try a more sophisticated approach to benchmarking.

Testing below 200 concurrent users suggests:

-

Tweaking compiler options and locking virtual memory had a small impact on performance. Reusing sockets did not really seem to make a difference. That may be because the servers are not really operating at maximum capacity.

-

Tweaking the number of threads, number of buffers per thread and even the number of

OVERLAPPED_ENTRIEScan make significant differences to performance. These can be set on the command line to allow experimentation and optimisation. -

It is hard to feel confident that the performance of the servers is not being affected by the multitude of application/services chugging away in the background of any desktop PC. Anti-virus software may report being turned off when it isn't really. Very dramatic improvements in performance can be had by using the Control Panel/Administrative Tools/Service window to actually turn off services.

No performance data is available for the asio servers.

Conclusion

If you want to write a performant socket server using Windows, it probably makes sense not to fight City Hall - write an I/O completion port server - but that advice is based on very limited evidence.

On our low spec PC, the IOCP server yielded about 12,600 responses per second with a 1K packet at around 100 concurrent users. That's very respectable considering the results where obtained on a single machine running both the client and server, and that the performance was probably under-reported by ab.exe.

A final reminder is that my figures were obtained on my machine and should not be regarded as reliable. Your test results will almost certainly differ from mine.