Manage, monitor, and control all your IoT devices with Overlord

Overlord is an open-source .NET IoT management platform written in C# and running on Microsoft Azure.

Table of Contents

- Overview

- Status

- Goals

- Why build cloud IoT apps with .NET?

- Static typing and compiler infrastructure

- Visual Studio 2013

- Performance

- Documentation

- Design

- Azure Services

- Storage

- Data flow

- Data parallelism for cloud IoT apps

- Storing time series data for cloud IoT apps

- Entities

- Logging

- Security

- Testing

- Points of interest

Overview

Overlord is a cloud-scale IoT platform that provides an HTTP REST API for bi-directional data transfer and communication with any device that can connect to the Web or to a private network over TCP/IP. Overlord provides an API for managing devices, sensors, readings, channels, alerts, and messages. Devices can register sensors and push data to the server using a simple REST API; the server acknowledges each push and responds with any messages or alerts that have been generated for that device. Device sensor data grouped as channels can be streamed to subscribing devices using a simple request-response model in which the client sends the index or date of the last channel data item it processed. Devices can set alerts on channels, and these can be triggered by sensor data exceeding some threshold value. On-demand messages can be sent from one device to another through the API. Messages and alerts for a device are placed on a queue and are popped off in the order received once the device confirms it has processed the message or alert.

You can use Overlord as an aggregator node on a sensor network that stores or replicates your sensor data to the public cloud. Overlord does not require your IoT devices to understand protocols like OData. You can use Overlord from Python or Bash command-line scripts without installing any client-side libraries.

Overlord is hosted on GitHub.

Status

A 0.1.0 release is planned for March 31st, 2015. So far I've committed the Security layer and about 80% of the Storage layer. I've also written a set of passing unit tests for the storage and security layers.

Download current Overlord source code.

You can open the solution in Visual Studio 2013. By default Overlord uses the Azure Storage Emulator when in debug mode. NuGet package restore is enabled, so all the required NuGet packages should be installed for the project. Run all the unit tests in the Overlord.Testing project and make sure they complete successfully, especially the ingestSensorValues unit tests. You can view the contents of the device, user, channel, device channel and digest tables, the queues and so on right in Visual Studio.

You can check my GitHub for further updates and releases.

Goals

There are a number of IoT management platforms out there and several of them are free and open-source. Microsoft itself has announced its plans for the Azure Intelligent Systems Service, which leverages the huge number of services Microsoft has built for its Azure PaaS to let users manage all their data from any device conceivable. Overlord is an open-source IoT platform with a somewhat different philosophy from other IoT platforms.

One drawback many HTTP IoT platforms share is the need for the IoT device to use special client libraries or support operations like JSON parsing when sending and receiving data. A very low-cost Arduino device may only have 32K of flash memory for code and 2K of SRAM for data, and may use an Ethernet or Wi-Fi shield that doesn't have encryption and HTTPS capabilities in its network stack. Or say you are using a low-cost microcontroller with an embedded Ethernet interface like those developed by Freescale. This is the scenario protocols like MQTT and CoAP were designed to address, but CoAP runs over UDP and MQTT over its own TCP-based protocol, and both are designed for M2M communication between sensor nodes and devices, not for sending data to the public cloud over HTTP.

You might have a scenario where you want to send and stream data to and from the cloud using HTTP, but without having to do things like JSON parsing or supporting WebSockets, which can require IoT devices that are more like full-fledged computers such as the Raspberry Pi. You might have existing IoT applications or networks that use a particular language, library or technology that you don't want to modify significantly in order to use cloud data storage and communication.

Overlord's goal is to be a zero-footprint platform for IoT data storage and communication. Devices do not need any special client libraries to send data to Overlord, stream data channels, or receive alerts and messages. Any device with a typical TCP/IP stack that can make HTTP requests can connect to Overlord without parsing JSON or XML and without using WebSockets or HTTP keep-alives. For embedded devices running Linux, like the Raspberry Pi 2 which I'm using as a test device, you can use the Overlord API from a terminal session; e.g. you can write a simple shell script to poll the GPIO pins and send their state to Overlord using wget or curl. For embedded devices like the Arduino or Freescale Freedom that can be programmed in C, you can connect to an Overlord server and send and receive data using a standard TCP/IP network stack or a client library like the Arduino WebClient, without the need for additional libraries. The data your device sends and receives is formatted as flat CSV-type structures and can be parsed very easily by programs written in C or by standard Unix shell tools like sed. You can use Overlord to mirror the data your devices are collecting now without having to significantly alter your existing controller devices or data nodes.

Overlord is designed to be very extensible and pluggable with different hosting environments, storage providers and network protocols. You can run your own Overlord instance on your Azure website, or even as a standalone server process on your private network with Azure used only as a storage backend.

Why build cloud IoT apps with .NET?

There are a lot of cloud applications out there that use languages like JavaScript, Python or Ruby and run on platforms like Node.js. MeshBlu, for example, is an open-source IoT management platform that runs on Node.

In my opinion, languages that enforce strong typing and static type checks like C# are way better suited for doing cloud-scale development than languages like JavaScript or CoffeeScript and Python. And frameworks like the .NET Framework and the .NET runtime with class libraries like the Task Parallel Library are way better suited for the kind of multi-tiered development and security and performance requirements cloud apps need. Some of the benefits of building cloud apps with .NET are:

1. Static Typing and Compiler Infrastructure

I never took the static typing of languages like C# to be a hindrance. When I write JavaScript code I'm always fiddling with parameter orders and adding guards against unexpected values from a call.

Technology changes very rapidly in software development. For an app with multiple layers and a lot of moving parts like Overlord, or where I have to learn a new library or API, I can't imagine not being able to depend on the 'security blanket' of compiler type checking. Whether I was working with the Azure Table Data Service or Windows Identity Foundation, knowing in advance what a method's return and parameter types should be saved my bacon many times. After working with jQuery.Ajax in JavaScript I know how much harder it is to learn a library when you're not exactly sure what a method call is going to return. (What am I getting here: an object, a string, a stringified object, or ????)

Some of the many benefits the static typing and advanced compiler infrastructure of .NET bring are:

Extension Methods

Extension methods are one of the coolest things I've come across in any language. Extension methods are static methods that the compiler lets you call as if they were instance methods, resolving the call at compile time, so you can extend existing types very simply. Extensions are only visible in code that imports the namespace you define them in, which again is a huge plus.

Extension methods are an elegant solution to common problems that usually require clunky solutions. For example, in my storage library I have the following System.String extensions:

    using System;

    namespace Overlord.Storage
    {
        public static class StringExtensions
        {
            private const string UrnPrefix = "urn:uuid:";

            // Parses "urn:uuid:<guid>" or a bare "<guid>" in hyphenated "D" format.
            public static Guid UrnToGuid(this string urn)
            {
                return Guid.ParseExact(urn.UrnToId(), "D");
            }

            // Strips the "urn:uuid:" prefix if present and returns the bare id.
            public static string UrnToId(this string urn)
            {
                return urn.StartsWith(UrnPrefix, StringComparison.Ordinal)
                    ? urn.Substring(UrnPrefix.Length)
                    : urn;
            }

            // Parses a bare GUID string in hyphenated "D" format.
            public static Guid ToGuid(this string s)
            {
                return Guid.ParseExact(s, "D");
            }
        }
    }

This lets me have one place where I deal with parsing GUID identifiers for devices etc. that are strings. So I can say things like:

"urn:uuid:d155074f-4e85-4cb5-a597-8bfecb0dfc04".UrnToGuid()

very naturally. These kinds of type extensions can in principle be done by dynamic languages like JavaScript, but obviously letting the C# compiler do the work means it's a lot less work for you and much safer for your code, compared with extending object prototypes at runtime say.

LINQ

LINQ took me a while to get my head around when I first started and I'm still a novice. But once you 'get it' it makes dealing with collections, lists and arrays so much easier. Querying data collections in a type-safe way with LINQ is much easier and safer compared to using something like Underscore.js in JavaScript.

LINQ makes possible in C# the kind of one-line solutions you might expect to see in functional languages. For instance, when I add a device reading I must check that the sensor names and sensor values are valid. When you create sensors on a device you must name them like "D2" or "N1", where the first letter represents the sensor data type: DateTime or Number in our two examples. Before I write sensor values to storage I can use a simple LINQ query, together with a string extension method called IsValidSensorName, to test if any sensor values are not named properly:

    if (values.Any(v => !v.Key.IsValidSensorName()))

I can then throw an exception, using a LINQ query to generate a properly formatted list of the bad sensor values:

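    if (values.Any(v => !v.Key.IsValidSensorName()))
    {
        // Build "name:value, name:value" for every badly named sensor.
        string bad_sensors = values.Where(v => !v.Key.IsValidSensorName())
            .Select(v => v.Key + ":" + v.Value)
            .Aggregate((a, b) => a + ", " + b);
        // Format the message explicitly; ArgumentException's second string
        // argument is the parameter name, not a format argument.
        throw new ArgumentException(
            string.Format("Device reading has bad sensor names. {0}", bad_sensors));
    }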

The Where LINQ function first keeps only the sensor name-value pairs that are not valid. The Select LINQ function then projects these values to a simple list of strings. And then the Aggregate LINQ function aggregates this list into a single string formatted with spaces and commas. (string.Join can do this last step in a single call; LINQ's Concat joins sequences rather than strings.)
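As a hedged illustration of the string.Join route, here is a minimal self-contained sketch. Two things in it are assumptions rather than Overlord code: the body of IsValidSensorName is a guess based on the naming rule given in the Storage section (a type letter S, I, N, D, L or B followed by a number), and DescribeBadSensors is a hypothetical helper name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public static class SensorNameSketch
{
    // Assumed rule: one type letter (S, I, N, D, L, B) followed by ASCII digits.
    private static readonly Regex ValidName = new Regex(@"^[SINDLB][0-9]+\z");

    // Hypothetical stand-in for the article's IsValidSensorName extension.
    public static bool IsValidSensorName(this string name)
    {
        return name != null && ValidName.IsMatch(name);
    }

    // Builds "name:value, name:value" for every badly named sensor.
    public static string DescribeBadSensors(IEnumerable<KeyValuePair<string, object>> values)
    {
        return string.Join(", ", values
            .Where(v => !v.Key.IsValidSensorName())
            .Select(v => v.Key + ":" + v.Value));
    }
}
```

The result can be passed straight into the exception message, for example `throw new ArgumentException("Device reading has bad sensor names. " + SensorNameSketch.DescribeBadSensors(values));`.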

Task Parallel Library

The Task Parallel Library is a complete set of .NET types and methods for easily and safely doing parallel and concurrent operations in .NET. The TPL removes the need for you to deal with the low-level issues of multithreading in your cloud app and provides familiar language constructs for adding data and task parallelism to your code.

LINQ and the TPL make it possible to do complex operations in far fewer lines than otherwise. For example, in my Digest worker role I have to do the following operation:

- Read up to 32 messages from an Azure Storage Queue.

- Convert each message from its serialized string form to an instance of IStorageDeviceReading.

- Partition the messages into groups according to the device id of the reading. Each group must be sorted in ascending order according to the reading time.

- Now, in parallel for each group, place the readings into table storage.

    IEnumerable<CloudQueueMessage> queue_messages = storage.GetDigestMessages(32);
    if (queue_messages != null)
    {
        // Deserialize each queue message into a device reading.
        IEnumerable<IStorageDeviceReading> messages =
            queue_messages.Select((q) => JsonConvert
                .DeserializeObject<IStorageDeviceReading>(q.AsString));

        // Sort by reading time, then group by device; GroupBy keeps the
        // sort order within each group.
        IEnumerable<IGrouping<Guid, IStorageDeviceReading>> message_groups = messages
            .OrderBy(m => m.Time)
            .GroupBy(m => m.DeviceId);

        // Process the device groups in parallel; readings within a group
        // are written sequentially.
        Parallel.ForEach(message_groups, message_group =>
        {
            Log.Partition();
            foreach (IStorageDeviceReading m in message_group)
            {
                storage.AddDigestDeviceReading(m);
            }
        });
    }

LINQ and the TPL let me do this complex operation in a very compact, readable way.
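To try the sort, group and parallel-write pattern without Azure, the following sketch runs it against an in-memory store. It is only an illustration under stated assumptions: SketchReading and DigestSketch are hypothetical names, and a ConcurrentDictionary stands in for table storage.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical in-memory reading; not the real IStorageDeviceReading.
public class SketchReading
{
    public Guid DeviceId { get; set; }
    public DateTime Time { get; set; }
    public double Value { get; set; }
}

public static class DigestSketch
{
    // Returns, per device, the readings in the order they were "stored".
    public static IDictionary<Guid, List<SketchReading>> Ingest(IEnumerable<SketchReading> batch)
    {
        var store = new ConcurrentDictionary<Guid, List<SketchReading>>();

        // Sort once by time; GroupBy keeps that order inside each group.
        var groups = batch.OrderBy(r => r.Time).GroupBy(r => r.DeviceId);

        // One device per parallel iteration, so each list has a single writer.
        Parallel.ForEach(groups, group =>
        {
            List<SketchReading> stored = store.GetOrAdd(group.Key, _ => new List<SketchReading>());
            foreach (SketchReading r in group)
            {
                stored.Add(r); // stands in for storage.AddDigestDeviceReading(r)
            }
        });

        return store;
    }
}
```

Because each device id appears in exactly one group, the per-device lists never see concurrent writers, which is the same property that makes device id a safe partitioning scheme.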

C# has so many language features which, combined with the .NET class library and runtime, make your code much shorter, more readable and easier to understand. Yet you still don't have to wrangle with the vagueness of duck-typed languages like JavaScript. Cloud-scale IoT apps that have to work securely with huge amounts of data of disparate types can benefit a lot from .NET and statically typed languages like C#.

2. Visual Studio 2013

Visual Studio 2013 Community Edition is free! It's hard to find an IDE, free or otherwise, that you could say is better than Visual Studio. Everything you need to build end-to-end cloud apps, from testing to version control (you can sync directly to GitHub from VS) to server management to HTML and JS editing to support for open-source languages like Python, is available in one place. Plus it has the terrific NuGet package manager for managing 3rd-party libraries like jQuery, and there are tons of community-made extensions like the xUnit test runner that integrate with Visual Studio.

Visual Studio 2013 Community comes with a full suite of tools for managing your Azure services and developing and deploying your cloud apps. You can publish your ASP.NET projects directly to an Azure Website, or your web apps and class libraries as an Azure Cloud Service. You can directly view and edit Azure Storage tables and SQL databases. The ability to view the contents of my Azure tables and queues inside VS was a huge timesaver and makes developing and deploying cloud apps as frictionless as possible. The fact that I can also create C and Python projects in the same IDE when the time comes for testing my IoT API with different clients and networking libraries is also pretty awesome.

3. Performance

Node.js gets a lot of props for its asynchronous design and it's really popular among web developers these days. But from the little I know of it, I don't see anything that Node does that can't be done with async methods in C#. Compiler support for asynchronous calls, lambda expressions and type inference is already part of C#, along with the TPL class library. JavaScript is a nice lightweight language for scripting documents and wiring together UI components, but I'd balk at using it for high-volume network applications. Dynamic typing when building apps that use a lot of different components and libraries doesn't seem like a good idea to me.

.NET applications are JIT-compiled to native code, so for a long-running process I think .NET code would have a clear advantage over interpreted languages. I plan to write a set of benchmarks for Overlord and Node.js IoT platforms like MeshBlu and see how they compare, so stay tuned.

4. Documentation

.NET has been around for a long time and its commercial enterprise roots mean that documentation has always been a priority. I haven't come across the same philosophy when working in Python, for instance. Open-source is good at a lot of things but documentation for new libraries isn't one of them. When building Overlord I was never stuck for long: an article on MSDN or StackOverflow always showed me the error of my ways, even when I was using a new version of a library like the Azure Storage SDK.

Apart from the .NET and Azure documentation, Microsoft has also released a lot of architecture guidance, patterns, code samples and full examples for building full-stack cloud applications. The patterns and practices team has written Cloud Design Patterns and the free ebook Building Cloud Apps with Microsoft Azure. Plus there are a ton of code examples and blog articles on everything Azure, ASP.NET, Entity Framework and so on by Microsoft writers and bloggers as well as independent authors. People have been building distributed multi-tier apps with .NET for a long time, so guidance, solutions and patterns for whatever type of cloud app you want to build aren't hard to find.

Design

The design of Overlord follows the usual core principles of promoting modularity, pluggability and testability. Overlord is broken out into several different C# projects:

Overlord.Security: This is a C# library that implements the claims-based identity and authorization model for Overlord.

Overlord.Storage: This is a C# library that provides the interfaces for implementing storage of Overlord users, devices, sensors, readings, channels, alerts, and messages. It ships with one storage provider for Azure Table Storage. Other storage providers can be plugged in easily using the IStorage* interfaces.

Overlord.Digest.Azure: This is a C# library which does all the incoming sensor data processing and the queue processing for handling outgoing messages and alerts. When sensor data is pushed to Overlord a queue message is generated which the Digest reads, checks for any channels the sensor belongs to and any alerts set on the sensor, and then writes the data to the correct data tables. It is currently written as an Azure Cloud Service Worker Role.

Overlord.Core: This is a C# library that implements the core REST API actions: CRUD for users, devices, sensors, readings, channels, alerts, and messages. Adding devices and sensors, storing sensor readings, streaming data channels, and sending and receiving alerts and messages are all done by actions exposed by the core API. The core API doesn't care about what network protocol is used to access it, so supporting protocols other than TCP/IP and HTTP, such as UDP and IoT protocols like CoAP, can be done very easily.

Overlord.Http: This is an ASP.NET web project that provides access to the Overlord core API using HTTP. It uses the ASP.NET Web API 2 framework.

Overlord.Testing: This is a C# library that contains all the unit and other tests for each layer of the application. It relies on the xUnit testing library.

Having a separate project for each of these layers lets me test each part of the app very quickly. If I want to test a storage method there is no need to mock Web API controller objects, or for the test runner to load the ASP.NET MVC libraries during a test run. Future plans are to have a dashboard-type UI where users can monitor their devices and graph the incoming sensor data.

Azure Services

Azure Table Storage: Overlord's first storage provider uses Table Storage, a low-cost, highly scalable data storage platform ideal for storing and retrieving large amounts of structured data that is not inherently relational.

Azure Queue Storage: Overlord uses storage queues for low-cost highly-scalable asynchronous data processing and messaging between devices. Incoming device readings are serialized to queue messages and then ingested FIFO sequentially by the Digest cloud service worker role. Overlord delivers messages to devices on demand or when sensor alerts are triggered by the digest.

Azure Cloud Services: Overlord uses a worker role in a Cloud Service to asynchronously process incoming sensor data and publish this data FIFO as channels that devices can read and query as streams of time-ordered data.

Azure Websites: The Overlord HTTP server is an ASP.NET web application that runs on Azure Websites. I can optionally also deploy it as a Cloud Service web role.

Microsoft Azure has a ton of technology like Event Hubs and Service Bus you can use for building apps that capture telemetry data or do messaging. I chose to write my own storage and messaging layer because I wanted to learn how to design and code cloud-scale systems like these. The goal of Overlord is to implement a REST API that can be used by resource constrained devices, without the heavy-lifting of unpacking OData messages say.

Storage

Storage is the biggest layer in Overlord and where I've spent the most time. I basically chose to create my own storage and messaging layer instead of using something like an Azure SQL database or Azure Event Hubs or Service Bus. It took a lot of work but I had a lot of fun doing it and I learned a lot of stuff and how to think about asynchronous operations and concurrency and data parallelism.

Overlord storage is designed around a scalable key-value store. Azure Table Storage is the first storage provider written, but other types of key-value data stores like Redis can be used by implementing the IStorage interface. Devices call the API to send device readings. A device reading is a set of one or more data pairs consisting of a sensor name and a sensor value. Sensor names must begin with S, I, N, D, L or B and end with a number, e.g. N1, S25 and B43 are all valid sensor names. Each prefix letter corresponds to a sensor type: String, Integer, Number (double), DateTime, Logical (bool) and Binary (byte[]).
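The prefix-to-type rule can be captured in a small lookup. The sketch below is an assumption-laden illustration, not the Overlord implementation: SensorTypeSketch and TryGetSensorType are hypothetical names, and mapping Integer to int (rather than long) is a guess.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class SensorTypeSketch
{
    // Prefix letter -> CLR type, following the list in the paragraph above.
    private static readonly Dictionary<char, Type> TypesByPrefix = new Dictionary<char, Type>
    {
        { 'S', typeof(string) },
        { 'I', typeof(int) },      // assumption: Integer means a 32-bit int
        { 'N', typeof(double) },
        { 'D', typeof(DateTime) },
        { 'L', typeof(bool) },
        { 'B', typeof(byte[]) }
    };

    // Returns true and the sensor's value type when the name is well formed.
    public static bool TryGetSensorType(string name, out Type type)
    {
        type = null;
        if (string.IsNullOrEmpty(name) || name.Length < 2) return false;

        // The suffix must be plain digits; NumberStyles.None rejects signs and spaces.
        int index;
        if (!int.TryParse(name.Substring(1), NumberStyles.None, CultureInfo.InvariantCulture, out index))
        {
            return false;
        }
        return TypesByPrefix.TryGetValue(name[0], out type);
    }
}
```

A caller could use the returned type to decide how to parse the value half of each name-value pair before writing it to storage.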

Device readings are sent to the API asynchronously and in high volume. The HTTP API server is an ASP.NET MVC web application running on Azure Websites (or Azure Cloud Services) and can scale to accommodate any number of devices and readings coming in from all over the world. Devices and users also call the API to request data channels. A channel is a time-ordered series of sensor readings. Channels are how Overlord publishes sensor data and are what users and devices subscribe to. A channel can consist of sensors from many different devices. For example, we can create a channel that includes all temperature sensors on devices located in Trinidad.

In order to accomplish this type of data ingestion and publishing, which is typical of cloud-apps, we have to carefully consider our data flow.

Data Flow

The basic data flow is:

- Devices call the REST API AddReading method to add sensor data formatted as key-value pairs.

- The API layer passes the device readings to the Storage layer.

- The Storage layer serializes the device readings to a queue message and places a message on the Digest queue.

- The Digest process reads up to 32 readings from the queue of device readings FIFO, groups them according to device id and then in parallel calls the storage.IngestSensorValues method on each group of readings.

- IngestSensorValues analyzes each sensor key-value pair for each device reading and writes the sensor data to the device channel tables. Each device has a device channel table which consists of all sensor readings for that device ordered according to the time of the readings.

- IngestSensorValues analyzes each sensor value and checks whether it falls within the range configured for any alert on that sensor.

- If a sensor value falls within the range of an alert set on that sensor, IngestSensorValues adds a message to the message queue for that device.

- IngestSensorValues checks if each sensor belongs to any other channels and writes the sensor value data to those channel tables.

- Devices or users can then query the channel data through the REST API.
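The alert step of this flow can be sketched as a simple range check. This is only an illustration: SketchAlert and AlertSketch are hypothetical names modelled on the MinValue, MaxValue and Message columns of the Alert entity described later, and treating the range as exclusive at both ends is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical alert shape; not the Overlord storage entity.
public class SketchAlert
{
    public double MinValue { get; set; }
    public double MaxValue { get; set; }
    public string Message { get; set; }
}

public static class AlertSketch
{
    // Messages of every alert whose open range (MinValue, MaxValue) contains the value.
    public static List<string> TriggeredMessages(IEnumerable<SketchAlert> alerts, double value)
    {
        return alerts
            .Where(a => value > a.MinValue && value < a.MaxValue)
            .Select(a => a.Message)
            .ToList();
    }
}
```

In the flow above, each returned message would then be placed on the message queue of the device that owns the sensor.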

Data parallelism for Cloud IoT apps

The following are some lessons for doing data operations in cloud apps that I've learned both from the design of Azure Storage and from the advice given by the Microsoft patterns and practices team and other places.

- When doing network or disk I/O it is way faster to write and read or send and receive as much data as you can in one operation. You should try to utilize as much disk and network bandwidth as you have for single operations.

- Make all your API calls asynchronous and use queues to sequentially process incoming asynchronous data FIFO.

- Partition your high-volume data into partitions (segments) that can be safely written concurrently and can be read in parallel. In Overlord sensor readings are the high-volume data entities, and device ids and sensor names form a natural partitioning scheme. It will never be the case that one device needs to write sensor data that belongs to another device, nor that one sensor on a device needs to write sensor data belonging to another sensor, nor that we need to wait to fetch sensor data for one or several devices sequentially.

- Parallelize your I/O to execute concurrently on your partitioned data in one operation instead of serially.

- Use the Task Parallel Library and its patterns for doing parallel operations, and avoid managing your own multithreading at a low level.

- In Azure Table Storage, try as much as you can to query on exact matches for PartitionKey and RowKey. This is the fastest type of read operation you can do with Table Storage and is much faster than range queries on the two keys or, worse yet, queries on property values.

Storing time series data in Cloud IoT apps

I use the approach that the Semantic Logging Application Block and the Windows Azure Diagnostics team took for storing high-volume time series data in Azure Table Storage. For my sensor data I take the PartitionKey for each row as the time of the reading in UTC ticks, rounded to the nearest minute. I then store the exact time of the reading either as the RowKey or as one of the row properties, depending on what type of query I plan to do on the data. This lets me quickly query the sensor data concurrently across different time intervals while doing an exact match on the table PartitionKey and RowKey. For example, if I want to query all the data for 4 sensors on a device that was added in the past hour, I can execute a query in parallel that fetches each table row that corresponds to a minute in the past hour and has a row key that is the sensor name of one of the 4 sensors. I can in theory execute 60 concurrent Table Storage queries for this data.
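A minimal sketch of the key scheme follows. The names are hypothetical, and two details are assumptions: the time is truncated to the start of its minute (the article says rounded), and the key is zero-padded to 19 digits, which matches the sample keys in the Entities section (the exact time 0635631838834500000 falls in partition 0635631838800000000).

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class TimeSeriesKeySketch
{
    // Ticks truncated to the start of the minute, zero-padded to 19 digits
    // so that keys sort lexicographically in time order.
    public static string PartitionKey(DateTime utcTime)
    {
        long ticks = utcTime.Ticks - (utcTime.Ticks % TimeSpan.TicksPerMinute);
        return ticks.ToString("D19", CultureInfo.InvariantCulture);
    }

    // One partition key per minute in the half-open interval [fromUtc, toUtc).
    public static List<string> PartitionKeys(DateTime fromUtc, DateTime toUtc)
    {
        var keys = new List<string>();
        long start = fromUtc.Ticks - (fromUtc.Ticks % TimeSpan.TicksPerMinute);
        for (long t = start; t < toUtc.Ticks; t += TimeSpan.TicksPerMinute)
        {
            keys.Add(t.ToString("D19", CultureInfo.InvariantCulture));
        }
        return keys;
    }
}
```

For an hour that starts on a minute boundary, PartitionKeys yields 60 keys, one per exact-match query that could be issued in parallel.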

Entities

There are 8 entities in the Overlord data model: users, devices, sensors, readings, channels, channel items, alerts, and messages. Basically a user has multiple devices and can add, delete and update the properties of each device, like their name and location.

User Entity (IStorageUser)

| Id | Token | ETag | Name | Devices |
| --- | --- | --- | --- | --- |
| {4567-2134-4444-1234} | password | timestamp | User 01 | [{1234-6789-0004-1234},...] |

A device has multiple sensors. Devices register individual sensors with Overlord and push data to Overlord using sensor names.

Device Entity (IStorageDevice)

| Id | Token | ETag | User Id | Name | Description | Location | Sensors |
| --- | --- | --- | --- | --- | --- | --- | --- |
| {1234-6789-0004-1234} | password | timestamp | User 01 | Device 01 | Raspberry Pi 2 | Gasparillo, Trinidad W.I. | S1, S2, N1 |

Sensor Entity (IStorageSensor)

| Device Id | (Sensor) Name | Description | Units | Channels | Alerts |
| --- | --- | --- | --- | --- | --- |
| {1234-6789-0004-1234} | N1 | Digital temperature sensor | Degrees Celsius | [{5555-5555-6666-6666},...] | [{0<val<100},...] |

A reading is a set of sensor values together with the time the device sensors were read and sent to Overlord.

Device Reading Entity (IStorageDeviceReading)

| Time (to minute) | Time (exact) | Sensor Values |
| --- | --- | --- |
| 0635631838800000000 | 0635631838834500000 | {S1:"",S2:"",N1:30} |

A channel is a grouping of sensor data into a time-ordered series.

Channel Entity (IStorageChannel)

| Id | Name | SensorType | Description | Units | Alerts |
| --- | --- | --- | --- | --- | --- |
| {5555-5555-6666-6666} | Trinidad Weather | N0 | Weather sensors in Trinidad | Degrees Celsius | [{40<val<100},...] |

Channel Item Entity (IStorageChannel)

| Time (to minute) | Time (exact) | Device Id | (Sensor Values) |
| --- | --- | --- | --- |
| 0635631838800000000 | 0635631838834500000 | {1234-6789-0004-1234} | {N1:30} |

Alert Entity (IStorageChannel)

| SensorType | MinValue | MaxValue | Message |
| --- | --- | --- | --- |
| N0 | 40 | 100 | "It's too hot!" |
| N0 | -30 | 10 | "It's too cold!" |

Message Entity (IStorageChannel)

| Receiver Device Id | DataType | Value |
| --- | --- | --- |
| {1234-6789-0004-1234} | S0 | It's too hot! |

Entity data normalization or duplication?

In relational database design one of the fundamental virtues is normalizing your data tables: minimizing the duplication of data between related entities. This contributes both to the performance of your database and (most importantly) to the consistency of your data. But in a structured storage service like Azure Table Storage, normalization can be a hindrance. Duplication of data between related entities is perfectly fine and greatly simplifies querying.

For instance, my device channel data can also exist in other channel tables, instead of my being forced to access it in one place through a relation. This lets me tune the performance of my storage provider to whatever type of data I'm planning to store.

Generally speaking, when designing cloud IoT apps you want to carefully consider whether using a predefined storage layer, like a relational database, is better than designing your storage layer around a basic key-value store like Redis or Azure Table Storage. Designing your storage layer around your data, and not the other way around, may amount to the same amount of work and yields a lot of benefits. With Overlord I chose the latter, and this gives me the most flexibility for maximizing security, consistency, and performance. I can control how I store and retrieve time series data like sensor readings and also discrete entities like users and devices.

Logging

Logging is a critical part of cloud-scale applications because of the high volume of data and operations that your cloud-app must process and perform. A good logging component that gives you the most flexibility and expressibility in how you write and analyze log events lets you monitor every aspect of your cloud app, and trace the source of any app failures due to coding bugs or scaling or performance problems.

Overlord uses the Semantic Logging Application Block from the Microsoft patterns and practices library. Semantic logging is where you use code instead of text strings to structure your event logs; it provides a huge amount of consistency and power in how log events can be collected and analyzed. The best way to explain semantic logging is to look at it in action:

    [EventSource(Name = "AzureStorage")]
    public class AzureStorageEventSource : EventSource
    {
        public class Keywords
        {
            public const EventKeywords Configuration = (EventKeywords)1;
            public const EventKeywords Diagnostic = (EventKeywords)2;
            public const EventKeywords Perf = (EventKeywords)4;
            public const EventKeywords Table = (EventKeywords)8;
        }

        public class Tasks
        {
            public const EventTask Configure = (EventTask)1;
            public const EventTask Connect = (EventTask)2;
            public const EventTask WriteTable = (EventTask)4;
            public const EventTask ReadTable = (EventTask)8;
        }

        ...
    }

Here we define an event source which contains all the events we want to be logged for our AzureStorage component. We categorize events by task and by keywords. Keywords are powers of 2 so they can be ORed together.
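To make the bit-flag point concrete, here is a small sketch. KeywordSketch and HasAll are hypothetical names; EventKeywords is the real flags enum from System.Diagnostics.Tracing, and the two constants reuse the values from the Keywords class above.

```csharp
using System;
using System.Diagnostics.Tracing;

public static class KeywordSketch
{
    // Same values as Keywords.Diagnostic and Keywords.Table above.
    public const EventKeywords Diagnostic = (EventKeywords)2;
    public const EventKeywords Table = (EventKeywords)8;

    // True when every bit of 'wanted' is also set in 'actual'.
    public static bool HasAll(EventKeywords actual, EventKeywords wanted)
    {
        return (actual & wanted) == wanted;
    }
}
```

Because each keyword occupies its own bit, `KeywordSketch.Diagnostic | KeywordSketch.Table` has the numeric value 10, and a listener can test for either keyword independently with HasAll.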

We define methods on our EventSource that will be used to log messages. This is the key part of semantic logging: using methods that represent exactly what the app is doing.

    [Event(5, Message = "FAILURE: Write Azure Table Storage: {0}\nException: {1}",
        Task = Tasks.WriteTable,
        Level = EventLevel.Error,
        Keywords = Keywords.Diagnostic | Keywords.Table)]
    internal void WriteTableFailure(string message, string exception)
    {
        this.WriteEvent(5, message, exception);
    }

    [Event(6, Message = "SUCCESS: Write Azure Table Storage: {0}",
        Task = Tasks.WriteTable,
        Level = EventLevel.Informational,
        Keywords = Keywords.Diagnostic | Keywords.Table)]
    internal void WriteTableSuccess(string message)
    {
        this.WriteEvent(6, message);
    }

We now have a unified way to log reads and writes to Azure Table Storage that ensures all the relevant information about the operation is stored consistently. Each layer in Overlord can define its own event source and log events in the same way as the storage layer. I also use extension methods to easily capture exceptions without extra typing:

public static class AzureStorageEventExtensions

{

...

public static void ReadTableFailure(this AzureStorageEventSource ev,

string message, Exception e)

{

ev.ReadTableFailure(message, e.ToString());

}

}

So I can say:

catch (Exception e)

{

Log.WriteTableFailure(string.Format("Failed to add device entity: {0}, Id: {1}, Token {2}.",

device.Name, device.Id.ToUrn(), device.Token), e);

You can log to different event sinks, which is just a fancy name for log destinations. SLAB is flexible enough to log different kinds of events to different locations. I chose to log to plain-text files as it's the easiest option. In my log file there are entries like these:

EventId : 6, Level : Informational, Message : SUCCESS: Write Azure Table Storage: Added device reading entity: Partition: 0635631739800000000,

RowKey: 9ac31883-f0e3-4666-a05f-6add31beb8f4_0635631740429485024, Sensor values: S1:9KR8hJMpk4s34VndWn16Z6GIMyvIAJWk0ZUkbNOe3vn,D1:3/28/2015 1:57:13 PM ,

An entry like this is logged whenever I write a sensor value. When I'm ready for a more scalable logging component I can use the Azure Table Storage sink for my log events.
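To show what a sink does without pulling in SLAB, here is a minimal sketch that uses only the EventSource and EventListener classes from System.Diagnostics.Tracing. DemoStorageEventSource, InMemoryListener, and SinkDemo are hypothetical names for this illustration; SLAB's own sinks (flat file, Azure Table Storage) are configured differently.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics.Tracing;

// Hypothetical, cut-down event source for illustration only.
[EventSource(Name = "DemoStorage")]
public sealed class DemoStorageEventSource : EventSource
{
    public static readonly DemoStorageEventSource Log = new DemoStorageEventSource();

    [Event(1, Message = "SUCCESS: Write table: {0}", Level = EventLevel.Informational)]
    public void WriteTableSuccess(string message)
    {
        WriteEvent(1, message);
    }

    [Event(2, Message = "FAILURE: Write table: {0}", Level = EventLevel.Error)]
    public void WriteTableFailure(string message)
    {
        WriteEvent(2, message);
    }
}

// A "sink" here is just a listener that decides where each event ends up.
public sealed class InMemoryListener : EventListener
{
    public readonly List<string> Lines = new List<string>();

    protected override void OnEventWritten(EventWrittenEventArgs e)
    {
        // Ignore anything that does not come from the demo source.
        if (e.EventSource.Name != "DemoStorage") return;

        // Fill the {0} placeholder of the event message with the first payload item.
        string text = string.Format(e.Message, e.Payload[0]);
        Lines.Add(string.Format("EventId : {0}, Level : {1}, Message : {2}",
            e.EventId, e.Level, text));
    }
}

public static class SinkDemo
{
    public static void Main()
    {
        using (var listener = new InMemoryListener())
        {
            // Only Error and more severe events are delivered to this listener.
            listener.EnableEvents(DemoStorageEventSource.Log, EventLevel.Error);

            DemoStorageEventSource.Log.WriteTableSuccess("added reading"); // filtered out
            DemoStorageEventSource.Log.WriteTableFailure("timeout");       // recorded

            foreach (string line in listener.Lines) Console.WriteLine(line);
        }
    }
}
```

Because the level filter is applied when the listener is enabled, the same event source can feed a quiet error-only sink and a verbose diagnostic sink at the same time.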

Security

This is another layer that took a lot of time to complete. For a system designed to be used by potentially millions of devices and users, security is a paramount concern. We have to ensure that each user and device is isolated in a single security context that cannot access any data it is not authorized to. We have to minimize the possibility of either unintentional or intentional data corruption or leakage.

Overlord uses a claims-based identity and authorization model, and role-based access checks, using classes from the Windows Identity Foundation (WIF) and the .NET System.Security.Permissions namespace. A good detailed look at the WIF Claims Programming Model is here. WIF provides a unified way to work with authentication and authorization providers, from Windows user accounts to Active Directory to cloud identity providers like Windows Live. You can plug any kind of authentication scheme into WIF and use attributes like ClaimsPrincipalPermission to declaratively assert cross-cutting security aspects of your app.

Cloud apps are usually composed of a number of components or layers that are isolated from one another in a logical sense or in a networking sense. For instance, the Overlord storage library is logically separate from the rest of the application and stores data on networked servers separate from where the Overlord HTTP listener is running.

A claim represents a security assertion that one layer of Overlord declares and other layers trust as being true. When a device or user is successfully authenticated, the Security layer sets claims about the current device's or user's identity and role, which other layers like Storage and Core trust. When a storage or API action is required, the current layer must assert that this action was requested so that lower layers like Storage can trust the action was intentional.

So for instance, in our storage layer the DeleteUser method is declared as

[PrincipalPermission(SecurityAction.Demand, Role = UserRole.Administrator)]

[ClaimsPrincipalPermission(SecurityAction.Demand, Resource = Resource.Storage,

Operation = StorageAction.DeleteUser)]

public bool DeleteUser(IStorageUser user)

In our storage layer we require, for each CRUD action, that the thread principal belong to a specific role. So our IStorage.DeleteUser method requires the identity of the principal attached to our thread to belong to the "Administrator" user role. But we also require that when performing a storage action, the action was legitimately requested from a higher layer. We do this with a claim whose type is the resource (Storage) and whose value is the action, StorageAction.DeleteUser.

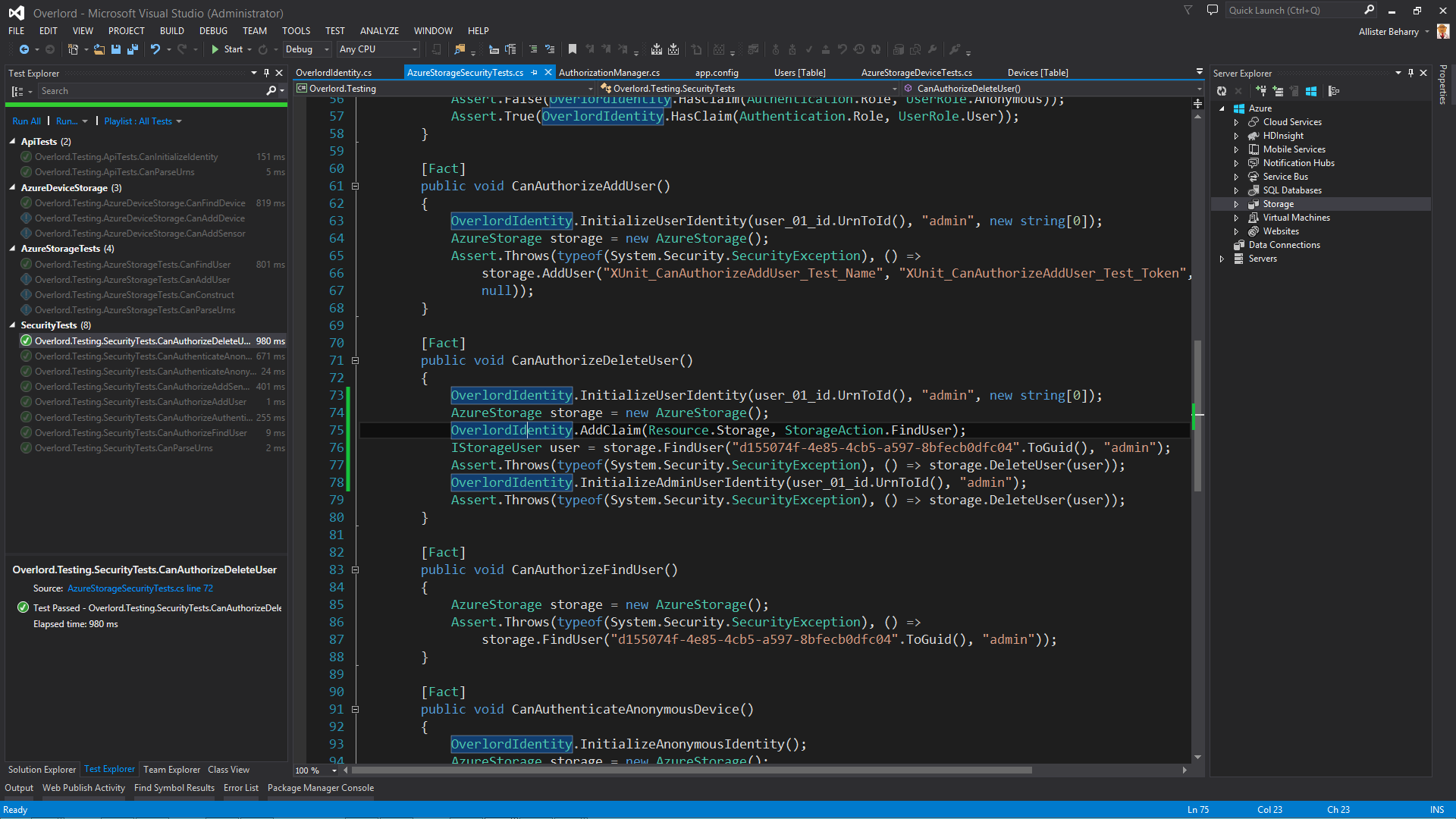

Any part of Overlord that needs to delete a user must add this claim to the current thread identity. If the claim is not there, any call to IStorage.DeleteUser throws a security exception. This minimizes the possibility of Overlord unintentionally (or intentionally, at the hands of a malicious attacker) performing a storage action that a layer didn't explicitly say it wants. Thus, tests like the following are possible:

[Fact]

public void CanAuthorizeDeleteUser()

{

OverlordIdentity.InitializeUserIdentity(user_01_id.UrnToId(), "admin", new string[0]);

AzureStorage storage = new AzureStorage();

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.FindUser);

IStorageUser user = storage.FindUser("d155074f-4e85-4cb5-a597-8bfecb0dfc04".ToGuid(), "admin");

//Security Exception thrown here:

Assert.Throws(typeof(System.Security.SecurityException), () => storage.DeleteUser(user));

//Initialize an admin user identity:

OverlordIdentity.InitializeAdminUserIdentity(user_01_id.UrnToId(), "admin");

//But exception is still thrown here because it doesn't have the correct StorageAction claim

Assert.Throws(typeof(System.Security.SecurityException), () => storage.DeleteUser(user));

}

because a security exception is thrown when any method in the storage layer is accessed without the thread identity being in the required role. But even if the identity does have the required role it still needs the correct StorageAction claim to perform the operation.

When our core API library is loaded, our security layer kicks things off by initializing the identity of the current thread principal. We take the current identity, which is probably a Windows identity, and make a new ClaimsIdentity out of it:

private static void InitalizeIdentity()

{

//Get the current user identity as a stock IIdentity

IIdentity current_user_identity = Thread.CurrentPrincipal.Identity;

//Create a new ClaimsPrincipal using our stock identity

ClaimsPrincipal principal = new ClaimsPrincipal(new ClaimsIdentity(current_user_identity,

null, current_user_identity.AuthenticationType,

ClaimsIdentity.DefaultNameClaimType, ClaimTypes.Authentication.Role));

//Assign to our current thread principal

Thread.CurrentPrincipal = principal;

ClaimsIdentity new_user_identity = (ClaimsIdentity)Thread.CurrentPrincipal.Identity;

}

ClaimTypes.Authentication.Role is actually a type we own that we define with a custom name, to keep it from clashing with any standard or Windows roles:

namespace Overlord.Security.ClaimTypes

{

public class Authentication

{

public const string Role = "urn:Overlord/Identity/Claims/Roles";

What this means is we can add, delete, and check application roles without messing with any existing roles the identity may pick up from elsewhere.

With our shiny new identity we then assign this as the current thread principal identity.

ClaimsIdentity new_user_identity = (ClaimsIdentity)Thread.CurrentPrincipal.Identity;

Our new identity object will go out of scope as soon as our Overlord libraries are unloaded and the thread principal identity will revert to whatever it was before it started executing our libraries.

In our application configuration we declare a custom authorization manager class:

<system.identityModel>

<identityConfiguration>

<claimsAuthorizationManager type="Overlord.Security.AuthorizationManager,Overlord.Security" />

</identityConfiguration>

</system.identityModel>

Then when calls are made to protected methods like DeleteUser, the authorization manager can decide how to authorize the call:

public override bool CheckAccess(AuthorizationContext context)

{

return OverlordIdentity.HasClaim((string) context.Resource.First().Value,

(string) context.Action.First().Value);

}

We check for a claim type which is named for the resource being accessed (in the above case, Storage) and which has as its value the name of the resource action being performed (in the above case, DeleteUser). So we can be pretty sure that any call to the storage layer was invoked by a method that intentionally added the storage action to the list of claims the identity currently has. Notice that at the end of our DeleteUser method we do:

OverlordIdentity.DeleteClaim(Resource.Storage, StorageAction.DeleteUser);

This deletes the claim from the identity and ensures that any further calls to the storage layer must also explicitly say they are invoking the DeleteUser action.
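The add, check, delete cycle can be sketched with the standard System.Security.Claims types. This is an illustration under stated assumptions, not Overlord's code: SingleUseClaimDemo, the string constants, and the stand-in DeleteUser method are hypothetical, the check is done inline rather than through a ClaimsAuthorizationManager, and removing the claim in a finally block is a choice made for this sketch.

```csharp
using System;
using System.Security;
using System.Security.Claims;

public static class SingleUseClaimDemo
{
    // Hypothetical names: the claim type is the resource, the claim value is the action.
    private const string StorageResource = "Storage";
    private const string DeleteUserAction = "DeleteUser";

    // Stand-in for a protected storage method.
    public static bool DeleteUser(ClaimsIdentity identity, string userName)
    {
        if (!identity.HasClaim(StorageResource, DeleteUserAction))
        {
            throw new SecurityException("Missing claim Storage/DeleteUser.");
        }
        try
        {
            // ... the real delete would happen here ...
            return true;
        }
        finally
        {
            // Consume the claim so the next call has to assert its intent again.
            Claim used = identity.FindFirst(
                c => c.Type == StorageResource && c.Value == DeleteUserAction);
            identity.TryRemoveClaim(used);
        }
    }

    public static void Main()
    {
        var identity = new ClaimsIdentity("Demo");
        identity.AddClaim(new Claim(StorageResource, DeleteUserAction));

        // First call is authorized by the claim added above.
        Console.WriteLine(DeleteUser(identity, "alice"));

        // Second call fails: the claim was consumed by the first call.
        try
        {
            DeleteUser(identity, "bob");
        }
        catch (SecurityException)
        {
            Console.WriteLine("second call rejected");
        }
    }
}
```

The point of the pattern is that authorization is granted per call rather than per session, so a code path that never added the claim cannot reach the storage operation by accident.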

Using claims-based authorization allows the many components in a cloud application to safely communicate security contexts and authorization information. Overlord stores the currently authenticated user or device id as a claim on the thread principal's identity. Thus every time we perform an action, the information about the device or user requesting it is available to every bit of code executing on the same thread. This eliminates the need to repeatedly filter queries by the currently authenticated user or device id, and minimizes the possibility of accessing or modifying data that doesn't belong to the current user or device.

Testing

Tests are the foundation on which any app is built. Unit tests let me change a layer's implementation and confirm that its behavior stays the same. If a unit test fails, the layer is not behaving as it should.

Unit tests are invaluable when working with an SDK like the Azure Storage SDK, as they catch errors I make (like concurrently writing entities) before the component is integrated with the other layers. For example, consider the code below:

IStorageUser user = storage.FindUser(AzureStorageTests.user_02_id.UrnToGuid(), AzureStorageTests.user_02_token);

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.AddDevice);

IStorageDevice device_01 = storage.AddDevice(user,

AzureStorageTests.device_01_name, AzureStorageTests.device_01_token, null, AzureStorageTests.device_01_id);

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.AddDevice);

IStorageDevice device_02 = storage.AddDevice(user, AzureStorageTests.device_02_name, AzureStorageTests.device_02_token, null,

AzureStorageTests.device_02_id);

This innocuous bit of code actually throws a concurrency error with Azure Table Storage. Why? Because in my AddDevice method I say:

TableOperation update_user_operation = TableOperation.Merge(CreateUserTableEntity(user));

result = this.UsersTable.Execute(update_user_operation);

I update the user object when I add a device. But when I go to add a 2nd device, I'm still using the original user object. When I try to update the user a 2nd time, Azure Table Storage complains because I'm using a previous version of the user object. What I need to do in my AddDevice method is:

TableOperation update_user_operation = TableOperation.Merge(CreateUserTableEntity(user));

result = this.UsersTable.Execute(update_user_operation);

user.ETag = result.Etag;

This copies the updated ETag that Azure Table Storage sends back after the update operation onto the user object I am adding devices to.
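The failure mode is easier to see in isolation. The sketch below simulates ETag-based optimistic concurrency with an in-memory dictionary; it does not use the Azure Storage SDK, and Entity, InMemoryTable, and the "v1", "v2" tag format are all invented for the illustration.

```csharp
using System;
using System.Collections.Generic;

public class Entity
{
    public string Key;
    public string Value;
    public string ETag;
}

// Toy table that, like Table Storage, rejects writes made with a stale ETag.
public class InMemoryTable
{
    private readonly Dictionary<string, Entity> rows = new Dictionary<string, Entity>();
    private int version = 0;

    public string Insert(string key, string value)
    {
        string etag = "v" + (++version);
        rows[key] = new Entity { Key = key, Value = value, ETag = etag };
        return etag;
    }

    // Succeeds only when the caller's ETag matches the stored one; returns the new ETag.
    public string Merge(Entity e)
    {
        Entity stored = rows[e.Key];
        if (stored.ETag != e.ETag)
        {
            throw new InvalidOperationException("Precondition failed: stale ETag " + e.ETag);
        }
        stored.Value = e.Value;
        stored.ETag = "v" + (++version);
        return stored.ETag;
    }
}

public static class ETagDemo
{
    public static void Main()
    {
        var table = new InMemoryTable();
        var user = new Entity { Key = "user_01", Value = "0 devices" };
        user.ETag = table.Insert(user.Key, user.Value);   // local ETag is v1

        user.Value = "1 device";
        string newTag = table.Merge(user);                // store is now v2, local copy still v1

        user.Value = "2 devices";
        try
        {
            table.Merge(user);                            // stale v1 against stored v2
        }
        catch (InvalidOperationException ex)
        {
            Console.WriteLine(ex.Message);
        }

        user.ETag = newTag;                               // the fix: keep the returned ETag
        user.ETag = table.Merge(user);                    // now succeeds
        Console.WriteLine(user.ETag);
    }
}
```

Copying the returned tag back onto the local object after every write is what keeps a long-lived object usable across several updates.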

I use xUnit for unit testing. xUnit is available as a NuGet package and has a GUI test runner that integrates into the Visual Studio IDE testing GUI.

Test Data

I use the following methods to generate test data. These two methods generate a random string and datetime:

public static Random rng = new Random();

/// <summary>

/// Generate a random string of characters. Original code by Dan Rigby:

/// http://stackoverflow.com/questions/1344221/how-can-i-generate-random-alphanumeric-strings-in-c

/// </summary>

/// <param name="length">Length of string to return.</param>

/// <returns></returns>

public static string GenerateRandomString(int length)

{

var chars = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";

var stringChars = new char[length];

for (int i = 0; i < stringChars.Length; i++)

{

stringChars[i] = chars[rng.Next(chars.Length)];

}

return new String(stringChars);

}

public static DateTime GenerateRandomTime(int? year, int? month, int? day, int? hour)

{

int y = year.HasValue ? year.Value : DateTime.Now.Year;

int m = month.HasValue ? month.Value : DateTime.Now.Month;

int d = day.HasValue ? day.Value : DateTime.Now.Day;

int h = hour.HasValue ? hour.Value : rng.Next(24);

return new DateTime(y, m, d, h, rng.Next(60), rng.Next(60));

}

Sensor names are strings starting with S, I, N, L, D, or B followed by a number. The letter designates the sensor value type: string, integer, number (double), logical (true/false), date-time, or byte array. This method generates a dictionary full of random valid sensor names with the correct type of values.

public static IDictionary<string, object> GenerateRandomSensorData(int num_sensors)

{

string[] sensors = { "S", "I", "N", "L", "D", "B" };

IDictionary<string, object> sensor_values = new Dictionary<string, object>();

for (int i = 1; i <= num_sensors; i++)

{

string sensor_name = sensors[rng.Next(sensors.Length)] + rng.Next(num_sensors).ToString();

if (sensor_values.Keys.Contains(sensor_name)) continue;

if (sensor_name.ToSensorType() == typeof(string))

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, GenerateRandomString(20)));

else if (sensor_name.ToSensorType() == typeof(DateTime))

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, GenerateRandomTime(null, null,

null, null)));

else if (sensor_name.ToSensorType() == typeof(int))

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, rng.Next(5, 1000)));

else if (sensor_name.ToSensorType() == typeof(double))

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, rng.NextDouble()));

else if (sensor_name.ToSensorType() == typeof(bool))

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, rng.NextDouble() > 0.5));

else

{

byte[] b = new byte[100];

rng.NextBytes(b);

sensor_values.Add(new KeyValuePair<string, object>(sensor_name, b));

}

}

return sensor_values;

}
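The ToSensorType extension called above is not shown in this article. A minimal sketch of what such a mapping could look like, assuming the letter-to-type convention just described, is below; SensorNameExtensions and the exact validation rules are assumptions and may differ from the real Overlord code.

```csharp
using System;
using System.Globalization;

public static class SensorNameExtensions
{
    // Hypothetical mapping from a sensor name such as "S1" or "D12" to its value type.
    public static Type ToSensorType(this string sensorName)
    {
        if (string.IsNullOrEmpty(sensorName) || sensorName.Length < 2)
        {
            throw new ArgumentException("Sensor name must be a letter followed by a number.");
        }

        // The part after the type letter must consist of digits only.
        int index;
        if (!int.TryParse(sensorName.Substring(1), NumberStyles.None,
                CultureInfo.InvariantCulture, out index))
        {
            throw new ArgumentException("Sensor name must end in a number: " + sensorName);
        }

        switch (sensorName[0])
        {
            case 'S': return typeof(string);
            case 'I': return typeof(int);
            case 'N': return typeof(double);
            case 'L': return typeof(bool);
            case 'D': return typeof(DateTime);
            case 'B': return typeof(byte[]);
            default:
                throw new ArgumentException("Unknown sensor type letter: " + sensorName[0]);
        }
    }

    public static void Main()
    {
        Console.WriteLine("S1".ToSensorType() == typeof(string));    // True
        Console.WriteLine("D12".ToSensorType() == typeof(DateTime)); // True
        Console.WriteLine("B7".ToSensorType() == typeof(byte[]));    // True
    }
}
```

Keeping the type in the name means a device can register a new sensor without a separate schema call, at the cost of validating every name on the server.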

So I can quickly create a bunch of devices and then add a set of valid sensor data in my tests. As the code for my app grows, the number of tests I run also grows, especially for parallel data operations. I can use Parallel.For from the Task Parallel Library to simulate devices adding sensor data in parallel:

Parallel.For(0, devices.Count, d =>

{

storage.AuthenticateAnonymousDevice(devices[d].Id.ToUrn(), devices[d].Token);

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.AddDeviceReading);

storage.AddDeviceReading(TestData.GenerateRandomTime(null, null, null, null),

TestData.GenerateRandomSensorData(10));

IDictionary<string, object> sensor_values = TestData.GenerateRandomSensorData(10);

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.AddDeviceReading);

storage.AddDeviceReading(TestData.GenerateRandomTime(null, null, null, null),

TestData.GenerateRandomSensorData(sensor_values));

//Sleep for a random interval

Thread.Sleep(TestData.GenerateRandomInteger(0, 1000));

//Add another set of sensor data

OverlordIdentity.AddClaim(Resource.Storage, StorageAction.AddDeviceReading);

storage.AddDeviceReading(TestData.GenerateRandomTime(null, null, null, null),

TestData.GenerateRandomSensorData(sensor_values));

});
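One caveat when generating test data inside Parallel.For: instance members of System.Random are not guaranteed to be thread-safe, so a single static Random shared across worker threads can misbehave. A possible workaround, sketched here with a hypothetical ParallelTestData class (the GenerateRandomInteger shown is an illustrative version, not the one from Overlord's TestData), is to give each thread its own generator:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelTestData
{
    // Each thread gets its own Random, seeded differently, so no instance is shared.
    private static int seed = Environment.TickCount;
    private static readonly ThreadLocal<Random> rng =
        new ThreadLocal<Random>(() => new Random(Interlocked.Increment(ref seed)));

    // Returns a value in [min, max), like Random.Next(min, max).
    public static int GenerateRandomInteger(int min, int max)
    {
        return rng.Value.Next(min, max);
    }

    public static void Main()
    {
        var results = new ConcurrentBag<int>();

        // Many iterations drawing random numbers concurrently.
        Parallel.For(0, 1000, i => results.Add(GenerateRandomInteger(0, 1000)));

        Console.WriteLine(results.Count);
    }
}
```

ConcurrentBag is used for the results for the same reason: a plain List is not safe to add to from several threads at once.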

Points of Interest

LINQ

When comparing objects using LINQ filters or the .Contains() method on enumerable types, the comparison for class instances is by default done by reference. This means that even if every member of 2 objects is equal, the 2 objects still aren't considered equal by .NET. Thus, for example, Select operations in LINQ may not work as you expect if the two objects in your Where or filter conditions are not the same reference.

If you want to use other semantics when comparing objects, you'll need to implement IEqualityComparer for your objects. Because of the redundant way I store objects, I may sometimes pull out 2 different objects from Table Storage that I want to be able to consider as equal. Azure Table Storage already gives me 3 fields that uniquely identify an object: the PartitionKey, RowKey, and Timestamp. So for my IStorageDevice class what I say is

public class IStorageDeviceEq : IEqualityComparer<IStorageDevice>

{

public bool Equals(IStorageDevice d1, IStorageDevice d2)

{

if ((d1.Id == d2.Id) && (d1.Token == d2.Token) && (d1.ETag == d2.ETag))

{

return true;

}

else

{

return false;

}

}

public int GetHashCode(IStorageDevice d)

{

return (d.Id + d.Token + d.ETag).GetHashCode();

}

}

Id, Token, and ETag are the 3 fields that I want to use to uniquely identify an IStorageDevice object. So this works:

IStorageUser user = storage.FindUser(user_01_id.UrnToGuid(), "admin");

IStorageDevice device = storage.FindDevice(device_01_id.UrnToGuid(),

"XUnit_CanFindDevice_Test_Token");

Assert.Contains(device, user.Devices, new IStorageDeviceEq());

Even though the object that device references is not the same instance as the one in user.Devices, using the IStorageDeviceEq comparer allows the Contains() check to treat the two objects as equal.

Notice also that I can do this:

Assert.True(user.Devices.ContainsDevice(device));

because in my IListExtensions class I have an extension to IList that does device comparison using my custom IEqualityComparer. This shows how useful and powerful extension methods are, and how much they can contribute to code readability and correctness.
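The IListExtensions class itself is not listed here. A self-contained sketch of the idea follows, using a hypothetical Device class in place of IStorageDevice and invented names DeviceEq and DeviceListExtensions; the real extension may be written differently.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for IStorageDevice.
public class Device
{
    public Guid Id { get; set; }
    public string Token { get; set; }
    public string ETag { get; set; }
}

// Value-style equality over the three identifying fields.
public class DeviceEq : IEqualityComparer<Device>
{
    public bool Equals(Device d1, Device d2)
    {
        if (ReferenceEquals(d1, d2)) return true;
        if (d1 == null || d2 == null) return false;
        return d1.Id == d2.Id && d1.Token == d2.Token && d1.ETag == d2.ETag;
    }

    public int GetHashCode(Device d)
    {
        return (d.Id.ToString() + d.Token + d.ETag).GetHashCode();
    }
}

public static class DeviceListExtensions
{
    // Wraps the LINQ Contains overload that accepts a custom comparer.
    public static bool ContainsDevice(this IList<Device> devices, Device device)
    {
        return devices.Contains(device, new DeviceEq());
    }
}

public static class ComparerDemo
{
    public static void Main()
    {
        Guid id = Guid.NewGuid();
        var stored = new Device { Id = id, Token = "token", ETag = "etag-1" };
        var fetched = new Device { Id = id, Token = "token", ETag = "etag-1" };
        IList<Device> devices = new List<Device> { stored };

        Console.WriteLine(devices.Contains(fetched));        // False: reference comparison
        Console.WriteLine(devices.ContainsDevice(fetched));  // True: field comparison
    }
}
```

Hiding the comparer behind ContainsDevice keeps call sites short and makes it hard to forget the comparer and silently fall back to reference equality.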

Semantic Logging Application Block

Each of your custom EventSource classes is exposed through a single static instance (Log in my code), meaning there is only one copy of it shared among all classes in your AppDomain or process. You can initialize your event listeners once at your app entry point, and each library or component will log to the same listener. E.g., in my Digest worker role in the OnStartup() method I have:

EventTextFormatter formatter = new EventTextFormatter() { VerbosityThreshold = EventLevel.Error };

digest_event_log_listener.EnableEvents(Log, EventLevel.LogAlways, AzureDigestEventSource.Keywords.Perf

| AzureDigestEventSource.Keywords.Diagnostic);

digest_event_log_listener.LogToFlatFile("Overlord.Digest.Azure.log", formatter, true);

storage_event_log_listener.EnableEvents(AzureStorageEventSource.Log, EventLevel.LogAlways, AzureStorageEventSource.Keywords.Perf

| AzureStorageEventSource.Keywords.Diagnostic);

storage_event_log_listener.LogToFlatFile("Overlord.Storage.Azure.log", formatter, true);

which publishes all my AzureStorageEvent and AzureDigestEvents to flat-file sinks in the current process working directory.

If you use the FlatFile event sink, each process that is logging holds a lock on the file. This means that if you try to enable events twice in the same process you will get a System.IO exception due to the file lock.

For this reason you should ensure you initialize your event listeners only once, when your app libraries load. In my worker role's OnStop() method, when the libraries are unloaded, I do:

digest_event_log_listener.DisableEvents(AzureDigestEventSource.Log);

storage_event_log_listener.DisableEvents(AzureStorageEventSource.Log);

which flushes and closes any active sink.

History

Created first version for entry into Azure IoT contest.

Thursday March 26th 2015: Updated with more information on goals, storing and publishing sensor data, logging and testing.

Tuesday March 31st 2015: Added design images, Storage section. Fixed typos.