H264 Encoder DirectShow Filter in C# on AMD GPU

Make an H.264 video encoder DirectShow filter in C# using the AMF SDK API from AMD

Table of Contents

- Introduction

- Advanced Media Framework (AMF) SDK Wrapper

- Encoder Initialization

- DirectShow Implementation

- Receiving Samples

- Delivery Output Samples

- Stream Dump for the Debug

- AVC and Annex B

- Flushing

- Communication with the Application

- Filter Property Page

- Filter Overview

Introduction

Each GPU vendor provides its own SDK for using the capabilities of its hardware. In this article, I show how to implement an H.264 DirectShow video encoder filter using the Advanced Media Framework (AMF) SDK from AMD. The filter is implemented in C# and is based on the BaseClasses.NET library, which is described in my previous post (Pure .NET DirectShow Filters in C#).

Advanced Media Framework (AMF) SDK Wrapper

The Advanced Media Framework (AMF) SDK provides an interface for developers to perform multimedia processing on AMD GPU devices. To use the SDK in .NET, we need to wrap its objects and functions. The starting point of the wrapper is the initialization function, AMFInit(). It is exported from amfrt32.dll on the x86 platform and from amfrt64.dll on x64. Because a .NET assembly can be built with the “Any CPU” configuration, a good approach is to declare a wrapper of that function for each platform and call the one that matches the runtime target.

[DllImport("amfrt64.dll", CharSet = CharSet.Ansi,

CallingConvention = CallingConvention.Cdecl, EntryPoint = "AMFInit")]

private static extern AMF_RESULT AMFInit64(ulong version, out IntPtr ppFactory);

[DllImport("amfrt32.dll", CharSet = CharSet.Ansi,

CallingConvention = CallingConvention.Cdecl, EntryPoint = "AMFInit")]

private static extern AMF_RESULT AMFInit32(ulong version, out IntPtr ppFactory);

The two declarations have different function names and DLL names, but the same entry point value. Based on the target runtime platform, we call either AMFInit32() or AMFInit64(). To determine which runtime is in use, we check the IntPtr.Size value.

string sModule = "";

AMFInit_Fn AMFInit = null;

if (IntPtr.Size == 4)

{

sModule = "amfrt32.dll";

AMFInit = AMFInit32;

}

if (IntPtr.Size == 8)

{

sModule = "amfrt64.dll";

AMFInit = AMFInit64;

}

s_hDll = LoadLibrary(sModule);

if (s_hDll != IntPtr.Zero)

{

var result = AMFInit(AMF_FULL_VERSION, out m_hFactory);

}

To check whether the SDK runtime DLL is present on the system, we first try to load the module with the LoadLibrary function; if that succeeds, we proceed with the initialization. The initialization function returns the factory object pointer. As in the native AMF SDK, initialization follows a singleton pattern, implemented by the AMFRoot object and its GetFactory() static method.

AMFRoot

The AMFRoot class is the base for every imported AMF SDK object. A reference counter is incremented in the constructor and decremented in the Dispose() method of the IDisposable implementation. Once that counter reaches zero, which means that the last exported object has been released, the runtime DLL is unloaded.
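
The reference counting described above can be illustrated with a minimal sketch. The RuntimeRef class and its IsLoaded flag are hypothetical names used only for illustration; the flag stands in for the real LoadLibrary/FreeLibrary calls, and this is not the article's actual AMFRoot code.

```csharp
using System;

// Hypothetical stand-in for a ref-counted runtime owner (not the article's AMFRoot).
class RuntimeRef : IDisposable
{
    private static readonly object s_Lock = new object();
    private static int s_nRefs = 0;
    private bool m_bDisposed;

    // Stands in for "the runtime DLL is currently loaded".
    public static bool IsLoaded { get; private set; }

    public RuntimeRef()
    {
        lock (s_Lock)
        {
            // The first live object "loads" the runtime.
            if (s_nRefs++ == 0) IsLoaded = true;
        }
    }

    public void Dispose()
    {
        lock (s_Lock)
        {
            if (m_bDisposed) return;   // tolerate repeated Dispose() calls
            m_bDisposed = true;
            // The last released object "unloads" the runtime.
            if (--s_nRefs == 0) IsLoaded = false;
        }
    }
}
```

With two objects alive, disposing the first one leaves IsLoaded set; only disposing the second one clears it.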

AMFObject

The base class which represents the AMF SDK object is the AMFObject class. It embeds the IntPtr handle reference to the underlying native AMF object and contains equality operators. It is an intermediate class and has a protected constructor.

AMFInterface

All AMF wrapper objects have the same names as their counterparts in the AMF SDK. So the base AMF interface wrapper class is named AMFInterface, and it wraps the underlying AMF interface with the same name. This class controls object references and manages requests for other interfaces supported by the object. All other AMF interface wrappers inherit from this class. Exported functions of the underlying object are accessed directly by index from its vtable, in the same way that interface marshaling was implemented in the .NET wrapper for the DirectShow base classes. For that purpose, we have the GetProcDelegate() function, which returns a method delegate by index for the given interface pointer.

protected T GetProcDelegate<T>(int nIndex) where T : class

{

IntPtr pFunc = IntPtr.Zero;

lock (this)

{

if (m_hHandle == IntPtr.Zero) return null;

IntPtr pVtable = Marshal.ReadIntPtr(m_hHandle);

pFunc = Marshal.ReadIntPtr(pVtable, nIndex * IntPtr.Size);

}

return (Marshal.GetDelegateForFunctionPointer(pFunc, typeof(T))) as T;

}
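
To see the vtable lookup in isolation, here is a self-contained sketch that builds a fake object with a three-slot vtable in unmanaged memory and calls slot 2 through the same kind of lookup. The VtableDemo class, the AddFn delegate and the StdCall convention are assumptions made for this illustration only; no AMF runtime is involved.

```csharp
using System;
using System.Runtime.InteropServices;

static class VtableDemo
{
    // Hypothetical method signature; the first argument is the object pointer.
    [UnmanagedFunctionPointer(CallingConvention.StdCall)]
    public delegate int AddFn(IntPtr self, int a, int b);

    // Same idea as GetProcDelegate(): the object pointer refers to a vtable
    // pointer, and the vtable is an array of function pointers.
    public static T GetProcDelegate<T>(IntPtr pObject, int nIndex) where T : class
    {
        if (pObject == IntPtr.Zero) return null;
        IntPtr pVtable = Marshal.ReadIntPtr(pObject);
        IntPtr pFunc = Marshal.ReadIntPtr(pVtable, nIndex * IntPtr.Size);
        return Marshal.GetDelegateForFunctionPointer(pFunc, typeof(T)) as T;
    }

    public static int Run()
    {
        AddFn impl = (self, a, b) => a + b;
        IntPtr pVtable = Marshal.AllocHGlobal(IntPtr.Size * 3);
        IntPtr pObject = Marshal.AllocHGlobal(IntPtr.Size);
        try
        {
            // Slots 0 and 1 are unused here; slot 2 holds our function.
            Marshal.WriteIntPtr(pVtable, 0, IntPtr.Zero);
            Marshal.WriteIntPtr(pVtable, IntPtr.Size, IntPtr.Zero);
            Marshal.WriteIntPtr(pVtable, 2 * IntPtr.Size,
                Marshal.GetFunctionPointerForDelegate(impl));
            // The "object" is just a pointer to its vtable.
            Marshal.WriteIntPtr(pObject, pVtable);

            AddFn fn = GetProcDelegate<AddFn>(pObject, 2);
            int result = fn(pObject, 2, 3);
            GC.KeepAlive(impl);   // keep the delegate alive during the call
            return result;
        }
        finally
        {
            Marshal.FreeHGlobal(pObject);
            Marshal.FreeHGlobal(pVtable);
        }
    }
}
```

Calling VtableDemo.Run() adds 2 and 3 through the delegate fetched from slot 2.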

So, the QueryInterface() method of the AMFInterface class has the following implementation.

private AMF_RESULT QueryInterface(Guid iid, out IntPtr p)

{

AMF_RESULT result = AMF_RESULT.NO_INTERFACE;

p = IntPtr.Zero;

lock (this)

{

if (m_hHandle != IntPtr.Zero)

{

var Proc = GetProcDelegate<FNQueryInterface>(2);

result = Proc(m_hHandle, ref iid, out p);

}

}

return result;

}

And with actual .NET types.

public T QueryInterface<T>(Guid _guid) where T : AMFInterface, new()

{

IntPtr p;

if (AMF_RESULT.OK == QueryInterface(_guid, out p))

{

T pT = new T();

AMFInterface _interface = (AMFInterface)pT;

_interface.m_hHandle = p;

return pT;

}

return null;

}

AMFPropertyStorage

The main interface for accessing an object's property collection is AMFPropertyStorage. Through this interface, we apply encoder settings and configure properties of the encoder input surface. We also use it to retrieve parameters of the resulting buffer. AMFPropertyStorage is a core object, as this interface is the base for most of the AMF objects.

Internally, AMFPropertyStorage operates on a dedicated variant type structure. In the .NET implementation, we simplify that and hide the variant handling. The main methods, GetProperty() and SetProperty(), accept different value types. Depending on the type, they prepare the internal variant structure and call the base method of the actual AMFPropertyStorage interface.

Existing values can also be accessed through an indexed property.
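
The idea of hiding the variant behind typed overloads and an indexer can be sketched in purely managed code. The PropertyBag class below is a hypothetical stand-in that stores boxed values in a dictionary; the real wrapper marshals a native variant structure instead, and its exact method signatures are not shown in this article.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical managed stand-in for a variant-based property storage.
class PropertyBag
{
    private readonly Dictionary<string, object> m_Values =
        new Dictionary<string, object>();

    // One overload per supported value type; the caller never sees a variant.
    public void SetProperty(string name, long value)   { m_Values[name] = value; }
    public void SetProperty(string name, bool value)   { m_Values[name] = value; }
    public void SetProperty(string name, double value) { m_Values[name] = value; }
    public void SetProperty(string name, string value) { m_Values[name] = value; }

    // Returns false when the property is missing or has a different type.
    public bool GetProperty<T>(string name, out T value)
    {
        object o;
        if (m_Values.TryGetValue(name, out o) && o is T)
        {
            value = (T)o;
            return true;
        }
        value = default(T);
        return false;
    }

    // Indexed access to existing values; null when the property is not set.
    public object this[string name]
    {
        get
        {
            object o;
            return m_Values.TryGetValue(name, out o) ? o : null;
        }
    }
}
```

A caller would write bag.SetProperty("TargetBitrate", 5000000L) and read it back either with GetProperty<long>() or through bag["TargetBitrate"]; the property name here is only an example.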

AMFData

AMFData is the interface which represents an abstract GPU or CPU memory buffer. It inherits from the AMFPropertyStorage interface. From the DataType field, it is possible to determine which object underlies the current AMFData instance. It can be an AMFSurface or an AMFBuffer; those two are the ones necessary for our implementation.

AMFBuffer

The interface inherits from AMFData and provides access to unordered buffer data in GPU or CPU memory.

AMFPlane

The interface inherits from AMFInterface and provides access to a single plane of a surface. A pointer to the AMFPlane interface can be obtained using one of the GetPlane() method variants of the AMFSurface interface. Every AMFSurface object contains at least one plane. The number of planes in a surface is determined by the surface format.

AMFSurface

The interface abstracts a memory buffer containing a 2D image accessible by the GPU. The structure of the buffer depends on the surface type and format. Memory buffers associated with a surface may be stored in either GPU or host memory and consist of one or more planes accessible through the AMFPlane interface.

AMFContext

The interface for creating device-specific resources for AMF functionality. It abstracts the underlying platform-specific technologies behind a common API. In our implementation, we expose only the few methods that we need for encoder creation. The interface inherits from AMFPropertyStorage.

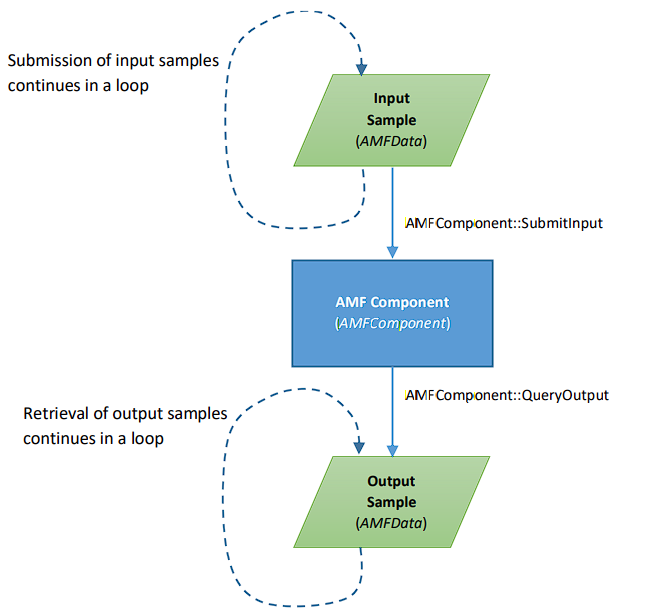

AMFComponent

The interface implements the AMF component functionality, which processes input data and provides output. It derives from AMFPropertyStorageEx, an extended property storage, which in turn inherits from AMFPropertyStorage. The interface exposes several methods for initialization, for submitting input, and for retrieving output AMFData objects. The basic flowchart of component data processing specified in the AMF SDK is the following.

AMFFactory

The AMFFactory interface is the entry point for creating AMF objects. AMFFactory inherits from AMFObject and, unlike other AMF objects, does not derive from AMFInterface. We get the AMFFactory instance from the static GetFactory() method of the AMFRoot class. The wrapper object exposes only the two methods that we need: CreateContext() for creating AMFContext object instances, and CreateComponent() for creating the encoder component.

Encoder Initialization

Now that we have a wrapper for all the necessary objects, we need to create an encoder object and initialize its properties.

In the filter implementation, we have three helper methods to create an encoder object, initialize its properties, and destroy it: OpenEncoder(), ConfigureEncoder() and CloseEncoder().

OpenEncoder() creates an encoder instance. It also calls ConfigureEncoder(), the method where we apply encoder settings and input stream parameters. CloseEncoder() disposes of the encoder variables and frees resources. Access to the encoder variable is guarded by a lock to avoid multithreading issues.

As mentioned earlier, we use the AMFFactory object to create an instance of the AMFContext interface. AMFContext should be initialized with the graphics technology we want to use. Our wrapper exposes only the DirectX 11 initialization method, but in your implementation, you can add other technologies supported by the AMF SDK. For details, see the methods of the AMFContext interface in the SDK documentation. Depending on the selected graphics technology, the encoder component uses different underlying types. For example, if you render with DirectX 11, you can initialize AMFContext with this technology and pass your own render targets for encoding without any copying, as AMFContext contains methods which allow you to create objects from existing resources of the selected technology.

// Create Encoder Objects

protected HRESULT OpenEncoder()

{

lock (this)

{

if (m_Encoder != null) return NOERROR;

AMF.AMF_RESULT result;

AMF.AMFFactory factory = null;

try

{

// Get the factory object

result = AMF.AMFRoot.GetFactory(out factory);

if (result == AMF.AMF_RESULT.OK)

{

// Create context

result = factory.CreateContext(out m_Context);

}

if (result == AMF.AMF_RESULT.OK)

{

result = AMF.AMF_RESULT.NOT_SUPPORTED;

if (m_DecoderType == AMF.AMF_MEMORY_TYPE.DX11)

{

// Initialize context type

result = m_Context.InitDX11();

}

}

if (result == AMF.AMF_RESULT.OK)

{

// Create Encoder Component

result = factory.CreateComponent(m_Context,

AMF.AMFVideoEncoder.VCE_AVC, out m_Encoder);

}

}

finally

{

if (factory != null)

{

factory.Dispose();

}

}

if (result != AMF.AMF_RESULT.OK)

{

CloseEncoder();

return E_FAIL;

}

// Configure Encoder

HRESULT hr = ConfigureEncoder();

if (FAILED(hr))

{

CloseEncoder();

return hr;

}

// Mark that we need to send sps pps data first

m_bFirstSample = true;

// Initialize Encoder Object

result = m_Encoder.Init(m_SurfaceFormat, m_nWidth, m_nHeight);

if (result != AMF.AMF_RESULT.OK)

{

CloseEncoder();

return E_FAIL;

}

}

return S_OK;

}

After initialization of the AMFContext object, we create an instance of the encoder AMFComponent. This is done with the CreateComponent() method of the AMFFactory. We are specifying the "AMFVideoEncoderVCE_AVC" component string, which identifies the H264 encoder component type. Once the encoder component is created, we call our prepared method ConfigureEncoder() to set up its parameters.

// Setup Encoder settings

protected HRESULT ConfigureEncoder()

{

if (!Input.IsConnected) return VFW_E_NOT_CONNECTED;

BitmapInfoHeader _bmi = Input.CurrentMediaType;

if (Output.IsConnected)

{

m_bAVC = (Output.CurrentMediaType.subType == MEDIASUBTYPE_AVC);

}

AMF.AMF_RESULT result;

lock (this)

{

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.USAGE,

(long)AMF.AMF_VIDEO_ENCODER_USAGE.TRANSCODING);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.QUALITY_PRESET,

(int)m_Config.Preset);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.FRAMESIZE,

new AMF.AMFSize(m_nWidth, m_nHeight));

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.SCANTYPE,

(int)AMF.AMF_VIDEO_ENCODER_SCANTYPE.PROGRESSIVE);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.RATE_CONTROL_METHOD,

(int)m_Config.RateControl);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.DE_BLOCKING_FILTER,

m_Config.bDeblocking);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.IDR_PERIOD,

(int)m_Config.IDRPeriod);

if ((m_Config.Profile & 0xff) != 0)

{

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.PROFILE,

(int)(m_Config.Profile & 0xff));

}

if (((m_Config.Profile >> 8) & 0xff) != 0)

{

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.PROFILE_LEVEL,

(int)((m_Config.Profile >> 8) & 0xff));

}

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.B_PIC_PATTERN,

m_Config.BPeriod);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.ADAPTIVE_MINIGOP,

m_Config.bGOP);

long _rate = 0;

if (_rate == 0)

{

VideoInfoHeader _vih = Input.CurrentMediaType;

if (_vih != null)

{

_rate = _vih.AvgTimePerFrame;

}

}

if (_rate == 0)

{

VideoInfoHeader2 _vih = Input.CurrentMediaType;

if (_vih != null)

{

_rate = _vih.AvgTimePerFrame;

}

}

if (_rate != 0)

{

long a = UNITS;

long b = _rate;

long c = a % b;

while (c != 0)

{

a = b;

b = c;

c = a % b;

}

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.FRAMERATE,

new AMF.AMFRate((int)(UNITS / b),

(int)(_rate / b)));

}

m_rtFrameRate = _rate;

if (m_nWidth != 0 && m_nHeight != 0)

{

int a = m_nWidth;

int b = m_nHeight;

int c = a % b;

while (c != 0)

{

a = b;

b = c;

c = a % b;

}

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.ASPECT_RATIO,

new AMF.AMFRatio((uint)(m_nWidth / b),

(uint)(m_nHeight / b)));

}

long lRecomended = ((long)m_nWidth * (long)m_nHeight) << 3;

{

long lBitrate = m_Config.Bitrate;

if (lBitrate <= 0 || (lBitrate < lRecomended && m_Config.bAutoBitrate))

{

lBitrate = lRecomended;

}

result = m_Encoder.SetProperty

(AMF.AMF_VIDEO_ENCODER_PROP.TARGET_BITRATE, lBitrate);

lBitrate *= 10;

result = m_Encoder.SetProperty

(AMF.AMF_VIDEO_ENCODER_PROP.PEAK_BITRATE, lBitrate);

}

int nCabac = (int)(m_Config.bCabac ? AMF.AMF_VIDEO_ENCODER_CODING.CABAC

: AMF.AMF_VIDEO_ENCODER_CODING.CALV);

result = m_Encoder.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.CABAC_ENABLE, nCabac);

}

return S_OK;

}
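
The two loops in ConfigureEncoder() are Euclid's algorithm: they find the greatest common divisor and use it to reduce the frame rate and the aspect ratio to lowest terms. A standalone sketch of that step follows; the RatioHelper class and its method names are hypothetical, and the denominator is assumed to be non-zero.

```csharp
using System;

static class RatioHelper
{
    // Euclid's algorithm: greatest common divisor of a and b.
    public static long Gcd(long a, long b)
    {
        while (b != 0)
        {
            long c = a % b;
            a = b;
            b = c;
        }
        return a;
    }

    // Reduces num/den to lowest terms (den is assumed to be non-zero).
    public static void Reduce(long num, long den, out long rNum, out long rDen)
    {
        long g = Gcd(num, den);
        rNum = num / g;
        rDen = den / g;
    }
}
```

For example, an AvgTimePerFrame of 400000 (in 100 ns units, with UNITS equal to 10000000) reduces to a frame rate of 25/1, and a 1920x1080 frame reduces to an aspect ratio of 16:9.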

We use the SetProperty() method of the AMFComponent, which is exposed through the AMFPropertyStorage interface, to configure the encoder settings. The H264 component has its own parameters, which are listed in the AMF_VIDEO_ENCODER_PROP class as static strings. Other components may use different parameter names or values, so you should look at the documentation of those components in the AMF SDK.

The last step is to initialize the encoder with the actual input settings: video resolution and surface format. This call succeeds only if all the previous steps were done properly. If object creation or encoder initialization fails, we call the CloseEncoder() method, which releases all AMF resources.

// Free Encoder Objects

protected HRESULT CloseEncoder()

{

lock (this) {

if (m_Encoder != null)

{

m_Encoder.Terminate();

m_Encoder.Dispose();

m_Encoder = null;

}

if (m_Context != null)

{

m_Context.Terminate();

m_Context.Dispose();

m_Context = null;

}

}

return NOERROR;

}

Along with Dispose(), we call the Terminate() method of the AMFComponent and AMFContext objects to clear all internal resources held by those objects. After the Terminate() method has been called, accessing the AMFComponent can cause a crash.

DirectShow Implementation

The encoder filter uses TransformFilter as the base class for the implementation. We need to override its abstract methods.

To validate supported input formats, we override the CheckInputType() method, where we return S_OK for all supported inputs. Validation is based on the formats that the AMF SDK lists as supported.

// Validate input format

public override int CheckInputType(AMMediaType pmt)

{

// We accept video only

if (pmt.majorType != MediaType.Video)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

// Format must be specified

if (pmt.formatType != FormatType.VideoInfo && pmt.formatType != FormatType.VideoInfo2)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

if (pmt.formatPtr == IntPtr.Zero)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

// Check the supported formats

if (

(pmt.subType != MediaSubType.YV12)

&& (pmt.subType != MediaSubType.UYVY)

&& (pmt.subType != MediaSubType.NV12)

&& (pmt.subType != MediaSubType.YUY2)

&& (pmt.subType != MediaSubType.YUYV)

&& (pmt.subType != MediaSubType.IYUV)

&& (pmt.subType != MediaSubType.RGB32)

&& (pmt.subType != MediaSubType.ARGB32)

)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

// Check an alignment for the planar types

if (

(pmt.subType == MediaSubType.YV12)

|| (pmt.subType == MediaSubType.NV12)

|| (pmt.subType == MediaSubType.IYUV)

)

{

BitmapInfoHeader _bmi = pmt;

if (ALIGN16(_bmi.Width) != _bmi.Width)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

}

return NOERROR;

}
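
The ALIGN16() helper used in the alignment check is not shown in the listing. A plausible definition, assuming it rounds a value up to the next multiple of 16, could look like the sketch below; the Align class name is hypothetical.

```csharp
static class Align
{
    // Rounds value up to the next multiple of 16
    // (assumed meaning of the ALIGN16 helper used above).
    public static int Align16(int value)
    {
        return (value + 15) & ~15;
    }
}
```

Under this definition, a width of 1920 passes the check because it is already a multiple of 16, while a width of 1366 would be rounded to 1376 and the planar media type would be rejected.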

The CheckTransform() method also needs to be overridden; it is used to validate both input and output types for the transformation. In the case of the encoder implementation, we can just return S_OK.

Another method which we should override from the base implementation is SetMediaType(). It receives the final media format description of the input or output pin. In this method, we gather all the information about the input format and resolution and initialize the encoder component with those settings. As we receive data in CPU memory, we have to copy it to the GPU before submitting it to the encoder component. So we also prepare information about the pitch of each plane depending on the input format.

// Set input or output media format

public override int SetMediaType(PinDirection _direction, AMMediaType mt)

{

HRESULT hr = (HRESULT)base.SetMediaType(_direction, mt);

if (hr.Failed) return hr;

// If we set input media type

if (_direction == PinDirection.Input)

{

BitmapInfoHeader _bmi = mt;

if (_bmi != null)

{

m_nWidth = _bmi.Width;

m_nHeight = Math.Abs(_bmi.Height);

m_bVerticalFlip = (_bmi.Height > 0);

}

// Configure settings of each format

if (mt.subType == MediaSubType.YV12)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.YUV420P;

m_hPitch = m_nWidth;

m_vPitch = m_nHeight;

m_bFlipUV = true;

}

if (mt.subType == MediaSubType.YUYV || mt.subType == MediaSubType.YUY2)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.YUY2;

m_hPitch = m_nWidth << 1;

m_vPitch = m_nHeight;

}

if (mt.subType == MediaSubType.NV12)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.NV12;

m_hPitch = m_nWidth;

m_vPitch = m_nHeight;

}

if (mt.subType == MediaSubType.UYVY)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.UYVY;

m_hPitch = m_nWidth << 1;

m_vPitch = m_nHeight;

}

if (mt.subType == MediaSubType.IYUV)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.YUV420P;

m_hPitch = m_nWidth;

m_vPitch = m_nHeight;

m_bFlipUV = false;

}

if (mt.subType == MediaSubType.RGB32 || mt.subType == MediaSubType.ARGB32)

{

m_SurfaceFormat = AMF.AMF_SURFACE_FORMAT.ARGB;

m_hPitch = m_nWidth << 2;

m_vPitch = m_nHeight;

}

// Setup encoder instance

hr = OpenEncoder();

if (FAILED(hr)) return hr;

}

return hr;

}
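
The horizontal pitch values assigned above follow from the number of bytes per pixel in the first plane of each format. A compact sketch of that mapping, keyed by format name for illustration only, is given below; the HostPitch class is hypothetical and assumes tightly packed rows without padding.

```csharp
using System;

static class HostPitch
{
    // Bytes per row of the first plane for a tightly packed host buffer.
    public static int GetRowPitch(string format, int width)
    {
        switch (format)
        {
            case "YV12":
            case "IYUV":
            case "NV12":
                return width;        // planar: 1 byte per luma sample
            case "YUY2":
            case "YUYV":
            case "UYVY":
                return width << 1;   // packed 4:2:2: 2 bytes per pixel
            case "RGB32":
            case "ARGB32":
                return width << 2;   // 4 bytes per pixel
            default:
                throw new ArgumentException("Unsupported format: " + format);
        }
    }
}
```

For a 1920-pixel-wide frame, this gives 1920 bytes per row for NV12, 3840 for YUY2 and 7680 for RGB32.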

To connect the output pin with the downstream filter, we should prepare the supported formats. Those formats are requested from the filter and listed in the overridden GetMediaType() method. The index argument is the zero-based index of the requested output media type. The method should return VFW_S_NO_MORE_ITEMS once all types are listed; otherwise, it should fill in the media type structure which is also passed as an argument.

// Build the output media types which are supported

public override int GetMediaType(int iPosition, ref AMMediaType pMediaType)

{

if (pMediaType == null) return E_POINTER;

if (iPosition < 0) return E_INVALIDARG;

if (!Input.IsConnected) return VFW_E_NOT_CONNECTED;

if (iPosition > 0) return VFW_S_NO_MORE_ITEMS;

// Open the encoder if that has not been done yet

HRESULT hr = OpenEncoder();

if (FAILED(hr)) return hr;

pMediaType.majorType = MediaType.Video;

pMediaType.subType = MEDIASUBTYPE_H264;

BitmapInfoHeader _bmi = Input.CurrentMediaType;

long _rate = 0;

if (_rate == 0)

{

VideoInfoHeader vih = Input.CurrentMediaType;

if (vih != null)

{

_rate = vih.AvgTimePerFrame;

}

}

if (_rate == 0)

{

VideoInfoHeader2 vih = Input.CurrentMediaType;

if (vih != null)

{

_rate = vih.AvgTimePerFrame;

}

}

int _width = m_nWidth;

int _height = m_nHeight;

pMediaType.formatType = FormatType.VideoInfo;

VideoInfoHeader _vih = new VideoInfoHeader();

_vih.AvgTimePerFrame = _rate;

_vih.BmiHeader.Size = Marshal.SizeOf(typeof(BitmapInfoHeader));

_vih.BmiHeader.Width = _width;

_vih.BmiHeader.Height = _height;

_vih.BmiHeader.BitCount = 12;

_vih.BmiHeader.ImageSize = _vih.BmiHeader.Width * Math.Abs(_vih.BmiHeader.Height) *

(_vih.BmiHeader.BitCount > 0 ? _vih.BmiHeader.BitCount : 24) / 8;

_vih.BmiHeader.Planes = 1;

_vih.BmiHeader.Compression = MAKEFOURCC('H', '2', '6', '4');

_vih.SrcRect.right = _width;

_vih.SrcRect.bottom = _height;

_vih.TargetRect.right = _width;

_vih.TargetRect.bottom = _height;

if (m_Config.Bitrate == 0)

{

_vih.BitRate = _vih.BmiHeader.ImageSize;

}

else

{

_vih.BitRate = (int)m_Config.Bitrate;

}

pMediaType.sampleSize = _vih.BmiHeader.ImageSize;

pMediaType.SetFormat(_vih);

return NOERROR;

}

We set the output type to MEDIASUBTYPE_H264. The format parameters such as width, height and frame rate are set based on the input pin connection format.
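
Two small calculations in GetMediaType() are worth spelling out: the FOURCC code written to the Compression field and the ImageSize estimate. The sketch below reproduces both; the FourCC class is a hypothetical helper, and its Make() method is assumed to match the usual MAKEFOURCC byte order (first character in the lowest byte).

```csharp
using System;

static class FourCC
{
    // Packs four ASCII characters into a 32-bit code, first character lowest.
    public static int Make(char c0, char c1, char c2, char c3)
    {
        return (byte)c0 | ((byte)c1 << 8) | ((byte)c2 << 16) | ((byte)c3 << 24);
    }

    // Same formula as in GetMediaType(): falls back to 24 bits per pixel
    // when the bit count is not specified.
    public static int ImageSize(int width, int height, int bitCount)
    {
        return width * Math.Abs(height) * (bitCount > 0 ? bitCount : 24) / 8;
    }
}
```

For 'H', '2', '6', '4' the packed value is 0x34363248, and a 1920x1080 frame at 12 bits per pixel gives an image size of 3110400 bytes.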

For the output communication, we should specify allocator parameters: buffer size and number of buffers. Those settings are configured in the DecideBufferSize() overridden method.

// Adjust output buffer size and number of buffers

public override int DecideBufferSize

(ref IMemAllocatorImpl pAlloc, ref AllocatorProperties prop)

{

if (!Output.IsConnected) return VFW_E_NOT_CONNECTED;

AllocatorProperties _actual = new AllocatorProperties();

BitmapInfoHeader _bmi = (BitmapInfoHeader)Input.CurrentMediaType;

if (_bmi == null) return VFW_E_INVALIDMEDIATYPE;

prop.cbBuffer = _bmi.GetBitmapSize();

prop.cbAlign = 1;

if (prop.cbBuffer < Input.CurrentMediaType.sampleSize)

{

prop.cbBuffer = Input.CurrentMediaType.sampleSize;

}

if (prop.cbBuffer < _bmi.ImageSize)

{

prop.cbBuffer = _bmi.ImageSize;

}

// Calculate optimal size for the output

int lSize = (_bmi.Width * Math.Abs(_bmi.Height) *

(_bmi.BitCount + _bmi.BitCount % 8) / 8);

if (prop.cbBuffer < lSize)

{

prop.cbBuffer = lSize;

}

// Number of buffers

prop.cBuffers = 10;

int hr = pAlloc.SetProperties(prop, _actual);

return hr;

}

Once we start the graph for processing, the Pause() method is called while the filter is still in the FilterState.Stopped state. So in our overridden implementation of Pause(), we prepare the initial output parameters, create the encoder if it was not initialized, and start the output processing thread.

// Called once the filter switched into paused state

public override int Pause()

{

// If we are currently in the stopped state

if (m_State == FilterState.Stopped)

{

m_evQuit.Reset();

lock (this)

{

// In case if no encoder so far create it

if (m_Encoder == null)

{

HRESULT hr = OpenEncoder();

if (FAILED(hr)) return hr;

}

}

// Initialize startup parameters

m_bFirstSample = true;

m_rtPosition = 0;

// No input and output in queues

m_evInput.Reset();

m_evOutput.Reset();

m_evFlush.Reset();

// Start the encoding thread

m_Thread.Create();

}

return base.Pause();

}

During the stopping of the graph, the Stop() method is called. In that method, we just stop the processing thread and collect the garbage.

// Called once the filter switched into stopped state

public override int Stop()

{

// Set quit and flush to exit all waiting threads

m_evQuit.Set();

m_evFlush.Set();

// Shutdown encoder thread

m_Thread.Close();

// Switch into stopped state

HRESULT hr = (HRESULT)base.Stop();

GC.Collect();

return hr;

}
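
The start and stop sequence above relies on a worker thread that waits on a quit event and on work events. The article's m_Thread helper comes from BaseClasses.NET and its internals are not shown here, so the following is only a sketch of the same pattern with plain System.Threading types; the EncoderWorker class and its members are hypothetical.

```csharp
using System;
using System.Threading;

class EncoderWorker
{
    private readonly ManualResetEvent m_evQuit = new ManualResetEvent(false);
    private readonly AutoResetEvent m_evInput = new AutoResetEvent(false);
    private Thread m_Thread;
    private int m_nProcessed;

    public int Processed
    {
        get { return Interlocked.CompareExchange(ref m_nProcessed, 0, 0); }
    }

    public bool IsRunning
    {
        get { return m_Thread != null && m_Thread.IsAlive; }
    }

    // Counterpart of Pause(): reset the quit event and start the thread.
    public void Start()
    {
        m_evQuit.Reset();
        m_Thread = new Thread(ThreadProc);
        m_Thread.Start();
    }

    // Signals that one unit of work is available.
    public void Signal()
    {
        m_evInput.Set();
    }

    // Counterpart of Stop(): set the quit event and wait for the thread to exit.
    public void Stop()
    {
        m_evQuit.Set();
        if (m_Thread != null)
        {
            m_Thread.Join();
            m_Thread = null;
        }
    }

    private void ThreadProc()
    {
        WaitHandle[] handles = { m_evQuit, m_evInput };
        // Index 0 is the quit event, so it wins when both are signaled.
        while (WaitHandle.WaitAny(handles) != 0)
        {
            Interlocked.Increment(ref m_nProcessed);
        }
    }
}
```

Placing the quit event first in the handle array means a pending stop request takes priority over pending input, which keeps shutdown prompt.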

There are two more methods which may need to be overridden. Both overrides must call the base class implementation. One is the BreakConnect() method. It signals that the input or output pin has been disconnected. In our implementation, we release the encoder resources if the argument is the input pin.

// Called once the pin disconnected

public override int BreakConnect(PinDirection _direction)

{

HRESULT hr = (HRESULT)base.BreakConnect(_direction);

if (hr.Failed) return hr;

// If we disconnect input pin then we close the encoder

if (_direction == PinDirection.Input)

{

CloseEncoder();

}

return hr;

}

Another method we need to override is CompleteConnect(). Here, we handle the case where the input pin becomes connected while the output was already connected. This is possible if we connect both pins of the filter and then decide to reconnect the input. In such a case, we must reconnect the output pin, as the format and buffer size may change with the new input format.

// Final call for the connection completed

public override int CompleteConnect(PinDirection _direction, ref IPinImpl pPin)

{

HRESULT hr = (HRESULT)base.CompleteConnect(_direction, ref pPin);

if (hr.Failed) return hr;

// Just reconnect output pin in case it was connected before the input

if (_direction == PinDirection.Input && Output.IsConnected)

{

hr = (HRESULT)Output.ReconnectPin();

}

return hr;

}

Receiving Samples

As this is an encoder filter, we may not have output data right after we receive the input. Because of that, we pass the data to the encoder in the overridden OnReceive() method of the base class and leave the abstract Transform() method with a stub implementation.

// Overriding abstract method, but it is not used here as we also override OnReceive

public override int Transform(ref IMediaSampleImpl pIn, ref IMediaSampleImpl pOut)

{

return E_UNEXPECTED;

}

At the beginning of the OnReceive() method, we should handle an input media type change. That situation is possible when the upstream filter calls the CheckInputType() method (QueryAccept() on the pin interface) while the input pin is already connected and then, if we can accept the new type, attaches that type to the incoming media sample. This mechanism is called QueryAccept (Downstream).

// Set the new media type if it is set with the sample

{

AMMediaType pmt;

if (S_OK == _sample.GetMediaType(out pmt))

{

SetMediaType(PinDirection.Input,pmt);

Input.CurrentMediaType.Set(pmt);

pmt.Free();

}

}

In the SetMediaType() implementation, we should be prepared for the format changing dynamically. So when the media type changes in the streaming state, we check whether the resolution or the format of the input has changed and, in that case, recreate the encoder objects and restart the output thread.

// Store initial values to check encoder reset

int Width = m_nWidth;

int Height = m_nHeight;

AMF.AMF_SURFACE_FORMAT Format = m_SurfaceFormat;

bool bRunning = (m_State != FilterState.Stopped);

BitmapInfoHeader _bmi = mt;

if (_bmi != null)

{

m_nWidth = _bmi.Width;

m_nHeight = Math.Abs(_bmi.Height);

}

///...

// Type can be set while filter active

if (bRunning)

{

// In that case store actual video resolution

VideoInfoHeader pvi = mt;

if (pvi != null)

{

if (pvi.TargetRect.right - pvi.TargetRect.left < m_nWidth

&& pvi.TargetRect.right - pvi.TargetRect.left > 1)

{

m_nWidth = (pvi.TargetRect.right - pvi.TargetRect.left);

}

if (pvi.TargetRect.bottom - pvi.TargetRect.top < m_nHeight

&& pvi.TargetRect.bottom - pvi.TargetRect.top > 1)

{

m_nHeight = (pvi.TargetRect.bottom - pvi.TargetRect.top);

}

}

}

// Check if we need to reset encoder

if (m_nWidth != Width || m_nHeight != Height || Format != m_SurfaceFormat)

{

// Shutdown encoder thread

m_evQuit.Set();

m_Thread.Close();

// Close the encoder

CloseEncoder();

}

///...

// If we are running, start the encoder thread

if (bRunning && !m_Thread.ThreadExists)

{

m_bFirstSample = true;

m_rtPosition = 0;

m_evQuit.Reset();

m_Thread.Create();

}

For the encoder input, we need to pass an AMFSurface object which is allocated in GPU memory. The format of that surface should be the same as the one we used to initialize the encoder component. For most formats, once we receive the video data, we can create a wrapped AMFSurface object over the actual media sample data buffer without any copying. Such a surface resides in host (CPU) memory. For that purpose, we use the CreateSurfaceFromHostNative() method of the AMFContext object. The created surface can then be copied to another AMFSurface object which is allocated in GPU memory, using the CopySurfaceRegion() method of the AMFSurface interface.

IntPtr p;

_sample.GetPointer(out p);

AMF.AMFSurface output_surface = null;

AMF.AMFSurface surface = null;

AMF.AMF_RESULT result = AMF.AMF_RESULT.FAIL;

// Create output surface on GPU

result = m_Context.AllocSurface

(m_DecoderType, m_SurfaceFormat, m_nWidth, m_nHeight, out output_surface);

if (result == AMF.AMF_RESULT.OK)

{

// Wrap host surface with the media sample buffer

result = m_Context.CreateSurfaceFromHostNative(m_SurfaceFormat, m_nWidth,

m_nHeight, m_hPitch,

m_vPitch, p, out surface);

}

// If we have wrapped surface

if (result == AMF.AMF_RESULT.OK && surface != null)

{

// Copy it to the GPU memory

result = surface.CopySurfaceRegion(output_surface, 0, 0, 0, 0, m_nWidth, m_nHeight);

}

For RGB data, the image may arrive bottom-up, that is, vertically flipped. In the media pipeline, RGB data arrives bottom-up if the height has a positive value in the media type; if the height is negative, the image data comes in the regular top-down order.

In the case of a bottom-up image, we cannot use a wrapped buffer surface, as the resulting video would also be vertically flipped. Instead, we create an AMFSurface object in host (CPU) memory with the AllocSurface() method of the AMFContext interface and copy the image data into that surface row by row in reverse order, which restores the top-down order. After that, we call the Convert() method of the AMFSurface, specifying the desired memory type: DirectX 11 in our case.

if (m_SurfaceFormat == AMF.AMF_SURFACE_FORMAT.ARGB && m_bVerticalFlip)

{

result = m_Context.AllocSurface(AMF.AMF_MEMORY_TYPE.HOST, m_SurfaceFormat, m_nWidth,

m_nHeight, out output_surface);

if (result == AMF.AMF_RESULT.OK)

{

p = (IntPtr)(p.ToInt64() + m_hPitch * (m_nHeight - 1));

var plane = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.PACKED);

var dest = plane.Native;

for (int i = 0; i < m_nHeight; i++)

{

API.CopyMemory(dest,p, m_hPitch);

dest = (IntPtr)(dest.ToInt64() + plane.HPitch);

p = (IntPtr)(p.ToInt64() - m_hPitch);

}

// Convert surface to the GPU memory type

result = output_surface.Convert(m_DecoderType);

}

}
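
The row-reversal loop can be tried out on managed arrays, without any AMF objects. The sketch below assumes a tightly packed source buffer where the pitch equals the row size in bytes; the ImageFlip class is a hypothetical helper written for this illustration.

```csharp
using System;

static class ImageFlip
{
    // Copies a bottom-up image into a new top-down buffer, one row at a time.
    public static byte[] ToTopDown(byte[] src, int pitch, int height)
    {
        byte[] dst = new byte[pitch * height];
        int srcOffset = pitch * (height - 1);   // start at the last source row
        int dstOffset = 0;
        for (int i = 0; i < height; i++)
        {
            Buffer.BlockCopy(src, srcOffset, dst, dstOffset, pitch);
            dstOffset += pitch;   // next destination row
            srcOffset -= pitch;   // previous source row
        }
        return dst;
    }
}
```

In the filter code, the destination advances by the plane's own HPitch rather than by the source pitch, because the allocated surface may have padded rows.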

For the YV12 input format, we also need to create a surface object on the host and then convert it to GPU memory. This is necessary as the AMF SDK does not support the YV12 format, but it supports IYUV, which is the YUV420P format in the AMF SDK. Both are 4:2:0 planar formats with three planes, and they differ only in that the U and V planes are swapped. So we allocate an IYUV (YUV420P) AMFSurface and copy each plane of the source data, swapping U and V. Then we also call the Convert() surface method to move it to the GPU.

// For YV12 format we have to do input manually as YV12 format not supported

if (m_SurfaceFormat == AMF.AMF_SURFACE_FORMAT.YUV420P && m_bFlipUV)

{

// Allocate YUV420P Surface on HOST

result = m_Context.AllocSurface(AMF.AMF_MEMORY_TYPE.HOST, m_SurfaceFormat, m_nWidth,

m_nHeight, out output_surface);

if (result == AMF.AMF_RESULT.OK)

{

// Setup source plane pointers

IntPtr y = p;

IntPtr v = (IntPtr)(p.ToInt64() + m_hPitch * m_vPitch);

IntPtr u = (IntPtr)(v.ToInt64() + ((m_hPitch * m_vPitch) >> 2));

int hPitch_d2 = m_hPitch >> 1;

int nWidth_d2 = m_nWidth >> 1;

// Setup destination plane pointers

int y_stride = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.Y).HPitch;

int v_stride = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.V).HPitch;

var dY = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.Y).Native;

var dV = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.V).Native;

var dU = output_surface.GetPlane(AMF.AMF_PLANE_TYPE.U).Native;

// Copy planes data

for (int i = 0; i < (m_nHeight >> 1); i++)

{

API.CopyMemory(dY, y, m_nWidth);

y = (IntPtr)(y.ToInt64() + m_hPitch);

dY = (IntPtr)(dY.ToInt64() + y_stride);

API.CopyMemory(dY, y, m_nWidth);

y = (IntPtr)(y.ToInt64() + m_hPitch);

dY = (IntPtr)(dY.ToInt64() + y_stride);

API.CopyMemory(dV, v, nWidth_d2);

v = (IntPtr)(v.ToInt64() + hPitch_d2);

dV = (IntPtr)(dV.ToInt64() + v_stride);

API.CopyMemory(dU, u, nWidth_d2);

u = (IntPtr)(u.ToInt64() + hPitch_d2);

dU = (IntPtr)(dU.ToInt64() + v_stride);

}

// Convert surface to the GPU memory type

result = output_surface.Convert(m_DecoderType);

}

}
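To make the plane layout concrete, here is a small managed sketch of the same U and V swap. It assumes a tightly packed frame (no row padding) with even width and height, which is simpler than the pitched copy in the filter; the Yv12Sketch class is a hypothetical name used only for illustration.

```csharp
using System;

static class Yv12Sketch
{
    // Repacks a tightly packed YV12 frame (planes Y, V, U)
    // as IYUV/I420 (planes Y, U, V).
    public static byte[] Yv12ToI420(byte[] yv12, int width, int height)
    {
        int ySize = width * height;   // full resolution luma plane
        int cSize = ySize / 4;        // each chroma plane is a quarter of luma
        if (yv12.Length < ySize + 2 * cSize)
            throw new ArgumentException("Source buffer is too small");

        byte[] i420 = new byte[ySize + 2 * cSize];
        // Y plane is identical in both formats
        Buffer.BlockCopy(yv12, 0, i420, 0, ySize);
        // U is the second chroma plane in YV12 and the first in I420
        Buffer.BlockCopy(yv12, ySize + cSize, i420, ySize, cSize);
        // V is the first chroma plane in YV12 and the second in I420
        Buffer.BlockCopy(yv12, ySize, i420, ySize + cSize, cSize);
        return i420;
    }
}
```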

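Once the output surface is ready, we set its timestamp and duration, which we get from the incoming media sample. If the sample has no stop time, we use the frame duration instead; if it has no time stamps at all, we continue from the position of the previous sample.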

// Configure timings and set it to the output surface

long start, stop;

hr = (HRESULT)_sample.GetTime(out start, out stop);

if (hr >= 0)

{

output_surface.pts = start;

if (hr == 0)

{

output_surface.Duration = stop - start;

m_rtPosition = stop;

}

else

{

output_surface.Duration = m_rtFrameRate;

m_rtPosition = start + m_rtFrameRate;

}

}

else

{

output_surface.pts = m_rtPosition;

output_surface.Duration = m_rtFrameRate;

m_rtPosition += m_rtFrameRate;

}
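The three timing cases can be expressed as one small function. This is a sketch under the assumption that the result of GetTime() is passed in as a plain integer (0 for S_OK, a positive success code such as VFW_S_NO_STOP_TIME, or a negative failure code); the TimingSketch class and its parameter names are hypothetical.

```csharp
using System;

static class TimingSketch
{
    // Picks the presentation time and duration for one frame.
    // hr mirrors the result of IMediaSample.GetTime():
    //   0  : start and stop are both valid
    //   >0 : only start is valid (no stop time)
    //   <0 : the sample has no time stamps
    // position is the running stream position, updated for the next call.
    public static void Resolve(int hr, long start, long stop, long frameDuration,
                               ref long position, out long pts, out long duration)
    {
        if (hr >= 0)
        {
            pts = start;
            duration = (hr == 0) ? stop - start : frameDuration;
        }
        else
        {
            pts = position;
            duration = frameDuration;
        }
        // The next frame without time stamps continues from here
        position = pts + duration;
    }
}
```

All values are in 100 ns reference time units, so a frame duration of 400000 corresponds to 25 frames per second.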

The first sample must carry the encoder initialization information, so we set flags telling the encoder to insert the SPS and PPS data into the bitstream. We also signal that the first frame must be a keyframe (IDR).

// For the first sample setup SPS/PPS and force IDR frame

if (m_bFirstSample)

{

output_surface.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.FORCE_PICTURE_TYPE,

(int)AMF.AMF_VIDEO_ENCODER_PICTURE_TYPE.IDR);

output_surface.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.INSERT_SPS, true);

output_surface.SetProperty(AMF.AMF_VIDEO_ENCODER_PROP.INSERT_PPS, true);

m_bFirstSample = false;

}

After that, we are ready to submit the surface to the encoder. This is done by calling the SubmitInput() method of the AMFComponent object. The submission can fail if input surfaces arrive too quickly and the internal input queue of the encoder becomes full. In that situation, we wait for the output event to be signaled. This event is set when output bitstream data has been delivered, which means we can try to feed the encoder with new input. So we wait in a loop for that event notification or for the quit or flush signals. Once we successfully feed the encoder, we also signal with an event that a new input surface has been submitted for encoding.

hr = S_OK;

do

{

lock (this)

{

// Submit Surface to the Encoder

if (m_Encoder != null)

{

result = m_Encoder.SubmitInput(output_surface);

}

else

{

hr = E_FAIL;

break;

}

}

// If we succeeded signal to the output thread

if (result == AMF.AMF_RESULT.OK)

{

m_evInput.Set();

break;

}

else

{

// Otherwise wait for the encoder free slot

if (0 != WaitHandle.WaitAny(new WaitHandle[] { m_evOutput, m_evFlush, m_evQuit }))

{

break;

}

}

} while (hr == S_OK);
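The retry pattern in this loop relies on WaitHandle.WaitAny() returning the index of the signaled handle: index 0 means a slot was freed and we should retry, any other index means we should stop. Below is a minimal sketch of that pattern, with the encoder call replaced by a delegate; the SubmitSketch class and its parameter names are hypothetical.

```csharp
using System;
using System.Threading;

static class SubmitSketch
{
    // Retries submit() until it succeeds, waiting for a free slot
    // between attempts. Returns true when the input was accepted and
    // false when the flush or quit event was signaled first.
    public static bool SubmitWithBackpressure(Func<bool> submit,
        WaitHandle slotFreed, WaitHandle flush, WaitHandle quit)
    {
        WaitHandle[] handles = { slotFreed, flush, quit };
        while (true)
        {
            if (submit())
            {
                return true;
            }
            // WaitAny returns the index of the signaled handle:
            // 0 is slotFreed (retry), 1 and 2 mean flush or quit (stop)
            if (WaitHandle.WaitAny(handles) != 0)
            {
                return false;
            }
        }
    }
}
```

Using an AutoResetEvent for the slot notification means each delivered output sample allows exactly one retry, while manual reset events suit flush and quit because every waiter has to observe them.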

Delivery Output Samples

The main purpose of an AMF component is to process a media stream, usually as part of a pipeline. The AMFComponent takes AMFData objects on input and also provides AMFData on output. In the encoder implementation, the input is an AMFSurface and the output is an AMFBuffer; both are derived from AMFData.

Output delivery is performed in a separate thread. This thread is started once the filter switches into the active state. The thread takes a local reference to the encoder object and increments its reference count. In the processing loop, we request output data from the encoder and, if it is not yet available, switch into a waiting state. We wait for one of three events: the input signal, which is set once a new surface has been passed to the encoder; the quit signal, which is set on shutdown; and the flush notification.

while (true)

{

AMF.AMFData data;

// Request for the output bitstream data

var result = encoder.QueryOutput(out data);

if (data != null)

{

///...

data.Dispose();

}

else

{

// if flushing, we can get EOF exit the loop in that case

if (result != AMF.AMF_RESULT.OK && flushing)

{

encoder.Flush();

break;

}

// If no flushing signals

if (!flushing)

{

// We are waiting for input or quit

int wait = WaitHandle.WaitAny(new WaitHandle[]

{ m_evInput, m_evFlush, m_evQuit });

if (0 != wait)

{

// If quit, then exit the loop

if (m_evQuit.WaitOne(0))

{

break;

}

else

{

// signal to discard input samples

flushing = true;

encoder.Drain();

}

}

}

}

}

If new output data is available and it supports the AMFBuffer interface, the data from that buffer is copied into the IMediaSample. The AMFBuffer also contains the timestamp and duration of the encoded video frame, which are set on the IMediaSample as well. By requesting the AMF_VIDEO_ENCODER_PROP.OUTPUT_DATA_TYPE property of the buffer object, we can determine whether to set the keyframe flag on the output data.

// Query for the AMFBuffer interface

var buffer = data.QueryInterface<AMF.AMFBuffer>();

if (buffer != null)

{

// Get free sample from the output allocator

IntPtr pSample = IntPtr.Zero;

HRESULT hr = (HRESULT)Output.GetDeliveryBuffer(out pSample, null, null, AMGBF.None);

if (hr == S_OK)

{

IMediaSampleImpl sample = new IMediaSampleImpl(pSample);

IntPtr p;

// Copy media data

sample.GetPointer(out p);

sample.SetActualDataLength(buffer.Size);

API.CopyMemory(p, buffer.Native, buffer.Size);

// Setting up sample timings

long start = buffer.pts;

long stop = buffer.pts + buffer.Duration;

sample.SetTime(start, stop);

int type = 0;

// An IDR frame should be marked as sync point

if (AMF.AMF_RESULT.OK ==

buffer.GetProperty(AMF.AMF_VIDEO_ENCODER_PROP.OUTPUT_DATA_TYPE, out type))

{

sample.SetSyncPoint((int)AMF.AMF_VIDEO_ENCODER_OUTPUT_DATA_TYPE.IDR == type);

}

///...

}

buffer.Dispose();

}

To support dynamic format changes, right after we request an IMediaSample from the output pin allocator, we must check whether it carries new media type information. As we support only AVC or H264, the types can differ only in that part, so in our case there is no need for additional checking of the type, and we just pass it to SetMediaType() with the output direction as an argument.

// Handle a type change requested by the downstream filter, for example a switch from Annex B to AVC

{

AMMediaType pmt;

if (S_OK == sample.GetMediaType(out pmt))

{

SetMediaType(PinDirection.Output, pmt);

Output.CurrentMediaType.Set(pmt);

pmt.Free();

}

}

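This way, we handle the dynamic format change mechanism which is called QueryAccept (Upstream): the downstream filter proposes a new format to its upstream peer by attaching the media type to the sample obtained from the allocator.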

Once the IMediaSample is ready, it is delivered to the downstream filter by calling the Deliver() method of the output pin with the media sample as an argument. After that, we set the output event to signal that the encoder may accept new input data.

// Deliver sample

Output.Deliver(ref sample);

sample._Release();

// Signal for the free input slot

m_evOutput.Set();

Output processing continues until either the quit or the flush event is signaled. On quit, the thread exits immediately; on flush, it exits after the remaining output has been drained from the encoder.

Stream Dump for the Debug

While delivering output, we may need, for testing purposes, to save the data produced by the encoder component. For that, we create an output stream if a debug dump file name is set. The stream is created when the output thread starts, with the file name specified in the m_sDumpFileName class variable. We also initialize a helper byte array.

// Prepare debug dump stream

Stream stream = null;

byte[] output = new byte[1920 * 1080];

if (!string.IsNullOrEmpty(m_sDumpFileName))

{

try

{

stream = new FileStream(m_sDumpFileName, FileMode.Create,

FileAccess.Write, FileShare.Read);

}

catch

{

}

}

In the output delivery loop, once we get an AMFBuffer and we have an output stream, we copy the received data to the prepared array and save it to the stream. If there is no room for the data in the array, we just resize it.

// Debug dump if initialized

if (stream != null)

{

if (buffer.Size > output.Length)

{

Array.Resize<byte>(ref output, buffer.Size);

}

Marshal.Copy(buffer.Native, output, 0, buffer.Size);

stream.Write(output, 0, buffer.Size);

}

On exiting the output loop, we also dispose of the output stream if it was created.

if (stream != null)

{

stream.Dispose();

}

The dump file is recreated each time a file seeking operation is performed, and also on the end of stream notification, because both restart the output thread.

The binary data in the dump file is in the H264 bitstream format with start codes.

That binary file can be played with the GraphEdit tool.

AVC and Annex B

H264 media data can be delivered either as full frames with length prefixes (AVC) or as a bitstream with start code prefixes, as described in Annex B of the ITU-T H.264 standard. To signal that we provide AVC framing, we prepare an output media type in the MPEG2VIDEO format, specifying the decoder initialization extra data and the size of the frame length prefix. We set the frame length prefix to four bytes. We get the profile and level by requesting the AMF_VIDEO_ENCODER_PROP.PROFILE and AMF_VIDEO_ENCODER_PROP.PROFILE_LEVEL properties from the encoder component.

AMF.AMFInterface data;

AMF.AMF_RESULT result;

lock (this)

{

if (m_Encoder == null) return VFW_S_NO_MORE_ITEMS;

// Request SPS PPS extradata

result = m_Encoder.GetProperty(AMF.AMF_VIDEO_ENCODER_PROP.EXTRADATA, out data);

// If no info - just skip that type

if (data == null) return VFW_S_NO_MORE_ITEMS;

// Request profile and level

result = m_Encoder.GetProperty(AMF.AMF_VIDEO_ENCODER_PROP.PROFILE, out _profile_idc);

result = m_Encoder.GetProperty

(AMF.AMF_VIDEO_ENCODER_PROP.PROFILE_LEVEL, out _level_idc);

}

We get the initialization parameters from the encoder as the AMF_VIDEO_ENCODER_PROP.EXTRADATA property. The data is in the start code prefixed format, so we should extract the SPS and PPS NAL units from it.

List<byte[]> spspps = new List<byte[]>();

var buffer = data.QueryInterface<AMF.AMFBuffer>();

data.Dispose();

if (buffer != null)

{

IntPtr p = buffer.Native;

IntPtr nalu = IntPtr.Zero;

int total = buffer.Size;

int offset = 0;

// Split up the sps pps nalu

while (total > 3)

{

if (0x01000000 == Marshal.ReadInt32(p, offset))

{

if (nalu != IntPtr.Zero)

{

int type = (Marshal.ReadByte(nalu) & 0x1f);

if (type == 7 || type == 8) // sps pps

{

byte[] info = new byte[offset];

Marshal.Copy(nalu, info, 0, offset);

spspps.Add(info);

}

}

nalu = (IntPtr)(p.ToInt64() + offset + 4);

total -= 4;

p = nalu;

offset = 0;

}

else

{

offset++;

total--;

}

}

if (nalu != IntPtr.Zero)

{

int type = (Marshal.ReadByte(nalu) & 0x1f);

offset += total;

if (type == 7 || type == 8) // sps pps

{

byte[] info = new byte[offset];

Marshal.Copy(nalu, info, 0, offset);

spspps.Add(info);

}

}

}
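The same splitting can be sketched over a managed byte array, which is easier to test in isolation. The version below is an illustration and not the filter code: unlike the loop above, it also accepts three byte start codes (00 00 01), and the AnnexBSketch class name is hypothetical.

```csharp
using System;
using System.Collections.Generic;

static class AnnexBSketch
{
    // Splits an Annex B byte stream into NAL unit payloads with the
    // start codes removed. Accepts 00 00 01 and 00 00 00 01 start codes.
    public static List<byte[]> SplitNalUnits(byte[] data)
    {
        var units = new List<byte[]>();
        int start = -1;   // payload start of the NAL unit being collected
        int i = 0;
        while (i + 3 <= data.Length)
        {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1)
            {
                if (start >= 0)
                {
                    // In a four byte start code the extra leading zero
                    // belongs to the start code, not to the previous payload
                    int end = (i > start && data[i - 1] == 0) ? i - 1 : i;
                    units.Add(Slice(data, start, end));
                }
                start = i + 3;
                i += 3;
            }
            else
            {
                i++;
            }
        }
        if (start >= 0)
        {
            units.Add(Slice(data, start, data.Length));
        }
        return units;
    }

    // The NAL unit type is stored in the low five bits of the first byte
    public static int NalType(byte[] nal)
    {
        return nal[0] & 0x1f;
    }

    private static byte[] Slice(byte[] data, int from, int to)
    {
        byte[] result = new byte[to - from];
        Buffer.BlockCopy(data, from, result, 0, result.Length);
        return result;
    }
}
```

Filtering the returned list for types 7 (SPS) and 8 (PPS) gives the same parameter set list that the filter collects.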

Before setting the initialization data on the format, we should prepare it in the layout expected for extra data. In MPEG2VIDEO, each SPS or PPS NAL unit in the sequence header is preceded by two bytes that specify its size, high byte first, followed by the actual data.

// If we have SPS PPS

if (spspps.Count > 0)

{

// Allocate extra data buffer

pExtraData = Marshal.AllocCoTaskMem(100 + buffer.Size);

if (pExtraData != IntPtr.Zero)

{

IntPtr p = pExtraData;

// Fill up the buffer

for (int i = 0; i < spspps.Count; i++)

{

Marshal.WriteByte(p, 0, (byte)((spspps[i].Length >> 8) & 0xff));

Marshal.WriteByte(p, 1, (byte)(spspps[i].Length & 0xff));

p = (IntPtr)(p.ToInt64() + 2);

Marshal.Copy(spspps[i], 0, p, spspps[i].Length);

p = (IntPtr)(p.ToInt64() + spspps[i].Length);

}

// Amount of actual data

_extrasize = (int)(p.ToInt64() - pExtraData.ToInt64());

pMediaType.subType = MEDIASUBTYPE_AVC;

}

}
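The layout written by this loop is a sequence of records, each a two byte big-endian length followed by the NAL unit bytes. A minimal managed sketch of the same packing is shown below; the ExtraDataSketch class is a hypothetical name, and the size check is an addition that the filter code does not perform.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

static class ExtraDataSketch
{
    // Packs SPS and PPS NAL units as [length high][length low][payload]...
    public static byte[] BuildSequenceHeader(IEnumerable<byte[]> parameterSets)
    {
        using (var ms = new MemoryStream())
        {
            foreach (byte[] nal in parameterSets)
            {
                if (nal.Length > ushort.MaxValue)
                {
                    throw new ArgumentException("NAL unit does not fit a two byte length");
                }
                ms.WriteByte((byte)(nal.Length >> 8));    // high byte first
                ms.WriteByte((byte)(nal.Length & 0xff));  // then low byte
                ms.Write(nal, 0, nal.Length);
            }
            return ms.ToArray();
        }
    }
}
```

Growing a MemoryStream avoids guessing the allocation size up front, which the native code handles by reserving 100 extra bytes.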

In our case, the full initialization of the MPEG2VIDEO format looks as follows.

if (_extrasize > 0) {

_vih.BmiHeader.Compression = MAKEFOURCC('A', 'V', 'C', '1');

}

_mpegVI.dwProfile = (uint)_profile_idc;

_mpegVI.dwLevel = (uint)_level_idc;

_mpegVI.dwFlags = 4; // Size in bytes of the length prefix before each NALU

if (pExtraData != IntPtr.Zero && _extrasize > 0)

{

_mpegVI.cbSequenceHeader = (uint)_extrasize;

_mpegVI.dwSequenceHeader = new byte[_extrasize];

Marshal.Copy(pExtraData, _mpegVI.dwSequenceHeader, 0, _extrasize);

}

pMediaType.formatSize = Marshal.SizeOf(_mpegVI) + _extrasize;

pMediaType.formatPtr = Marshal.AllocCoTaskMem(pMediaType.formatSize);

Marshal.StructureToPtr(_mpegVI, pMediaType.formatPtr, false);

if (_mpegVI.dwSequenceHeader != null && _mpegVI.dwSequenceHeader.Length > 0)

{

int offset = Marshal.OffsetOf(_mpegVI.GetType(), "dwSequenceHeader").ToInt32();

Marshal.Copy(_mpegVI.dwSequenceHeader, 0,

new IntPtr(pMediaType.formatPtr.ToInt64() + offset),

_mpegVI.dwSequenceHeader.Length);

}

In addition, the subtype of that format is set to MEDIASUBTYPE_AVC. During media type negotiation, we check that value to determine in which form the data should be delivered.

// If we set output media type

if (_direction == PinDirection.Output)

{

hr = OpenEncoder();

// Check for the AVC output

m_bAVC = (mt.subType == MEDIASUBTYPE_AVC);

}

The data which we get from the encoder output is always in the bitstream format, that is, with start code prefixes. So we should handle the request for AVC output manually and replace the start codes with the data size if the output type is AVC. Conveniently, we set the length prefix size to four bytes in the media type, which equals the start code length, so the replacement can be done in place. In the output loop, when we initialize the output sample data, we check whether the downstream filter requires AVC input. If it does, we look for the next start code prefix and this way calculate the size of the previous NAL unit. We then overwrite the start code prefix of that unit with its size.

// Setup length information in case we output non annex b

if (m_bAVC)

{

IntPtr nalu = IntPtr.Zero;

int total = buffer.Size;

int offset = 0;

while (total > 3)

{

if (0x01000000 == Marshal.ReadInt32(p, offset))

{

if (nalu != IntPtr.Zero)

{

Marshal.WriteByte(nalu, 0, (byte)((offset >> 24) & 0xff));

Marshal.WriteByte(nalu, 1, (byte)((offset >> 16) & 0xff));

Marshal.WriteByte(nalu, 2, (byte)((offset >> 8) & 0xff));

Marshal.WriteByte(nalu, 3, (byte)((offset >> 0) & 0xff));

}

nalu = (IntPtr)(p.ToInt64() + offset);

total -= 4;

p = (IntPtr)(nalu.ToInt64() + 4);

offset = 0;

}

else

{

offset++;

total--;

}

}

if (nalu != IntPtr.Zero)

{

offset += total;

Marshal.WriteByte(nalu, 0, (byte)((offset >> 24) & 0xff));

Marshal.WriteByte(nalu, 1, (byte)((offset >> 16) & 0xff));

Marshal.WriteByte(nalu, 2, (byte)((offset >> 8) & 0xff));

Marshal.WriteByte(nalu, 3, (byte)((offset >> 0) & 0xff));

}

}
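The in-place conversion can also be written against a managed buffer. The sketch below assumes, like the filter code, that every NAL unit is introduced by a four byte start code, so the length prefix fits exactly into the space of the start code; the AvcFramingSketch class name is hypothetical.

```csharp
using System;

static class AvcFramingSketch
{
    // Overwrites each 00 00 00 01 start code in data[0..size) with the
    // big-endian length of the NAL unit that follows it.
    // Returns the number of NAL units found.
    public static int StartCodesToLengths(byte[] data, int size)
    {
        int prefix = -1;   // index of the start code of the unit being measured
        int count = 0;
        int i = 0;
        while (i + 4 <= size)
        {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 0 && data[i + 3] == 1)
            {
                if (prefix >= 0)
                {
                    // The previous unit ends where this start code begins
                    WriteLength(data, prefix, i - (prefix + 4));
                    count++;
                }
                prefix = i;
                i += 4;
            }
            else
            {
                i++;
            }
        }
        if (prefix >= 0)
        {
            // The last unit extends to the end of the buffer
            WriteLength(data, prefix, size - (prefix + 4));
            count++;
        }
        return count;
    }

    private static void WriteLength(byte[] data, int at, int length)
    {
        data[at] = (byte)((length >> 24) & 0xff);
        data[at + 1] = (byte)((length >> 16) & 0xff);
        data[at + 2] = (byte)((length >> 8) & 0xff);
        data[at + 3] = (byte)(length & 0xff);
    }
}
```

A stream that mixes in three byte start codes would need a copy into a new buffer instead, because the prefix would then be longer than the start code it replaces.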

Flushing

When a seek is performed on the file or the end of stream (EOS) is received, it is necessary to drain all outstanding data from the encoder and deliver it to the output.

In the encoder output thread, we wait for either the quit or the flush event. On the flush event, we call the Drain() method of the encoder component. After that, the encoder discards any input data until the Flush() method is called. Meanwhile, we request all pending buffers from the encoder and deliver them to the output pin. Once the output buffers are empty, we call the Flush() method, and the encoder becomes available for new input data.

// if flushing we can get EOF exit the loop in that case

if (result != AMF.AMF_RESULT.OK && flushing)

{

encoder.Flush();

break;

}

// If no flushing signals

if (!flushing)

{

// We are waiting for input or quit

int wait = WaitHandle.WaitAny(new WaitHandle[] { m_evInput, m_evFlush, m_evQuit });

if (0 != wait)

{

// If quit then exit the loop

if (m_evQuit.WaitOne(0))

{

break;

}

else

{

// signal to discard input samples

flushing = true;

encoder.Drain();

}

}

}

In the DirectShow filter, we receive the BeginFlush() method call when flushing starts. In the overridden method, we signal that flushing has started.

// Start draining samples from the encoder

public override int BeginFlush()

{

HRESULT hr = (HRESULT)base.BeginFlush();

if (hr.Failed) return hr;

// Signal that we are flushing

m_evFlush.Set();

return hr;

}

The EndFlush() method of the DirectShow base class is called when the flush operation has finished. In our implementation, we restart the output thread and reset the startup settings.

// End flushing

public override int EndFlush()

{

// Stop encoding thread

if (!m_Thread.Join(1000))

{

m_evQuit.Set();

}

// Reset startup settings

m_bFirstSample = true;

m_rtPosition = 0;

// Reset events

m_evFlush.Reset();

m_evQuit.Reset();

// Start encoding thread

m_Thread.Create();

return base.EndFlush();

}

Communication with the Application

To communicate with the application, we create the IH264Encoder interface, which can be requested from the filter. It is a regular .NET interface, but it is exported into the filter type library and can be accessed through COM because we apply the Guid and ComVisible attributes to the interface declaration.

[ComVisible(true)]

[System.Security.SuppressUnmanagedCodeSecurity]

[Guid("825AE8F7-F289-4A9D-8AE5-A7C97D518D8A")]

[InterfaceType(ComInterfaceType.InterfaceIsIUnknown)]

public interface IH264Encoder

{

[PreserveSig]

int get_Bitrate([Out] out int plValue);

[PreserveSig]

int put_Bitrate([In] int lValue);

[PreserveSig]

int get_RateControl([Out] out rate_control pValue);

[PreserveSig]

int put_RateControl([In] rate_control value);

[PreserveSig]

int get_MbEncoding([Out] out mb_encoding pValue);

[PreserveSig]

int put_MbEncoding([In] mb_encoding value);

[PreserveSig]

int get_Deblocking([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_Deblocking([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_GOP([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_GOP([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_AutoBitrate([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_AutoBitrate([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_Profile([Out] out profile_idc pValue);

[PreserveSig]

int put_Profile([In] profile_idc value);

[PreserveSig]

int get_Level([Out] out level_idc pValue);

[PreserveSig]

int put_Level([In] level_idc value);

[PreserveSig]

int get_Preset([Out] out quality_preset pValue);

[PreserveSig]

int put_Preset([In] quality_preset value);

[PreserveSig]

int get_SliceIntervals([Out] out int piIDR,[Out] out int piP);

[PreserveSig]

int put_SliceIntervals([In] ref int piIDR,[In] ref int piP);

}

Encoding settings can be configured through that interface. Most of the settings cannot be modified while the filter is in the active state. In that case, the method returns VFW_E_NOT_STOPPED.
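The state check described above can be sketched as follows. This is an illustration only and not the filter implementation: the EncoderSettingsSketch class, its IsActive flag and the default bitrate are assumptions, while the HRESULT values are the standard ones for E_INVALIDARG and VFW_E_NOT_STOPPED.

```csharp
using System;

class EncoderSettingsSketch
{
    public const int S_OK = 0;
    public const int E_INVALIDARG = unchecked((int)0x80070057);
    public const int VFW_E_NOT_STOPPED = unchecked((int)0x80040224);

    private readonly object m_lock = new object();
    private int m_bitrate = 5000000;   // hypothetical default value

    // Hypothetical flag; a real filter would query its own state instead
    public bool IsActive { get; set; }

    public int get_Bitrate(out int value)
    {
        lock (m_lock)
        {
            value = m_bitrate;
            return S_OK;
        }
    }

    public int put_Bitrate(int value)
    {
        lock (m_lock)
        {
            // Settings may only change while the filter is stopped
            if (IsActive) return VFW_E_NOT_STOPPED;
            if (value <= 0) return E_INVALIDARG;
            m_bitrate = value;
            return S_OK;
        }
    }
}
```

Returning an HRESULT instead of throwing matches the PreserveSig attribute on the interface methods, so a native caller sees the error code directly.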

If the output pin of the filter is connected and the connection type is AVC, the output pin is reconnected when the properties change, because for AVC the encoder configuration is specified in the media type.

The settings which can be configured with the interface are the basic encoder settings. To see how they are applied to the encoder component, look at the ConfigureEncoder() method, which was discussed earlier. You can prepare your own configuration and set any properties, even if they are not specified here. For that, follow the documentation of the corresponding component in the AMF SDK.

The filter supports neither the IPersistStream nor the IPersistPropertyBag interface.

Filter Property Page

The encoder settings can be configured with the property page dialog which handles settings over the exposed IH264Encoder interface.

On the property page, it is possible to configure the encoder with the basic settings. The settings are reset for each filter instance, but you can easily add functionality to load and save them to the system registry.

Filter Overview

Filter has good encoding performance.

The DirectShow filter is registered in the Video Compressors category.

The created DirectShow filter supports H264 video encoding on AMD graphics cards with the AMF SDK. The supported input formats are NV12, YV12, IYUV, UYVY, YUY2, YUYV, RGB32 and ARGB. For planar input types (NV12, YV12, IYUV), the width is required to have 16 bits alignment. YV12 is handled as IYUV with the U and V planes swapped, which is performed on the CPU. For the RGB types, a vertical flip is supported, which is also performed on the CPU.

The filter uses the DirectX11 platform for the context initialization. To create a context for any other platform, you should look at the AMF SDK documentation.

The filter has three output media types and can provide data in both the bitstream (Annex B) and AVC formats.

The encoded video can be saved into a file even with the AVI Mux filter. The playback results are also good.

The binaries should be registered as a COM type library with the RegAsm tool, which is located in “WINDIR\Microsoft.NET\Framework\XXXX”, where XXXX is the platform version. If you build the binary from the sources, the COM interop registration happens automatically, as it is enabled in the project settings.

History

- 24th January, 2024: Initial version