Creating Custom Web Crawler with Dotnet Core using Entity Framework Core and C#


Many web scraping and web crawling frameworks exist on other platforms, but in the .NET ecosystem it is hard to find a tool that accommodates your custom requirements.

- You can find the GitHub repository here: DotnetCrawler

Introduction

In this article, we will implement a custom web crawler and use it on the eBay e-commerce site: it scrapes eBay's iPhone pages and inserts the records into a SQL Server database using Entity Framework Core. The example database schema comes from the Microsoft eShopOnWeb application, and we will insert the eBay records into its Catalog table.

Background

Most web scraping and web crawling frameworks target other platforms. In the .NET ecosystem, it is hard to find a tool that accommodates custom requirements.

Before starting to develop a new crawler, I evaluated the tools listed below, both written in C#:

- Abot is a good crawler, but it offers no free support if you need to implement something custom, and its documentation is insufficient.

- DotnetSpider has a really good design; its architecture is the same as that of widely used crawlers such as Scrapy and WebMagic. But the documentation is in Chinese, so even after translating it to English with Google Translate, it was hard to learn how to implement custom scenarios. Also, I wanted to insert the crawler output into a SQL Server database, but this was not working properly. I opened an issue on GitHub, but no one has responded yet.

With limited time, I had no other way to solve my problem, and I did not want to spend more time investigating other crawler infrastructures. I decided to write my own tool.

Basics of Crawler

Searching through a lot of repositories gave me the idea to create a new one. The main modules of a crawler architecture are almost the same in all of them, and together they form the big picture of a crawler's life cycle, which you can see below:

This figure shows the main modules that should be included in a common crawler project. Therefore, I have added each of these modules as a separate project under the Visual Studio solution.

Basic explanations of these modules are listed below:

- Downloader: responsible for downloading the given URL into a local folder or temp location and returning HtmlNode objects to the processor.
- Processors: responsible for processing the given HTML nodes, finding and extracting the intended nodes, and loading entities with the processed data. The result is then passed on to the pipeline.
- Pipelines: responsible for exporting entities to the different databases used by the application.
- Scheduler: responsible for scheduling crawler commands in order to provide polite crawl operations.
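To make the flow between these modules concrete, here is a minimal sketch of how they could be wired together. Every name in it (IDownloader, IProcessor, IPipeline, IScheduler, MiniCrawler and the in-memory stand-ins) is hypothetical and chosen for illustration only; these are not the DotnetCrawler interfaces, which are introduced later in the article.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical module contracts (illustrative names, not the library's API).
public interface IDownloader { Task<string> DownloadAsync(string url); }
public interface IProcessor<T> { T Process(string html); }
public interface IPipeline<T> { Task SaveAsync(T entity); }
public interface IScheduler { Task WaitForTurnAsync(); }

// Runs each URL through scheduler -> downloader -> processor -> pipeline.
public sealed class MiniCrawler<T>
{
    private readonly IDownloader _downloader;
    private readonly IProcessor<T> _processor;
    private readonly IPipeline<T> _pipeline;
    private readonly IScheduler _scheduler;

    public MiniCrawler(IDownloader downloader, IProcessor<T> processor,
                       IPipeline<T> pipeline, IScheduler scheduler)
    {
        _downloader = downloader;
        _processor = processor;
        _pipeline = pipeline;
        _scheduler = scheduler;
    }

    public async Task CrawlAsync(IEnumerable<string> urls)
    {
        foreach (var url in urls)
        {
            await _scheduler.WaitForTurnAsync();              // politeness gate
            var html = await _downloader.DownloadAsync(url);  // fetch the page
            var entity = _processor.Process(html);            // extract data
            await _pipeline.SaveAsync(entity);                // persist result
        }
    }
}

// In-memory stand-ins so the sketch runs without network or database access.
public sealed class InMemoryDownloader : IDownloader
{
    public Task<string> DownloadAsync(string url) =>
        Task.FromResult("<h1>" + url + "</h1>");
}

public sealed class LengthProcessor : IProcessor<int>
{
    // Stand-in "extraction": just measures the page length.
    public int Process(string html) => html.Length;
}

public sealed class ListPipeline<T> : IPipeline<T>
{
    public List<T> Saved { get; } = new List<T>();

    public Task SaveAsync(T entity)
    {
        Saved.Add(entity);
        return Task.CompletedTask;
    }
}

public sealed class NoDelayScheduler : IScheduler
{
    public Task WaitForTurnAsync() => Task.CompletedTask;
}
```

Because each module sits behind its own small contract, any one of them can be swapped (for example, a database-backed pipeline in place of ListPipeline) without touching the crawl loop.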

Step by Step Developing DotnetCrawler

Given the modules above, how would we want to use the crawler classes? Let's imagine the usage first and then implement it together.

    static async Task MainAsync(string[] args)
    {
        var crawler = new DotnetCrawler<Catalog>()
            .AddRequest(new DotnetCrawlerRequest
            {
                Url = "https://www.ebay.com/b/Apple-iPhone/9355/bn_319682",
                Regex = @".*itm/.+",
                TimeOut = 5000
            })
            .AddDownloader(new DotnetCrawlerDownloader
            {
                DownloderType = DotnetCrawlerDownloaderType.FromMemory,
                DownloadPath = @"C:\DotnetCrawlercrawler\"
            })
            .AddProcessor(new DotnetCrawlerProcessor<Catalog> { })
            .AddPipeline(new DotnetCrawlerPipeline<Catalog> { });

        await crawler.Crawle();
    }

As you can see, the DotnetCrawler<TEntity> class has a generic entity type, which is used as a DTO and saved to the database. Catalog is the generic type argument here; it is generated by the EF Core scaffolding command in the DotnetCrawler.Data project. We will see this later.

This DotnetCrawler object is configured with the Builder design pattern in order to load its configuration. This technique is also known as a fluent interface.

DotnetCrawler is configured with the below methods:

- AddRequest: sets the main URL of the crawl target. We can also define a filter for the target URLs in order to focus on the intended parts.
- AddDownloader: sets the downloader type. If the download type is "FromFile", meaning the page is downloaded to a local folder, the path of the download folder is also required. The other options are "FromMemory" and "FromWeb"; both download the target URL but do not save it.
- AddProcessor: loads the default processor, which parses the HTML page and locates the specified HTML tags. Thanks to the extensible design, you can create your own processor.
- AddPipeline: loads the default pipeline, which saves the entity to the database. The current pipeline connects to SQL Server using Entity Framework Core. Thanks to the extensible design, you can create your own pipeline.

All of this configuration is stored in the main class, DotnetCrawler.cs.

    public class DotnetCrawler<TEntity> : IDotnetCrawler where TEntity : class, IEntity
    {
        public IDotnetCrawlerRequest Request { get; private set; }
        public IDotnetCrawlerDownloader Downloader { get; private set; }
        public IDotnetCrawlerProcessor<TEntity> Processor { get; private set; }
        public IDotnetCrawlerScheduler Scheduler { get; private set; }
        public IDotnetCrawlerPipeline<TEntity> Pipeline { get; private set; }

        public DotnetCrawler()
        {
        }

        public DotnetCrawler<TEntity> AddRequest(IDotnetCrawlerRequest request)
        {
            Request = request;
            return this;
        }

        public DotnetCrawler<TEntity> AddDownloader(IDotnetCrawlerDownloader downloader)
        {
            Downloader = downloader;
            return this;
        }

        public DotnetCrawler<TEntity> AddProcessor(IDotnetCrawlerProcessor<TEntity> processor)
        {
            Processor = processor;
            return this;
        }

        public DotnetCrawler<TEntity> AddScheduler(IDotnetCrawlerScheduler scheduler)
        {
            Scheduler = scheduler;
            return this;
        }

        public DotnetCrawler<TEntity> AddPipeline(IDotnetCrawlerPipeline<TEntity> pipeline)
        {
            Pipeline = pipeline;
            return this;
        }
    }

After the necessary configuration is done, the crawler.Crawle() method is invoked asynchronously. This method completes its work by passing through the modules in turn.

Example of eShopOnWeb Microsoft Project Usage

This library also includes a sample project named DotnetCrawler.Sample. This sample project uses the Microsoft eShopOnWeb repository, which you can find here. eShopOnWeb is an example e-commerce project, and it has a "Catalog" table when you generate the database with the EF Core code-first approach. So before using the crawler, you should download and run this project with a real database. To perform this action, please refer to this information. (If you already have an existing database, you can continue with your own.)

We passed the "Catalog" entity as the generic type argument of the DotnetCrawler class:

var crawler = new DotnetCrawler<Catalog>()

Catalog is generated by the EF Core scaffolding command in the DotnetCrawler.Data project. Before running this command, the EF Core NuGet packages below must be installed in the DotnetCrawler.Data project.

Packages required to run EF commands

Now that the packages are ready, we can run the EF command in the Package Manager Console with the DotnetCrawler.Data project selected.

Scaffold-DbContext "Server=(localdb)\mssqllocaldb;Database=Microsoft.eShopOnWeb.CatalogDb; Trusted_Connection=True;" Microsoft.EntityFrameworkCore.SqlServer -OutputDir Models

This command creates a Models folder in the DotnetCrawler.Data project. The folder contains all the entities and the context object generated from Microsoft's eShopOnWeb example.

After that, you need to decorate your entity class with the custom crawler attributes so that the crawler knows how to load the entity fields from the eBay iPhone pages:

    [DotnetCrawlerEntity(XPath = "//*[@id='LeftSummaryPanel']/div[1]")]
    public partial class Catalog : IEntity
    {
        public int Id { get; set; }

        [DotnetCrawlerField(Expression = "1", SelectorType = SelectorType.FixedValue)]
        public int CatalogBrandId { get; set; }

        [DotnetCrawlerField(Expression = "1", SelectorType = SelectorType.FixedValue)]
        public int CatalogTypeId { get; set; }

        public string Description { get; set; }

        [DotnetCrawlerField(Expression = "//*[@id='itemTitle']/text()", SelectorType = SelectorType.XPath)]
        public string Name { get; set; }

        public string PictureUri { get; set; }
        public decimal Price { get; set; }
        public virtual CatalogBrand CatalogBrand { get; set; }
        public virtual CatalogType CatalogType { get; set; }
    }

With this code, the crawler requests the given URL and tries to locate the attributed fields using the XPath expressions defined for the target page.

After these definitions, we are finally able to run the crawler.Crawle() method asynchronously. It performs the following operations in order:

- It visits the URL in the given request object and finds the links on that page. If the Regex property is set, it filters the links accordingly.

- It downloads the pages at these URLs, using the configured download method.

- The downloaded web pages are processed to produce the desired data.

- Finally, the data is saved to the database with EF Core.

    public async Task Crawle()
    {
        var linkReader = new DotnetCrawlerPageLinkReader(Request);
        var links = await linkReader.GetLinks(Request.Url, 0);

        foreach (var url in links)
        {
            var document = await Downloader.Download(url);
            var entity = await Processor.Process(document);
            await Pipeline.Run(entity);
        }
    }
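The link-filtering step can be looked at in isolation. The following is a minimal sketch under stated assumptions, not the library's code: LinkFilter and Filter are hypothetical names, and the sample URLs in the usage note are made up. Only the pattern `.*itm/.+` comes from the request configured earlier.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public static class LinkFilter
{
    // Drops empty and duplicate hrefs, then keeps only those matching the
    // pattern. An empty pattern means "no filtering".
    public static IReadOnlyList<string> Filter(IEnumerable<string> hrefs, string pattern)
    {
        var candidates = hrefs
            .Where(href => !string.IsNullOrEmpty(href))
            .Distinct();

        if (string.IsNullOrWhiteSpace(pattern))
            return candidates.ToList();

        var regex = new Regex(pattern);
        return candidates.Where(href => regex.IsMatch(href)).ToList();
    }
}
```

With the pattern `.*itm/.+`, a link such as `https://www.ebay.com/itm/123` is kept, while `https://www.ebay.com/help` and a bare `https://www.ebay.com/itm/` are dropped, because the pattern requires at least one character after `itm/`.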

Project Structure of Visual Studio Solution

You can start by creating a new Blank Solution in Visual Studio. After that, add .NET Core Class Library projects as in the image below:

Only the sample project is a .NET Core Console Application. I will explain the projects in this solution one by one.

DotnetCrawler.Core

This project contains the main classes of the crawler. It has a single interface, which exposes the Crawle method, and the implementation of that interface. You can create your own custom crawler in this project.

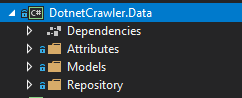

DotnetCrawler.Data

This project includes the Attributes, Models and Repository folders. Let's examine each of them.

- Models folder: should contain the entity classes generated by Entity Framework Core. Put your database table entities in this folder, along with the Entity Framework Core context object. Currently this folder holds Microsoft's eShopOnWeb example database.

- Attributes folder: contains the crawler attributes that carry the XPath information for the crawled web pages. There are two classes: DotnetCrawlerEntityAttribute.cs for the entity attribute and DotnetCrawlerFieldAttribute.cs for the property attribute. These attributes are applied to the EF Core entity classes. You can see an example usage in the code block below:

        [DotnetCrawlerEntity(XPath = "//*[@id='LeftSummaryPanel']/div[1]")]
        public partial class Catalog : IEntity
        {
            public int Id { get; set; }

            [DotnetCrawlerField(Expression = "//*[@id='itemTitle']/text()", SelectorType = SelectorType.XPath)]
            public string Name { get; set; }
        }

  The first XPath locates the HTML node from which crawling starts. The second one retrieves the actual data within that node. In this example, it retrieves iPhone names from eBay.

- Repository folder: contains a Repository design pattern implementation over the EF Core entities and database context. I based the repository pattern on this resource. In order to use the repository pattern, all EF Core entities have to implement the IEntity interface. You can see in the code above that the Catalog class implements IEntity. So the crawler's generic type must implement IEntity.

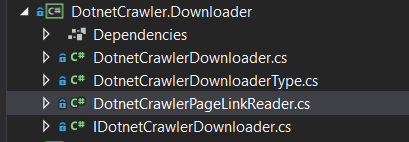

DotnetCrawler.Downloader

This project contains the download logic of the crawler. Different download methods are applied according to the downloader's DownloderType. You can also develop your own custom downloader here to meet your requirements. To provide these download functions, this project references the HtmlAgilityPack and HtmlAgilityPack.CssSelector.NetCore packages.

The core of the download logic is the DownloadInternal() method in DotnetCrawlerDownloader.cs:

    private async Task<HtmlDocument> DownloadInternal(string crawlUrl)
    {
        switch (DownloderType)
        {
            case DotnetCrawlerDownloaderType.FromFile:
                using (WebClient client = new WebClient())
                {
                    await client.DownloadFileTaskAsync(crawlUrl, _localFilePath);
                }
                return GetExistingFile(_localFilePath);

            case DotnetCrawlerDownloaderType.FromMemory:
                var htmlDocument = new HtmlDocument();
                using (WebClient client = new WebClient())
                {
                    string htmlCode = await client.DownloadStringTaskAsync(crawlUrl);
                    htmlDocument.LoadHtml(htmlCode);
                }
                return htmlDocument;

            case DotnetCrawlerDownloaderType.FromWeb:
                HtmlWeb web = new HtmlWeb();
                return await web.LoadFromWebAsync(crawlUrl);
        }

        throw new InvalidOperationException("Can not load html file from given source.");
    }

This method downloads the target URL according to the downloader type: it downloads to a local file, downloads into memory, or reads directly from the web without saving.

Another core function of a crawler is its page visit algorithm. In this project, the DotnetCrawlerPageLinkReader.cs class implements the page visit algorithm with recursive methods. You can control it by passing a depth parameter. I used this resource to solve this problem.

    public class DotnetCrawlerPageLinkReader
    {
        private readonly IDotnetCrawlerRequest _request;
        private readonly Regex _regex;

        public DotnetCrawlerPageLinkReader(IDotnetCrawlerRequest request)
        {
            _request = request;
            if (!string.IsNullOrWhiteSpace(request.Regex))
            {
                _regex = new Regex(request.Regex);
            }
        }

        // Returns the links found on the page; level > 0 follows them recursively.
        public async Task<IEnumerable<string>> GetLinks(string url, int level = 0)
        {
            if (level < 0)
                throw new ArgumentOutOfRangeException(nameof(level));

            var rootUrls = await GetPageLinks(url, false);

            if (level == 0)
                return rootUrls;

            var links = await GetAllPagesLinks(rootUrls);

            --level;
            var tasks = await Task.WhenAll(links.Select(link => GetLinks(link, level)));
            return tasks.SelectMany(l => l);
        }

        // Note: needMatch is not consulted here; the regex filter is applied
        // whenever a pattern was configured on the request.
        private async Task<IEnumerable<string>> GetPageLinks(string url, bool needMatch = true)
        {
            try
            {
                HtmlWeb web = new HtmlWeb();
                var htmlDocument = await web.LoadFromWebAsync(url);

                var linkList = htmlDocument.DocumentNode
                    .Descendants("a")
                    .Select(a => a.GetAttributeValue("href", null))
                    .Where(u => !string.IsNullOrEmpty(u))
                    .Distinct();

                if (_regex != null)
                    linkList = linkList.Where(x => _regex.IsMatch(x));

                return linkList;
            }
            catch (Exception)
            {
                // A page that fails to load contributes no links.
                return Enumerable.Empty<string>();
            }
        }

        private async Task<IEnumerable<string>> GetAllPagesLinks(IEnumerable<string> rootUrls)
        {
            var result = await Task.WhenAll(rootUrls.Select(url => GetPageLinks(url)));
            return result.SelectMany(x => x).Distinct();
        }
    }
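One detail worth keeping in mind with a link reader like this is that `href` values are not always absolute URLs; pages often contain relative paths or non-HTTP links such as `mailto:`. The sketch below shows one possible way to normalize such values before downloading. It is an illustration only: LinkResolver and ToAbsolute are hypothetical names and are not part of DotnetCrawler.

```csharp
using System;

public static class LinkResolver
{
    // Resolves href against the page it was found on. Returns an absolute
    // http(s) URL, or null when the link cannot be resolved or uses another scheme.
    public static string ToAbsolute(string pageUrl, string href)
    {
        if (string.IsNullOrWhiteSpace(href))
            return null;

        var baseUri = new Uri(pageUrl, UriKind.Absolute);
        if (!Uri.TryCreate(baseUri, href, out var resolved))
            return null;

        // Skip mailto:, javascript: and other non-web schemes.
        if (resolved.Scheme != Uri.UriSchemeHttp && resolved.Scheme != Uri.UriSchemeHttps)
            return null;

        return resolved.AbsoluteUri;
    }
}
```

For example, resolving `/itm/123` against `https://www.ebay.com/b/Apple-iPhone/9355/bn_319682` yields `https://www.ebay.com/itm/123`, while a `mailto:` link yields null and can be skipped.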

DotnetCrawler.Processor

This project converts the downloaded web data into an EF Core entity. It does so using reflection to get or set the members of generic types. The DotnetCrawlerProcessor.cs class implements the crawler's current processor. You can also develop your own custom processor here to meet your requirements.

    public class DotnetCrawlerProcessor<TEntity> : IDotnetCrawlerProcessor<TEntity>
        where TEntity : class, IEntity
    {
        public async Task<IEnumerable<TEntity>> Process(HtmlDocument document)
        {
            var nameValueDictionary = GetColumnNameValuePairsFromHtml(document);

            var processorEntity = ReflectionHelper.CreateNewEntity<TEntity>();
            foreach (var pair in nameValueDictionary)
            {
                ReflectionHelper.TrySetProperty(processorEntity, pair.Key, pair.Value);
            }

            return new List<TEntity>
            {
                processorEntity as TEntity
            };
        }

        private static Dictionary<string, object> GetColumnNameValuePairsFromHtml(HtmlDocument document)
        {
            var columnNameValueDictionary = new Dictionary<string, object>();

            var entityExpression = ReflectionHelper.GetEntityExpression<TEntity>();
            var propertyExpressions = ReflectionHelper.GetPropertyAttributes<TEntity>();

            var entityNode = document.DocumentNode.SelectSingleNode(entityExpression);

            foreach (var expression in propertyExpressions)
            {
                var columnName = expression.Key;
                object columnValue = null;
                var fieldExpression = expression.Value.Item2;

                switch (expression.Value.Item1)
                {
                    case SelectorType.XPath:
                        var node = entityNode.SelectSingleNode(fieldExpression);
                        if (node != null)
                            columnValue = node.InnerText;
                        break;

                    case SelectorType.CssSelector:
                        var nodeCss = entityNode.QuerySelector(fieldExpression);
                        if (nodeCss != null)
                            columnValue = nodeCss.InnerText;
                        break;

                    case SelectorType.FixedValue:
                        if (Int32.TryParse(fieldExpression, out var result))
                        {
                            columnValue = result;
                        }
                        break;

                    default:
                        break;
                }

                columnNameValueDictionary.Add(columnName, columnValue);
            }

            return columnNameValueDictionary;
        }
    }
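The ReflectionHelper methods used above are not listed in this article. As a rough idea of how attribute-driven mapping via reflection can work, here is a minimal sketch. It is not the library's implementation: FieldSelectorAttribute, SampleItem, ReflectionSketch and their members are hypothetical names invented for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Reflection;

// Hypothetical attribute, loosely modelled on the idea behind DotnetCrawlerField.
[AttributeUsage(AttributeTargets.Property)]
public sealed class FieldSelectorAttribute : Attribute
{
    public string Expression { get; set; }
}

// Hypothetical entity used only for this sketch.
public sealed class SampleItem
{
    [FieldSelector(Expression = "//h1")]
    public string Name { get; set; }

    [FieldSelector(Expression = "//span[@class='price']")]
    public decimal Price { get; set; }

    public string Untracked { get; set; }
}

public static class ReflectionSketch
{
    // Maps property name -> selector expression for every annotated property.
    public static Dictionary<string, string> GetSelectors<T>()
    {
        var result = new Dictionary<string, string>();
        foreach (var property in typeof(T).GetProperties())
        {
            var attribute = property.GetCustomAttribute<FieldSelectorAttribute>();
            if (attribute != null)
                result[property.Name] = attribute.Expression;
        }
        return result;
    }

    // Converts the raw value to the property's type and assigns it.
    // Returns false if the property is missing or the value cannot be converted.
    public static bool TrySetProperty(object target, string name, object value)
    {
        var property = target.GetType().GetProperty(name);
        if (property == null || !property.CanWrite || value == null)
            return false;

        try
        {
            var converted = Convert.ChangeType(
                value, property.PropertyType, CultureInfo.InvariantCulture);
            property.SetValue(target, converted);
            return true;
        }
        catch (Exception ex) when (ex is FormatException
                                   || ex is InvalidCastException
                                   || ex is OverflowException)
        {
            return false;
        }
    }
}
```

The conversion step matters because scraped values arrive as text, while entity properties such as Price are typed; a value that cannot be converted is reported as a failure instead of throwing.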

DotnetCrawler.Pipeline

This project inserts the entity objects produced by the processor module into the database, using EF Core as the object-relational mapping framework. The DotnetCrawlerPipeline.cs class implements the crawler's current pipeline. You can also develop your own custom pipeline here to meet your requirements (for example, persistence to different database types).

    public class DotnetCrawlerPipeline<TEntity> : IDotnetCrawlerPipeline<TEntity>
        where TEntity : class, IEntity
    {
        private readonly IGenericRepository<TEntity> _repository;

        public DotnetCrawlerPipeline()
        {
            _repository = new GenericRepository<TEntity>();
        }

        public async Task Run(IEnumerable<TEntity> entityList)
        {
            foreach (var entity in entityList)
            {
                await _repository.CreateAsync(entity);
            }
        }
    }

DotnetCrawler.Scheduler

This project schedules jobs for the crawler's crawl actions. Unlike the other modules, it has no default implementation, so you can develop your own custom scheduler here to meet your requirements. You can use Quartz or Hangfire for background jobs.
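As a starting point for a polite crawl, a scheduler can simply enforce a minimum delay between consecutive requests. The sketch below is one possible approach under that assumption; FixedDelayScheduler and WaitForTurnAsync are hypothetical names, and the class does not implement the library's IDotnetCrawlerScheduler interface, whose members are not shown in this article.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical scheduler: callers await WaitForTurnAsync() before each request,
// and consecutive calls are spaced at least minInterval apart.
public sealed class FixedDelayScheduler
{
    private readonly TimeSpan _minInterval;
    private readonly SemaphoreSlim _gate = new SemaphoreSlim(1, 1);
    private DateTime _nextAllowedUtc = DateTime.MinValue;

    public FixedDelayScheduler(TimeSpan minInterval)
    {
        _minInterval = minInterval;
    }

    public async Task WaitForTurnAsync()
    {
        // Serialize callers so that concurrent crawls share one rate limit.
        await _gate.WaitAsync();
        try
        {
            var wait = _nextAllowedUtc - DateTime.UtcNow;
            if (wait > TimeSpan.Zero)
                await Task.Delay(wait);

            _nextAllowedUtc = DateTime.UtcNow + _minInterval;
        }
        finally
        {
            _gate.Release();
        }
    }
}
```

In a crawl loop, awaiting `WaitForTurnAsync()` before each download would space the requests out; the first call returns immediately, and later calls wait only as long as needed.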

DotnetCrawler.Sample

This project demonstrates inserting new iPhones from the eBay e-commerce site into the Catalog table using DotnetCrawler. Set DotnetCrawler.Sample as the startup project and debug the modules explained above.

Conclusion

This library is designed like other strong crawler libraries such as WebMagic and Scrapy, but it is built on an architecture that focuses on ease of extension and scaling by applying best practices such as Domain-Driven Design and object-oriented principles. Thus, you can easily implement your custom requirements and use the default features of this straightforward, lightweight web crawling and scraping library for Entity Framework Core output on .NET Core.

- GitHub: Source code


History

- 24.02.2019 - Initial publication