Data-Driven Web Service Testing with Assertive Validation


A solution pattern for automating the testing of Web Service-based components.

Introduction

Many organisations today, especially those in business domains where customers have mobile devices, build their IT landscape around Web Service (WS) components. This keeps the parts of the overall business solution loosely coupled, with the resulting benefit that they can, to a large extent, be updated and deployed separately.

Background

The inspiration for this article arose from the author's consultancy work with a global business that was developing a strategic platform involving WS-based components, and that therefore had a deep interest in asserting the correct operational behaviour of these components. The solution presented here builds on this experience and is generic in the sense that it can be used for any XML/SOAP-based service. It also treats response validation as a key aspect, asserting the outcome field by field while retaining accessibility for the user. In designing this solution, the key concepts in mind were:

- Scalable

- Accessible

- Assertive

- Maintainable

Overview

The platform for automating Web Service testing presented here is based on SoapUI. This tool is widely used, not only by developer crews but also by architects and tech-savvy business users. To set the scene, the proposed automated data-driven platform can be visualised as shown below:

We see here a SoapUI process, containing Groovy scripts and a specialist Java object, reading data from a Data Driving spreadsheet (on the left) and, for each service under test, a pair of files containing Request (RQ) and Response (RS) exemplars. These files are as they appear in the SoapUI interface, except that in the RQ all the field values are replaced with a special notation. Executing a Test Case within SoapUI causes the Test Case-specific sheet of the Data Driving spreadsheet to be read and the tests defined there to be executed in sequence. In the spreadsheet, each test is defined by request field definitions together with the corresponding response field definitions.

The Detail

On many projects involving WS-based architecture, the use of SoapUI (www.soapui.com) is routine, and this usage often involves business as well as technical actors. However, in many organisations, particularly those averse to purchasing licences for external tooling, the use of products such as SoapUI is limited to the "free" version, which has somewhat reduced capabilities. In the particular case of SoapUI, the free version lacks data-driven testing. The approach here provides a solid fix for this, whilst retaining the use of the free product. When assuring the quality of Web Services, over and above the wish to data-drive our tests, we have to deal with the following challenges:

- Building an appropriate request, setting its content fields as required by the specifics of the test being executed

- Asserting, at a field level, that we get the exact response required for our test

- Specifying the request/response information in a way that achieves a scalable, flexible and maintainable solution. In particular, we would prefer not to involve ourselves in developing long and complex scripts

The Design

To achieve the foregoing objectives, the architectural proposal made here consists of two main parts:

- A set of SoapUI Groovy scripts at the Test Case level. These specify some basic workflow associated with test execution

- A Java object with classes and associated methods that are invoked by the scripts and do the "heavy-lifting" operations needed

One key benefit of this split design is that it keeps the scripting side light in terms of logic, which improves maintainability. Developing and maintaining large, complex scripts would be a practical nightmare.

Example Overview

To explore the proposed design, I show here a real-world example using a publicly available Web Service from FlightStats (www.FlightStats.com), specifically their Airport service. Given the author's background with schemas and messages in the travel domain (Open Travel, IATA NDC/EDIST etc.), a service from this domain was a natural choice. To use this service, you need to create a developer account with FlightStats. Once this is done, use the published WSDL file URIs for the Airport services to create a Project in SoapUI (SoapUI: File > Create Project, enter the Project name, enter the WSDL URI, select "create TestSuite"). Of course, you could take a service from another provider, as nothing in the solution precludes this, but all the details that follow are based on FlightStats Airport. Below is shown a view of the Project at this initial stage:

For the purpose of describing the details of the approach, it is recommended that you take the SoapUI project from the download (FlightStatsAirport-soapui-project.xml).

Project Configuration

The platform we propose here requires the use of properties specified at the Project level. These Custom Properties are assigned as set out in the following table:

| Level | Name | Value |
|---|---|---|
| Project | FileRootPath (*) | C:\Users\Public\Documents |
| Project | ServiceEndpoint (*) | Api.flightstats.com:443/flex/flightstatus/soap/v2/airportService |

The setting of these properties in SoapUI is shown in the figure below:

The Scripts

As noted above, Groovy scripting is one of the key pieces of the test automation design. The scripts are defined at the TestSuite/TestCase level, as individual Scripting TestSteps. The figure below shows the setup:

The TestSteps within the TestCase Status_arr consist of four Groovy script elements and one SOAP Request element. The script elements are summarised below (note: the names of the script TestSteps are important):

| ScriptName | Comment |
|---|---|
| TestSetup | This script instantiates the Java object (Hub) which supports the test automation |
| ConstructRQ | This script invokes methods on the Hub to construct an appropriate Web Service RQ |
| ValidateRS | This script invokes methods on the Hub to validate the Web Service RS returned from the service |
| TestFinalise | This script invokes methods on the Hub to determine if more tests need to be performed |

The main design goal of these scripts is to separate the various concerns cleanly. For the purpose of this presentation we shall look at the scripts for TestCase "Status_arr". However, the scripting solution we detail here works for any TestCase; nothing within the scripts themselves is specialised for testing this specific service. As can be seen above, there is a TestStep which calls a SOAP service element and has the specialised name "SOAPRequest_Status_arr". Let’s look at each of the scripts in detail.

Script - TestSetup

Double-clicking the script "TestSetup" opens the scripting window. The script starts by importing the types available in the MT.SoapUI.DataDrivenTesting JAR:

It also gets the Custom properties that must be set at the SoapUI project level (see above). The hub object is then configured and initialised:

This hub object operates as a first-class object and has a range of methods that can be called to do the heavy lifting throughout the testing workflow. Once initialised, the object is saved in the SoapUI context object so that later scripts can retrieve it and use the methods it provides. To set up a test case, we now call a sequence of hub methods, as shown below:

If we were testing a service that provided a ping operation, it would be useful to establish that the service was in fact available for testing by doing a "ping". In the present case we don’t have that possibility, so the call at line 83 simply returns True, given the value of the project property serviceHasPing. The method call at line 96 checks that the paths and files needed to proceed with testing do in fact exist. If they do, the hub method responsible for the exemplar files (both request and response) processes them into appropriate data within the hub. If this processing is successful, the external driving data is imported via the call at line 104. At this point all the required data resides in the hub object.
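The path-and-file check performed during setup is easy to picture with java.nio.file. The following is a minimal sketch under stated assumptions, not the hub's actual code: the class SetupCheck and its method missingPaths are hypothetical names, and the sketch simply reports which expected paths are absent so that the TestCase can stop before any request is built.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper illustrating the setup-time check; not the hub's real API.
final class SetupCheck {

    private SetupCheck() {}

    // Returns the expected paths that do not exist; an empty list means setup may continue.
    static List<Path> missingPaths(Path exemplarDir, List<String> requiredFiles) {
        List<Path> missing = new ArrayList<>();
        if (!Files.isDirectory(exemplarDir)) {
            missing.add(exemplarDir);
            return missing; // no point checking files if the folder itself is absent
        }
        for (String name : requiredFiles) {
            Path file = exemplarDir.resolve(name);
            if (!Files.isRegularFile(file)) {
                missing.add(file);
            }
        }
        return missing;
    }
}
```

Returning the list of missing paths, rather than a bare boolean, lets the setup script log exactly what needs to be created before the next run.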

Script - ConstructRQ

Once the basic test setup has been completed successfully, processing moves to the next task, the one handled by the script ConstructRQ. Here the focus is on constructing a request string that matches the specific data requirements of a test case, as defined in the external spreadsheet.

In the setup phase the hub was initialised with a test case ordinal value and, as we shall see, it owns both the total number of tests to perform, as defined by the external spreadsheet data, and the "current" test case "number". Thus, at lines 26, 27 and 32 we call hub methods to retrieve the test case name and description and to have an appropriate request text built, without having to refer to the "current" test case "number". Here we see a simple but important example of how we devolve tasks to the hub object while keeping the workflow in the scripts – a useful separation of concerns. At line 32, we retrieve the service request body, fully and correctly formed with the necessary field data, as a string and save it in the SoapUI context object at line 38.
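Building the request text essentially means filling the ${...} placeholders of the RQ exemplar (described in the section "The Exemplars") with values from the current spreadsheet row. The sketch below shows one way to do this with java.util.regex; it is an illustration under assumptions, not the hub's implementation. The class name RequestBuilder is hypothetical, as are the assumptions that placeholder names contain only letters, digits, underscores and dots, that the value map is keyed by the full ${...} text as written in the sheet, and that fields not supplied for a test are left empty.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of filling an RQ exemplar; not the hub's real code.
final class RequestBuilder {
    // Matches placeholders such as ${aaaa.bbbb.cccc} (assumed character set).
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([A-Za-z0-9_.]+)\\}");

    private RequestBuilder() {}

    // values is keyed by the full placeholder text, e.g. "${aaaa.bbbb.cccc}", as referenced in the sheet.
    static String build(String exemplar, Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(exemplar);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            // Assumption: a field not given for this test is emitted as empty content.
            String value = values.getOrDefault(m.group(), "");
            // quoteReplacement stops '$' or '\' in the data being read as group references.
            m.appendReplacement(out, Matcher.quoteReplacement(escapeXml(value)));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Escapes the XML special characters so spreadsheet data cannot break the request markup.
    private static String escapeXml(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")
                .replace("\"", "&quot;").replace("'", "&apos;");
    }
}
```

Escaping at substitution time keeps the spreadsheet readable: a tester can type A&B in a cell and still get well-formed XML in the request.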

Script - SOAPRequest_Status_arr

This TestStep, a SOAP Request TestStep rather than a script, is the one that actually fires off the specialised request to the service under test. To provide maximum flexibility, we set the TestRequest property Endpoint as shown below:

In this way we pick up the ServiceEndpoint property set at the project level. Double-clicking the SOAP Request TestStep shows a standard SoapUI XML RQ structure. The body content needs to be replaced with the property expansion as shown below:

This links back to the previous step, ConstructRQ, where the specialised RQ body, expressed as a string, was saved in the SoapUI context under the name requestText. The overall result is that the RQ that is fired has the specialised body content set up by the script ConstructRQ. Once the request has been issued and a response obtained, processing moves to the step where we validate the response received.

Script - ValidateRS

Once the request has been issued and a response obtained, we need to validate what has been received. This is done in the script ValidateRS. Here, the only focus is to get the response string and pass it to the hub for detailed validation against the data specified in the data driving spreadsheet. The key steps are as follows:

Script - TestFinalise

This script has the key task of deciding whether there are more tests to be executed, governed by the extent of the external data.

So, on line 18 we see the hub being retrieved from the SoapUI context and, at line 20, the current test case number being obtained from a hub method call, which allows us to determine whether the overall testing is complete. If the external data has more RQ/RS data defined (line 24), we increment the hub test case number and go to the SoapUI TestStep named "ConstructRQ". If there are no more tests defined in the external spreadsheet, overall processing is terminated (line 38).
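The control flow of TestFinalise can be modelled as a simple loop over a cursor that the hub owns. In this hedged sketch, TestCursor, hasMore, advance and run are hypothetical names, and executeTest stands in for the ConstructRQ, SOAP Request and ValidateRS steps. Each "advance" corresponds to going back to the ConstructRQ TestStep, and returning corresponds to terminating the TestCase.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntPredicate;

// Hypothetical stand-in for the hub's test bookkeeping; names are illustrative, not the hub's API.
final class TestCursor {
    private final int total;   // number of tests defined in the sheet
    private int current = 1;   // 1-based ordinal of the test being executed

    TestCursor(int total) { this.total = total; }

    int current() { return current; }
    boolean hasMore() { return current < total; }
    void advance() { current++; }

    // Mirrors TestFinalise: after each test, loop back to ConstructRQ while tests remain, else stop.
    static List<String> run(TestCursor cursor, IntPredicate executeTest) {
        List<String> log = new ArrayList<>();
        if (cursor.total < 1) return log;  // an empty sheet means nothing to execute
        while (true) {
            int n = cursor.current();
            log.add("Test " + n + (executeTest.test(n) ? " PASSED" : " FAILED"));
            if (!cursor.hasMore()) return log; // equivalent of terminating the TestCase
            cursor.advance();                 // equivalent of going back to ConstructRQ
        }
    }
}
```

Keeping the counter inside the cursor object is what lets the scripts ask "is there more?" without ever handling the test number themselves.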

The Exemplars

As well as the scripts and the Java object, the design proposed here requires the use of so-called exemplars. These are XML files used to construct correct requests (the request exemplar) and to discover the correct namespaces in the response (the response exemplar). These files are specified per interface operation and need to be located as shown below:

The path to these files can be expressed in terms of the project properties we saw earlier:

{FileRootPath}/{InterfaceVendor}/{InterfaceCollection}/{InterfaceVersion}/{test-step-name}

The names of these files should be:

{InterfaceVendor}_{InterfaceCollection}_{test-step-name}_ExemplarRQ.xml

{InterfaceVendor}_{InterfaceCollection}_{test-step-name}_ExemplarRS.xml

The RQ exemplar can be created by copy-pasting a request from SoapUI into a separate file and replacing each value part with a specially named placeholder, as shown below:

Each of the "value" parts in the request must be replaced with a unique name of the form ${aaaa.bbbb.cccc…..}. These names are the ones referenced in the data driving spreadsheet to define the individual fields of the RQ. The RS exemplar can be constructed from a SoapUI dump file, or by copy-pasting directly from the SoapUI response window, using any valid RQ for the operation. This exemplar is used to get the correct set of response namespaces for our test validation step.
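To make the role of the RS exemplar concrete, the sketch below harvests the prefixed namespace declarations from an exemplar, uses them to evaluate a namespace-aware XPath against a live response, and compares the extracted text with an expected value. It uses only the standard javax.xml APIs. The class FieldValidator and its methods are hypothetical, the hub's own "XPath-like" notation may differ from real XPath, and the "regex:" prefix marking a regular-expression expectation is purely an assumed convention for this illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Collections;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.regex.Pattern;
import javax.xml.XMLConstants;
import javax.xml.namespace.NamespaceContext;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Attr;
import org.w3c.dom.Document;
import org.w3c.dom.NamedNodeMap;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

// Hypothetical sketch of field-level RS validation; not the hub's actual implementation.
final class FieldValidator {
    private FieldValidator() {}

    private static Document parse(String xml) {
        try {
            DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
            dbf.setNamespaceAware(true); // required so prefixed XPath steps can match
            return dbf.newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("Cannot parse XML", e);
        }
    }

    // Collects every prefixed declaration (xmlns:p="uri") in the RS exemplar; later ones win.
    static Map<String, String> namespacesOf(String exemplarXml) {
        Map<String, String> prefixes = new HashMap<>();
        NodeList elements = parse(exemplarXml).getElementsByTagName("*");
        for (int i = 0; i < elements.getLength(); i++) {
            NamedNodeMap attrs = elements.item(i).getAttributes();
            for (int j = 0; j < attrs.getLength(); j++) {
                Attr a = (Attr) attrs.item(j);
                if (XMLConstants.XMLNS_ATTRIBUTE.equals(a.getPrefix())) {
                    prefixes.put(a.getLocalName(), a.getValue());
                }
            }
        }
        return prefixes;
    }

    // Returns the text of the first node matched by the path, or null when nothing matches.
    static String extract(String responseXml, String path, Map<String, String> prefixes) {
        XPath xpath = XPathFactory.newInstance().newXPath();
        xpath.setNamespaceContext(new NamespaceContext() {
            @Override public String getNamespaceURI(String prefix) {
                if (prefix == null) throw new IllegalArgumentException("null prefix");
                return prefixes.getOrDefault(prefix, XMLConstants.NULL_NS_URI);
            }
            @Override public String getPrefix(String uri) { return null; }
            @Override public Iterator<String> getPrefixes(String uri) { return Collections.emptyIterator(); }
        });
        try {
            Node node = (Node) xpath.evaluate(path, parse(responseXml), XPathConstants.NODE);
            return node == null ? null : node.getTextContent();
        } catch (XPathExpressionException e) {
            throw new IllegalArgumentException("Bad path: " + path, e);
        }
    }

    // Assumed convention: "regex:..." means a full-match regular expression, otherwise exact text.
    static boolean matches(String actual, String expected) {
        if (actual == null) return false;
        if (expected.startsWith("regex:")) {
            return Pattern.matches(expected.substring("regex:".length()), actual);
        }
        return actual.equals(expected);
    }
}
```

Taking the prefixes from the exemplar means the paths in the spreadsheet can use short prefixes, while the long namespace URIs live in one file per operation.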

The Data

As noted above, a key feature of this test automation platform is that it separates the structure of the testing, this being expressed in SoapUI, from the data to be used in the tests, both to form requests as well as validate the outcome. We will now look at the driving data. Below is shown a representative test defined in the (.xlsx) file:

The layout of the overall workbook is important, the key points being:

- Each test RQ to be issued is set out in columns C & D, and the fields into which we want to set data are referenced using the exemplar notation we saw earlier. The value can in fact be evaluated on the spreadsheet, as is the case in the red cells (D5-D7), because the API constrains the dates for which airport arrival data can be requested.

- Each test RS, that is, the data we want to validate in each test, is set out in columns E & F. The location in the RS that we want to validate is referenced using an XPath-like notation such that it represents a unique path. The value part, column F, can be expressed as actual text (e.g. E5) or as a regular expression (e.g. F13). The latter notation is useful where, for example, the actual value changes depending on the date/time of testing; we can at least confirm that the form of the value in the referenced field conforms to a specific definition.

- The sheets in the Excel workbook must be named exactly as the SoapUI TestCases. This naming is shown in the above figure.

- As you can see in the figure above, the data, a single RQ/RS pair in this specific case, is terminated by the word "STOP" in column A. Note also that the number of rows defining the RQ part can be, and usually is, different from the number defining the associated RS fields to be validated.

- The name of the Workbook is important; it must be in the format {InterfaceVendor}_{InterfaceCollection}_{InterfaceVersion}_DataDriver.(xlsx/xls), where the version number must be in a format that is consistent with file naming.

- The workbook must be saved in Excel 97-2003 Workbook format (.xls); it is this file that is actually read by the Hub.
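The layout rules above translate into a small row scanner. In this sketch the sheet is modelled as a plain String grid rather than read through JXL, and the column indices (A=0, C/D=2/3, E/F=4/5) follow the description above. The class SheetBlock is hypothetical, and treating one STOP-terminated block as one test is an assumption based on the single-test example shown. Because the RQ and RS columns are read independently, the two lists can have different lengths, as the text notes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical parser for one test block of a driving sheet, modelled as a String grid.
// Assumed columns: A (0) = STOP marker, C/D (2/3) = RQ field/value, E/F (4/5) = RS path/expected.
final class SheetBlock {
    final Map<String, String> requestFields = new LinkedHashMap<>();
    final List<String[]> responseChecks = new ArrayList<>(); // each entry is {path, expected}

    static SheetBlock read(String[][] rows, int firstRow) {
        SheetBlock block = new SheetBlock();
        for (int r = firstRow; r < rows.length; r++) {
            String[] row = rows[r];
            if ("STOP".equals(cell(row, 0))) {
                return block; // end of this test's data
            }
            // RQ and RS columns are read independently, so their row counts may differ.
            if (!cell(row, 2).isEmpty()) block.requestFields.put(cell(row, 2), cell(row, 3));
            if (!cell(row, 4).isEmpty()) block.responseChecks.add(new String[] {cell(row, 4), cell(row, 5)});
        }
        throw new IllegalArgumentException("No STOP marker found after row " + firstRow);
    }

    // Missing or null cells are treated as empty strings.
    private static String cell(String[] row, int col) {
        return col < row.length && row[col] != null ? row[col].trim() : "";
    }
}
```

Failing loudly when STOP is missing is a deliberate choice here: a silently truncated sheet would otherwise produce a test that passes with fewer checks than intended.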

The Code

The part that does the hard work is the Java hub object, packaged as MT.SoapUI.DataDrivenTesting.jar. This object is configured in the script TestSetup, discussed above. Once the JAR is added to the test execution context of SoapUI (it is loaded at application startup), the object is reachable by any of the scripts and its methods can be used to perform the necessary tasks. The complicated algorithms that bring the testing solution alive are implemented in this object, leaving the general test flow to the Groovy scripts in SoapUI. The download for this article contains both the (.java) files and a Javadoc description, so we will not describe the code separately here.

Examples

Our example uses the operation Status_arr, which gives information about flight arrivals at a specified airport. In particular, we will request information about London Heathrow (LHR). The test involves a single web service call, as reflected in the spreadsheet shown above in the section "The Data", but the approach offered here scales to as many calls, with as many data combinations, as are needed to ensure the web service is operating as expected. The general project layout and Test Case Editor for Status_arr are shown below:

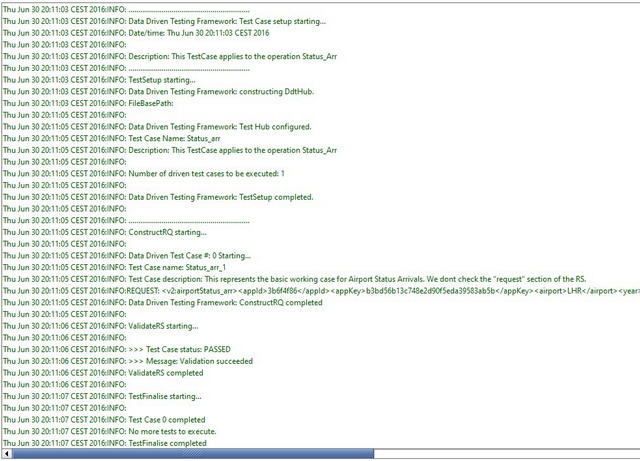

Clicking on the green triangle in the Test Case Editor initiates execution, which produces the output in the TestCase Log window as shown below:

Since all is green we should be very happy, right? Well, maybe. In the approach to data-driven web service testing proposed here, pass/fail is indicated by the outcome of the ValidateRS TestStep; the green shown in the TestCase Log window really only asserts that there were no programmatic or execution errors. To get a clear indication of the outcome, we need to look at the SoapUI log window, which contains all the logging information from our scripts. For this case it looks as shown in the following figure:

Here we see clearly that the field-level validation we specified in the external data driving spreadsheet was successful ("*** Test Case status: PASSED"). It is certainly possible to extend this basic reporting to provide output that would be consistent with a Continuous Integration scenario.
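As one hedged illustration of such an extension, the per-field results could be written out as JUnit-style XML, a format that many CI servers can ingest. The sketch below is not part of the downloadable hub: JUnitReport and FieldResult are hypothetical names, the choice to report each validated RS field as its own testcase is an assumption, and CI tools differ in which attributes they read beyond the commonly used testsuite, testcase and failure elements.

```java
import java.util.List;

// Hypothetical reporter turning per-field validation results into JUnit-style XML.
final class JUnitReport {
    static final class FieldResult {
        final String path;     // RS location that was validated
        final boolean passed;  // outcome of the field-level assertion
        final String detail;   // failure explanation, ignored when passed

        FieldResult(String path, boolean passed, String detail) {
            this.path = path;
            this.passed = passed;
            this.detail = detail;
        }
    }

    private JUnitReport() {}

    static String toXml(String testCaseName, List<FieldResult> results) {
        long failures = results.stream().filter(r -> !r.passed).count();
        StringBuilder sb = new StringBuilder();
        sb.append("<testsuite name=\"").append(esc(testCaseName))
          .append("\" tests=\"").append(results.size())
          .append("\" failures=\"").append(failures).append("\">\n");
        for (FieldResult r : results) {
            sb.append("  <testcase classname=\"").append(esc(testCaseName))
              .append("\" name=\"").append(esc(r.path)).append('"');
            if (r.passed) {
                sb.append("/>\n");
            } else {
                sb.append(">\n    <failure message=\"").append(esc(r.detail))
                  .append("\"/>\n  </testcase>\n");
            }
        }
        return sb.append("</testsuite>\n").toString();
    }

    // Escapes characters that are not allowed verbatim inside XML attribute values.
    private static String esc(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;").replace("\"", "&quot;");
    }
}
```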

Conclusion

The proposed approach to data driven testing of web services has a number of key attributes. These can be summarised as follows:

- Separation of concerns – structure of testing, the workflow, and the specific data of a test are separated. This should enhance maintainability

- Accessibility – testers need only interact with a spreadsheet rather than getting involved in the details of scripting. This also allows business-tech people in projects to use the platform, thereby contributing to the overall Quality Assurance process

- Assertive – the validations performed, under the full control of the tester, at the individual RS field level provide a strong assertion that the RS obtained from a web service call, whether a good or bad outcome in relation to the corresponding RQ, is fully as expected

- Scalability – the range of tests to be performed on a given (set of) interface(s) is limited only by the needs of the tester

Download

The download for this article contains the following assets:

- The Java source code for the Hub

- The exemplar files for RQ and RS messages

- The Javadoc for the Hub codebase

- The distribution for JXL, the class library used to read 97-2003 format Excel workbooks

- The scripts to be used in a TestCase of SoapUI

- Two Excel files containing driving data for the interface operation Status_arr – one representing a small and one a larger set of tests

- A README text file which provides some basic information about "installing" the contents of the zip

Naming Convention

In adopting this platform for your own web service testing, it is important to take care of the naming conventions used. These are summarised in the table below:

| Item | Example | Comment |
|---|---|---|
| TestCase name in SoapUI | Status_arr, Status_dep | The names must match the operation names in the interface |
| TestStep names in TestCase | TestSetup, ConstructRQ, SOAPRequest_Status_arr, ValidateRS, TestFinalise | The names are referenced in the scripts. The SOAP Request TestStep name must end with the operation name as its postfix |
| Exemplar RQ file name | FlightStats_Airport_Status_arr_ExemplarRQ.xml | See “The Exemplars” section above |
| Exemplar RS file name | FlightStats_Airport_Status_arr_ExemplarRS.xml | See “The Exemplars” section above |
| Data driving XLS file | FlightStats_Airport_V2_0_DataDriver.(xlsx/xls) | See “The Data” section above |
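Because so much depends on these names, it can help to generate them in one place rather than typing them by hand. The helper below is a minimal sketch that applies the templates from the table above and from "The Exemplars" section; NamingConvention and its methods are hypothetical names, and "V2_0" in the usage check is simply the version fragment taken from the example workbook name.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper applying the naming conventions; the hub's own code may differ.
final class NamingConvention {
    private NamingConvention() {}

    // {FileRootPath}/{InterfaceVendor}/{InterfaceCollection}/{InterfaceVersion}/{test-step-name}
    static Path exemplarFolder(String root, String vendor, String collection, String version, String stepName) {
        return Paths.get(root, vendor, collection, version, stepName);
    }

    // {InterfaceVendor}_{InterfaceCollection}_{test-step-name}_ExemplarRQ.xml (or _ExemplarRS.xml)
    static String exemplarFile(String vendor, String collection, String stepName, boolean request) {
        return String.join("_", vendor, collection, stepName, request ? "ExemplarRQ.xml" : "ExemplarRS.xml");
    }

    // {InterfaceVendor}_{InterfaceCollection}_{InterfaceVersion}_DataDriver.xls (97-2003 format)
    static String dataDriverFile(String vendor, String collection, String version) {
        return String.join("_", vendor, collection, version, "DataDriver.xls");
    }

    // The SOAP Request TestStep carries the operation name as its postfix.
    static String soapRequestStep(String operation) {
        return "SOAPRequest_" + operation;
    }
}
```

For the running example, exemplarFile("FlightStats", "Airport", "Status_arr", true) yields FlightStats_Airport_Status_arr_ExemplarRQ.xml, matching the table.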

Acknowledgements

The theme of this work arose out of consultancy work performed by the author as a (Test Automator)/(Developer in Test) at Credit Suisse AG, Zurich, Switzerland. The author acknowledges this influence as well as asserting that the approach given in this article represents a unique work. I would also like to thank Ludovico Einaudi (www.ludovicoeinaudi.com) for massaging my ears during the development of this solution. Immense thanks are also due to Abby for her unstinting support.