Common Sense Software Engineering – Part II; Requirements Analysis


Due to the nature of this critical component to software development, this essay intends to present this subject in broad strokes to incite interest among developers in pursuing their own development agendas properly.


The Need for Good Requirements Analysis

Requirements Analysis is a very large area of software project development whose importance is most often ignored. It is frequently subordinated to popular paradigms that are either forced by political pressures to ignore their own vital development tenets or are merely abused by those who want to sidestep its necessities in order to provide deliverables as fast as possible. It is, in fact, the most critical aspect of any software project, whether for a new application or an existing one. If you get the requirements wrong, or are provided with an incomplete set of requirements (or both), and you attempt to implement a project with them, it will simply fail to some degree. This initial phase of software development cannot be ignored or modified to suit some organizational protocol; it is as immutable as a law of physics.

Poor requirements analysis will not only cause a project to fail; if the project is actually implemented, it will impose the highest costs on both the client and the developer organization through the modifications needed to correct the initial errors and misunderstandings.

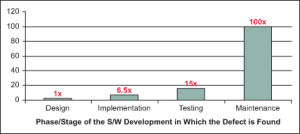

As an example of the potential costs to all involved that will arise from poor requirements analysis, please take a look at the chart below as presented by the following paper from the IBM System Sciences Group…

IBM System Sciences Group

The graph above demonstrates that the earlier a defect is found in a software project’s time-line, the less expensive it will be to repair. If we assume that the first stage of design as shown represents the physical design of the application and not the requirements’ gathering phase (which would then be first), the subsequent cost that will arise in the maintenance phase (post production implementation), which is already exorbitant, will be even higher.

Preliminaries

Showing the prospective project user representatives the chart above, or a similar one, at an initial requirements analysis meeting will go a long way toward driving home how critical it is to do this phase of the project carefully and methodically, so that the rest of the development phases begin with a defect-free foundation.

At an initial requirements’ meeting a second important understanding that must be agreed upon is that the preliminary decisions that were made as to which teams (user & developer) would control the various aspects of the Software Tradeoff Triangle (see “Common Sense Software Engineering – Part I; Initial Planning Analysis”) must be adhered to in order that the project be successful. If there is a break in the balance of this understanding, then project development will most likely be sidelined into areas that were not intended (i.e., feature creep). For example, if the user-team is controlling the feature-set (product) and needs to set a deadline, the developer-team must have adequate funds to obtain the necessary staff and tools to accomplish such a goal.

Once the preliminary understandings have been agreed to, a discussion of project requirements is best begun by setting up a general outline of what the major features of the project are expected to be.

With the pressure on development teams to get deliverables into production, such an outline should be broken into two sections: one for features that are needed in the initial production deployment and/or in a later planned deployment of a set of enhancements, and one for possible inclusions (tentative requirements) at a later phase, for which planning will be put off until the application is working satisfactorily in production (inclusive of all the intended enhancements). By recording the latter, the developer team will know to build appropriate flexibility into a project that may include such potential features.
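The two-section outline described above could be captured in something as small as the following Python sketch. It is only an illustration: the class names, the phase labels, and the three sample features are all hypothetical and do not come from any particular tool or project.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    INITIAL = "initial production deployment"
    ENHANCEMENT = "planned enhancement deployment"
    TENTATIVE = "tentative, planned only once production is stable"


@dataclass
class Feature:
    name: str
    phase: Phase


@dataclass
class Outline:
    features: list[Feature] = field(default_factory=list)

    def committed(self) -> list[Feature]:
        """Section one: features for the initial or a planned later deployment."""
        return [f for f in self.features if f.phase is not Phase.TENTATIVE]

    def tentative(self) -> list[Feature]:
        """Section two: possible inclusions the design should leave room for."""
        return [f for f in self.features if f.phase is Phase.TENTATIVE]


# Hypothetical sample features, for illustration only.
outline = Outline([
    Feature("Customer order entry", Phase.INITIAL),
    Feature("Monthly sales report", Phase.ENHANCEMENT),
    Feature("Mobile client", Phase.TENTATIVE),
])
```

Keeping the tentative items in the same structure, rather than in someone's memory, is what lets the developer team see which areas of the design may need extra flexibility.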

Now that a general working basis has been created with the section that describes the immediate, the phased-in, and tentative needs of the project you are ready to flesh out the requirements in detail in order to develop the actual breakdown of the tasks required to develop the needed project modules.

The “Waterfall” Approach is Still a Common-Sense Process

For those who work in what is now described as “fast paced, dynamic environments”, such a methodical approach may appear counter-intuitive and look like just another way of defining software using the “Waterfall” approach to design. This would be a correct assumption, and as often as this approach has been derided by modern-day development professionals, it has in fact been the only credible paradigm for software development. In fact, any new paradigm that has been developed for software development cycles, as well as the original ones (which are many and already include their modern variations), has a “Waterfall” approach at some level.

The “Waterfall” technique in a very general sense merely defines a set of recursive steps required to move through a specific phase of development. Many have viewed such an approach as rigid and self-defeating as a result of its limitations. Such a perception comes from the idea that once a phase in such an approach has been completed, the project moves onto the next phase leaving the previous one as completed. This may have been the original intent of such an approach but in reality has never worked this way, even within the mainframe environment. As projects progressed there was always some recursion to other phases of the project as needed. However, admittedly, in the mainframe environment such recursion was more limited simply by the nature of the development infrastructure.

Despite the perceptions of the steps or phases within a “Waterfall” approach, how those steps are defined in order to produce an efficient set of processes is really up to the developers involved in the endeavor. However, when we talk in terms of “steps” or “phases”, it should be understood that such areas are axiomatic of the nature of software development processes.

For example, if you write a section of code, once completed your next “step” would be to unit-test it. If your unit-test(s) do not reflect the expected results, you are not simply going to break out of the process and move on to new code. No, you would loop back to correct the initial code and re-test again, repeating this cycle until you are satisfied that your new code is working as it should. In a nutshell, that is the “Waterfall” approach (on a very simplistic level) to software development, though when defined at the overall process level it commits developers to a very specific set of ordered steps that appear to be inordinately lengthy and overly detailed in comparison to the basic code-test cycle just described.

Yet using such a cyclic methodology is just using simple common-sense. You wouldn’t test code and then write it…

The “Waterfall” development process got its bad reputation because it was applied to very large projects in which following such lengthy processes would actually increase the overall costs of a project and lower its general implementation efficiency when poor planning and execution were factors in the endeavor. However, this would happen with any large project due to the inherent complexities involved, no matter what paradigm was applied to it. And despite the various criticisms of the “Waterfall” approach, many successful systems, some of which are still running today on large scale machinery, were developed in this manner. One of the widely noted systems that came out of this style of development was the rather famous airline reservation system, “SABRE”, which every airline “back in the day” successfully used to manage the booking of their flights on a daily basis.

There is no dispute that the details of the general development phases and steps for a project can be analyzed to effect efficiencies that would not have been available by using the standard approach that was common to large, monolithic projects that were designed during the mainframe era of the Information Technology profession. However, in those circumstances the development techniques at the time necessitated such large-scale approaches to project development.

Agile and its variants have come about due to the capabilities with modern development environments that allow project teams to more easily break down development phases to smaller constructs since monolithic code structures are no longer a required part of the development landscape.

Yet, no matter how finely granulated a development approach is made, some form of step-by-step process will be followed in which corrections are made to defects found within a project’s development phase (design or otherwise) or any level of testing (Unit-Testing, Quality Control Testing, User-Acceptance Testing). This recursive-like process is simply unavoidable.

Requirements Analysis is Requirements Engineering… When Done Properly

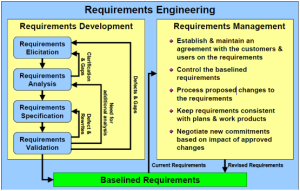

All this being said, true Requirements Analysis, which is just the first stage in the development of a software project, can be so detailed and thorough in its own right that many organizations that use it to its maximum extent call it by its rightful name, “Requirements Engineering”. And yet even here there is a very discernible pattern to how requirements are initiated, discussed, fleshed out, and agreed upon. Such a pattern is very much like the “Waterfall” approach just discussed, as the following graphic demonstrates.

Wiegers 2003

As you will note in the left part of the image there are definite loop-back processes that demonstrate a “Waterfall” like approach to requirements development and this is because any refinement process will always have such a characteristic, “Requirements Engineering” being one of them.

Nonetheless, this is not to suggest that every requirements development process has to be long and involved as the above pattern may indicate. Different development environments are adopting differing techniques to manage such a phase in their development efforts that benefit the corresponding businesses. These techniques are being made more accessible to serious project teams and user groups through the employment of modern framework tools that can not only simplify the process of Requirements Analysis but make it somewhat more enjoyable than the original tedium it has been known for.

One such very affordable tool is “GatherSpace” (http://www.gatherspace.com/), which provides teams with all of the necessary tools to formulate such a working framework. The author has no association with this company; it is merely a sample recommendation, as there are many such tools available.

Such tools then provide teams with the following advantages…

- Aids in collaborative efforts among all involved in developing a project’s requirements

- Allows all involved to review and suggest modifications to ongoing planning documents

- Allows for iterative processes throughout the lifetime of the project where enhancements and modifications can be planned for

- Maintains a complete history of all requirements documents and information for later review

One critical area that many of these tools do not support, except those of the far more expensive variety such as the Micro Focus-Borland tool, “Caliber”, is requirements interface visualization. To offset this missing feature, it is expected that many document types, such as PowerPoint presentations, will be used to support the visual nature of interface module definition.

However, using Microsoft Office or similar tools to support interface visualization, though workable, takes a fair amount of time-consuming and unnecessary work. Such tools are also not designed for this aspect of project development.

There are, however, a number of prototyping tools that can act as efficient ways to help project planning teams visualize how their interfaces should appear. One such freely available tool is the “Pencil Project” from Evolus (http://pencil.evolus.vn/). The software is no longer being actively supported as far as one can tell but it is a fairly mature product for its genre. Besides, as was noted, it’s free.

The “Pencil Project” is relatively easy to learn and allows anyone to create visual interfaces and easily modify them using actual interface control constructs.

Such prototyping tools are an excellent way to allow both users and developers to develop interface concepts that can be agreed upon. However, it should be stressed that the development team should always have the “last word” as to how any interface module is developed. Problems in this vein do not arise from user-requested interface features but from a user insisting that screens be designed according to their “demands”.

Developers have to remember that as professionals they are the ones who understand how interfaces work technically and that requested features have to be implemented in a certain manner in order to make such modules work effectively for the user.

There are many horror stories of developers allowing users to create the interfaces they want to work with that eventually completely fail to process the workflows properly. There is one story in particular that happened quite a number of years ago where a developer after being continuously badgered by her user to implement the screens as they had designed gave up in frustration and did so according to her user’s demands. Once the project was completed it quickly showed the flaws of poor interface design and it literally didn’t work. Nonetheless, the developer provided exactly what the user wanted who out of arrogance wasted the company’s time and money on a project that could do nothing but fail as it did.

The Details of Complete Requirements Analysis

Though Requirements Analysis has been discussed up to this point in somewhat general terms there are several details that should be clarified. First and foremost there are differing levels of requirements in each project but for the most part they all fall into similar categories that can be broken down as the graphic shows below…

The Westfall Team (“What, Why, Who, When, and How”)

As it can be seen, the initial discussion of this essay, which denoted the generality of the beginning phases of requirements definition, is shown above as the “Business Requirements” at the “Business Level” at the top of the chart. These are the actual business needs that a project’s application will be expected to fulfill.

The second level, the “User Level”, is where the mix of general business requirements is expanded to define how users currently fulfill those requirements, in order to understand how the application under consideration will support the similar needs of those users who will eventually use it. This is why the chart shows the “Business Requirements” actually feeding into those of the user.

The lowest rung, the “Product Level”, is where the actual technical requirements are defined so that the project’s application can be developed in a way that supports the working requirements of the users, who in turn will support the necessary general requirements of the business operation. It is this level that, as mentioned earlier regarding interface design, technical personnel must completely control for the project to succeed.
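One way to picture how the three levels feed into one another is a simple traceability check, sketched below in Python. The level names follow the chart described above; the `Requirement` structure, the `untraced` helper, and the sample requirements are hypothetical and are there only to make the idea concrete.

```python
from dataclasses import dataclass
from typing import Optional

# Ordered from the top of the chart to the bottom.
LEVELS = ("business", "user", "product")


@dataclass(frozen=True)
class Requirement:
    req_id: str
    level: str
    text: str
    parent_id: Optional[str] = None  # the requirement one level up that this one serves


def untraced(requirements):
    """Return ids of requirements that do not trace to a requirement one level up."""
    by_id = {r.req_id: r for r in requirements}
    problems = []
    for r in requirements:
        rank = LEVELS.index(r.level)
        if rank == 0:
            continue  # business requirements sit at the top and need no parent
        parent = by_id.get(r.parent_id)
        if parent is None or LEVELS.index(parent.level) != rank - 1:
            problems.append(r.req_id)
    return problems


# Hypothetical sample data, for illustration only.
reqs = [
    Requirement("B1", "business", "Reduce order processing time"),
    Requirement("U1", "user", "Clerk enters an order on one screen", "B1"),
    Requirement("P1", "product", "Order form validates fields on submit", "U1"),
    Requirement("P2", "product", "Export orders over FTP"),  # serves no user requirement
]
```

Here `untraced(reqs)` flags `P2`, a technical requirement that no user or business need stands behind, which is exactly the kind of item that deserves a question before any code is written.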

Requirements Analysis is Done Rather Poorly in General

Despite the inherent need for proper requirements’ definition, many IT organizations continue to forgo this crucial aspect of software development. Most often what happens is that a technical manager calls a meeting of the necessary staff, informs them of a new application to be developed, when it has to be completed, provides some very general understanding of what the application is supposed to do and then relies on the staff to make their own assumptions as to how to implement the provided feature-set and the database(s) that will support it.

If the staff is lucky the manager will side-step any statements about a target-date with the request that the staff formulate an estimate. And just as often the expected estimate must fulfill the manager’s own expectation as to when the project “must” be completed so that estimates that fall outside of this expectation are subsequently rejected with the response that the period of development time needed “is too long”.

You would be surprised at how many developers have had this experience over the course of many projects. Just as concerning is that if one were to look at the quality of the IT infrastructures where such demands are made of their developers, it would be found that such environments are poorly managed and as a result, very difficult to work in. Nothing ever changes in such conditions until some catastrophe occurs to the functioning of the business when the only thing that will be done will be to replace the technical manager or managers with personnel of the same ilk.

This issue has plagued commercial Information Technology since its inception whereby only the mainframe environments introduced some constraints on the levels of project incompetence and stupidity that could be allowed, which were simply the result of the complex nature of the environments themselves.

Nonetheless, in today’s environments serious “Requirements Analysis” has only been implemented in those organizations that take their software development seriously, as it is a time-consuming process. True, there have been alternatives to mitigate the necessary upfront work that gathering requirements entails. And some of those alternatives can work in situations where there are many iterations to small module sets that are developed in short time-spans to support rather small enhancements to an application. There is nothing wrong with such approaches, but with highly complex applications with large feature sets such approaches will not work. An example of this would be the development of a financial system that is not properly defined and designed initially.

A real-world example of this was a large financial system that the author helped develop with 9 other colleagues that was used to enlist local auto after-market (after purchase) companies that would provide the finances and such options as extended warranties or luxury additions such as adding stripes to a car’s exterior (the company at which this system was built was part of the auto industry).

Historically, in the United States the auto after-market has always been quite small, the result of the many scams and swindles to which American consumers were subjected by the US auto industry. To now work on such an application that was expected to expand as new “providers” were found and/or enlisted by company efforts was a rather surprising experience considering the history of this market segment.

The application itself was primarily created as a result of assumptions that would prove to be categorically incorrect, eventually costing the company upwards of 1.5 million dollars a year to support while only generating approximately $140,000.00 in revenues after it went into production. The entire system was eventually scrapped as a result.
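To put the figures quoted above side by side, here is a small worked calculation in Python. It rests on two assumptions that are not stated in the text: that the revenue figure is annual, like the support cost, and that 1.5 million is taken as a lower bound for "upwards of 1.5 million".

```python
# Figures quoted in the text; the revenue is ASSUMED to be per year.
annual_support_cost = 1_500_000  # "upwards of 1.5 million dollars a year" (lower bound)
annual_revenue = 140_000         # "approximately $140,000.00 in revenues"

# How much the system lost each year, at a minimum.
annual_shortfall = annual_support_cost - annual_revenue

# Dollars spent on support for every dollar of revenue brought in.
cost_per_revenue_dollar = annual_support_cost / annual_revenue

print(f"Annual shortfall: ${annual_shortfall:,}")
print(f"Support cost per revenue dollar: ${cost_per_revenue_dollar:.2f}")
```

Under those assumptions the system lost at least $1,360,000 a year and cost nearly eleven dollars to support for every dollar it earned.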

The failure of this particular system was a direct result of improper Requirements Analysis, which in this type of case would have included a feasibility study as to the efficacy of such a system. This the company did not do; instead it went into the planning of its primary features with the literal idea of “if they build it, the providers and customers will come”. Considering that a very diverse group of providers was expected to be interested in being listed in the system’s directory, it would have made sense for a relatively structured set-up process to be one of the major areas of requirements research. That way the system could not only operate with generically supplied information, but the providers themselves could store their own unique data in such a format, hosted on the company’s servers and retrievable by them as necessary for their own records. This expectation would make good common-sense given the potential complexities of such a system running at a national level. This was not to be.

Instead what was done was that where providers’ data did in fact conform to some general format, it would be supported on the company’s servers. Where it didn’t, the application would then be customized to provide hooks to the providers’ servers in order to retrieve the necessary information for use during an after-market sale’s process (the result was that every exception became a requirements’ rule).

Now think about this for a minute. You have a system that by the time the author arrived on the scene already had about 450 providers across the nation using the system for testing purposes to ensure its integrity, and by the time the author left the company there were over 850 such providers in the system’s directory, all of whom used a combination of the company servers as well as their own.

Needless to say, there were so many issues with both the customization of provider data and the corresponding server requests to the provider servers that on some days the system would go up and down like a yo-yo in both the testing and eventual production environments. The eventual cost to support such a system reached astronomical proportions (as noted earlier) but the company believed they had a success on their hands.

The requirement that the system be able to support corporate and remote provider servers for after-market sale processes was eventually extended to a new set of major imports that would allow companies to import their own data to the company’s servers (holding generic-like data), avoiding the need to send it in by hard-copy. A good idea if you consider that all that was necessary would be a properly formatted CSV file given the nature of the processing.
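What “a properly formatted CSV file” means is itself a requirement that has to be written down. The following Python sketch shows one way such a check might look. The column names and the sample rows are hypothetical, since the essay does not describe the actual file layout.

```python
import csv
import io

# HYPOTHETICAL layout; the real column set would come out of requirements analysis.
REQUIRED_COLUMNS = ("provider_id", "product_code", "price")


def read_provider_csv(text):
    """Parse CSV text and return (rows, errors) without raising on bad data."""
    reader = csv.DictReader(io.StringIO(text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return [], [f"missing columns: {', '.join(missing)}"]

    rows, errors = [], []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        try:
            row["price"] = float(row["price"])
        except (TypeError, ValueError):
            errors.append(f"line {line_no}: price is not a number")
            continue
        rows.append(row)
    return rows, errors


# Hypothetical sample: one valid row and one with a bad price.
sample = "provider_id,product_code,price\nP001,WARRANTY-3Y,499.00\nP001,STRIPES,abc\n"
rows, errors = read_provider_csv(sample)
```

With the sample above, one row is accepted and the second is reported as an error rather than being silently imported, so the provider can be told exactly what to correct.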

At the time, the idea of every source-code module having to be generic in nature was very much hyped in the Information Technology industry as the new “in thing” and company technical managers had drunk the entire barrel of “Kool Aid” on offer from various industry experts. Of course the concept was utter nonsense as software engineers attempted to clarify but who wanted to listen to reason?

The result was that the author was tasked to develop an import system that could satisfy any export requirement a provider deigned to implement. Thus, it was expected that not only would standard CSV files be one point of import but so would HTTP, FTP, and MSMQ. There was however, a serious issue with the other points of import; no one had any idea as to how these alternative resources were to be defined and who they should be defined for.

When the author attempted to clarify that these missing definitions could not be included in any current development until they were defined, the reaction by management was that it didn’t matter since the entire sub-application was to be written in such a generic format as to make any additional resources easy to implement. Considering that one could only do this with some level of interface implementation across similar import process classes, the main problems remained: there were still no definitions as to how such imported data was to be formatted (if it was to be different from the CSV format), for what resource, and for whom, making any generic module development a rather impossible feat. Management merely expected some level of magic to suddenly be capable of generating source modules without any underlying requirements for their development.
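The point about interface implementation can be illustrated with a short Python sketch. It is not the code of the system described here, whose language and design are not given; every class and function name below is hypothetical. A shared interface makes it easy to plug in a new import channel, but it cannot supply the missing definition of what that channel delivers.

```python
from abc import ABC, abstractmethod


class ImportSource(ABC):
    """Common interface every import channel would have to satisfy."""

    channel = "unspecified"

    @abstractmethod
    def fetch(self) -> str:
        """Return the raw payload as text."""


class CsvFileSource(ImportSource):
    """The one channel with an agreed format: a CSV file on disk."""

    channel = "CSV"

    def __init__(self, path):
        self.path = path

    def fetch(self) -> str:
        with open(self.path, encoding="utf-8", newline="") as handle:
            return handle.read()


class UndefinedSource(ImportSource):
    """Placeholder for a channel (HTTP, FTP, MSMQ) with no agreed requirements."""

    def __init__(self, channel):
        self.channel = channel

    def fetch(self) -> str:
        raise NotImplementedError(
            f"{self.channel}: payload format and owning provider were never defined"
        )


def implementable(sources):
    """Names of the channels that have a concrete definition behind them."""
    return [s.channel for s in sources if not isinstance(s, UndefinedSource)]


# "providers.csv" is a hypothetical file name; it is not opened here.
sources = [
    CsvFileSource("providers.csv"),
    UndefinedSource("HTTP"),
    UndefinedSource("FTP"),
    UndefinedSource("MSMQ"),
]
```

Calling `implementable(sources)` returns only the CSV channel. The other three fit the interface perfectly well and still cannot do anything, because the generic structure was never the missing piece.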

In the end only a single provider ever used this part of the system with the CSV file formatted data sent by email so that the sub-application would read the email and extract the attachments.

The system in general became so unwieldy over time and so costly to maintain that a year and a half after the author left the company it was abandoned.

This of course is an extreme case of poor planning and requirements management on the part of management. However, these instances do in fact manifest themselves today on a daily basis with many sites on the Internet. Go to any news or retail site and users are faced with classic design messes that go against every credible engineering tenet known in the industry.

Amazon.com is a classic example with its attempt to provide unique experiences for its users as it throws up graphic after graphic of images of book covers and other commodity items a user may or may not be interested in.

In reality, a person going to the Amazon site already knows what he or she wants and this was the same in days past when people went to department stores for similar reasons. “Browsing” was an after-thought or a form of simple relaxation. Yet, Amazon’s design team made assumptions about their users without doing any real Requirements Analysis as to what they would actually want. I doubt any customer wants to be presented with a mass of images on the off chance they may want to review one of the recommendations when in fact the most oft used feature of these huge web-pages is the Search entry at the top of the “Home” page (just like Google).

A better design, which would have fulfilled all of the necessary user requirements, would have been to present the user with simple options on the “Home” page that could be selected if they did want Amazon to make any product recommendations. And if they did, small graphic thumbnails on an alternative page alongside a product title or description would have been more than enough.

Unfortunately, in today’s consumer oriented society, actual serious “Requirements Analysis” is often subordinated to preconceived conceptions of what people want, what marketers want them to want, what technical personnel would like to include, and not what actual analysis would have deemed it to be. If the latter had been implemented across more rational design considerations, the Internet itself would not be the abhorrent mess it is.

To further research the concepts brought up in this essay, please see the following recommendations…

- iSixSigma

“Defect Prevention: Reducing Costs and Enhancing Quality”

- Linda Westfall (The Westfall Team)

“Software Requirements Engineering: What, Why, Who, When, and How”

- Karl Wiegers, Joy Beatty

Software Requirements (3rd Edition) (Developer Best Practices)

- Steve McConnell

Rapid Development: Taming Wild Software Schedules (The Bible of all Software Engineers… and “Still The One”)

- Geoff Dutton (CounterPunch.org)

“Mr. Twain’s Complaint: the Technological Scrapheap”

(An insightful article on what useless requirements actually produce)