Building a search engine with Strus (tutorial)

4.67/5 (8 votes)

This tutorial based on a docker image will guide through the development of a simple search engine based on Strus (project-strus.net), a collection of libraries and line tools for building a search engine.

Goals

The goal of this tutorial is to let you issue a query from your browser, that is executed by a PHP (PHP 7) script on an nginx webserver and to understand the execution of the query. All prerequisites you need are delivered as docker image. You only need to have docker installed. The tutorial will take about 30 Minutes to complete. Have fun !

Update

15th October 2017

This article is now based on a Docker Image with the newer version 0.15 of Strus having language bindings based on Papuga instead of SWIG. The support of PHP 5 has been dropped in favour of PHP 7 in the reimplementation. All examples have been fixed and are working, but the images and results of the different steps havn't been renewed, if they did not differ syntactically.

Introduction

To execute the steps of this tutorial you need some intermediate knowledge about unix shell programming and it would be helpful to know basics about PHP. For the analyzer configuration a simple proprietary definition language is used. But it will not be alien for users that have seen other configuration languages before.

In this tutorial you will download the dataset to build the search index with, preprocess the data with some lines of bash, create a storage for the index, define a configuration for analyzing the data, feed the search index, and finally issue a query with a PHP script. All prerequisites like the nginx webserver, PHP 7 and the strus libraries and programs are available as a docker image. So all you need is to get docker installed on your machine.

The dataset used in this tutorial is from MusicBrainz (musicbrainz.org). Please respect the license of this dataset !

Background

The background of this tutorial is the project strus, hosted on github. For having a look how strus copes with a bigger data collection you could also have a look at the demo search on the complete Wikipedia collection (English). You will find there also a description about this project. The complexity of the Wikipedia project would burst all limits of a tutorial. This tutorial just aims to show how easy it is to build a search engine with strus based on a small collection (282'000) of small data records (about a dozen terms) with a simple structure.

Prerequisites

You need a docker installation.

For running the tutorial you need an internet connection.

Step 1: Start the docker container

To start the docker image typing the follwing command in your shell:

docker run -p 40080:80 -t -i patrickfrey/strus-ub1604-nginx:v0_15 /bin/bash

and you will get a promt like this

root@8cbc7f49f3cf:/home/strus#

All following shell commands of this tutorial are executed in this docker container shell.

Step 2: Download and prepare the data

If you want to skip this section, you can simply execute the script step_fetchData.sh. This script will execute the steps described here in about a minute on a convenient developer machine.

Otherwise you have to manually fetch the data from MusicBrainz. The dump of the record releases will be dumped and converted into a proprietary XML we will use. Because there are many items to process, we will put up to 100 entries into one file. The analyzer will be configured to handle one item of these 100 entries as one document. Download and decompress the data with the following commands:

Prepare data directory

We create a directory for the data because the MusicBrainz files are all unpacked in current directory.

mkdir data cd data

Download and uppack the data dump

wget http://ftp.musicbrainz.org/pub/musicbrainz/data/fullexport/LATEST LATEST=`cat LATEST` wget http://ftp.musicbrainz.org/pub/musicbrainz/data/fullexport/$LATEST/mbdump-cdstubs.tar.bz2 bzip2 -d mbdump-cdstubs.tar.bz2 tar -xvf mbdump-cdstubs.tar

Create the documents

We count the maximum document identifier that will be assigned and we set $ndocs as the maximum number of documents we will get. In every document will be at maximum 100 items to insert. For every document identifier we create a document with the XML header.

cat mbdump/release_raw | sed 's/\&/\&/g' | sed 's/</\</g' | sed 's/>/\>/g' > dump.txt

ndocs=`cat dump.txt | awk -F"\t" '{if(min=="")min=max=$1; if($1>max) {max=$1}; if($1< min) {min=$1}; } END {print int((max+1)/100)}'`

mkdir doc

idoc=0

while [ $idoc -le $ndocs ]

do

echo "<?xml version=\"1.0\" encoding=\"UTF-8\" standalone=\"yes\" ?>" > doc/$idoc.xml

echo "<list>" >> doc/$idoc.xml

idoc=`expr $idoc + 1`

done

Fill the documents with the content of the dump

For every entry we want to process, we create an own XML tag. Every record is marked with an '<item>...</item>' tag.

cat dump.txt \

| awk -F"\t" '{FNAME=int($1/100); print "<item><id>" $1 "</id><title>" $2 "</title><artist>" $3 "</artist><date>" $4 "</date><upc>" $9 "</upc><note>" $10 "</note></item>" >> "doc/"FNAME".xml" }'

idoc=0

while [ $idoc -le $ndocs ]

do

echo "</list>" >> doc/$idoc.xml

idoc=`expr $idoc + 1`

done

Leave the data directory and inspect the result

We have now preprocessed the MusicBrainz example collection with about 282'000 records. We go back to the upper directory and inpect what we got. There should be about 3200 files and each file should contain about 100 items.

ls doc/

You get:

0.xml 1267.xml 1536.xml 1805.xml 2074.xml 2343.xml 2612.xml 2882.xml 3150.xml 461.xml 730.xml 1.xml 1268.xml 1537.xml 1806.xml 2075.xml 2344.xml 2613.xml 2883.xml 3151.xml 462.xml 731.xml ... (more)

Here is an except of an example input file for strus. You can inspect the first 5 lines with:

head -n 7 doc/999.xml

We get:(may differ a little bit because the data used gets updated from time to time)

<?xml version="1.0" encoding="UTF-8" standalone="yes" ?> <list> <item><id>99929</id><title>Flutterstrut</title><artist>Hundred Year Flood</artist><date>2009-06-18 01:37:51.35028+00</date><upc>881387000018</upc><note>CD Baby id:hyf2</note></item> <item><id>99999</id><title>The Hanging Garden</title><artist>Hypnotique</artist><date>2009-06-18 01:37:52.548532+00</date><upc>634479159961</upc><note>CD Baby id:hypnotique</note></item> <item><id>99994</id><title>Hypnopilot</title><artist>Hypnopilot</artist><date>2009-06-18 01:37:52.49057+00</date><upc>088907200068</upc><note>CD Baby id:hypnopilot</note></item> <item><id>99995</id><title>The Sphinx of Imagination</title><artist>Hypnotica</artist><date>2009-06-18 01:37:52.503809+00</date><upc>634479143427</upc><note>CD Baby id:hypnotica</note></item>

Every file created contains about 100 items to insert as document. The grouping of multiple items has been done to reduce the number of files created.

Step 3: Create the storage

Initialize the storage database

We will use the utility programs of strus to create the storage and insert the documents into the search index.

We have two meta data elements 'date' and 'doclen' we would like to use for meta data restrictions. The meta data used for restrictions are put into the meta data table. We can at any time alter the meta data table or add new elements with the command strusAlterMetaData. But we can also define some meta data within the strusCreate command.

The command to create the storage looks as follows.

strusCreate -s "path=storage; metadata=date UINT32, doclen UINT16"

The first argument describes the properties of the storage created with a semicolon-separated list of configuration assigments. The path argument of it defines the directory the key/value store database based on leveldb will write its files to. The storage configuration string is dependend on the storage and key/value store database we use. All following commands introduced in this tutorial later that refer to a storage will have a configuration string parameter with the same syntax, but depending on the case with different parameters.

With this call the storage has been created in the subdirectory 'storage'. Type

ls storage

and you will see (LevelDB files)

000005.log CURRENT LOCK LOG LOG.old MANIFEST-000004

Step 4: Configure the document analyzer

We will use the standard document segmenter as we will process XML documents. The analyzer configuration describes, what elements will be extracted from the items and how items are defined. It also describes how these items are transformed into the form they are stored. The analyzer configuration defines different sections that describe what type of elements are created. For creating an analyzer configuration we create a file with the extension ".ana" and start to edit it:

touch tutorial.ana vi tutorial.ana

The following sub sections show what we define in this configuration file. The complete analyzer configuration is defined in tutorial.ana. The segmenter used in this tutorial defines XML selection expressions is the abbreviated syntax of XPath with 2 exceptions:

- Tag selections like "/list/item/title" do not select a subtree but just the tag.

- Contect selections like "/list/item/title::text()" are written as "/list/item/title()" (the "::text" is omitted).

Define the items to index (collection units aka documents)

As we saw in the preparation of the documents there are about 100 items inserted into one file. For separating these items as documents to insert we have to define a section document that defines what selection expression defines a document in an input file:

[Document] doc = /list/item;

Define the the search index (retrievable items)

The elements that are subject of retrieval are put into the section [SearchIndex]. We decide to make words in the tags "title","artist" and "note" retrievable.

All of them we tokenize the same way (as words) and we use the snowball stemmer ("stem") followed by a lowercase conversion ("lc") and a diacritical character conversion ("convdia") to normalize them.

Our language we instrument the stemmer and the diacritical characer conversion with is English ("en").

The feature type name we assign to the produced search index features is for all features "word".

[SearchIndex] word = convdia(en):stem(en):lc word /list/item/title(); word = convdia(en):stem(en):lc word /list/item/artist(); word = convdia(en):stem(en):lc word /list/item/note();

Intuitively this configuration is understod if you read every feature assignment from right to left: Select an element, select the tokens from an element selection, normalize the tokens: "lc -> stem -> convdia" and assign them to a feature type "word".

Define the elements the forward index (for summarization)

The elements that are used for summarization are put into the section [ForwardIndex]. We decide to use the retrievable words in the tags "title","artist" and "note" also for summarization to show what query features matched in the document. Because the summary should show the original content of the document without markup, we use a whitespace tokenizer ("split") and no normalizer ("orig") to convert them for insert.

[ForwardIndex] orig = orig split /list/item/title(); orig = orig split /list/item/artist(); orig = orig split /list/item/note();

Intuitively this configuration is understod as the search index definition by reading the processing steps from right to left.

Define the attributes

Now assign some attributes to each document inserted. Attributes are used in the representation of the result. There is one reserved attribute name that is very important for our case. It is the attribute "docid". When defining it, the strusInsert program will define the content of this attribute as document identifier. If you do not define it, the input file path will be used as document identifier. Because the document identifier has to be unique, we have to create one when there are multiple documents grouped in one input file as we did before. For all four elements we will use a tokenization ("content") and normalization ("orig"), that keeps the elements in their original state:

[Attribute] docid = orig content /list/item/id(); title = orig content /list/item/title(); upc = orig content /list/item/upc(); note = orig content /list/item/note();

The definitions of the section attribute is read intuitively from right to left as the elements introduced before with the exception that the leftmost identifier specifies the name of the attribute the value is assigned to.

Define the meta data

As final step we assign the meta data element 'date' we want to use for restrictions but also in the representation of the result. For converting the date into a format we can use as meta data table element we use the 'date2int' normalizer. As start date of the date as integer calculation we chose the year of the invention of the phonograph by Thomas Edison and we define the unit as number of days since then. The input format we deduce by looking at an input sample (bravely assuming here that all dates have the same format):

2009-06-18 01:37:52.503809+00

The tokenization is just forwarding the tag content. Here is the configuration of the date. First we cut away the fraction part of the seconds with a regular expression ('regex') tokenizer. Then we convert the date to a number (of days since 1877):

[MetaData]

date = date2int("d 1877-01-01", "%Y-%m-%d %H:%M:%s") regex("[0-9\-]{8,10} [0-9:]{6,8}") /list/item/date();

The definition is read intuitively from right to left as the elements introduced before with the exception that the leftmost identifier specifies the name of the meta data element the value is assigned to.

Some meta data elements can be defined by aggregation (e.g. counting) of other elements in the analyzed document. Aggregated meta data elements are defined in the section 'Aggregator':

[Aggregator] doclen = count(word);

Check the configuration

The be sure that we got everything right, we now test what we got with one candidate input file. We can do this by calling the program strusAnalyze with our configuration and an input file:

strusAnalyze tutorial.ana doc/2333.xml | less

We have a look at the first document analyzed and see that all went well (Multi part document results of the strusAnalyze program are separated by '-- document ' followed by the identifier assigned to it in the [Document] section):

-- document type name doc search index terms: 1 word 'live' 2 word 'in' 3 word 'milan' 5 word '27' 6 word '9' 7 word '1956' 8 word 'maria' 9 word 'calla' forward index terms: 1 orig 'Live' 2 orig 'in' 3 orig 'Milan' 4 orig '(27,9,1956)' 8 orig 'Maria' 9 orig 'Callas' metadata: date '48527' attributes: docid '233372' title 'Live in Milan (27,9,1956)'

Check single steps of the analyzer configuration

What do we do when something went wrong when checking a document with strusAnalyze ?

Let's assume that our output was empty. There are tools to check the different steps of document analysis. The first program is strusSegment that allows us to check wheter a selection expression matched. Let's take the document title as example:

strusSegment -e '/list/item/title()' doc/2333.xml | head -n 2

The output looks as follows:

Live in Milan (27,9,1956) Greatest Hits

This looks good as expected. The second program for checking analyzer steps is strusAnalyzePhrase. This program allows us to check tokenizers and normalizers used in the configuration. Let's check if words are tokenized and normalized correctly:

strusAnalyzePhrase -n "convdia(en):stem(en):lc" -t "word" 'Live in Milan (27,9,1956)'

This leads to the following output:

'live' 'in' 'milan' '27' '9' '1956'

Step 5: Insert the documents into the storage

We got now the analysis of the documents done. Now we would like to insert the documents into the storage created. The program strusInsert helps us to do that. It has several options to boost the insert process. It depends very much on the collection and the hardware used which parameters are best.

An important option is '-c' ('--commit') that sets the number of documents inserted within one transaction. Because out documents are very small (less than a dozen terms), the default of 1000 is not very reasonable. Better is 50000. For bigger collection you might also consider the option '-t' ('--threads') that allows you to run the insert processes with multiple cores. Be aware that the commit phase is a synchronization point that is a bottleneck for parallelization.

Here is our insert command with the proposed options for this collection:

strusInsert -c 50000 -f 100 -s "path=storage" tutorial.ana doc

that does not use multiple inserter threads (default), commits an open transaction after 50000 documents inserted, preallocates document numbers in ranges and fills the storage in the folder 'storage' with XML documents analyzed with the configuration tutorial.ana. This should output the following 2 lines after roughly a minute or half a minute:

inserted 282291 documents (total 282291), 0 transactions open done

Step 6: Inspect the storage

The following examples use the dump from 29th of June 2015. You might get different results with another dump.

Now as we have inserted all documents we are curious to see if everything went right. There is a program strusInspect that lets us inspect some properties of the storage. We chose the document with the document id '10009'. First we find out what internal document number this docid got:

strusInspect -s "path=storage" docno 10009

we get a number (may vary)

123

Then we do some calls to inspect this document

strusInspect -s "path=storage" attribute docid 123 strusInspect -s "path=storage" content orig 123 strusInspect -s "path=storage" attribute title 123 strusInspect -s "path=storage" pos word danc 123 strusInspect -s "path=storage" ff word danc 123 strusInspect -s "path=storage" df word danc strusInspect -s "path=storage" metatable strusInspect -s "path=storage" metadata date 123

and we get something like

10009 Absolute Let's dance 13 Various Absolute Let's dance 13 4 1 1575 date UInt32 doclen Uint32 48253

If we want to check all the content of a storage, we can use the programs strusCheckStorage and strusCheckInsert.

Step 7: Example query with PHP

In this section we examine the possibility to issue queries in a scripting language. As example we use the PHP bindings to query our strus storage built. A documentation of the PHP interface of strus can be found here.

The PHP scripts running in the webserver context can not directly access the storage files. The strus PHP commands issued are using a proxy implementation of the strus interface that redirect the calls to be executed on a server. This server is implemented as program strusRpcServer. Because we started the image with a shell, the docker CMD starting the strus server was ignored. So we have to start it by hand in the background:

strusRpcServer -s "path=storage" &

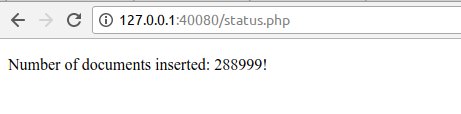

Test execution chain

We start with a simple PHP script that asks the strus storage for the number of documents inserted. We call the script status.php:

<!DOCTYPE html>

<html>

<body>

<?php

try {

// Create the strus context as proxy redirecting the

// commands to the strus server listening on port 7181:

$context = new StrusContext( "localhost:7181" );

// Create the storage client:

$storage = $context->createStorageClient( "" );

echo '<p>';

// Print the number of documents inserted:

echo "Number of documents inserted: " . $storage->nofDocumentsInserted() . "!";

echo '</p>';

}

catch ( Exception $e) {

// Handle possible error:

echo '<p><font color="red">Caught exception: ' . "{$e->getMessage()}</font></p>";

}

?>

</body>

</html>

In order to make it accessible for nginx, we have to copy it to the web servers html pages root directory and give it the right permissions:

cp status.php /usr/share/nginx/html/ chown www-data:www-data /usr/share/nginx/html/status.php chmod +x /usr/share/nginx/html/status.php

Now we can call it via HTTP, say we can enter the following URL into a web browsers address bar. We have specified port 40080 when starting the strus docker container, so we also specify it here:

http://127.0.0.1:40080/status.php

and we get (a bigger number, the image is old):

Issue a query

Now we try to create a simple page showing a ranked list of best matches to a query passed as GET parameters. We call the PHP source file search.php:

<!DOCTYPE html>

<html>

<body>

<?php

// The following helper function will be used to evaluate a query:

function evalQuery( $queryString, $minRank, $maxNofRanks)

{

// We create the strus context as proxy to the strusRpcServer

// started in our docker container listening on port 7181:

$context = new StrusContext( "localhost:7181" );

// We create the storage proxy interface refering to the storage

// served by the server by passing an empty storage configuration:

$storage = $context->createStorageClient( "" );

// We create the analyzer proxy interface:

$analyzer = $context->createQueryAnalyzer();

// We create the query evaluation proxy interface:

$queryeval = $context->createQueryEval();

// Here we define how the query is analyzed. In our example we will introduce

// only one phrase type and call it "qry". The produced feature type "word" matches

// to the feature type in the search index of the document. The tokenizer used

// is the same as for the features in the document ("word"). So is the normalizer

// defined. Note that the execution of the normalizer is read from left to

// right and not as in the analyzer configuration language from right to left.

$analyzer->addElement( "word"/*feat type*/, "qry"/*field type*/, "word"/*tokenizer*/,

["lc", ["stem", "en"], ["convdia", "en"] ] /*normalizer*/);

// Now we define our weighting function, that counts the number of query

// feature matches in the document. Feature references as parameters are

// passed as dictionaries with a single element with key 'feature'.

// Others referencing number or string constants are passed as value:

$queryeval->addWeightingFunction( "tf", ["match" => ["feature" => "word"] ]);

// Next we define what is presented as attribute of the result.

// We would like to see the document identifier and the document title:

$queryeval->addSummarizer( "attribute", [ "name" => "docid" ] );

$queryeval->addSummarizer( "attribute", [ "name" => "title" ] );

$queryeval->addSummarizer( "attribute", [ "name" => "note" ] );

// For the documents to match we have to define a selection expression.

// The selected features will be addressed by the feature set identifier "sel":

$queryeval->addSelectionFeature( "sel");

// Now we have finished the query evaluation scheme definition and we create

// the query to issue. First we define the query object to instantiate:

$query = $queryeval->createQuery( $storage);

// Next we take the query string as phrase and analyze it. In a more complex

// system we would parse the query language first and the elements of the

// query language would be the queryphrases to analyze. In our simple example

// setting, we have just a single phrase query:

$terms = $analyzer->analyzeTermExpression( ["qry", $queryString ]);

if (count( $terms) > 0)

{

// Now we create the query expressions and define the query features.

// The selection feature "sel" will be represented as selection of documents,

// that contain all query features.

// The weighted features "qry" will be the set of terms in the query.

// $selexpr will contain the expression that selects the documents to weight.

// It is represented as array with the identifier of the feature join operator

// "contains" as first element and the structures representing the arguments as

// following elements. "contains" means that the expression selects the documents

// that match all argument expressions.

$selexpr = [ "contains" ];

foreach ($terms as &$term)

{

// Add the term as argument to the select expression:

$selexpr[] = $term;

// Define the features to use for weighting:

$query->addFeature( "qry", $term );

}

// Define the feature to use for document selection:

$query->addFeature( "sel", $selexpr );

}

// At last we define the number of documents to retrieve and the first rank to show:

$query->setMaxNofRanks( $maxNofRanks);

$query->setMinRank( $minRank);

// And we evaluate the query and return it as result:

return $query->evaluate();

}

// Parse the query parameters:

parse_str( getenv('QUERY_STRING'), $_GET);

$queryString = $_GET['q'];

$nofRanks = 20;

$minRank = 0;

if (array_key_exists( 'n', $_GET))

{

$nofRanks = intval( $_GET['n']);

}

if (array_key_exists( 'i', $_GET))

{

$minRank = intval( $_GET['i']);

}

// Call the defined subroutine to evaluate the query:

$results = evalQuery( $queryString, $minRank, $nofRanks);

// Display the result:

echo '<table border=1>';

foreach ($results['ranks'] as $result) {

$docid = '';

$title = '';

$note = '';

foreach ($result['summary'] as $attribute) {

if( strcmp( $attribute['name'], 'title' ) == 0 ) {

$title .= $attribute['value'];

}

if( strcmp( $attribute['name'], 'docid' ) == 0 ) {

$docid .= $attribute['value'];

}

if( strcmp( $attribute['name'], 'note' ) == 0 ) {

$note .= $attribute['value'];

}

}

echo '<tr><td>' . $docid . '</td><td>' . $title . '</td><td>' . $note . '</td></tr>' . "\n";

}

echo '</table>';

?>

</body>

</html>

Now we copy the file into the webservers document area and set the permissions correctly:

cp search.php /usr/share/nginx/html/ chown www-data:www-data /usr/share/nginx/html/search.php chmod +x /usr/share/nginx/html/search.php

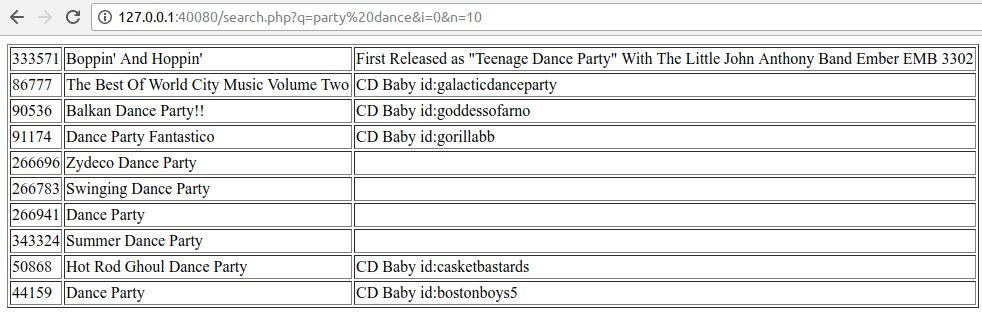

Now we can call the search page via HTTP, say we can enter the following URL into a web browsers address bar as we did it with the status.php page. We search for "party dance", and retrieve the first 10 ranks starting with the best match:

http://127.0.0.1:40080/search.php?q=party%20dance&i=0&n=10

and we get:

.