Microsoft Versus Google: Who is Correct?

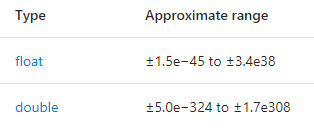

Microsoft Floating Point Range in C# Documentation

Google Floating Point Range Returned from its Search

While their upper floating-point limits agree, Microsoft and Google give different values for the lower limit! What is going on? Only one of them can be correct, right? Make a guess before the answer is revealed!

Answer

Both Microsoft and Google are correct! Google's answer is correct from the perspective of normalized numbers, while Microsoft's lower limit takes subnormal numbers (also known as denormalized numbers) into account.
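To put numbers on the two kinds of lower limit, here is a minimal sketch in Python (chosen only for illustration; the tip itself has no code). It assumes IEEE 754 single precision for `float` in C# and relies on Python's own `float` being an IEEE 754 double, which holds on typical CPython builds. The helper name `float32_from_bits` is hypothetical. The sketch reinterprets hand-picked bit patterns: the smallest normal single has exponent field 1 and a zero fraction, while the smallest subnormal has exponent field 0 and only the lowest fraction bit set.

```python
import math
import struct
import sys

def float32_from_bits(bits: int) -> float:
    """Reinterpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]

# float32: exponent field 1, fraction 0 -> smallest normal, 2**-126
min_normal_f32 = float32_from_bits(0x00800000)
# float32: exponent field 0, fraction 1 -> smallest subnormal, 2**-149
min_subnormal_f32 = float32_from_bits(0x00000001)

# Python's float is an IEEE 754 double on typical builds
min_normal_f64 = sys.float_info.min         # smallest normal double, 2**-1022
min_subnormal_f64 = math.ldexp(1.0, -1074)  # smallest subnormal double, 2**-1074

print(f"float32 lower limit, normal:    {min_normal_f32:.3e}")
print(f"float32 lower limit, subnormal: {min_subnormal_f32:.3e}")
print(f"float64 lower limit, normal:    {min_normal_f64:.3e}")
print(f"float64 lower limit, subnormal: {min_subnormal_f64:.3e}")
```

For single precision the two limits come out at approximately 1.175e-38 (normal) and 1.401e-45 (subnormal); for double precision they are approximately 2.225e-308 and 4.941e-324.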

As you may recall from your computer science school days, the IEEE 754 floating-point format consists of three components: a sign bit, an exponent and a mantissa. The value is obtained by multiplying the mantissa by the base (2) raised to the power of the exponent, with the sign bit deciding whether the result is positive or negative.
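As a minimal sketch, assuming the IEEE 754 single-precision layout (1 sign bit, 8 exponent bits stored with a bias of 127, and 23 stored mantissa bits), the three components can be pulled out of a number like this. `float32_fields` is a hypothetical helper name, and Python's `struct` module is used only to get at the raw 32 bits.

```python
import struct

def float32_fields(x: float) -> tuple:
    """Split x (rounded to single precision) into its raw IEEE 754 bit fields.

    Returns (sign, biased_exponent, fraction):
      sign: 1 bit
      biased_exponent: 8 bits, stored with a bias of 127
      fraction: 23 bits, the stored part of the mantissa
    """
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31
    biased_exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    return sign, biased_exponent, fraction

for value in (1.0, -2.0, 0.5):
    s, e, f = float32_fields(value)
    print(f"{value:>5}: sign={s} exponent={e} (unbiased {e - 127}) fraction={f:023b}")
```

Note that the stored exponent is biased, so 1.0 has an exponent field of 127, which stands for an actual exponent of 0.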

Normal Floating Point

In a normal floating-point number, the mantissa always has an implied leading binary 1 to the left of the binary point. Since this leading 1 is always present, it is not stored in the mantissa.

1.xxxx

Since the digit to the left of the binary point is always 1, how do you store, say, 0.5?

0.5 is obtained by multiplying 1 (the mantissa) by 2 raised to the power of -1 (the exponent).

Note: The mantissa and exponent are stored in base 2, not base 10, so we raise 2 to the power of the exponent.

1 * 2^(-1) = 0.5
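Going the other way, this sketch rebuilds the value of a normal single-precision number from its stored fields, adding back the implied leading 1 and removing the exponent bias of 127. It assumes the IEEE 754 single-precision layout; `decode_normal_float32` is a hypothetical name chosen for illustration.

```python
def decode_normal_float32(sign: int, biased_exponent: int, fraction: int) -> float:
    """Value of a *normal* single-precision number from its bit fields.

    The implied leading 1 is added back to the 23 stored fraction bits,
    and the bias of 127 is subtracted from the stored exponent.
    """
    if not 1 <= biased_exponent <= 254:
        raise ValueError("not a normal number (exponent field is 0 or 255)")
    mantissa = 1 + fraction / 2**23  # 1.xxxx in binary
    exponent = biased_exponent - 127
    return (-1) ** sign * mantissa * 2.0 ** exponent

# 0.5 is stored as sign=0, exponent field=126 (126 - 127 = -1), fraction=0
print(decode_normal_float32(0, 126, 0))  # 1 * 2^(-1), prints 0.5
```

The fraction field is all zeros for 0.5 because the mantissa is exactly the implied 1; only the exponent carries the information.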

Subnormal Floating Point

In a subnormal floating-point number, the mantissa has a leading binary 0 to the left of the binary point.

0.xxxx

But but... didn't I just tell you the leading 1 is always there? So how is a subnormal number defined? To give you a definitive answer, we would have to go through every nook and cranny of the floating-point format, and that, frankly speaking, is too long to fit into this short tip. As promised, the floating-point guide is finally here!
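Without going through the full guide, here is a brief sketch of the rule, assuming IEEE 754 single precision: an exponent field of all zeros marks a subnormal number (or zero), in which case the implied leading digit becomes 0 and the exponent is fixed at -126 (the same as the smallest normal exponent) rather than 0 - 127. The hypothetical `decode_float32` applies that rule by hand and compares its result with Python's `struct` module; infinities and NaNs are deliberately left out.

```python
import struct

def decode_float32(bits: int) -> float:
    """Decode a finite IEEE 754 single-precision bit pattern by hand."""
    sign = bits >> 31
    biased_exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    if biased_exponent == 0xFF:
        raise ValueError("infinity or NaN is not handled in this sketch")
    if biased_exponent == 0:
        # Subnormal (or zero): implied leading 0, exponent fixed at -126
        mantissa = fraction / 2**23  # 0.xxxx in binary
        exponent = -126
    else:
        # Normal: implied leading 1, remove the bias of 127
        mantissa = 1 + fraction / 2**23  # 1.xxxx in binary
        exponent = biased_exponent - 127
    return (-1) ** sign * mantissa * 2.0 ** exponent

def float32_from_bits(bits: int) -> float:
    """Let struct perform the same decoding, for comparison."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]

for bits in (0x00000001, 0x00400000, 0x007FFFFF, 0x00800000):
    print(f"{bits:#010x}: {decode_float32(bits):.9e}  (struct: {float32_from_bits(bits):.9e})")
```

The first pattern, 0x00000001, decodes to 2^-149, the smallest positive subnormal; the last, 0x00800000, is the smallest positive normal number, 2^-126.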
