So far (post 1, post 2, post 3) we have seen what CNTK is, how to use it with Python, and how to create a simple C# .NET application that calls basic CNTK methods. In this blog post, we are going to implement a full C# program that trains a model on the Iris data.

The first step in using CNTK is getting the data and feeding it to the trainer. In the previous post, we prepared the Iris data in the CNTK format, which is suitable for use with MinibatchSource. In order to use MinibatchSource, we need to create two streams:

- one for the features, and

- one for the label

The features and label variables must also be created using the same stream names, so that when the data is accessed through the variables, the trainer knows that it is coming from the file.

Data Preparation

As mentioned above, we are going to use the CNTK MinibatchSource to load the Iris data. Two files are prepared for this demo:

var dataFolder = "Data";

var dataPath = Path.Combine(dataFolder, "trainIris_cntk.txt");

var trainPath = Path.Combine(dataFolder, "testIris_cntk.txt");

var featureStreamName = "features";

var labelsStreamName = "label";

The first path (dataPath) points to the Iris data used for training, and the second path (trainPath, despite its name) points to the data for testing, which will be used in a future post. These two paths are passed as arguments when creating the minibatchSource for training and validation, respectively.

The first step in getting the data from the file is defining the stream configuration. This information is used when the data is extracted from the file. The configuration consists of the number of features and the number of components of the one-hot label vector in the file, together with the names of the features and label streams. The data is attached at the end of the blog post, so the reader can see how it is prepared for the minibatchSource.
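For readers who have not seen the previous post, the sketch below shows how one Iris row could be written in the CNTK text format: each stream appears as `|name` followed by its values, and the label is a one-hot vector. The `ToCtfLine` helper and the mapping of class index 0 to the first species are illustrative assumptions, not part of CNTK or of the original project; the stream names are the ones defined above.

```csharp
using System;
using System.Globalization;
using System.Linq;

public static class CtfSketch
{
    // Hypothetical helper: formats one sample as a CNTK text format line.
    public static string ToCtfLine(double[] features, int classIndex, int numClasses)
    {
        // One-hot vector: 1 at the class position, 0 elsewhere.
        var oneHot = Enumerable.Range(0, numClasses)
                               .Select(i => i == classIndex ? "1" : "0");

        // Invariant culture keeps '.' as the decimal separator on every machine.
        var values = features.Select(f => f.ToString(CultureInfo.InvariantCulture));

        return $"|features {string.Join(" ", values)} |label {string.Join(" ", oneHot)}";
    }

    public static void Main()
    {
        // Four measurements of one flower, assumed to belong to class 0 of 3.
        Console.WriteLine(ToCtfLine(new[] { 5.1, 3.5, 1.4, 0.2 }, 0, 3));
        // prints "|features 5.1 3.5 1.4 0.2 |label 1 0 0"
    }
}
```

The four values after `|features` and the three values after `|label` correspond to the two dimensions passed to the stream configuration.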

The following code defines the stream configuration for the Iris data set.

var streamConfig = new StreamConfiguration[]

{

new StreamConfiguration(featureStreamName, inputDim),

new StreamConfiguration(labelsStreamName, numOutputClasses)

};

Also, the features and label variables must be created with the stream names defined above.

var feature = Variable.InputVariable(new NDShape(1,inputDim), DataType.Float, featureStreamName);

var label = Variable.InputVariable(new NDShape(1, numOutputClasses), DataType.Float, labelsStreamName);

Now the input and output variables are connected with the data from the file, and the minibatchSource can handle them.

Creating Feed Forward Neural Network Model

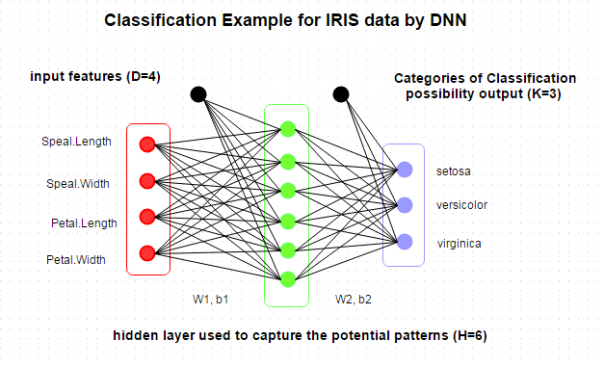

Once we have defined the streams and variables, we can define the network model. CNTK is implemented so that you can define any number of hidden layers with any activation function. For this demo, we are going to create a simple feed forward neural network with one hidden layer. The picture above shows the NN model.

In order to implement the above NN model, we need to implement three methods:

- static Function applyActivationFunction(Function layer, Activation actFun)

- static Function simpleLayer(Function input, int outputDim, DeviceDescriptor device)

- static Function createFFNN(Variable input, int hiddenLayerCount, int hiddenDim, int outputDim, Activation activation, string modelName, DeviceDescriptor device)

The first method simply applies the specified activation function to the passed layer. The method is very simple and should look like this:

static Function applyActivationFunction(Function layer, Activation actFun)

{

switch (actFun)

{

default:

case Activation.None:

return layer;

case Activation.ReLU:

return CNTKLib.ReLU(layer);

case Activation.Sigmoid:

return CNTKLib.Sigmoid(layer);

case Activation.Tanh:

return CNTKLib.Tanh(layer);

}

}

public enum Activation

{

None,

ReLU,

Sigmoid,

Tanh

}

The method takes the layer as an argument and returns the layer with the activation function applied.
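To make the three activation functions concrete, here is a small sketch of what each of them computes for a single number. It is plain C# and does not use CNTK; the `ActivationSketch` class and its `Kind` enum are hypothetical names that only mirror the `Activation` enum above. CNTK applies the same formulas to every element of the layer output.

```csharp
using System;

public static class ActivationSketch
{
    // Illustrative counterpart of the Activation enum from the article.
    public enum Kind { None, ReLU, Sigmoid, Tanh }

    // Applies the chosen activation to one scalar value.
    public static double Apply(double x, Kind kind)
    {
        switch (kind)
        {
            case Kind.ReLU:    return Math.Max(0.0, x);               // negative values become 0
            case Kind.Sigmoid: return 1.0 / (1.0 + Math.Exp(-x));     // squashes into (0, 1)
            case Kind.Tanh:    return Math.Tanh(x);                   // squashes into (-1, 1)
            default:           return x;                              // None: value passes through
        }
    }

    public static void Main()
    {
        foreach (var x in new[] { -1.0, 0.0, 1.0 })
        {
            Console.WriteLine(
                $"x = {x}: ReLU = {Apply(x, Kind.ReLU)}, " +
                $"Sigmoid = {Apply(x, Kind.Sigmoid)}, Tanh = {Apply(x, Kind.Tanh)}");
        }
    }
}
```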

The next method creates a simple fully connected layer with a weight matrix and a bias vector. The method is shown in the following listing:

static Function simpleLayer(Function input, int outputDim, DeviceDescriptor device)

{

var glorotInit = CNTKLib.GlorotUniformInitializer(

CNTKLib.DefaultParamInitScale,

CNTKLib.SentinelValueForInferParamInitRank,

CNTKLib.SentinelValueForInferParamInitRank, 1);

var inputVar = (Variable)input;

var shape = new int[] { outputDim, inputVar.Shape[0] };

var weightParam = new Parameter(shape, DataType.Float, glorotInit, device, "w");

var biasParam = new Parameter(new NDShape(1,outputDim), 0, device, "b");

return CNTKLib.Times(weightParam, input) + biasParam;

}

After the parameters are initialized, the Function object is created from the number of output components and the previous layer or the input variable. Chaining layers this way, where the output of one function becomes the input of the next, lets the user build very complex NN models.
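The arithmetic behind such a layer is a matrix-vector product plus a bias. The following sketch shows that computation with plain arrays, assuming a weight matrix of shape [outputDim, inputDim] as in the listing above. The `DenseLayerSketch` class is a hypothetical illustration and is not how CNTK evaluates the layer internally.

```csharp
using System;

public static class DenseLayerSketch
{
    // Computes output = weights * input + bias.
    // weights has shape [outputDim, inputDim]; bias has length outputDim.
    public static double[] Forward(double[,] weights, double[] bias, double[] input)
    {
        int outputDim = weights.GetLength(0);
        int inputDim = weights.GetLength(1);

        if (input.Length != inputDim || bias.Length != outputDim)
            throw new ArgumentException("Shapes of weights, bias and input do not match.");

        var output = new double[outputDim];
        for (int o = 0; o < outputDim; o++)
        {
            double sum = bias[o];
            for (int i = 0; i < inputDim; i++)
                sum += weights[o, i] * input[i];
            output[o] = sum;
        }
        return output;
    }

    public static void Main()
    {
        var weights = new double[,] { { 1, 2 }, { 3, 4 } };
        var bias = new[] { 0.5, -0.5 };
        var input = new[] { 1.0, 1.0 };

        // 1*1 + 2*1 + 0.5 = 3.5 and 3*1 + 4*1 - 0.5 = 6.5
        Console.WriteLine(string.Join(", ", Forward(weights, bias, input)));
    }
}
```

Feeding the output of one `Forward` call into the next one, with an activation in between, is the plain-array equivalent of stacking layers.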

The last method performs the layer creation. It is called from the main method and can create an arbitrary feed forward neural network from the provided parameters.

static Function createFFNN(Variable input, int hiddenLayerCount,

int hiddenDim, int outputDim, Activation activation, string modelName, DeviceDescriptor device)

{

// The first hidden layer takes the input variable.

Function h = simpleLayer(input, hiddenDim, device);

h = applyActivationFunction(h, activation);

// Each remaining hidden layer is chained on top of the previous one.

for (int i = 1; i < hiddenLayerCount; i++)

{

h = simpleLayer(h, hiddenDim, device);

h = applyActivationFunction(h, activation);

}

var r = simpleLayer(h, outputDim, device);

r.SetName(modelName);

return r;

}

Now that we have implemented the methods for NN model creation, the next step is the training implementation.

The training process is iterative: the minibatchSource feeds the trainer in each iteration.

The loss and evaluation functions are calculated in each iteration and shown as the iteration progress. The progress is printed by a separate method, which looks like the following code listing:

private static void printTrainingProgress

(Trainer trainer, int minibatchIdx, int outputFrequencyInMinibatches)

{

if ((minibatchIdx % outputFrequencyInMinibatches) == 0 &&

trainer.PreviousMinibatchSampleCount() != 0)

{

float trainLossValue = (float)trainer.PreviousMinibatchLossAverage();

float evaluationValue = (float)trainer.PreviousMinibatchEvaluationAverage();

Console.WriteLine($"Minibatch: {minibatchIdx} CrossEntropyLoss = {trainLossValue}, EvaluationCriterion = {evaluationValue}");

}

}

During the iterations, the value of the loss function should generally decrease, indicating that the model is becoming better and better. Once the iteration process is completed, the accuracy of the model on the training data is shown.
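As a rough illustration of the two numbers printed during training, the sketch below computes a softmax cross-entropy loss and a classification error for a single sample with plain arrays. It assumes a one-hot label and raw (pre-softmax) network outputs. The `LossSketch` class is a hypothetical stand-in that follows the standard formulas and is not the CNTK implementation.

```csharp
using System;
using System.Linq;

public static class LossSketch
{
    // Cross entropy between softmax(logits) and a one-hot label.
    // For a one-hot label this equals logSumExp(logits) - logit of the true class.
    public static double CrossEntropyWithSoftmax(double[] logits, double[] oneHotLabel)
    {
        // Subtracting the maximum keeps Math.Exp from overflowing.
        double max = logits.Max();
        double logSumExp = max + Math.Log(logits.Sum(z => Math.Exp(z - max)));
        double trueClassLogit = logits.Zip(oneHotLabel, (z, y) => z * y).Sum();
        return logSumExp - trueClassLogit;
    }

    // 0 if the largest output is at the labeled class, 1 otherwise.
    public static double ClassificationError(double[] logits, double[] oneHotLabel)
    {
        return ArgMax(logits) == ArgMax(oneHotLabel) ? 0.0 : 1.0;
    }

    private static int ArgMax(double[] values)
    {
        int best = 0;
        for (int i = 1; i < values.Length; i++)
            if (values[i] > values[best]) best = i;
        return best;
    }

    public static void Main()
    {
        var logits = new[] { 2.0, 1.0, 0.1 };
        var label = new[] { 1.0, 0.0, 0.0 };

        double loss = CrossEntropyWithSoftmax(logits, label);
        double error = ClassificationError(logits, label);

        // Accuracy is derived from the error in the same way as in the training summary.
        Console.WriteLine($"loss = {loss}, error = {error}, accuracy = {(1.0 - error) * 100}%");
    }
}
```

Averaging these per-sample values over a minibatch gives quantities comparable to the loss and evaluation averages reported by the trainer.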

Full Program Implementation

The following listing shows the complete source code for training on the Iris data set using CNTK. At the beginning, several variables are defined that describe the structure of the NN model, such as the number of input and output variables. The main method also implements the iteration process, in which the minibatchSource handles the data by passing the relevant part of it to the trainer. More about this will follow in a separate blog post. Once the iteration process is completed, the model result is shown and the program terminates.

public static void TrainIris(DeviceDescriptor device)

{

var dataFolder = "";

var dataPath = Path.Combine(dataFolder, "iris_with_hot_vector.csv");

var trainPath = Path.Combine(dataFolder, "iris_with_hot_vector_test.csv");

var featureStreamName = "features";

var labelsStreamName = "labels";

int inputDim = 4;

int numOutputClasses = 3;

int numHiddenLayers = 1;

int hidenLayerDim = 6;

uint sampleSize = 130;

var streamConfig = new StreamConfiguration[]

{

new StreamConfiguration(featureStreamName, inputDim),

new StreamConfiguration(labelsStreamName, numOutputClasses)

};

var feature = Variable.InputVariable(new NDShape(1, inputDim), DataType.Float, featureStreamName);

var label = Variable.InputVariable

(new NDShape(1, numOutputClasses), DataType.Float, labelsStreamName);

var ffnn_model = createFFNN(feature, numHiddenLayers,

hidenLayerDim, numOutputClasses, Activation.Tanh, "IrisNNModel", device);

var trainingLoss = CNTKLib.CrossEntropyWithSoftmax(new Variable(ffnn_model), label, "lossFunction");

var classError = CNTKLib.ClassificationError(new Variable(ffnn_model), label, "classificationError");

var minibatchSource = MinibatchSource.TextFormatMinibatchSource(

dataPath, streamConfig, MinibatchSource.InfinitelyRepeat, true);

var featureStreamInfo = minibatchSource.StreamInfo(featureStreamName);

var labelStreamInfo = minibatchSource.StreamInfo(labelsStreamName);

var learningRatePerSample = new TrainingParameterScheduleDouble(0.001125, 1);

var ll = Learner.SGDLearner(ffnn_model.Parameters(), learningRatePerSample);

var trainer = Trainer.CreateTrainer(ffnn_model, trainingLoss, classError, new Learner[] { ll });

int epochs = 800;

int i = 0;

// Train until the requested number of sweeps over the data is done.

while (epochs > 0)

{

var minibatchData = minibatchSource.GetNextMinibatch(sampleSize, device);

var arguments = new Dictionary<Variable, MinibatchData>

{

{ feature, minibatchData[featureStreamInfo] },

{ label, minibatchData[labelStreamInfo] }

};

trainer.TrainMinibatch(arguments, device);

printTrainingProgress(trainer, i++, 50);

// MiniBatchDataIsSweepEnd is not listed in this post; see the full source code.

if (Helper.MiniBatchDataIsSweepEnd(minibatchData.Values))

{

epochs--;

}

}

double acc = Math.Round((1.0 - trainer.PreviousMinibatchEvaluationAverage()) * 100, 2);

Console.WriteLine($"------TRAINING SUMMARY--------");

Console.WriteLine($"The model trained with the accuracy {acc}%");

}

The full source code with formatted Iris data set for training can be found here.

Bahrudin Hrnjica holds a Ph.D. degree in Technical Science/Engineering from University in Bihać.

Besides teaching at the University, he has been in the software industry for more than two decades, focusing on development technologies such as .NET, Visual Studio, and Desktop/Web/Cloud solutions.

He works on the development and application of different ML algorithms, and has more than 10 years of experience in the development of ML-oriented solutions and modeling. His fields of interest also include the development of predictive models with ML.NET and Keras, and he actively develops two ML-based .NET open source projects: GPdotNET, a genetic programming tool, and ANNdotNET, a deep learning tool on the .NET platform. He works in multidisciplinary teams with the mission of optimizing and selecting ML algorithms to build ML models.

He is the author of several books and many online articles, writes a blog at http://bhrnjica.net, regularly holds lectures at local and regional conferences, User groups and Code Camp gatherings, and is also the founder of the Bihac Developer Meetup Group. Microsoft recognized his work and awarded him the prestigious Microsoft MVP title for the first time in 2011, which he still holds today.
