Problem Description

The main aim of this work is an extraction of a typical workload in a production SQL database. By a typical workload, we understand a set of parametrized SQL statements (JDD, JDM, SELECT or function calls) that are processed in the database during the monitoring period. We would like to collect such set of SQL statements together with several parameter value examples and approximate number of invocations that occurred during the monitoring, however, we would like to avoid SQL statement duplication. In other words, if the same SQL statement is invoked million times only with subtle difference in parameter values, we would like to store this SQL command only once in our typical workload storage with a few parameter value examples. Typical workload can be useful for unit testing purposes, SQL command performance tuning and to create an overview about the range of different SQL statements used in the production and their frequency.

There are tools in SQL Server that allow to collect SQL statements for a certain period. The problem of these tools is that the amount of data recorded in this way may not be small and that the recorded data requires further processing. Also, work with these tools may not be easy. This article describes a tool that solves the problem described above. This tool can automatically collect information about executed SQL statements and it does their analysis and stores the required information in a custom SQL database, avoiding unnecessary or duplicate SQL statements being stored.

Requirements

- Management Studio 2012 or lower

- Login to database with sysadmin server role

Solution Preview

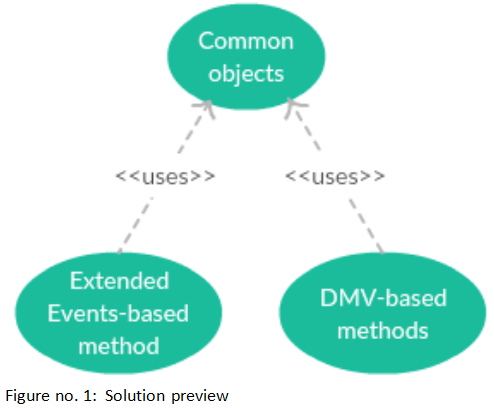

Figure number 1 shows a preview of the solution. Later in this article, we propose two solutions:

- The first is based on a usage of extended events (see #Extended Events), and

- The second is based on data management views (DMV) (see #DMV).

For easier work, WorkLoad Add-In was created, which is described in #WorkLoad Add-In.

Methods Used to Solve Problem

WorkLoad Add-In for SQL Server Management Studio (2012 or Lower)

We have created SQL Server Management Studio Add-In which allows simple set up of the methods proposed in the following chapters. The WorkLoad Add-In is described in more detail in #WorkLoad Add-In.

Common Objects

We use the name “Common objects” for all parts of the solution that are shared by both methods. This includes CLR stored procedures PG_WorkLoad, PG_DeleteFile, and CLR FN_IsFileExists.

PG_WorkLoad – CLR stored procedure that processes the captured SQL statements described in #Processing SQL statements. The input parameter is the SQL statement text that needs to be processed. After processing the SQL statement, processed SQL statement is stored in the typical workload tables described in #Workload storage.

The main function of the module is to perform a SQL statement normalization. Normalization of a SQL statement is a process in which the SQL statement is transformed into a standardized form in which two SQL statements will be identical only if they have the same semantics. This removes differences between SQL statements that have been generated by different syntaxes of the same semantics. This module is created as a CLR stored procedure called "PG_WorkLoad". When creating the module, I used the trial version of the "gudusoft.gsqlparser.dll" library, which can be downloaded from the official site. This version of the library has its limitations. If anyone needs to use all of the library's features, you can purchase a license at http://www.sqlparser.com/.

In Figure no. 2, we can see tables that are used as our workload storage. These tables are used as a main storage unit during the monitoring, however, we can export/import data into/from an XML file. The "TB_Query" table is used to store unique SQL statements and their information such as SQL statement text, number of occurrences, type of SQL statement, number of parameters and creation datetime. The "TB_Param" table represents the SQL statement parameters and information such as value of the parameter and order in the SQL statement. The last table "TB_QueryParam" represents the individual processing of the SQL statement with creation datetime. It is possible to obtain for each SQL statement its parameters within one processing step.

Typical workload stored in tables can be exported / imported from / into an XML file. In the listing below, you can see the XML format.

="1.0"="utf-8"

<workload>

<sql count="1" id="1" paramcount="1"

text="SELECT * FROM AUKCE T0 WHERE T0.ID = @VAR1" type="dql">

<values>

<p>10</p>

</values>

</sql>

<sql count="1" id="2" paramcount="0"

text="SELECT @@TRANCOUNT" type="dql">

<sql count="2" id="3" paramcount="1"

text="SELECT T0.NAME

FROM SYS.DATABASE_PRINCIPALS T0 WHERE T0.TYPE = @VAR1"

type="dql">

<values>

<p>'A'</p>

</values>

<values>

<p>'R'</p>

</values>

</sql>

</workload>

FN_IsFileExists – CLR stored function that checks whether the file exists on the disk. The input parameter is the path to the file to be checked.PG_DeleteFile – CLR stored procedure that deletes the file from the disk. The input parameter is the path to the file that needs to be deleted.

This method uses several techniques suitably combined to capture a typical workload. Figure no. 3 contains the main logic of the method.

- Extended Events – Extended Events are used to capture SQL statements that are executed in SQL Server. As soon as an SQL statement is executed, Extended Events stores an information about it into a file.

PG_ExtendedEventsJob – The procedure analyses SQL statements read from the Extended Event file and it stores them in the tables if necessary.- Job – Job runs the stored procedure

PG_ExtendedEventsJob on a regular basis. During installation, you can choose your own job name. The created job is set to run every minute. - SQL Plan Cache – SQL Plan Cache stores information about query plans of the SQL statements processed in a SQL database. We use it to detect a situation where no new SQL commands has been processed. In such case, Extended Event file analysis is unnecessary.

- TB_SQLHash – The table into which the hash values of the SQL statements obtained from the SQL Plan Cache are stored.

- File Query1.xel and Query2.xel – Extended Events writes raw SQL commands into this files.

Compared to the Extended Event-based method, this solution is simpler and less resource-intensive because we avoid reading and analysis of a potentially large number of SQL statements stored in a file. On the other hand, the disadvantage is the longer timeframe that is needed to capture the same amount of different SQL statements. It is possible to use two different DMVs as a SQL statement source:

sys.dm_exec_requests – It is a view in which the currently processed SQL statements are stored.- SQL Plan Cache (see previous chapter)

The selection of the DMV is driven by a value of @FromCache variable and we can easily select the source DMV in the Add-In described later. Figure no. 4 depicts the logic used in the DMV based method.

PG_RunningQueriesJob – The procedure gathers the SQL statements from a DMV. We use the DMV of currently processed SQL statements (sys.dm_exec_requests) or a DMV corresponding to the SQL Plan Cache.Job – Job runs the stored procedure “PG_ExtendedEventsJob” on a regular basis. During installation, you can choose your own job name. Job is invoked every 10 seconds and every 5 minutes if we use sys.dm_exec_requests and SQL Plan Cache, respectively.tmpStatementTab - Temporary table for storing SQL statements obtained from the DMV.

Method Based on Currently Processed SQL Statements (sys.dm_exec_requests)

In this case, we describe the "PG_RunningQueriesJob" procedure that reads sys.dm_exec_requests. The SQL statements from the DMV are stored in the "tmpStatementTab" temporary table created within the stored procedure. There is a very small chance that we hit new SQL statements in one moment. Due to this fact, we repeat the DVM read several times. This will increase the probability that we find a new SQL statement and we converge to the result of the Extended Event-based method faster. The number of repetition is set to 30. If the success rate is still low, the user can increase the number of repetitions manually. The procedure then analyzes every SQL statement in the temporary using the "PG_WorkLoad" CLR procedure a store new SQL statements in our workload storage.

Method Based on SQL Server Plan Cache

PG_RunningQueriesJob" procedure has very similar logic if we read the SQL statements from the SQL Server Plan Cache. The main difference is that there is no need to repeat the read from the Plan Cache. Therefore, the procedure only populates the temporary table, process the analysis using the "PG_WorkLoad" CLR procedure and then store new SQL statements. Another difference is that SQL Server Plan Cache usually stores the SQL commands parametrized, therefore, we do not have the SQL statement values in this case in the workload storage.

Workload Add-In installation application was created to simplify the process of Add-in installation. It is necessary to say that it is possible to execute the scripts that are creating and manipulating Extended Event-based method and the DMV-based methods without installing WorkLoad Add-In, however, it is much easier to do it with Workload Add-In. Scripts are located in a folder installer/WorkLoadAddin/data.

Installer is a WinForms application with GUI. Installation has the following steps:

Step 1

The user has to allow the User Account Control (UAC) as a first step, otherwise the installation application cannot continue. UAC is necessary because we need to write into the "Program Files" folder that requires administrator rights. Then the user see the first window and If he wants to proceed, then he needs to click "Next".

Step 2

In this step, you can select the installation directory, and SSMS Add-Ins directory that are run when you start SQL Server Management Studio. The installation directory is by default set to "C:\Program Files (x86)\WorkLoadAddin” since this path is implicit for any Windows installation.

This path is default set as "C:\ProgramData\Microsoft\MSEnvShared\Addins". Here are all XML files of all Add-Ins used by SQL Server Management Studio. Click "Next" to continue to the step 3.

Step 3

All files that are used by WorkLoad Add-In are copied to the "WorkLoadAddin" folder located in "Program Files". XML file is copied to the "C:\ProgramData\Microsoft\MSEnvShared\Addins" and at the end, the WorkLoad Add-In configuration file is copied to the path "C:\Users\UserName\AppData\Local\WorkLoadAddin". Once the copy is completed and there is no error, the WorkLoad Add-In installation finishes.

Uninstall

Step 1

The uninstaller is stored in the installation path by default in "C:\Program Files (x86)\WorkLoadAddin\Uninstall.exe" or where the WorkLoad Add-In is installed.

Once the uninstallation is started, the UAC will be displayed to the user again and it has to be confirmed by the user to continue. When UAC is confirmed, a window appears in which the option to uninstall the application is selected. Clicking the "Next" button will show the user whether he really wants to uninstall.

Step 2

In this step, WorkLoad Add-In is uninstalled. Uninstalling means removing the WorkLoad Add-In from a folder that contains all the Add-Ins for SQL Server Management Studio. Next, deleting configuration settings for the WorkLoad Add-In. Finally, deleting the folder in "Program Files". After deleting all of the above files and folders, the uninstallation finishes.

After WorkLoad Add-In uninstall in SQL Server Management Studio, you need to restart SQL Server Management Studio. If you do not restart it and click "WorkLoad" in the "Tools" tab will display an error message indicating that such Add-In no longer exists.

Working with WorkLoad Add-In

WorkLoad Add-In can be found in the "Tools" menu after installation under "WorkLoad Add-In Settings" name. WorkLoad Add-In window can be seen in figure no. 5.

Let us explain in the following sections the functions that are available in the Workload add-in. We start with functions that are shared by all methods in Section called Common functions and in the subsequent sections, we describe the functionalities that are specific for each method.

Common Functions

Install/Uninstall "Common Objects"

Installation of "Common objects" is done using the "Install" button, which is located in the "Common objects" section (see figure no. 5). This installation executes "CreatePermissionAndAssembliesScript" script to enable permissions to work with CLR stored procedures, and to create assemblies to work with CLR stored procedures in SQL database. It also executes the "CreateTableScript" script to create all tables for store typical workload. Finally, "CreateCLRObjectScript" script is executed to create CLR stored procedures and functions. All scripts are executed in one transaction. If an error occurs during the transaction, all changes will be cancelled for the entire transaction by rollback, and error that occurred will be shown to user. The state in Common objects section shown in figure no. 5 indicates whether the installation of Common objects is complete or not. The Common objects has to be installed in order to run the specific methods (Extended Event-based or DMV-based).

Uninstallation is similar to installation. The only change is in the names of the scripts that are being executed. During the installation, the scripts were called "Create", while scripts with the prefix "Delete" are called during the uninstallation. The uninstall button is shown in figure no. 5.

Connection Test

Using this test, you can determine whether the login data entered in the WorkLoad Add-In windows for a given SQL database are correct. The button for connection functionality test is shown in figure no. 5.

Check the Functionality of Earlier Installations

The WorkLoad Add-In settings are saved in the configuration file. As a result, after restarting, everything is set as it was before you finished working with WorkLoad Add-In. After WorkLoad Add-In start, it is possible to determine which installations are currently installed by the configuration file. According to this, you can see installation status as shown in figure no. 5. However, someone can change the SQL database manually and the WorkLoad Add-In is not aware of the changes. For example, changes means manual renaming name of job, Extended Event, stored procedures, also changes in our stored procedures and so on. Therefore, the Add-in then displays information that the Extended Event-based method is installed, although, it may not be true. Each of the described installations has its own script stored in the WorkLoad Add-In data. In this script, SQL statements are used to check whether the method is installed or not. If a check fails, then an error with code will occur. This code, together with the message, is then displayed to the user. Checking for all methods is performed when the WorkLoad Add-In is started.

Export into File

You can export SQL Server typical workload from a SQL database to an XML file. The export button is shown in figure no. 5.

The XML file must have a specific structure to properly store the SQL Server typical workload. How XML file structure looks like can be seen in [Listing No.1]. For export to an XML file, it is necessary to have "Common Objects" installed.

Import from File

You can import SQL Server typical workload from an XML file into a SQL database. Import works on the principle of reading an XML file with SQL Server typical workload and saving it to the SQL database. The import button is shown in figure no. 5. Before the SQL statement is saved, it is checked whether the SQL statement already exists in the SQL database. If the SQL statement already exists, only the parameters of this SQL statement are inserted. However, if the SQL statement does not exist, a new SQL statement is inserted with its parameters. The XML document structure must follow the structure depicted in [Listing No. 5]. If the structure is not correct, an error occurs and an error message is shown to the user.

Extended Events Functions

Figure No. 6 shows the details of the Workload Add-in that is specific for the Extended Events method. Clearly, there is a combobox where we select the specific method that we want to configure and control (Extended Events in this case). We install this method by clicking on the "Install method" button. The scripts "CreatePG _ExtendedEventsJob", "CreateExtendedEventsScript" and "CreateExtendedEventMethodJobScript" are executed during the installation as a one transaction. If an error occurs, the rollback is executed and error is shown. It is possible to select the path of the Extended Event file before the installation. It is also possible to specify the name of the job as well as the Extended Event itself. Once installed, it is no longer possible to change the path or the Extended Event name. Once the installation is complete, you can use the buttons to run a job and also capture SQL statements using Extended Events.

Enabling/disabling job is by clicking on button “Enable” (see figure no. 6).

Start/stop capture typical workload into file is by clicking on button “Start” (see figure no. 6).

These two buttons are here to be able to capture SQL statements using Extended Events into file, but do not perform the job analysis immediately, but leave it for later.

Uninstallation is done by clicking on the “Uninstall method” button (see figure no. 6).

DMV Functions

Installation of the DMV method is performed in the same way as the previous installation. The only difference is that we need to select the "2. Method (DVM)" option in the combobox (see figure no. 7). It is possible to choose a job name and also whether we want to use a currently running SQL statements or SQL Server Plan Cache as main source of SQL statements. The scripts "CreatePG _RunningQueriesJob" and "CreateRunningQueriesMethodJobScript" are executed during the installation as a one transaction. Once method is installed, we can only enable/disable this method or uninstall it.

Testing

Let us describe a performance testing that was conducted to show a CPU overhead that can occur if someone employs our monitoring methods. Clearly, the results can be different depending on a database workload, however, we believe that these results give a good picture about a typical monitoring overhead. We do this test since we are aware that performance is important in a production and we want to show that we tuned our solution carefully and the overhead of our methods is not significant.

Testing was performed on a notebook with a 2.0 GHz Intel Core i3 processor with an integrated graphics card, 8 GB RAM and 1 000 GB HDD. Microsoft Windows 10 operating system is installed on the notebook. Microsoft SQL Server 2016 Developer Edition (version 13.0.1601.5) that contains the SQL Agent is used as the database environment. Finally, Microsoft SQL Server Management Studio 2012 (version 11.0.2100.60) was used to access the database in SQL Server. Windows Performance Monitor, which is part of the Windows operating system, is used to monitor system resources. Counter called "% Processor Time" was used to capture CPU usage.

A console application has been used to make workload, which sends 11 different SQL statements to the SQL database. Each method test was performed 5 times and each measurement lasted 10 min. During each measurement was send 76,4 requests per second. The average values were obtained from each run and total average was calculated for each of methods as it is shown in figure no. 8.

If we compare the results of the methods shown in figure no. 8, we can observe that the differences in CPU utilization are not as significant.

Conclusion

Finally, we would like to say that even though the Extended Events-based method has a slightly higher overhead when compared to others, it captures all types of SQL statements during monitoring. On the other hand, DMV-based methods do not capture all of them, but it may be useful if SQL statements typically run for a longer time. Moreover, the SQL Plan Cache method usually does not offer parameter values.

It implies that each of these methods has its advantages and disadvantages and each method is suitable for another situation. The choice of these methods depends on the particular case of use. The main advantages of all described methods is a simple installation and use, as there is a WorkLoad Add-In that allows setup and all methods have a minimum memory space requirements.

Authors

This article was created by Bc. Tomáš Bauer and professor Ing. Radim Baca, Ph.D.

This member has not yet provided a Biography. Assume it's interesting and varied, and probably something to do with programming.

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin