Introduction

Today, I want to discuss the "caching" topic and show you my implementation of a generic memory cache, Cache<K,T>. You will find my full implementation documented here and as download, including some simple unit tests for demonstration.

Update: The original article had the class named Cache<T> with only generic contents of the cache. It has been extended, to allow Cache<K,T> to allow you to specify the type of the key of the cache too. Cache<T> is still available, with no change! It just derives from Cache<K,T> as:

public class Cache<T> : Cache<string, T>

Update 2: With credits to @Kochise, I added two new methods to the Cache class:

Clear() and AddOrUpdate(K, T) with a default lifetime of Timeout.Infinite. Thanks for those ideas, Kochise :). This brought me to the idea, to implement a Remove() method that takes a keyPattern as a Predicate<K>, so you can remove multiple entries from the cache with a single call. You can find detailed explanations below in the "Using the Code" section.

Both zip files in the download have been updated. The LoadTest had a strange behavior in Release mode due to optimization. This is fixed. I also updated the unit tests contained in Cache.zip to call the new Clear() method and the new Remove() method.

I wrote my first object cache back in 2005, when .NET 2.0 arrived. This is (more or less :)) still the same class, it grew up over the years, uses Threading Timers (that utilize the ThreadPool) now, is thread-safe and recently I updated it to C# 6.0 syntax.

The reason why I write this down today for you is a discussion I had with a fellow colleague, why I don't use the MemoryCache class, which ships with .NET Framework anyway. Well, one reason for sure is, that I "just got used to the interface of my own cache", but another one (and at least as important as my first reason) is: MemoryCache has an ugly interface. I don't like it.

What I like, when I see a class? A simple, straightforward interface. When I want to cache an object, or better: an item of type T, I want to see:

- How can I add/update it and for how long is it stored?

- How can I access (Get) it?

- How can I remove it?

- And sometimes: Does it already exist?

What I do *not* want to see:

- Use a

CacheItemPolicy, DateTimeOffsets, different strategies, expirations,... tons of sub-classes and sub-structures - A documentation several pages long on the website.

Unfortunately, all this happens, when you look up the MemoryCache class on MSDN (https://msdn.microsoft.com/de-de/library/system.runtime.caching.memorycache(v=vs.110).aspx).

If you think the same way than me, if you prefer a simple and easy-to-use interface without distractions, you probably will like this class.

I have never been a fan of the MemoryCache because it puts lots of overhead on my shoulders. Things I do not want to think about.

Next thing, I didn't like about MemoryCache: It is not generic. It works with object.

Background

Caching in memory makes sense in many situations. Be it results of database queries that might run in high frequency, or be it reflection, and many more.

Especially reflection is such a thing... You load an Assembly, get the Types, the Interfaces, maybe look for Attributes, find some MethodInfos that you want to Invoke later... Why scan through the Assembly over and over again? Just keep the MethodInfo you want to invoke in a cache, give it a unique Key and then call it, whenever you need it. Eliminate the Reflection cost. Do it only once. Yes, of course you could create a private Dictionary and store your MethodInfo objects there. But you then have to take care about thread safety, handle exist/replace and all those things, that this Cache implementation already does for you. So, from my point of view, it's fine to use the "Cache" for storing item<T> for the process lifetime, because all the surrounding noise is handled and encapsulated there for you.

Next good option is the local caching of database query results. A Cache<DataTable> doesn't make much sense, you say? Because the DB Server has its own cache anyway? Well, right, it has. And it does caching. But in a larger scale, say, 2000 clients or even more (I am working on a 50k client system in my job at the moment), it makes a huge difference for the DB cluster. 50k queries that use the DB cache still make 50k requests to the DB cluster! If most of them can be handled by a local Cache<DataTable>, you take away a real pain, a huge load from the DB.

So your local client can refresh "at will", every second or every 3 seconds to get some status - if you are in a polling scenario - and you can define in your business logic or data layer, far away from the GUI client layer, how often the client will be presented new data, by just setting a single value: the cache time. And all this happens local, the DB doesn't know anything about it and doesn't need to care.

Many of my applications utilize many different instances of this Cache, some keeping entries only for a few seconds, some keeping entries for the lifetime of the process (many Reflection scenarios do this).

So, let's take a look at my generic cache, shall we?

Using the Code

As I said at the beginning of this article, I like a clean and straightforward interface, when I look at a class.

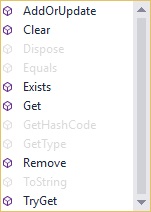

The Cache<T> class looks like this:

It is reduced to what you really *need*: Add(Update), (Try)Get, Remove, Exist and Clear. For convenience, there is also an Indexer implemented, so you can Get() a Cache-Entry with:

cache.Get(key);

or

cache[key];

whatever you prefer more.

Let's start with the basics of the Cache class. The downloadable file contains three classes:

Cache : Cache<object>

Cache<T> : Cache<string, T>

Cache<K,T>

The non-generic Cache class just implements a plain object cache if you need to store objects of different types in a single cache instance.

A personal recommendation: If you only have 2 or 3 different types you want to cache (which will be true for the vast majority of use cases), it is a better and cleaner approach to create 2 or 3 Cache<T> instances than a single Cache<object>. There are no background threads involved, the timers use ThreadPool threads when they fire, so there is no real reason to stick to one single Cache instance. Please keep that in mind.

What does the class do, and what does it *not* do:

- It caches objects based on second-intervals, not milliseconds.

- It supports

Timeout.Infinite to keep items forever (= process lifetime). - It is

IDisposable. - It is thread-safe.

- It is generic.

- Cache expiration uses

System.Threading.Timers Timer, which utilizes ThreadPool Threads when doing the callbacks for expiration, so you don't have a ton of inactive sleeper threads hanging around when your cache gets bigger or when you have multiple instances of the Cache running.

What it does not do:

- Offer monitoring, listing, iteration, examination of the contents.

- Different strategies and ways to expire items. Either you want it in the cache or not. If not,

.Remove it. - Offer events to react on an item before it gets purged.

- Maximum memory settings and such things. The responsibility of how much you put into the cache is up to you.

Let's start with a little demo.

I will show the demo by some unit testing code, because it explains very clean, how to use the class.

[TestMethod]

public void Cache_Generic_Expiration_Ok()

{

Cache<DataTable> cache = new Cache<DataTable>();

DataTable testTable = new DataTable();

c.AddOrUpdate("test", testTable, 1);

Assert.IsTrue(c.Exists("test"));

Assert.AreSame(c.Get("test"), testTable);

Thread.Sleep(1050);

Assert.IsFalse(c.Exists("test"));

}

Those few lines of code already explain the most important things you need to know about the class. Just create an instance - no complicated threading, no noise.

AddOrUpdate an item to put it to the cache. The third parameter is the cache time. Specify Timeout.Infinite here to keep it forever.

Exists tells you whether a specific key already exists in the cache.

Remove removes an item from the Cache.

Get(key), TryGet(key, out T item) or [key] returns you the item from the cache or default(T), if it is not found. No exception is raised, if the item does not exist. Why? Because it does not really make a difference. The normal use case is a code construct like this:

(This has been taken from a data layer I wrote, using the cache, implicit null check and query execution, if null).

result.Data = sqlCache[commandKey] ?? ExecuteQuery(command);

sqlCache.AddOrUpdate(commandKey, result.Data, cacheTime);

Here would not be a benefit from an Exception. If you really want to behave completely different, if an item is currently not cached, use TryGet or Exists.

If you looked at these two lines closely, you might say now: "Wait! If you update this entry with cacheTime again, the entry will never expire! This is wrong!".

The thing here is: The AddOrUpdate has a fourth (optional) parameter which defaults to false. It's name is restartTimerIfExists. This parameter gives you full control over the cache entry. By default, the timer is not modified (and the cacheTime parameter is ignored if you only update an entry), so updating an entry just updates the contents of the entry, not it's TTL (Time to live). The control (and therefore, the decision), whether you want to reset the timer to a new value, it totally up to you, depending on the situation/reason why you just updated the entry.

Removing items from the cache is worth a closer look, as it offers a neat little signature to bulk-remove items that fulfill a certain condition.

The Remove-method offers two signatures:

Remove(K key)

and

Remove(Predicate<K> keyPattern)

The second one allows you to specify any predicate (like a Lambda expression) that has to evaluate to true for each key you want to remove from the cache.

One of the unit tests demonstrates this very well (look at the c.Remove line in the middle of the test):

[TestMethod]

public void Cache_Remove_By_Pattern_Ok()

{

Cache c = new Cache();

c.AddOrUpdate("test1", new object());

c.AddOrUpdate("test2", new object());

c.AddOrUpdate("test3", new object());

c.AddOrUpdate("Other", new object());

Assert.IsTrue(c.Exists("test1"));

Assert.IsTrue(c.Exists("Other"));

c.Remove(k => k.StartsWith("test"));

Assert.IsFalse(c.Exists("test1"));

Assert.IsFalse(c.Exists("test2"));

Assert.IsFalse(c.Exists("test3"));

Assert.IsTrue(c.Exists("Other"));

}

As you can see, any Lambda can be used to remove multiple items. If you build your cache keys by a specific pattern, no matter whether they are int or string keys (or any other type), you can wipe sections of the cache with a single call.

Let me give you a simple example, so you get the idea:

I take an ImageCache as an example as this is quite a common use case:

Your keys are built like [content].[userid] to have them unique:

UserPortrait.356

?UserPortrait.409

?UserPortrait.1158

?UserPortrait.22

Avatar.869

Avatar.223

Avatar.127

Avatar.256

Avatar.1987

So it is easy for you to wipe all Avatar Images from your cache by calling:

Remove(k => k.StartsWith("Avatar."));

but you can also remove all images for a specified [userid] with:

Remove(k => k.EndsWith($".{userid}");

That's all for the usage of the class! Add, Remove, Get and one or two little convenience methods. Clean and simple!

The Source Code of Cache<K,T>

Now I want to show and discuss the source code of the Cache<T> class. It's quite simple, no big magic here, but I find it important in an article to do a look "behind the scenes" and explain, what's going on.

Constructing a Cache<T>

All Cache implementations only have one single, default constructor with no parameters.

Cache<DataTable> sqlCache = new Cache<DataTable>();

Internal data storage is done in two simple Dictionaries. Yes I could've used just one Dictionary, but as the Timers can be more or less reset and handled independently from the cached objects, two Dictionaries are fine.

Thread safety is achieved with a ReaderWriterLockSlim that handles parallel access.

public class Cache<K, T> : IDisposable

{

#region Constructor and class members

public Cache() { }

private Dictionary<K, T> cache = new Dictionary<K, T>();

private Dictionary<K, Timer> timers = new Dictionary<K, Timer>();

private ReaderWriterLockSlim locker = new ReaderWriterLockSlim();

#endregion

The IDisposable implementation is completely standard, so I will not discuss it here in detail and instead jump to the more interesting parts of the class.

Add, Remove, Get and Exist

These methods are your interface to work with the Cache<T>.

AddOrUpdate:

public void AddOrUpdate(K key, T cacheObject, int cacheTimeout, bool restartTimerIfExists = false)

{

if (disposed) return;

if (cacheTimeout != Timeout.Infinite && cacheTimeout < 1))

throw new ArgumentOutOfRangeException("cacheTimeout must be greater than zero.");

locker.EnterWriteLock();

try

{

CheckTimer(key, cacheTimeout, restartTimerIfExists);

if (!cache.ContainsKey(key))

cache.Add(key, cacheObject);

else

cache[key] = cacheObject;

}

finally { locker.ExitWriteLock(); }

}

What happens in AddOrUpdate?

- If we are disposed, no more access

- Parameter value check

- In a

write lock:

- A check of the timer (more on that later)

- Simple

Add/Replace of the cacheObject in the Dictionary

As promised, not much magic here :).

Remove

public void Remove(K key)

{

if (disposed) return;

locker.EnterWriteLock();

try

{

if (cache.ContainsKey(key))

{

try { timers[key].Dispose(); }

catch { }

timers.Remove(key);

cache.Remove(key);

}

}

finally { locker.ExitWriteLock(); }

Remove is simple, too. Just a write-lock-safe Remove of both entries, the timer and the cached object.

The second signature of Remove allows a Predicate<K> to be specified to find the key(s) to remove:

public void Remove(Predicate<K> keyPattern)

{

if (disposed) return;

locker.EnterWriteLock();

try

{

var removers = (from k in cache.Keys.Cast<K>()

where keyPattern(k)

select k).ToList();

foreach (K workKey in removers)

{

try { timers[workKey].Dispose(); }

catch { }

timers.Remove(workKey);

cache.Remove(workKey);

}

}

finally { locker.ExitWriteLock(); }

}

The Linq that fills my var removers must use a .ToList() as it is not allowed to modify a collection while iterating through it. The predicate is only used here in the where clause and must evaluate to true for each key that shall be removed.

Get

public T Get(K key)

{

if (disposed) return default(T);

locker.EnterReadLock();

try

{

T rv;

return (cache.TryGetValue(key, out rv) ? rv : default(T));

}

finally { locker.ExitReadLock(); }

}

public bool TryGet(K key, out T value)

{

if (disposed)

{

value = default(T);

return false;

}

locker.EnterReadLock();

try

{

return cache.TryGetValue(key, out value);

}

finally { locker.ExitReadLock(); }

}

In the Get method, you can see the reason, why I took a ReaderWriterLockSlim over a simple lock() statement. In case, you don't know it: A lock() is always a write-lock and therefore a lock()ed block can only be entered sequential, one thread after the other, while a ReadLock as shown here can be processed parallel by multiple threads.

When a thread requests a WriteLock, this WriteLock has to wait, until the current readers have finished, then the WriteLock runs exclusive, like a lock() statement (all readers have to wait until write is finished). When the WriteLock is done, all readers may continue.

Exists

public bool Exists(K key)

{

if (disposed) return false;

locker.EnterReadLock();

try

{

return cache.ContainsKey(key);

}

finally { locker.ExitReadLock(); }

}

Exists plain and simple returns, whether a specific entry is contained in the internal Dictionary.

Clear

public void Clear()

{

locker.EnterWriteLock();

try

{

try

{

foreach (Timer t in timers.Values)

t.Dispose();

}

catch

{ }

timers.Clear();

cache.Clear();

}

finally { locker.ExitWriteLock(); }

}

Clear takes care that all pending timers are .Dispose()d before both Dictionaries are cleared.

Timer Check / Timer Callback

Let's take a look at how the timing (TTL) of a cache entry is handled in Cache<T> and how the restartTimerIfExists flag is processed.

Code first :)

#region CheckTimer

private void CheckTimer(K key, int cacheTimeout, bool restartTimerIfExists)

{

Timer timer;

if (timers.TryGetValue(key, out timer))

{

if (restartTimerIfExists)

{

timer.Change(

(cacheTimeout == Timeout.Infinite ? Timeout.Infinite : cacheTimeout * 1000),

Timeout.Infinite);

}

}

else

timers.Add(

key,

new Timer(

new TimerCallback(RemoveByTimer),

key,

(cacheTimeout == Timeout.Infinite ? Timeout.Infinite : cacheTimeout * 1000),

Timeout.Infinite));

}

private void RemoveByTimer(object state)

{

Remove((K)state);

}

#endregion

First, I check whether a Timer for this cache entry exists. It is possible that none exists, when this entry is currently added, and not updated.

If the Timer exists, it gets modified, if the restartTimerIfExists is set to true, otherwise it is left alone.

The parameters supplied to the timer are:

- When adding:

- The callback (

RemoveByTimer) to be invoked when dueTime expires - The

key of the cache entry (this is the state parameter of the callback method and my key to find the cache entry to remove) - The

dueTime (time to wait before calling the callback method) - The

period. This is always Timeout.Infinite in this class, as each entry can be removed only once.

- When updating/changing:

- The same two

dueTime and period parameters as supplied, when adding, just with new values.

The RemoveByTimer method simply calls the (thread safe) Remove method of the Cache<T> class.

The non-generic Cache : Cache<object> class

Last but not least, I want to show you the more-than-simple code behind the non-generic version, Cache class:

public class Cache : Cache<object>

{

#region Static Global Cache instance

private static Lazy<Cache> global = new Lazy<Cache>();

public static Cache Global => global.Value;

#endregion

}

This class is a Cache<object> that can store any type of item and is used in highly mixed scenarios, with all the drawbacks object brings to the field.

Only interesting thing to mention is the Lazy initialization of the static Global member.

I always try to support as many scenarios as possible, and I can imagine that there are cases where you don't want to instantiate your own cache instance (maybe you are too lazy :)), so this class even provides a static Global cache member.

Performance

In the comments, there were some concerns about the performance of the cache. That's why I want to make clear again, this is a local cache, not a server cache for a webserver that handles 50k clients in parallel access! It is made for your frontend applications or maybe your business/data layer.

We use it in a professional environment without any problems.

You can download the load test and test the cache on your local machine and compare your values to mine.

My test ran on a Lenovo Y50 Quad-Core (8 logical cores) Gaming Notebook with 16GB of RAM.

These are the results of my local load tests:

Cache<T> load test.

===================

1: Single threaded tests

1.1: Adding 1 Million entries.

<K,T> = <long, string*32>

Performance: 492/ms

-

1.2: 1 Million random Get calls

Performance: 4166/ms

-

1.3: Removing 1 Million entries (with exists check)

Performance: 3623/ms

-

2: Multi threaded tests

2.1: Adding 1 Million entries.

Threads: 100, each adds 10k

<K,T> = <long, string*32>

Performance: 355/ms

-

2.2: 1 Million random Get calls

Threads: 100, each adds 10k

<K,T> = <long, string*32>

Performance: 3937/ms

-

2.3: Removing 1 Million entries (with exists check)

Threads: 100, each removes 10k

<K,T> = <long, string*32>

Performance: 1689/ms

-

--- End of test ---

I will copy my explanation of this test from the comment I gave one member:

- In a single threaded scenario (maybe when used only as a reflection cache or as database query result cache for a GUI app), I can add an entry in ~2 microseconds to a range of 1 million entries (which GUI app caches 1 million different queries or 1 million reflections?)

On the loaded cache, there are more than 4000 get requests per millisecond, or 4 per microsend or 1 access in less than 250 nanoseconds.

Remove is almost as fast as Get.

Then, with a thread load of 100 threads (again, this is not a server cache for thousands of parallel access requests - and even those run full parallel due to readlocks - only thousands of parallel Adds might slow down).

So the threaded tests result in:

- A drop from 492 to 355

Adds per millisecond which still ends up in only 3 microseconds per add. - Get requests show almost equal performance (3930:4160) due to the read locks

- Removing drops down in performance, agreed, losing about 50% but still in the area of nanoseconds per remove.

This performs very well even in a scenario with 100 parallel threads which is more than the average frontend application will use.

That's it!

I hope you see use in the class, feel free to adapt it to your special needs.

Please take the time to comment your votes so I have a chance to reply!

Cheers,

Mike

History

- 2015-09-30 Initial post of this article.

- 2015-10-08 With credits to @Roger500, updated the class to have generic key,

Cache<K, T>. Thanks! - 2015-10-10 Added a load test with single-thread and multi-thread (100 threads) access.

- Corrected a compile error in Cache.zip - SORRY - This download compiles!

- 2015-10-16 Fixed a bug in the

LoadTest (new zip attached)

- New methods in

Cache: Remove(pattern), Clear() and AddOrUpdate(new signature) - Documented the new methods in the article

- Fixed some formatting issues in the Performance section of the article

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin