Preface

Although the Pareto principle is frequently mentioned in discussions of software optimization [2], the way this principle actually affects the optimization process is usually left unexplained. Hence, I considered it worthwhile to devote this brief discussion to the impact of the Pareto principle on software optimization.

Basic Theory and Practice

The Pareto principle [1] generally states that roughly 80% of the effects come from 20% of the causes, and it is hence also known as the 80-20 rule. When applied to software optimization [2], this principle means that 80% of the resources are typically used by 20% of the operations. More specifically, regarding the execution speed of a software entity, the Pareto principle means that 80% of the execution time is usually spent executing no more than 20% of the code.

The validity of the Pareto principle in software optimization is practically indisputable. Anyone with even limited experience in optimization will acknowledge that a very small percentage of the overall code is almost always responsible for most of the system resource consumption. The Pareto principle applies so well to speed optimization that there are even cases in which almost 90% of the execution time is spent executing only 10% of the code (the 90-10 rule). Furthermore, we will see in the following paragraphs that some well-known optimization rules and practices, which are frequently mentioned in related discussions, are in fact consequences of this important principle.

However, it is important to clarify that the Pareto principle is essentially a widely accepted rule of thumb [1,9], which is not expected to apply in exactly the same way or to the same degree in all situations. In the context of this article, the Pareto principle is considered to be in force when no more than 20% of the operations consume at least 80% of the resources. For example, if 93% of the resources are used by 16% of the operations, this is still considered a situation in which the Pareto principle applies well. (Please note that the percentages of the code and the resources do not need to add up to 100%, since they measure different things.) Furthermore, a particular malfunction or performance bug may sometimes lead to a situation in which a couple of code lines consume almost all the system resources. Such cases are outside the scope of this discussion, which is mostly about improving the performance of software entities that already function reasonably well.
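To make this working definition concrete, the following Python sketch checks it against per-function execution times, such as those a profiler might report. The helper names `critical_share` and `pareto_holds` and the sample timings are hypothetical and for illustration only; the thresholds are simply the 20%/80% limits stated above.

```python
from typing import Sequence, Tuple


def pareto_holds(code_share: float, resource_share: float) -> bool:
    # Working definition used in this article: no more than 20% of the
    # operations consume at least 80% of the resources.
    return code_share <= 0.20 and resource_share >= 0.80


def critical_share(times: Sequence[float], target: float = 0.80) -> Tuple[float, float]:
    """Return (share of functions, share of total time) for the smallest set of
    functions whose combined execution time reaches `target` of the total."""
    total = sum(times)
    if total <= 0:
        raise ValueError("times must contain a positive total")
    ranked = sorted(times, reverse=True)  # hottest functions first
    covered = 0.0
    for count, t in enumerate(ranked, start=1):
        covered += t
        if covered / total >= target:
            return count / len(ranked), covered / total
    return 1.0, covered / total  # target not reachable (e.g. target > 1.0)


if __name__ == "__main__":
    # Hypothetical per-function times in milliseconds.
    profile_ms = [50, 30, 5, 5, 2, 2, 2, 2, 1, 1]
    share_code, share_time = critical_share(profile_ms)
    print(share_code, share_time)              # 0.2 0.8
    print(pareto_holds(share_code, share_time))  # True
    print(pareto_holds(0.16, 0.93))            # True: the 93%/16% case from the text
```

Ranking the functions from hottest to coldest yields the smallest set that reaches the target, which is the natural way to measure "the critical code" from profiling data.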

The Positive Impact of the Pareto Principle

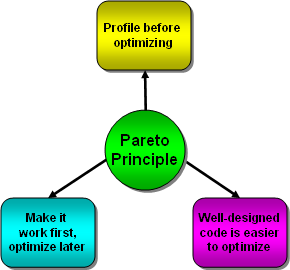

The obvious outcome of the Pareto principle is that not all parts of the implementation code in a typical software entity are equally responsible for system resource consumption, and only a small portion (10%-20%) of the overall code is actually performance critical. However, the full power of this principle cannot be revealed unless we further study its three most notable consequences, which are frequently regarded as optimization rules and practices [5,6,7] (see also figure 1):

- It is good practice to profile [3] before optimizing [6].

According to the Pareto principle, most of the implementation code is usually almost irrelevant to the overall software performance, except for a few small code portions (10%-20%) which consume most (80%-90%) of the system resources. Hence, it is very important to locate these critical code portions effectively and concentrate all our optimization efforts on them. Optimizing non-critical code is not only a waste of time, but will probably reduce the stability and maintainability of our product as well. Consequently, it is very beneficial to profile our code first and judiciously optimize only the code portions which have been proven to be performance critical.

- It is often preferable to start optimizing when the implementation is complete and functional [5].

It is actually much easier to make accurate performance measurements and effectively locate the performance bottlenecks [4] when the implementation is complete and functional. Thanks to the Pareto principle, the critical code is usually relatively small, so a limited rewrite of the bottlenecks is not expected to cost as much as prematurely optimizing a much larger portion of code. This particular practice is also summarized as: "Make it work first, optimize later."

- Well-designed code is usually much easier to optimize.

A good software design will help us both to locate the performance bottlenecks and to improve small portions of code without affecting the rest of the program. On the other hand, a poor software design will probably reduce the positive impact of the Pareto principle by increasing the undesirable side-effects of the performance modifications, and will eventually make the optimization process disproportionately difficult in relation to the relatively small size of the critical code. In the words of Martin Fowler: "Well-factored software is easier to tune" [7].

Figure 1: Pareto consequences
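The first of these practices, profiling before optimizing, can be illustrated with a minimal sketch based on Python's standard `cProfile` and `pstats` modules. The workload functions (`cheap_step`, `expensive_step`, `workload`) and the helper `profile_report` are hypothetical stand-ins for real code; for C/C++ code the same idea applies with a native profiler.

```python
import cProfile
import io
import pstats


def cheap_step(n: int) -> int:
    # Hypothetical non-critical code: trivial work.
    return n + 1


def expensive_step(n: int) -> int:
    # Hypothetical hot spot: quadratic work that dominates the run time.
    total = 0
    for i in range(n):
        for j in range(n):
            total += i * j
    return total


def workload() -> int:
    # Hypothetical program: calls both steps the same number of times.
    result = 0
    for _ in range(20):
        result += cheap_step(10)
        result += expensive_step(150)
    return result


def profile_report(limit: int = 10) -> str:
    """Run the workload under cProfile and return the top entries by own time."""
    profiler = cProfile.Profile()
    profiler.enable()
    workload()
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("tottime").print_stats(limit)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_report())
```

Sorting by `tottime` (time spent inside each function itself) places the hot spot at the top of the report, even though both steps are called equally often; that entry is where the optimization effort should be concentrated.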

The fact alone that the Pareto principle enables and supports the above fundamental optimization rules and practices is already quite remarkable. However, the overall impact of the Pareto principle goes far beyond these three consequences. Thanks to the validity of this principle, it is possible to design software solutions without constantly keeping performance considerations and restrictions in mind. It is also possible for software designers and developers to often favor clarity, flexibility, simplicity, maintainability, reusability and other important qualities over performance and efficiency. Consequently, thanks to this important principle, both the complexity and the cost of producing quality software have been significantly moderated.

Last but not least, the Pareto principle and its consequences can provide a very good defence against premature optimization [2,8], which is a particularly bad habit of software developers who care a lot about performance. A good understanding of this principle virtually eliminates any temptation to optimize too early or to needlessly optimize non-critical parts of the code.

Misconceptions and Restraining Factors

It is quite natural to expect that a principle as powerful and indisputable as the Pareto principle will cause some exaggerations and misconceptions along with its positive impact. More specifically, there is at least one common exaggeration and there are two common misconceptions which seem to be related to the Pareto principle:

| Exaggeration: | It is always easy to optimize a complete implementation. |
| Misconception 1: | There is no need at all to care about performance during development. |
| Misconception 2: | Designing for performance is completely useless. |

The logical path which leads to the above false conceptions is quite short and simple (see also figure 2). Since the Pareto principle generally applies well in optimization, it is practically guaranteed that a small percentage of the overall code (10%-20%) will be responsible for most of the system resource consumption. Because of the small size of the performance critical code, it is tempting to assume that it will always be convenient and effective to optimize a software entity after it has been fully implemented. This, in turn, leads to the false conclusion that there is no need at all to care about performance when implementing the software, and that designing for performance is completely useless!

Figure 2: Common Pareto exaggerations and misconceptions

The major logical fault which enables the above inaccurate conceptions is the assumption that the optimization of a fully implemented software entity will always be easy and effective, just because the Pareto principle applies. In fact, reality can be much more complicated. In a way, the impact of the Pareto principle on optimization resembles the impact of money on our lives. Money can help us improve many things in our lives, but it cannot guarantee us a better overall quality of life. Likewise, although the Pareto principle greatly facilitates optimization at the end of the software development cycle, this is not the same as guaranteeing an easy and successful optimization process. It is important to understand that the Pareto principle gives us no guarantees regarding the effort required to improve software performance; it just states that the performance critical code is relatively small in size, and nothing more.

But what can actually go wrong and make the optimization process really difficult, when we know for a fact that the performance critical code is small in size? Unfortunately, in practice there are several restraining factors which may reduce the positive impact of the Pareto principle and make the optimization process disproportionately difficult in relation to the relatively small size of the critical code. To obtain a more concrete and practical understanding of these restraining factors, it will serve us well to discuss some indicative examples:

- The performance critical code already performs too well to be significantly improved.

When the critical code already performs well, we should concentrate our efforts on reducing its use instead of improving its performance. This sometimes requires difficult design changes or even architectural changes, which in turn greatly increase the complexity and the side-effects of the optimization. Consequently, when the critical code already performs well, the effort required to improve the software performance may not be proportional to the size of the critical code, and the positive impact of the Pareto principle is expected to be weakened.

- The performance critical code, even if it is small in size, may be widely scattered.

When the critical code is distributed across many places, solving the performance problems will probably require a large number of changes, which may produce many side-effects and increase instability. Consequently, the existence of scattered performance bottlenecks, which can be particularly frequent in poorly designed software entities, usually moderates the positive impact of the Pareto principle by making the optimization disproportionately difficult in relation to the size of the critical code.

- The improvement of the performance critical code may cause side-effects in much larger code portions.

The undesirable and dangerous side-effects which can be caused by performance improvements are the main reason why, as already discussed, well-designed code is easier to optimize. However, in the real world, software design is often imperfect, and unexpectedly severe side-effects are not uncommon during optimization. Obviously, this is yet another case in which the optimization is disproportionately difficult in relation to the size of the critical code. Consequently, we should consider weak design as one more factor which can reduce the positive impact of the Pareto principle.

- The overall software performance is too poor to become acceptable by merely improving small portions of code.

When the 80-20 rule applies, it is mathematically impossible to make the overall performance more than five times better by merely improving the critical 20% of the code: the remaining 80% of the code still accounts for 20% of the original execution time, so even if the critical code ran in no time at all, the total execution time could not drop below one fifth of the original (a direct consequence of Amdahl's law). Likewise, when the 90-10 rule applies, the best we can possibly do by improving only the critical 10% of the code is to make the overall performance up to ten times better. These limitations may seem very relaxed at first glance, but in practice the improvements which can be easily achieved will usually be considerably smaller than these theoretical best cases. Hence, if we neglect performance issues too much when building the software, it is quite possible to end up in situations in which optimizing the most critical 10-20% of the code will not be enough to compensate for the overall poor performance. Of course, we can continue optimizing the less critical code as well, but this will probably force us to rewrite a big part of our already complete and functional software, which greatly increases the difficulty of the optimization process and almost eliminates the positive impact of the Pareto principle. Speaking metaphorically, if you have originally designed an elephant, it will be extremely difficult to optimize it into a cheetah at the final stages of its implementation!
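The five-fold and ten-fold upper bounds mentioned above follow from Amdahl's law. The Python sketch below uses a hypothetical helper, `overall_speedup`, to compute the overall speedup when only the critical fraction of the original execution time is made a given number of times faster, assuming the rest of the code is left untouched.

```python
import math


def overall_speedup(critical_fraction: float, local_speedup: float) -> float:
    """Amdahl's law: overall speedup when only `critical_fraction` of the
    original execution time is made `local_speedup` times faster."""
    if not 0.0 <= critical_fraction <= 1.0:
        raise ValueError("critical_fraction must be in [0, 1]")
    untouched = 1.0 - critical_fraction           # time still spent in the rest of the code
    improved = critical_fraction / local_speedup  # time left in the critical code
    remaining = untouched + improved
    return math.inf if remaining == 0.0 else 1.0 / remaining


if __name__ == "__main__":
    # Theoretical best case: the critical code takes no time at all.
    for p in (0.8, 0.9):
        print(p, round(overall_speedup(p, math.inf), 2))  # 0.8 5.0, then 0.9 10.0
    # More realistic local improvements give much smaller overall gains.
    print(round(overall_speedup(0.8, 4.0), 2))  # 2.5
    print(round(overall_speedup(0.9, 3.0), 2))  # 2.5
```

For example, making the critical 10% of the code three times faster under the 90-10 rule yields only a 2.5x overall improvement, far from the ten-fold theoretical limit.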

The above examples clearly demonstrate that several restraining factors can prevent the Pareto principle from guaranteeing an easy and effective optimization of fully implemented software. In other words, it is not reasonable to assume that any software, regardless of its initial state, can easily achieve acceptable performance just because the Pareto principle applies. Since the Pareto principle cannot always guarantee an easy and successful optimization process, it is not a good enough reason for developers to stop caring about performance while building their software. Furthermore, in some performance critical applications, designing for performance may be the only effective way to achieve demanding performance goals.

Conclusion

The Pareto principle has a great positive impact on software optimization, which software developers should take advantage of. However, like most things in life, the positive impact of the Pareto principle has its limitations, which software developers should also acknowledge and respect. The Pareto principle is a very good reason for avoiding premature optimization and, at the same time, a really bad excuse for neglecting software performance during the design and implementation phases of software development. Generally, when it comes to optimization, overestimating this important principle can be at least as wrong as ignoring or underestimating it.

Needless to say, a solid understanding of the Pareto principle is essential for software developers who deal with optimization tasks. Being able to take advantage of the positive consequences of the Pareto principle, while avoiding the dangerous misconceptions which surround it, is a very useful optimization skill which can be significantly improved with experience and practice. However, it is not uncommon even for experienced developers to underestimate the limitations of the Pareto principle. Unfortunately, the very fact that these limitations can be very inconvenient also makes them difficult to acknowledge, since software developers are often prone to underestimate and overlook whatever seems inconvenient to them.

References

- [1] Wikipedia: Pareto principle. http://en.wikipedia.org/wiki/Pareto_principle
- [2] Wikipedia: Program optimization. http://en.wikipedia.org/wiki/Optimization_(computer_science)
- [3] Wikipedia: Profiling. http://en.wikipedia.org/wiki/Profiling_(computer_programming)
- [4] Wikipedia: Performance bottlenecks. http://en.wikipedia.org/wiki/Program_optimization#Bottlenecks
- [5] Optimize Later. http://c2.com/cgi/wiki?OptimizeLater
- [6] Profile Before Optimizing. http://c2.com/cgi/wiki?ProfileBeforeOptimizing
- [7] Tuning Performance and Process: Creating Tunable Software. http://www.artima.com/intv/tunableP.html
- [8] Premature Optimization. http://c2.com/cgi/wiki?PrematureOptimization
- [9] Wikipedia: Rule of thumb. http://en.wikipedia.org/wiki/Rule_of_thumb

I live in Greece with my wife and our two daughters. I have been a professional software developer since 1999, using mostly C/C++ in my work.

My main areas of expertise are C/C++, STL, software optimization, generic programming and debugging. I am also very experienced in client–server programming, communications, concurrent programming, software security and cryptography. Finally, in my early professional years, I worked a lot on cross-platform programming (Mac+Win).

I am familiar with the MFC, wxWidgets and Cplat GUI frameworks and the Python, Java, Pascal, Fortran, Prolog and Rexx programming languages.
