Introduction

I wanted to try speech recognition. Luckily, there are plenty of C# samples available. Speech recognition is part of Windows, and the .NET Framework supports it. Surprisingly, this feature is very easy to use: it is not much more difficult than adding a button to a form and handling its Click event.

Since I have some experience with WPF, I created a simple WPF app in which, when the user says "Change colour to green", the speech engine recognizes the phrase and changes the window background to green. The app worked well, but I was not satisfied with the code itself. WPF programmers are used to declarative XAML and binding, and I felt this was missing from the sample app. WPF has built-in support for handling input from devices such as the keyboard, mouse and stylus, and InputBinding lets you create a relationship between an input gesture and a command. In short, I wanted the same support for speech, something like this:

    <Window.InputBindings>
        <SpeechBinding Command="{Binding ChangeColour}" Gesture="Change Colour To Green"/>
    </Window.InputBindings>

Here the Gesture property defines the sentence to listen for, and Command is the command that runs when the engine recognizes that sentence. Let's see how we can do this.

Speech Recognition in .NET

If you are completely new to speech recognition in .NET, I would recommend reading this great article. If you are already familiar with it, you can skip this section.

Let's brush up on how speech recognition works. To use speech recognition in your application, you will mostly work with the SpeechRecognitionEngine class from the System.Speech.Recognition namespace, which is defined in System.Speech.dll. This class can take input from an audio device, a WAV file, an audio stream or a WAV stream. You also need to create a Grammar object that defines the words and phrases the engine should recognize. The engine can work in synchronous or asynchronous mode and uses an event-based model. Let's look at the typical code needed to set up a SpeechRecognitionEngine.

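    // Requires a reference to System.Speech.dll.
    using System;
    using System.Speech.Recognition;

    // Create an engine for US English and teach it the single phrase "Hello".
    var voiceEngine = new SpeechRecognitionEngine(new System.Globalization.CultureInfo("en-US"));
    voiceEngine.LoadGrammar(new Grammar(new GrammarBuilder("Hello")) { Name = "TestGrammar" });

    // The engine is event based: SpeechRecognized is raised for every recognized phrase.
    voiceEngine.SpeechRecognized += voiceEngine_SpeechRecognized;

    // Listen on the default microphone and keep recognizing until stopped.
    voiceEngine.SetInputToDefaultAudioDevice();
    voiceEngine.RecognizeAsync(RecognizeMode.Multiple);

    void voiceEngine_SpeechRecognized(object sender, SpeechRecognizedEventArgs e)
    {
        if (e.Result.Text == "Hello")
        {
            Console.WriteLine("I know you said: " + e.Result.Text);
        }
    }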

The code above can be used in any console, WinForms or WPF app. If the engine does not recognize your voice as expected, you can try two things:

1) Use a good-quality microphone.

2) Train your computer to understand your voice. To train your computer, follow these steps:

- Open Control Panel

- Go to Ease of Access

- Choose Speech Recognition

- Then choose Train your computer to better understand you
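When recognition is unreliable, it can also help to see what the engine is hearing. The following is a minimal console sketch, not part of the original sample, that subscribes to the engine's SpeechRecognitionRejected and AudioSignalProblemOccurred events next to SpeechRecognized. The class name and the printed messages are made up for illustration; the sketch assumes a working microphone and an installed en-US recognizer.

```csharp
using System;
using System.Globalization;
using System.Speech.Recognition;

// Hypothetical helper for troubleshooting; not part of the article's sample app.
static class RecognitionDiagnosticsSketch
{
    static void Main()
    {
        using (var engine = new SpeechRecognitionEngine(new CultureInfo("en-US")))
        {
            engine.LoadGrammar(new Grammar(new GrammarBuilder("Hello")) { Name = "TestGrammar" });

            // Speech was heard and matched a loaded grammar.
            engine.SpeechRecognized += (s, e) =>
                Console.WriteLine("Recognized: " + e.Result.Text + " (confidence " + e.Result.Confidence + ")");

            // Speech was heard but could not be matched with enough confidence.
            engine.SpeechRecognitionRejected += (s, e) =>
                Console.WriteLine("Rejected. Closest guess: " + e.Result.Text);

            // The audio itself is problematic (for example too noisy or too soft).
            engine.AudioSignalProblemOccurred += (s, e) =>
                Console.WriteLine("Audio problem: " + e.AudioSignalProblem);

            engine.SetInputToDefaultAudioDevice();
            engine.RecognizeAsync(RecognizeMode.Multiple);

            Console.WriteLine("Speak into the microphone, then press Enter to quit.");
            Console.ReadLine();
            engine.RecognizeAsyncCancel();
        }
    }
}
```

If the console mostly shows audio problems, the microphone is the likely culprit; if it mostly shows rejections, training the recognizer is probably the better next step.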

As the code above shows, it is easy to get started with speech recognition and build a speech-aware application. But as you can imagine, a lot happens behind the scenes. Sound waves are converted to electrical signals, the electrical signals are converted to digital data, and that data is made accessible through the device driver. The OS, the speech engine and the API do the rest of the hard work of processing and recognizing the audio and raising several events.
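This section mentioned that the engine can also read from a WAV file and work synchronously. A minimal sketch of that combination might look like the following. The file name hello.wav and the class name are placeholders invented for this example, and an en-US recognizer is assumed to be installed.

```csharp
using System;
using System.Globalization;
using System.Speech.Recognition;

// Hypothetical example: recognize a recorded phrase without a microphone.
static class WavRecognitionSketch
{
    static void Main(string[] args)
    {
        // Placeholder path; pass your own WAV file as the first argument.
        string wavPath = args.Length > 0 ? args[0] : "hello.wav";

        using (var engine = new SpeechRecognitionEngine(new CultureInfo("en-US")))
        {
            engine.LoadGrammar(new Grammar(new GrammarBuilder("Hello")) { Name = "TestGrammar" });
            engine.SetInputToWaveFile(wavPath);

            // Recognize() blocks until one recognition attempt completes.
            // It returns null when nothing in the audio matched a loaded grammar.
            RecognitionResult result = engine.Recognize();

            Console.WriteLine(result != null
                ? "Recognized: " + result.Text
                : "Nothing recognized.");
        }
    }
}
```

Synchronous recognition from a file is handy for repeatable tests, because the same recording can be replayed against different grammars.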

Input Binding in WPF

InputBinding represents a binding between an InputGesture and a command. Let's look at a typical input binding in XAML.

    <Window.InputBindings>
        <KeyBinding Key="B"
                    Modifiers="Control"
                    Command="ApplicationCommands.Open" />
    </Window.InputBindings>

MSDN documentation says:

All objects that derive from UIElement have an InputBindingCollection named InputBindings. All objects that derive from ContentElement have an InputBindingCollection named InputBindings.

However, if these collections are set in XAML, then the items in the collection must be derived classes of InputBinding rather than direct InputBinding objects. This is because InputBinding does not support a default public constructor. Therefore, the items in a InputBindingCollection that was set in XAML will typically be an InputBinding derived class that does support a default public constructor, such as KeyBinding or MouseBinding.
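For comparison, the same Ctrl+B binding can be created in code instead of XAML. This is a small sketch rather than code from the sample app; the class name, the window title and the message box text are made up for illustration.

```csharp
using System;
using System.Windows;
using System.Windows.Input;

// Hypothetical stand-alone WPF program showing a KeyBinding added from code.
static class KeyBindingSketch
{
    [STAThread]
    static void Main()
    {
        var window = new Window { Title = "Press Ctrl+B", Width = 300, Height = 200 };

        // Handle the routed command so that something visible happens.
        window.CommandBindings.Add(new CommandBinding(
            ApplicationCommands.Open,
            (sender, e) => MessageBox.Show("Open command executed")));

        // Code equivalent of <KeyBinding Key="B" Modifiers="Control" Command="ApplicationCommands.Open" />.
        window.InputBindings.Add(
            new KeyBinding(ApplicationCommands.Open, Key.B, ModifierKeys.Control));

        new Application().Run(window);
    }
}
```

In code, the constructor arguments play the role that the public default constructor plus property setters play in XAML.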

The WPF Input System Is Closed to Extension

I wanted WPF to consume input from the microphone. My first thought was that WPF probably has an extension point that allows input from a custom device to be injected. I found the InputManager class, which is responsible for coordinating all of the input systems in WPF. It provides a list of registered InputProviders, but it does not offer any way to add your own InputProvider. Here is a relevant link. According to that link, during the design phase of Avalon (the code name of WPF before release) there was a provision for adding new devices.
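To see what InputManager exposes, the registered providers can be listed through its InputProviders collection. The sketch below is only an illustration with a made-up class name. It assumes a full-trust desktop application, and it shows a window first on the assumption that providers are registered once a window exists.

```csharp
using System;
using System.Diagnostics;
using System.Windows;
using System.Windows.Input;

// Hypothetical example: list the input providers WPF has registered.
static class InputProvidersSketch
{
    [STAThread]
    static void Main()
    {
        var window = new Window { Title = "Input providers", Width = 300, Height = 200 };

        window.Loaded += (sender, e) =>
        {
            // InputProviders is read-only for application code:
            // it can be enumerated, but there is no public method to register a provider.
            foreach (object provider in InputManager.Current.InputProviders)
            {
                Debug.WriteLine(provider.GetType().FullName);
            }
        };

        new Application().Run(window);
    }
}
```

The type names appear in the debugger's output window. None of them can be replaced or extended from application code, which is why the rest of this article works at the InputBinding level instead.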

Implementing Speech Binding

We already know how to use speech recognition and how to bind a keyboard gesture to a command. Now we need a way to bind a speech gesture to a command. The MSDN documentation clearly says that anything added to an InputBindingCollection in XAML must derive from the InputBinding class, so let's do exactly that.

    using System;
    using System.ComponentModel;
    using System.Diagnostics;
    using System.Speech.Recognition;
    using System.Windows.Input;
    using System.Windows.Threading;
    using GalaSoft.MvvmLight.Messaging; // Messenger comes from MVVM Light

    public class SpeechBinding : InputBinding
    {
        // One engine shared by every SpeechBinding instance.
        protected static readonly SpeechRecognitionEngine recognizer
            = new SpeechRecognitionEngine(new System.Globalization.CultureInfo("en-US"));

        static bool recognizerStarted = false;

        // Captured when the type is first used, which is expected to be on the UI thread while XAML loads.
        static readonly Dispatcher mainThreadDispatcher = Dispatcher.CurrentDispatcher;

        static SpeechBinding()
        {
            recognizer.RecognizerUpdateReached += recognizer_RecognizerUpdateReached;
        }

        // Raised by the engine when it is safe to change its grammars.
        static void recognizer_RecognizerUpdateReached(object sender, RecognizerUpdateReachedEventArgs e)
        {
            var grammar = e.UserToken as Grammar;
            if (grammar == null)
            {
                return;
            }
            recognizer.LoadGrammar(grammar);
            Debug.WriteLine("Loaded: " + grammar.Name);
            StartRecognizer();
        }

        // The converter lets XAML turn the Gesture string into a SpeechGesture.
        [TypeConverterAttribute(typeof(SpeechGestureConverter))]
        public override InputGesture Gesture
        {
            get
            {
                return base.Gesture;
            }
            set
            {
                base.Gesture = value;
                LoadGrammar();
            }
        }

        // Builds a one-phrase grammar from the gesture text and queues it for loading.
        protected virtual void LoadGrammar()
        {
            var speechStr = Gesture.ToString();
            var grammarBuilder = new GrammarBuilder(speechStr);
            grammarBuilder.Culture = recognizer.RecognizerInfo.Culture;
            var grammar = new Grammar(grammarBuilder) { Name = speechStr };
            grammar.SpeechRecognized += grammar_SpeechRecognized;
            Debug.WriteLine("Loading " + speechStr);
            recognizer.RequestRecognizerUpdate(grammar);
        }

        // Starts continuous recognition on the default microphone, only once.
        protected static void StartRecognizer()
        {
            if (recognizerStarted == false)
            {
                try
                {
                    recognizer.SetInputToDefaultAudioDevice();
                }
                catch (Exception ex)
                {
                    Messenger.Default.Send<Exception>(new Exception("Unable to set input from default audio device." + Environment.NewLine + "Check if microphone is available and enabled.", ex));
                    return;
                }
                recognizer.RecognizeAsync(RecognizeMode.Multiple);
                recognizerStarted = true;
            }
        }

        // Raised on a non-UI thread, so hand the call over to the UI thread.
        protected void grammar_SpeechRecognized(object sender, SpeechRecognizedEventArgs e)
        {
            mainThreadDispatcher.Invoke(new Action(() =>
            {
                OnSpeechRecognized(sender, e);
            }), DispatcherPriority.Input);
        }

        // Executes the bound command with the recognized text as its parameter.
        protected virtual void OnSpeechRecognized(object sender, SpeechRecognizedEventArgs e)
        {
            if (Command != null)
            {
                Command.Execute(e.Result.Text);
            }
        }
    }

This class takes care of initializing the SpeechRecognitionEngine. The engine instance is static, so all instances of SpeechBinding share the same engine. The class has two important inherited properties: Gesture and Command. The Gesture property is of type InputGesture, an abstract class that represents a gesture from an input device. Since it is abstract, we need to provide a concrete implementation that represents the phrase the user is going to say: SpeechGesture derives from InputGesture. SpeechGestureConverter converts the string specified in XAML to a SpeechGesture, and the setter of the Gesture property creates a Grammar object from that string. The SpeechRecognized event of the Grammar is handled, and the event handler invokes the Command with the recognized text as its argument. Since the event is raised on a non-UI thread, we need the UI thread's Dispatcher to delegate the command invocation to the UI thread.
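SpeechGesture and SpeechGestureConverter are described above but not listed. The following sketch shows one way they could look; it is an assumption based on that description, not the code from the download. In particular, the Phrase property and the choice to return false from Matches are illustrative.

```csharp
using System;
using System.ComponentModel;
using System.Globalization;
using System.Windows.Input;

// Hypothetical sketch: a gesture that simply carries the phrase to listen for.
public class SpeechGesture : InputGesture
{
    public SpeechGesture(string phrase)
    {
        if (phrase == null)
        {
            throw new ArgumentNullException("phrase");
        }
        Phrase = phrase;
    }

    // Illustrative property name; the sample may expose the text differently.
    public string Phrase { get; private set; }

    // Speech never arrives through WPF's routed input events,
    // so this gesture never matches a keyboard, mouse or stylus event.
    public override bool Matches(object targetElement, InputEventArgs inputEventArgs)
    {
        return false;
    }

    // SpeechBinding.LoadGrammar relies on ToString() returning the phrase.
    public override string ToString()
    {
        return Phrase;
    }
}

// Hypothetical sketch: lets XAML write Gesture="Change Colour To Green".
public class SpeechGestureConverter : TypeConverter
{
    public override bool CanConvertFrom(ITypeDescriptorContext context, Type sourceType)
    {
        return sourceType == typeof(string) || base.CanConvertFrom(context, sourceType);
    }

    public override object ConvertFrom(ITypeDescriptorContext context, CultureInfo culture, object value)
    {
        var text = value as string;
        if (text != null)
        {
            return new SpeechGesture(text.Trim());
        }
        return base.ConvertFrom(context, culture, value);
    }
}
```

Returning false from Matches means WPF's own input routing ignores the gesture, so the command is only ever triggered by the Grammar's SpeechRecognized event.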

After using the app, I realized that being able to specify a single string is not enough to create a useful grammar. Take "Change colour to someColour" as an example: to support 100 colours I would need 100 such XAML entries, one for each colour, which is not nice. Luckily, a Grammar object can also be created from an XML file. The W3C has a specification for this called the Speech Recognition Grammar Specification (SRGS). To consume these grammar files, I created another class called SRGSDocBinding, derived from SpeechBinding. It has a GrammarFile property, which is the name of the XML file containing the grammar rules. The file needs to be in the application folder. Usage is shown below.


    <Window.InputBindings>
        <speech:SRGSDocBinding Command="{Binding OpenDotCom}" GrammarFile="Grammar.xml" />
    </Window.InputBindings>
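To give an idea of what such a grammar file can contain, the sketch below builds a small SRGS document for the colour example in code, writes it to Grammar.xml and creates a Grammar from the file. The rule name, the three colours and the class name are made up for illustration; the grammar shipped with the sample may look quite different.

```csharp
using System;
using System.Globalization;
using System.Speech.Recognition;
using System.Speech.Recognition.SrgsGrammar;
using System.Xml;

// Hypothetical example: generate an SRGS file for "change colour to <colour>".
static class ColourGrammarSketch
{
    static void Main()
    {
        // The variable part of the phrase: any one of these words.
        var colours = new SrgsOneOf("red", "green", "blue");

        // The root rule: fixed words followed by one of the colours.
        var rule = new SrgsRule("changeColour");
        rule.Scope = SrgsRuleScope.Public;
        rule.Add(new SrgsItem("change colour to"));
        rule.Add(colours);

        var document = new SrgsDocument();
        document.Culture = new CultureInfo("en-US");
        document.Rules.Add(rule);
        document.Root = rule;

        // Write the grammar as SRGS XML so it can be shipped next to the executable.
        using (var writer = XmlWriter.Create("Grammar.xml", new XmlWriterSettings { Indent = true }))
        {
            document.WriteSrgs(writer);
        }

        // A Grammar can be created directly from the file path.
        var grammar = new Grammar("Grammar.xml") { Name = "Colours" };
        Console.WriteLine("Created grammar: " + grammar.Name);
    }
}
```

With one rule and a list of alternatives, supporting another colour means adding one word to the file instead of another XAML entry.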

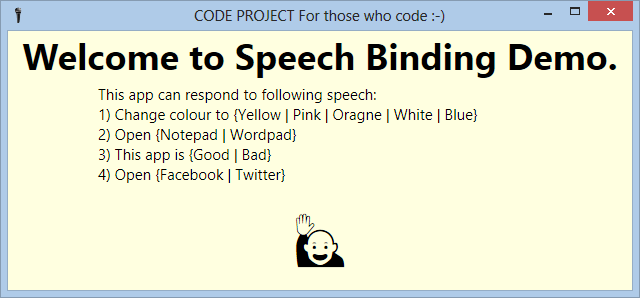

About Sample App

The solution was created with VS2012 and has two projects: a WPF sample app and a library project implementing SpeechBinding. The WPF app was created with the MVVMLight project template. The ViewModel for the window defines the RelayCommands used by the SpeechBinding. Please download it and try it yourself. Speak to the app and have some fun. You need a desktop or laptop with a microphone and .NET Framework 4.5.

Some Observations

SpeechRecognitionEngine can give you false results. For example, you say "Red" and it recognizes "Green". I did not find a reliable way to determine the accuracy of recognition. There is a RecognitionResult.Confidence property of type float whose value ranges from 0 to 1, but even for a false result the value can be 0.9. That means the engine is 90% confident but still 100% wrong.
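One partial mitigation is to ignore results below a confidence threshold. As noted above, this does not eliminate false results, since a wrong result can still score high, but it can filter out the weakest guesses. The sketch below is an illustration only; the 0.95 threshold and the class name are arbitrary assumptions that would need tuning.

```csharp
using System;
using System.Globalization;
using System.Speech.Recognition;

// Hypothetical example: drop low-confidence results before acting on them.
static class ConfidenceFilterSketch
{
    // Arbitrary threshold chosen for illustration; tune it for your microphone and users.
    const float MinConfidence = 0.95f;

    static void Main()
    {
        using (var engine = new SpeechRecognitionEngine(new CultureInfo("en-US")))
        {
            var builder = new GrammarBuilder(new Choices("red", "green", "blue"));
            builder.Culture = engine.RecognizerInfo.Culture;
            engine.LoadGrammar(new Grammar(builder) { Name = "Colours" });

            engine.SpeechRecognized += (sender, e) =>
            {
                if (e.Result.Confidence < MinConfidence)
                {
                    Console.WriteLine("Ignored '" + e.Result.Text + "' (confidence " + e.Result.Confidence + ")");
                    return;
                }
                Console.WriteLine("Accepted '" + e.Result.Text + "' (confidence " + e.Result.Confidence + ")");
            };

            engine.SetInputToDefaultAudioDevice();
            engine.RecognizeAsync(RecognizeMode.Multiple);

            Console.WriteLine("Say a colour, then press Enter to quit.");
            Console.ReadLine();
            engine.RecognizeAsyncCancel();
        }
    }
}
```

In SpeechBinding, the same check could go into OnSpeechRecognized before Command.Execute is called.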

Points of Interest

It was fun for me to discover an MVVM-friendly approach to invoking commands based on the user's voice input. It saves writing repeated code to load grammars. I hope WPF will someday have native support for voice commands. I appreciate your feedback.

History

First posted on 12/20/2014.
