Introduction

When Intel put a call out for apps that would transform the way we interface with traditional PCs, Jacob and Melissa Pennock, founders of Unicorn Forest Games, entered their spell-casting game, Head of the Order*, into the Intel® Perceptual Computing Challenge using technical resources from the Intel® Developer Zone. They took home a top prize.

Figure 1: Unicorn Forest Games' teaser trailer for Head of the Order*.

The idea was to control an app with zero physical contact. Head of the Order (Figure 1) immerses players in a tournament-style game through beautifully illustrated indoor and outdoor settings where they conjure up spells using only hand gestures. When the gameplay for the demo was in the design stages, one of the main goals was to create a deep gestural experience that permitted spells such as shields, fire, and ice to be combined. "While it feels great to cast your first fireball, we really wanted to give the player that moment of amazement the first time they see their opponent string a number of gestures together and annihilate them," said Jacob. He imagined it being like the first time watching a highly skilled player in a classic fighting game, and he wanted there to be a learning curve so players would feel accomplished when they succeeded. Jacob's goal was to make it different from mastering play with a physical controller. "By using gesture, we are able to invoke the same feelings people get when learning a martial art; the motions feel more personal," he said.

The Creative Team

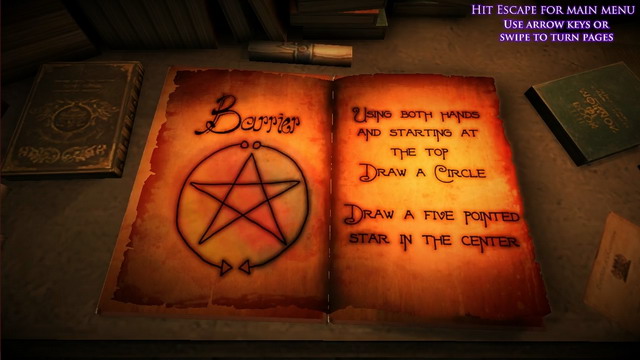

While Jacob created the initial game concept, he and Melissa are both responsible for the creative elements, and they work together to refine the game's design. According to Jacob, Melissa's bachelor's degree in psychology gives her valuable insight into user-experience design, and her minor in studio art led to Head of the Order's impressive visuals. "Her expertise is in drawing, especially detailed line work," said Jacob. Melissa also has a strong background in traditional and 2D art, and created all of the 2D UI assets, including the heads-up display, the main menu, and the character-select screen. Together, they set up rendering and lighting, worked on much of the texturing, and designed levels around what they could do with those assets and special effects. "Melissa added extensive art direction, but we cannot take credit for the 3D modeling, most of which was purchased from the Unity Asset Store," said Jacob. Melissa also created the interactive spell book, which players can leaf through in the demo with a swipe of the hand (see Figure 2).

Figure 2: The pages of the Head of the Order* spell book are turned with a swipe of the hand.

Jacob studied at East Carolina University, where he developed his own gesture-recognition system while earning bachelor's degrees in mathematics and computer science, with a focus on human-computer interaction. His work includes a unistroke gesture-recognition algorithm that improved on the accuracy of the established algorithms of the day. Jacob has licensed his HyperGlyph* library, which he originally created in 2010 and wrote in C++, to major game studios. "It was my thesis work at graduate school [and] I have used it in several projects," he said. "It's quite accurate and designed for games." Beyond matching input to gestures in its template set, Jacob added, the library is also very good at rejecting false positives and input outside that set. That is a large problem for many other stroke-based gesture systems, and it can lead to "…situations where the player scribbles anything he wants, and the power attack happens." The recognizer's strictness and other parameters are configurable.
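HyperGlyph's internals are not described here, but the idea of rejecting input outside the template set can be illustrated with a minimal nearest-template matcher. The C# sketch below is not HyperGlyph's algorithm. It assumes strokes have already been resampled to the same number of points and normalized, and the names `TemplateMatcher` and `maxDistance` are hypothetical. It uses `System.Numerics.Vector2`, so it runs as plain C# outside Unity.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical sketch, not HyperGlyph: nearest-template matching with a rejection threshold.
// Assumes every stroke has already been resampled to the same point count and normalized.
public class TemplateMatcher
{
    private readonly Dictionary<string, Vector2[]> templates = new Dictionary<string, Vector2[]>();
    private readonly float maxDistance; // "strictness": larger values accept sloppier input

    public TemplateMatcher(float maxDistance)
    {
        this.maxDistance = maxDistance;
    }

    public void Add(string name, Vector2[] points)
    {
        templates[name] = points;
    }

    // Average distance between corresponding points of two equal-length strokes.
    private static float PathDistance(Vector2[] a, Vector2[] b)
    {
        float sum = 0f;
        for (int i = 0; i < a.Length; i++)
            sum += Vector2.Distance(a[i], b[i]);
        return sum / a.Length;
    }

    // Returns the closest template's name, or null when even the closest template is too far
    // away, so a random scribble does not trigger the "power attack".
    public string Recognize(Vector2[] stroke)
    {
        string best = null;
        float bestDistance = float.MaxValue;
        foreach (KeyValuePair<string, Vector2[]> entry in templates)
        {
            if (entry.Value.Length != stroke.Length) continue;
            float d = PathDistance(stroke, entry.Value);
            if (d < bestDistance)
            {
                bestDistance = d;
                best = entry.Key;
            }
        }
        return bestDistance <= maxDistance ? best : null;
    }
}
```

Without the threshold, the matcher would always return some template, which is exactly the "scribble anything" failure described above. The threshold is what lets out-of-set input be rejected.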

His education also proved valuable when it came to the complexities of processing the incoming camera data; that was something he had already mastered. "I had a lot of experience with normalizing and resampling input streams to look at them," said Jacob. While at the university, he developed an extension of formal language theory that describes inputs involving multiple concurrent streams.
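Resampling and normalizing are standard preprocessing steps for stroke recognizers: raw samples arrive at uneven spacing, positions, and sizes, so they are first converted into a fixed number of evenly spaced points in a common frame. The following C# sketch uses the well-known "walk the path and emit a point every L/(n-1) units" resampling method. The `StrokeNormalizer` name and the uniform unit-size scaling are assumptions for illustration, not Jacob's code.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical helpers: resample a raw stroke to n evenly spaced points, then
// normalize position and size so strokes can be compared point by point.
public static class StrokeNormalizer
{
    public static float PathLength(IList<Vector2> pts)
    {
        float length = 0f;
        for (int i = 1; i < pts.Count; i++)
            length += Vector2.Distance(pts[i - 1], pts[i]);
        return length;
    }

    // Walks along the stroke and emits a point every (length / (n - 1)) units.
    public static List<Vector2> Resample(IList<Vector2> pts, int n)
    {
        if (pts.Count == 0) throw new ArgumentException("empty stroke");
        if (n < 2) throw new ArgumentOutOfRangeException(nameof(n));
        float interval = PathLength(pts) / (n - 1);
        var result = new List<Vector2> { pts[0] };
        if (interval <= 0f)
        {
            // Degenerate stroke (all points identical): repeat the single point.
            while (result.Count < n) result.Add(pts[0]);
            return result;
        }
        var src = new List<Vector2>(pts);
        float accumulated = 0f;
        for (int i = 1; i < src.Count; i++)
        {
            float d = Vector2.Distance(src[i - 1], src[i]);
            if (accumulated + d >= interval)
            {
                float t = (interval - accumulated) / d;
                Vector2 q = src[i - 1] + t * (src[i] - src[i - 1]);
                result.Add(q);
                src.Insert(i, q); // continue measuring from the new point
                accumulated = 0f;
            }
            else
            {
                accumulated += d;
            }
        }
        // Floating-point rounding can leave the result one point short (or long).
        while (result.Count < n) result.Add(src[src.Count - 1]);
        if (result.Count > n) result.RemoveRange(n, result.Count - n);
        return result;
    }

    // Moves the centroid to the origin and scales uniformly so the larger side of the
    // bounding box becomes 1 (uniform scaling keeps straight lines from blowing up).
    public static List<Vector2> Normalize(IList<Vector2> pts)
    {
        Vector2 min = pts[0], max = pts[0], sum = Vector2.Zero;
        foreach (Vector2 p in pts)
        {
            min = Vector2.Min(min, p);
            max = Vector2.Max(max, p);
            sum += p;
        }
        Vector2 centroid = sum / pts.Count;
        Vector2 extent = max - min;
        float size = Math.Max(extent.X, extent.Y);
        if (size <= 0f) size = 1f;
        var result = new List<Vector2>(pts.Count);
        foreach (Vector2 p in pts) result.Add((p - centroid) / size);
        return result;
    }
}
```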

Harnessing the 3D Space

The perceptual camera used at Unicorn Forest Games is a Creative* Senz3D. When paired with the Intel® Perceptual Computing SDK, applications can be made to perform hand and finger tracking, facial-feature tracking, and some voice and hand-pose-based gesture recognition. Jacob said he prefers this camera for its close-range sensing; Intel's camera is designed to track the hands and face from a few inches to a few feet away. "It is lighter and faster, and it feels like there are fewer abstraction layers between my code and the hardware," he said.

To process data coming from the camera, Jacob set up a pipeline for each part of the hand that he wanted to track. The app then requests streams of information through the SDK and polls the streams for changes. Those data streams become a depth map of the 3D space. The SDK pre-segments hands from the background, and from that a low-resolution image is created. "We also request information on the hand tracking, which comes as labels mapped to transforms with position and rotation mapped in the 3D space," he added. The Intel Perceptual Computing SDK provides labels for left hand, right hand, individual fingers, and palms.

Describing a small quirk in the Intel Perceptual Computing SDK, Jacob said that the labels at times can become mixed up; for example, the user's right hand might appear in the data under the left-hand label, or vice versa. Jacob addressed the issue by making the system behave exactly the same when using either hand. "In fact, the [Intel] Perceptual Computing SDK is often reporting that left is right and vice versa, and the game handles it just fine," he said.¹
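Here is a minimal C# sketch of that design choice. It assumes a hypothetical `HandSnapshot` per tracked hand, not an SDK type. The SDK label is stored but never consulted, so a left/right swap cannot change the outcome. `HandAgnosticInput` and its method are likewise illustrative names.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical per-frame snapshot of one tracked hand.
public struct HandSnapshot
{
    public string SdkLabel;    // "left" or "right" as reported; may be swapped, so it is never read below
    public bool Tracked;
    public int RaisedFingers;
    public Vector2 FingerTip;
}

public static class HandAgnosticInput
{
    // Every hand goes through the same code path and the label is ignored,
    // so a left/right swap by the SDK cannot change the result.
    public static List<Vector2> PointingFingertips(IEnumerable<HandSnapshot> hands)
    {
        var tips = new List<Vector2>();
        foreach (HandSnapshot hand in hands)
        {
            if (hand.Tracked && hand.RaisedFingers == 1)
                tips.Add(hand.FingerTip);
        }
        return tips;
    }
}
```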

Mapping labels aside, Head of the Order implements a hand-tracking class that initializes the pipelines and does all the interacting with the Intel Perceptual Computing SDK. "This class stores and updates all of the camera information," said Jacob. "It also performs various amounts of processing for things like hands being detected or lost, changes in speed, hands opening and closing, and pose-based gestures, all of which produce events that subsystems can subscribe to and use as needed."
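The event layer Jacob describes can be sketched with ordinary C# events. The class below is a hypothetical stand-in for the game's hand-tracking class, not its actual code. It assumes the caller already has, for each frame, a tracked flag, an open/closed flag, and a hand position, and it turns frame-to-frame changes into events that other subsystems can subscribe to.

```csharp
using System;
using System.Numerics;

// Hypothetical sketch: per-frame hand data goes in, edge-triggered events come out,
// and subsystems subscribe only to the events they need.
public class HandEventSource
{
    public event Action HandDetected;
    public event Action HandLost;
    public event Action HandOpened;
    public event Action HandClosed;
    public event Action<float> SpeedChanged; // speed in input units per second

    private bool wasTracked;
    private bool wasOpen;
    private Vector2 lastPos;
    private float lastSpeed;
    private readonly float speedEpsilon; // deadband so tiny jitter does not spam SpeedChanged

    public HandEventSource(float speedEpsilon = 0.01f)
    {
        this.speedEpsilon = speedEpsilon;
    }

    // Call once per camera frame; dt is the time since the previous frame, in seconds.
    public void Update(bool tracked, bool open, Vector2 position, float dt)
    {
        if (tracked && !wasTracked)
        {
            HandDetected?.Invoke();
            wasOpen = open;      // first frame: record the pose without firing open/close
            lastPos = position;
            lastSpeed = 0f;
        }
        else if (!tracked && wasTracked)
        {
            HandLost?.Invoke();
        }
        else if (tracked)
        {
            if (open && !wasOpen) HandOpened?.Invoke();
            else if (!open && wasOpen) HandClosed?.Invoke();

            if (dt > 0f)
            {
                float speed = Vector2.Distance(position, lastPos) / dt;
                if (Math.Abs(speed - lastSpeed) > speedEpsilon)
                {
                    SpeedChanged?.Invoke(speed);
                    lastSpeed = speed;
                }
            }
            wasOpen = open;
            lastPos = position;
        }
        wasTracked = tracked;
    }
}
```

Because each event fires only on a change, a spell-casting component can, for example, listen for HandClosed without polling the tracker every frame.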

Grasping at Air

Although Head of the Order was built specifically for the Intel Perceptual Computing Challenge, the idea came to Jacob in 2006 while he was watching a TED Talk about multi-touch, before the technology had become commonplace. It was the first time he had seen anything multi-touch, and it sparked him to think past the mouse and keyboard; he realized that the future would be full of touch and perceptual interfaces. He began to imagine content for them; unfortunately, the technology for non-touch inputs hadn't yet been invented.

Fast-forward a few years: with hand-tracking technology available, Jacob and Melissa were able to bring their idea to life, but not before Jacob had a chance to contribute to the development of perceptual computing technology itself. During graduate school, Jacob focused on building effective stroke-based gesture recognition. However, the shape recognition that Head of the Order relies on was designed around touch. This led to one of the biggest challenges Jacob faced when building perceptual interfaces: inputs that take place in mid-air don't have clear start and stop events. As Jacob explained, "You don't have a touch-down and a lift-off like you have with a touch screen, or even a mouse." For Head of the Order, Jacob had to develop something that mimicked those events.
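One way to synthesize touch-down and lift-off is to run a small state machine over the hand pose. The sketch below borrows the control scheme the game ended up with: one raised finger starts input, and an open hand ends it. It assumes the tracker supplies a per-frame count of raised fingers. The name `AirStrokeDetector` is hypothetical, and treating five raised fingers as "open hand" is an assumption.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical sketch: turns a stream of hand poses into discrete strokes by
// synthesizing touch-down (one finger raised) and lift-off (open hand) events.
public class AirStrokeDetector
{
    private readonly List<Vector2> current = new List<Vector2>();

    public bool Drawing { get; private set; }

    // Call once per frame. Returns the finished stroke on the lift-off frame, otherwise null.
    public List<Vector2> Update(int raisedFingers, Vector2 fingertip)
    {
        if (!Drawing && raisedFingers == 1)
        {
            Drawing = true;          // synthetic touch-down
            current.Clear();
        }
        if (Drawing && raisedFingers >= 5)
        {
            Drawing = false;         // synthetic lift-off
            return new List<Vector2>(current);
        }
        // While drawing, 2-4 raised fingers keep the stroke alive (hysteresis), so a
        // momentary misread of the finger count does not end the stroke early.
        if (Drawing) current.Add(fingertip);
        return null;
    }
}
```

The asymmetry between the start condition and the stop condition is deliberate: it gives the detector hysteresis, so noisy finger counts do not break a stroke into fragments.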

The Hand-Rendering System

Jacob created a hand-rendering system that can resample the low-resolution hand images provided by the Intel Perceptual Computing SDK and add them to the game's rendering stack at multiple depths through custom post-processing. "This allows me to display the real-time image stream to the user with various visually appealing effects," he said. Figures 3.1 through 3.3 and the following code samples illustrate how the hand-rendering system works. (The code samples show segments of the hand-tracker class and the renderer that relies on it.)

Figure 3.1: The raw, low-resolution image feed texture applied to the screen through a call to Unity's built-in GUI system. The code is from the Unity demo project provided by Intel.

Figure 3.2: The hand image is first blurred and then applied to the screen with a simple transparent diffuse shader through post-processing done by the HandRenderer script (below). It looks very well-defined at higher resolutions but requires Unity Pro*.

Figure 3.3: A Transparent Cutout shader is applied, which renders clean edges. Multiple hand renders can also be done in a single scene, at configurable depths. For example, the hand images can render on top of any 3D content, but below 2D menus. This is how the cool ghost-trail effects were produced in the main menu of Head of the Order*.

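Code sample 1. Segments of the hand-tracker class, RealSenseCameraConnection. It connects to the camera through the Intel Perceptual Computing SDK, converts the SDK's label map into the hand image, and passes that image to every registered HandRenderer. Only the initialization and key processing portions of the class are shown here.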

```csharp
using UnityEngine;
using System;
using System.Collections;
using System.Collections.Generic;
using System.Runtime.InteropServices;

// Hand-tracker singleton: owns the Intel Perceptual Computing SDK pipeline, builds the
// hand image from the SDK label map each frame, and hands it to every registered HandRenderer.
public class RealSenseCameraConnection : MonoBehaviour
{
    private PXCUPipeline pp;

    [HideInInspector]
    public Texture2D handImage;

    // One color per label to draw (set in the Inspector).
    public Color32[] colors;

    // Geometric node data for two hands (not filled in this excerpt).
    public PXCMGesture.GeoNode[][] handData;

    // Initialized here so AddRenderer also works on an instance created at run time.
    public List<HandRenderer> Renderers = new List<HandRenderer>();

    private static RealSenseCameraConnection instance;

    public static RealSenseCameraConnection Instance
    {
        get
        {
            if (instance == null)
            {
                instance = (RealSenseCameraConnection)FindObjectOfType(typeof(RealSenseCameraConnection));
                if (instance == null)
                    instance = new GameObject("RealSenseCameraConnection").AddComponent<RealSenseCameraConnection>();
            }
            return instance;
        }
    }

    void Awake()
    {
        // Enforce a single instance that survives scene loads.
        if (Instance != this)
            Destroy(this);
        else
            DontDestroyOnLoad(gameObject);
    }

    void Start()
    {
        pp = new PXCUPipeline();
        int[] size = new int[2] { 320, 240 };   // label-map resolution
        if (pp.Init(PXCUPipeline.Mode.GESTURE))
            print("Connected to camera");
        else
            print("No camera detected");

        handImage = new Texture2D(size[0], size[1], TextureFormat.ARGB32, false);
        ZeroImage(handImage);

        handData = new PXCMGesture.GeoNode[2][];
        handData[0] = new PXCMGesture.GeoNode[9];
        handData[1] = new PXCMGesture.GeoNode[9];
    }

    void OnDisable()
    {
        CloseCameraConnection();
    }

    void OnApplicationQuit()
    {
        CloseCameraConnection();
    }

    void CloseCameraConnection()
    {
        if (pp != null)
        {
            pp.Close();
            pp.Dispose();
            pp = null;   // OnApplicationQuit and OnDisable both call this; avoid closing twice
        }
    }

    void Update()
    {
        // Poll without blocking; process only when a new frame is available.
        if (pp != null && pp.AcquireFrame(false))
        {
            ProcessHandImage();
            pp.ReleaseFrame();
        }
    }

    void ProcessHandImage()
    {
        if (QueryLabelMapAsColoredImage(handImage, colors))
            handImage.Apply();   // upload the new pixels to the GPU

        foreach (HandRenderer renderer in Renderers)
            renderer.SetImage(handImage);
    }

    // Converts the SDK label map into a texture: pixels whose label matches labels[j]
    // get colors[j]; everything else becomes transparent.
    public bool QueryLabelMapAsColoredImage(Texture2D text2d, Color32[] colors)
    {
        if (text2d == null || colors == null) return false;

        byte[] labelmap = new byte[text2d.width * text2d.height];
        int[] labels = new int[colors.Length];
        if (!pp.QueryLabelMap(labelmap, labels)) return false;

        Color32[] pixels = text2d.GetPixels32(0);
        for (int i = 0; i < text2d.width * text2d.height; i++)
        {
            bool colorSet = false;
            for (int j = 0; j < colors.Length; j++)
            {
                if (labelmap[i] == labels[j])
                {
                    pixels[i] = colors[j];
                    colorSet = true;
                    break;
                }
            }
            if (!colorSet)
                pixels[i] = new Color32(0, 0, 0, 0);
        }
        text2d.SetPixels32(pixels, 0);
        return true;
    }

    // SDK world space to Unity world space (axes reordered and negated).
    public Vector3 PXCMWorldTOUnityWorld(PXCMPoint3DF32 v)
    {
        return new Vector3(-v.x, v.z, -v.y);
    }

    public Vector3 MapCoordinates(PXCMPoint3DF32 pos1)
    {
        Camera cam = Renderers[0].gameObject.GetComponent<Camera>();
        return MapCoordinates(pos1, cam, Vector3.zero);
    }

    // Maps a point in label-map image coordinates to world space through the camera's
    // viewport, mirroring both axes to match the rendered hand image.
    public Vector3 MapCoordinates(PXCMPoint3DF32 pos1, Camera cam, Vector3 offset)
    {
        Vector3 pos2 = cam.ViewportToWorldPoint(new Vector3(
            (float)(handImage.width - 1 - pos1.x) / handImage.width,
            (float)(handImage.height - 1 - pos1.y) / handImage.height,
            0));
        pos2.z = pos1.z;
        pos2 += offset;
        return pos2;
    }

    public Vector2 GetRawPoint(PXCMPoint3DF32 pos)
    {
        return new Vector2(pos.x, pos.y);
    }

    public Vector3 GetRawPoint3d(PXCMPoint3DF32 pos)
    {
        return new Vector3(pos.x, pos.y, pos.z);
    }

    private void ZeroImage(Texture2D image)
    {
        Color32[] pixels = image.GetPixels32(0);
        for (int x = 0; x < image.width * image.height; x++) pixels[x] = new Color32(0, 0, 0, 0);
        image.SetPixels32(pixels, 0);
        image.Apply();
    }

    public static void AddRenderer(HandRenderer handrenderer)
    {
        Debug.Log("Added Hand Renderer");
        Instance.Renderers.Add(handrenderer);
    }

    public static void RemoveRenderer(HandRenderer handrenderer)
    {
        // Use the field, not the Instance property, so disabling a renderer during
        // shutdown does not create a new connection object.
        if (instance != null)
        {
            if (!instance.Renderers.Remove(handrenderer))
                Debug.LogError("A request to remove a hand renderer was made, but the renderer was not found");
        }
    }
}
```

Code sample 2. This component gets attached to the camera (requires Unity Pro*). The HandRenderer script keeps the rendered hands appearing sharp through a range of resolutions and is used throughout most of the game.

```csharp
using UnityEngine;
using System.Collections;

// Camera post-processing component: draws the hand image supplied by
// RealSenseCameraConnection, optionally softened by an iterative blur.
public class HandRenderer : MonoBehaviour
{
    public bool blur = true;

    [HideInInspector]
    public Texture2D handImage;

    public Material HandMaterial;       // e.g., a simple transparent diffuse or cutout shader
    public int iterations = 3;          // number of blur passes
    public float blurSpread = 0.6f;     // how far the taps move apart on each pass
    public Shader blurShader = null;

    static Material m_Material = null;

    // A scale of (-1,-1) with an offset of (1,1) flips the image on both axes.
    public Vector2 textureScale = new Vector2(-1, -1);
    public Vector2 textureOffset = new Vector2(1, 1);

    protected Material blurmaterial
    {
        get
        {
            if (m_Material == null)
            {
                m_Material = new Material(blurShader);
                m_Material.hideFlags = HideFlags.DontSave;
            }
            return m_Material;
        }
    }

    public void SetImage(Texture2D image)
    {
        handImage = image;
    }

    void OnDisable()
    {
        RealSenseCameraConnection.RemoveRenderer(this);
        if (m_Material)
            DestroyImmediate(m_Material);
    }

    void OnEnable()
    {
        RealSenseCameraConnection.AddRenderer(this);
    }

    // Fills the image with semi-transparent white; not called in this excerpt.
    private void ZeroImage(Texture2D image)
    {
        Color32[] pixels = image.GetPixels32(0);
        for (int x = 0; x < image.width * image.height; x++) pixels[x] = new Color32(255, 255, 255, 128);
        image.SetPixels32(pixels, 0);
        image.Apply();
    }

    // One blur pass: sample four diagonal neighbors, spreading further with each iteration.
    public void FourTapCone(RenderTexture source, RenderTexture dest, int iteration)
    {
        float off = 0.5f + iteration * blurSpread;
        Graphics.BlitMultiTap(source, dest, blurmaterial,
            new Vector2(-off, -off),
            new Vector2(-off,  off),
            new Vector2( off,  off),
            new Vector2( off, -off));
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (handImage == null)
        {
            // No hand image yet: pass the camera image through so the destination is always written.
            Graphics.Blit(source, destination);
            return;
        }

        HandMaterial.SetTextureScale("_MainTex", textureScale);
        HandMaterial.SetTextureOffset("_MainTex", textureOffset);

        if (blur)
        {
            RenderTexture buffer = RenderTexture.GetTemporary(handImage.width, handImage.height, 0);
            RenderTexture buffer2 = RenderTexture.GetTemporary(handImage.width, handImage.height, 0);

            Graphics.Blit(handImage, buffer, blurmaterial);

            // Ping-pong between the two buffers; oddEven is true when buffer holds the latest result.
            bool oddEven = true;
            for (int i = 0; i < iterations; i++)
            {
                if (oddEven)
                    FourTapCone(buffer, buffer2, i);
                else
                    FourTapCone(buffer2, buffer, i);
                oddEven = !oddEven;
            }

            Graphics.Blit(oddEven ? buffer : buffer2, destination, HandMaterial);

            RenderTexture.ReleaseTemporary(buffer);
            RenderTexture.ReleaseTemporary(buffer2);
        }
        else
        {
            Graphics.Blit(handImage, destination, HandMaterial);
        }
    }
}
```

Once the hand is recognized as an input device, it's represented on-screen, and a series of hand movements now control the game. Raising one finger on either hand begins input, opening the whole hand stops it, swiping the finger draws shapes, and specific shapes correspond to specific spells. However, Jacob explained that those actions led to another challenge. "We had trouble getting people to realize that three steps were required to create a spell." So Jacob modified the app to factor in finger speed. "In the new version, if you're moving [your finger] fast enough, it's creating a trail; if you're moving slowly, there's no trail." With this new and more intuitive approach (Figure 4), players can go through the demo without any coaching.

Figure 4: By factoring finger speed into recognition logic, new players have an easier time learning to cast spells.
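The speed rule Jacob describes amounts to a threshold on fingertip velocity. The sketch below assumes one fingertip sample per frame and a frame time in seconds. The class name `SpeedGatedTrail` and any threshold value you pass in are hypothetical, not taken from the game.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical sketch: a fingertip sample joins the visible trail only when the finger
// is moving at least minSpeed; slow movement repositions the finger without drawing.
public class SpeedGatedTrail
{
    private readonly float minSpeed;   // input units per second (assumed; tune per game)
    private Vector2? last;

    public List<Vector2> Points { get; } = new List<Vector2>();

    public SpeedGatedTrail(float minSpeed)
    {
        this.minSpeed = minSpeed;
    }

    // dt is the time since the previous sample, in seconds. Returns true if the sample was drawn.
    public bool AddSample(Vector2 tip, float dt)
    {
        bool drawn = false;
        if (last.HasValue && dt > 0f)
        {
            float speed = Vector2.Distance(tip, last.Value) / dt;
            if (speed >= minSpeed)
            {
                Points.Add(tip);
                drawn = true;
            }
        }
        last = tip;
        return drawn;
    }
}
```

Gating on speed means that moving slowly acts as an implicit "pen up," so players never have to be taught an explicit start step to keep stray movement from drawing.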

Gesture Sequencing

In addition to the perceptual interface that he developed, Jacob believes that the biggest innovation in Head of the Order is its gesture sequencing, which permits players to combine multiple spells into a super-spell (called "combo spells" in the game; see Figure 5). "I haven't seen many other systems like that," he said. "For instance, most of the current traditional gestures are pose-based; they're ad hoc and singular." In other words, other systems typically define each gesture as a single pose, such as two raised fingers. "That is mapped to a singular action; whereas in Head of the Order, stringing gestures together can have meaning as a sequence," Jacob added. For example, a Head of the Order player can create and cast a single fireball (Figure 6) or combine it with a second fireball to make a larger fireball, or perhaps a ball of lava.

Figure 5: Head of the Order* permits individual spells to be combined into super-spells, giving players inordinate power over opponents, provided they can master the skill.
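The game's actual spell list and combination rules are not published here. The following C# sketch uses made-up gesture names ("fire", "shield") and a made-up "Large Fireball" combo to show one simple way that sequences could map to spells: a lookup table plus greedy longest-match splitting. `ComboBook` and its methods are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical combo table: gesture sequences map to spells, and a cast sequence is
// split greedily into the longest known combos.
public class ComboBook
{
    private readonly Dictionary<string, string> combos = new Dictionary<string, string>();
    private int maxLength = 1;

    public void Define(string spell, params string[] gestures)
    {
        combos[Key(gestures)] = spell;
        maxLength = Math.Max(maxLength, gestures.Length);
    }

    // Returns the spells for the whole sequence, or null if any part is not a known combo.
    public List<string> Parse(IList<string> gestures)
    {
        var spells = new List<string>();
        int i = 0;
        while (i < gestures.Count)
        {
            string spell = null;
            int used = 0;
            for (int len = Math.Min(maxLength, gestures.Count - i); len >= 1; len--)
            {
                if (combos.TryGetValue(Key(gestures.Skip(i).Take(len)), out spell))
                {
                    used = len;
                    break;
                }
            }
            if (used == 0) return null; // unrecognized gesture: reject the whole cast
            spells.Add(spell);
            i += used;
        }
        return spells;
    }

    private static string Key(IEnumerable<string> gestures)
    {
        return string.Join("+", gestures);
    }
}
```

Greedy matching keeps the lookup simple, but it can reject a sequence that a different split would accept. A system with overlapping combos might need backtracking or an explicit grammar instead.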

Developing the ability to combine spells involved dreaming up the possible scenarios of spell combinations and programming for each one individually. "The main gameplay interaction has a spell-caster component that 'listens' to all the hand-tracker events and does all the higher-level, stroke-based gesture recognition and general spell-casting interaction," Jacob explained. He compared its functions to those of a language interpreter. "It works similar to the processing of programming languages, but instead of looking at tokens or computer-language words, it's looking at individual gestures, deciphering their syntax, and determining whether it's allowed in the situation."

Figure 6: Fireball tutorial.

Provided all of those scenarios are handled correctly, this approach supports many complex interactions: players string gestures together, and the system recognizes the sequence and turns it into a new, combined spell. "Once you can land some of the longer spells, it feels like you're able to do magic. And that's the feeling we're after."
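To make the interpreter analogy concrete, here is a hypothetical finite-state checker that treats each recognized gesture as a token. The transition table plays the role of syntax, and a caller-supplied predicate plays the role of "allowed in the situation." The states, gesture names, and the `GestureGrammar` class are illustrative assumptions, not the game's spell caster.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: gestures are tokens, transitions define valid syntax,
// and a predicate lets game state veto a gesture in the current situation.
public class GestureGrammar
{
    public const string Start = "start";

    private readonly Dictionary<(string, string), string> transitions = new Dictionary<(string, string), string>();
    private readonly HashSet<string> accepting = new HashSet<string>();

    public void Allow(string from, string gesture, string to)
    {
        transitions[(from, gesture)] = to;
    }

    public void Accept(string state)
    {
        accepting.Add(state);
    }

    // Walks the gesture tokens the way a parser walks language tokens.
    public bool Check(IEnumerable<string> gestures, Func<string, bool> isAllowed)
    {
        string state = Start;
        foreach (string g in gestures)
        {
            if (!isAllowed(g)) return false;                                 // situational rule
            string next;
            if (!transitions.TryGetValue((state, g), out next)) return false; // syntax error
            state = next;
        }
        return accepting.Contains(state);
    }
}
```

For example, after `Allow(Start, "fire", "charged")`, `Allow("charged", "fire", "charged")`, `Allow("charged", "throw", "cast")`, and `Accept("cast")`, the sequence fire, fire, throw is valid, while a lone throw is a syntax error.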

Figure 7: What makes Head of the Order* unusual is the ability to combine spells. In this video, developer Jacob Pennock shows how it's done.

Innovations and the Future

Jacob, who has contributed to the advancement of perceptual computing, believes it will someday be as pervasive as computers themselves, perhaps even more so, and that it will have numerous practical applications. "Several obvious applications exist for gesture in the dirty-hand scenario. This is when someone is cooking, or a doctor is performing surgery, or any instance where the user may need to interact with a device but is not able to physically touch it."

To Jacob, the future will be less like science fiction and more like wizardry. "While we do think that gestures are the future, I'm tired of the view where everybody sees the future as Minority Report or Star Trek," Jacob lamented. He hopes that hand waves will control household appliances within three to five years. Jacob believes that gesturing will feel more like Harry Potter than Iron Man. "The PlayStation* or Wii* controller is essentially like a magic wand, and I think there is potential for interesting user experiences if gestural interaction is supported at an operating-system level. For instance, it would be nice to be able to switch applications with a gesture. I'd like to be able to wave my hand and control everything. That's the dream. That's the future we would like to see."

Intel® Perceptual Computing Technology and Intel® RealSense™ Technology

In 2013, Head of the Order was developed using the new, intuitive natural user interfaces made possible with Intel® Perceptual Computing technology. At CES 2014, Intel announced Intel® RealSense™ technology, a new name and brand for Intel Perceptual Computing. In 2014 look for the new Intel® RealSense™ SDK as well as Ultrabook™ devices shipping with Intel® RealSense™ 3D cameras embedded in them.

Resources

While creating Head of the Order, Jacob and Melissa used Unity, Editor Auto Save*, PlayModePersist* v2.1, Spline Editor*, TCParticles* v.1.0.9, HOTween* v1.1.780, 2D Toolkit* v2.10, Ultimate FPS Camera* v1.4.3, and nHyperGlyph* v1.5 (Unicorn Forest's custom stroke-based gesture recognizer).

1 This is a known issue with the 2013 version of the Intel® Perceptual Computing SDK. The 2014 version, when released, will differentiate between left and right hands.

Intel® Developer Zone offers tools and how-to information for cross-platform app development, platform and technology information, code samples, and peer expertise to help developers innovate and succeed. Join our communities for the Internet of Things, Android*, Intel® RealSense™ Technology and Windows* to download tools, access dev kits, share ideas with like-minded developers, and participate in hackathons, contests, roadshows, and local events.
