Now that you are familiar with building and deploying a Node.js application to a Kubernetes cluster on Azure Kubernetes Service (AKS), let’s create a more complex application. Then, we’ll automate the entire build and deployment process using an Azure DevOps pipeline.

In this article, we’ll build a job listings web application in TypeScript by running multiple services: a web server and a helper process that regularly pulls jobs data from the GitHub Jobs API. Through an Azure DevOps pipeline, we’ll containerize the services and configure and deploy them in a single pod to AKS.

Multi-Container Pods

While it’s generally good practice to run just one microservice process per Kubernetes pod, in some cases, you may run multiple processes on the same pod to share data or resources. For example, your application may include a helper process that acts as a proxy for routing and rate-limiting HTTP requests, loading data in the background, or monitoring and logging the primary process.

Some common multi-container patterns are:

- Sidecar containers: "helper" containers such as health monitoring or logging processes

- Ambassador containers: communication containers that access data from external services

- Adapter containers: connector containers that provide different interfaces for external applications

Setting up the Project

Let’s start the project. You can find the full code on GitHub.

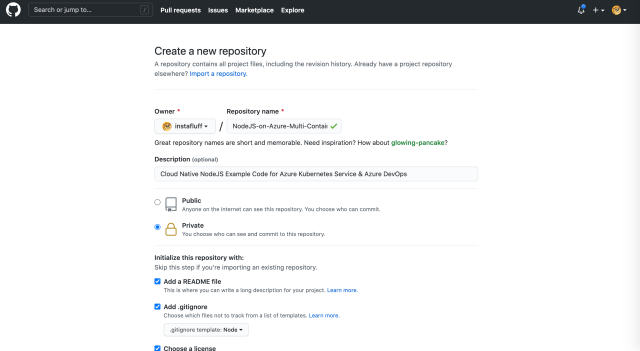

Creating a Repository

Create a new repository on GitHub for your Node.js project and clone it to your computer.

Create two folders inside the project folder and name them "main" and "jobs". These folders correspond to the two Node.js applications we’ll build for this project.

Building the Jobs Cache

First, build a side process inside the jobs folder that pulls the latest job listings from the GitHub Jobs API every 15 minutes and caches them.

Preparation

Open a command prompt or terminal window in the project folder and run npm init inside the jobs folder. Set the entry point value to "index.ts" for TypeScript. Keep the defaults for all other values.

Next, update the package.json file to work with TypeScript. Add the following line to the scripts section to define a start command:

"start": "tsc && node dist/index.js"

This allows us to compile and run the code using the npm start command.

Then, install the TypeScript dependencies by running npm install @types/node typescript. Create a tsconfig.json file with the following:

{
  "compilerOptions": {
    "module": "commonjs",
    "esModuleInterop": true,
    "target": "es6",
    "moduleResolution": "node",
    "sourceMap": true,
    "outDir": "dist",
    "lib": ["es2015"]
  }
}

To finish preparations, we’ll install several project dependencies for this app to host a web server and make HTTP requests. Use this command:

npm install webwebweb node-fetch

Code

Now, create the code to run this project.

Create a new index.ts file inside the jobs folder and import the modules at the top:

import fetch from "node-fetch";

import * as Web from "webwebweb";

Below it, add a function to retrieve and cache the latest jobs from the GitHub API and call it:

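// In-memory cache of the most recent job listings
let jobs = [];

// Fetch the latest listings from the GitHub Jobs API and replace the cache
async function getLatestJobs() {
    jobs = await fetch( "https://jobs.github.com/positions.json" )
        .then( r => r.json() );
}

getLatestJobs();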

Let’s also schedule this function to run every 15 minutes.

// Refresh the cache every 15 minutes
setInterval( () => {
    getLatestJobs();
}, 60000 * 15 );
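
As written, a failed request rejects inside getLatestJobs and nothing handles it. The following is a minimal sketch of one way to keep the previous listings when a refresh fails. The Job type, the Fetcher type, and the function names are assumptions made for illustration and are not part of the project code. The fetch call is passed in as a parameter so the logic can be exercised without a network connection.

```typescript
// Hypothetical types and names for illustration; not part of the article's code.
type Job = { title: string; company: string; description: string };
type Fetcher = () => Promise<Job[]>;

// In-memory cache, replaced only after a successful refresh
let cachedJobs: Job[] = [];

// Refresh the cache, keeping the previous listings if the request fails.
// Resolves to true when the cache was replaced and false otherwise.
async function refreshJobs( fetchJobs: Fetcher ): Promise<boolean> {
    try {
        const latest = await fetchJobs();
        if( !Array.isArray( latest ) ) {
            throw new Error( "Unexpected response shape" );
        }
        cachedJobs = latest;
        return true;
    }
    catch( err ) {
        console.error( "Job refresh failed; keeping cached listings:", err );
        return false;
    }
}

// Run one refresh immediately, then repeat on a timer (15 minutes by default).
// Returns the timer so callers can stop it with clearInterval.
function scheduleRefresh( fetchJobs: Fetcher, intervalMs = 15 * 60 * 1000 ) {
    refreshJobs( fetchJobs );
    return setInterval( () => { refreshJobs( fetchJobs ); }, intervalMs );
}
```

Passing a function that wraps the fetch call shown earlier to scheduleRefresh would cover both the initial load and the 15-minute schedule.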

Lastly, define a search API endpoint that returns a set of job listings filtered using the text field of the query string. Then, run the HTTP server on port 8081.

Web.APIs[ "/search" ] = ( qs, body, opts ) => {
    if( qs.text ) {
        // Keep only the jobs whose description matches every comma-separated term
        let terms = qs.text.split( "," );
        return jobs.filter( x => terms.every( term => x.description.match( new RegExp( term, "i" ) ) ) );
    }
    else {
        return jobs;
    }
};

// Start the HTTP server on port 8081
Web.Run( 8081 );
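
The filter above passes each search term straight to RegExp, so a term such as "c++" throws an error and the "." in "node.js" matches any character. Here is a hedged sketch of the same filtering logic as a standalone function that escapes the terms first and tolerates a missing description. The Job interface and the function names are assumptions for illustration only.

```typescript
// Hypothetical shape for illustration; the real API returns more fields.
interface Job {
    title: string;
    description: string | null;
}

// Escape characters that have a special meaning in a regular expression.
function escapeRegExp( text: string ): string {
    return text.replace( /[.*+?^${}()|[\]\\]/g, "\\$&" );
}

// Return the jobs whose description contains every comma-separated term,
// ignoring case. With no search text, return every job.
function filterJobs( jobs: Job[], text?: string ): Job[] {
    if( !text ) {
        return jobs;
    }
    const patterns = text.split( "," )
        .map( term => term.trim() )
        .filter( term => term.length > 0 )
        .map( term => new RegExp( escapeRegExp( term ), "i" ) );
    return jobs.filter( job =>
        patterns.every( pattern => pattern.test( job.description || "" ) ) );
}
```

Because the function takes the job list as a parameter, it can be tested separately from the web server and then called from the /search handler.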

Now, test to ensure everything is set up correctly. Run npm start inside this folder and open a browser window to http://localhost:8081/search to confirm that it returns the job listings as JSON.

Dockerfile

While we’re at it, we’ll prepare this application for containerization. We don’t need to create the image ourselves. We only need to configure it. The Azure DevOps pipeline creates the image for us.

Create a Dockerfile that copies the package configuration, installs the dependencies, copies the remaining files, opens port 8081, and starts the process, like this:

FROM node:8

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm ci --only=production && npm install typescript@latest -g

COPY . .

EXPOSE 8081

CMD [ "npm", "start" ]

Add a .dockerignore file here as well containing the following text:

node_modules

npm-debug.log

That’s it for this side process.

Creating the Main Web Server

In the next part of this project, we create the main Node.js web server that communicates with Jobs Cache. This process is similar to creating the Jobs Cache.

Preparation

Open a command prompt or terminal window in the project folder and run npm init inside the main folder. Set the entry point value to "index.ts" for TypeScript and leave all other values as the default.

Update the package.json file to work with TypeScript, as you did before, by adding the following line to the scripts section to define a start command:

"start": "tsc && node dist/index.js"

Then, install the TypeScript dependencies by running npm install @types/node typescript. Create a tsconfig.json file with the following:

{
  "compilerOptions": {
    "module": "commonjs",
    "esModuleInterop": true,
    "target": "es6",
    "moduleResolution": "node",
    "sourceMap": true,
    "outDir": "dist",
    "lib": ["es2015"]
  }
}

Next, install the same project dependencies for this app as we did for the first app, with:

npm install webwebweb node-fetch

Code

Now, create the code to run this project.

First, create a new index.ts file inside the main folder and import the modules at the top:

import fetch from "node-fetch";

import * as Web from "webwebweb";

Next, define a /jobs API endpoint that fetches the list of job openings from the Jobs Cache via a localhost HTTP request, then starts the HTTP server on port 8080.

Note: For demonstration purposes, this example uses HTTP to communicate between the two apps. As both processes run in the same pod on AKS, you could instead use shared file storage or other methods to communicate.

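Web.APIs[ "/jobs" ] = async ( qs, body, opts ) => {
    // Forward the search text to the Jobs Cache, URL-encoded; send an empty string when it is missing
    let jobs = await fetch( `http://localhost:8081/search?text=${encodeURIComponent( qs.text || "" )}` )
        .then( r => r.json() );
    return jobs;
};

// Start the HTTP server on port 8080
Web.Run( 8080 );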
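
The note above mentions file storage as an alternative to HTTP. As a rough sketch of that idea, the Jobs Cache could write the listings to a JSON file and the main server could read them back. The file path and function names below are hypothetical. In a pod, the file would need to sit on a volume mounted into both containers, which this project does not configure.

```typescript
import * as fs from "fs";
import * as os from "os";
import * as path from "path";

// Hypothetical location; in a pod this would be a path on a volume shared by both containers.
const cacheFile = path.join( os.tmpdir(), "jobs-cache.json" );

// Jobs Cache side: write to a temporary file first, then rename it into place,
// so a reader never sees a partially written file.
function writeJobs( jobs: unknown[], file = cacheFile ): void {
    const tmp = `${file}.tmp`;
    fs.writeFileSync( tmp, JSON.stringify( jobs ) );
    fs.renameSync( tmp, file );
}

// Main server side: read the listings, or return an empty list
// if the file is missing or cannot be parsed yet.
function readJobs( file = cacheFile ): unknown[] {
    try {
        return JSON.parse( fs.readFileSync( file, "utf8" ) );
    }
    catch( err ) {
        return [];
    }
}
```

This trades the HTTP round trip for a file read, but the search filtering would then have to move into the main server.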

Before we package this main web server with a web page, let’s test to ensure everything is set up correctly between both applications. Run npm start inside this main folder as well as inside the jobs folder, then open a browser window to http://localhost:8080/jobs?text=nodejs to verify that we get the JSON object of job listings related to Node.js.

Finally, make a folder called "public", which is the default folder where the webwebweb module looks for web files, and add an index.html file. You can create a web page to retrieve and display the job listings related to Node.js from the main server. Use this version we built using Bootstrap and jQuery:

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<link href="https://cdn.jsdelivr.net/npm/bootstrap@5.0.0-beta2/dist/css/bootstrap.min.css" rel="stylesheet" integrity="sha384-BmbxuPwQa2lc/FVzBcNJ7UAyJxM6wuqIj61tLrc4wSX0szH/Ev+nYRRuWlolflfl" crossorigin="anonymous">

<title>Job Openings!</title>

</head>

<body class="container">

<h1>Latest Node.js Jobs!</h1>

<div id="jobs" class="row row-cols-1 row-cols-md-3 g-4">

</div>

<script src="https://cdn.jsdelivr.net/npm/bootstrap@5.0.0-beta2/dist/js/bootstrap.bundle.min.js" integrity="sha384-b5kHyXgcpbZJO/tY9Ul7kGkf1S0CWuKcCD38l8YkeH8z8QjE0GmW1gYU5S9FOnJ0" crossorigin="anonymous"></script>

<script src="https://code.jquery.com/jquery-3.6.0.min.js" integrity="sha256-/xUj+3OJU5yExlq6GSYGSHk7tPXikynS7ogEvDej/m4=" crossorigin="anonymous"></script>

<script>

fetch( "/jobs?text=node.js" )

.then( r => r.json() )

.then( jobs => {

jobs.forEach( job => {

$( "#jobs" ).append( `

<div class="col">

<div class="card">

<img src="${job.company_logo}" class="card-img-top" alt="${job.company}">

<div class="card-body">

<h5 class="card-title">${job.company}</h5>

<h6 class="card-subtitle mb-2 text-muted">${job.title}</h6>

<p class="card-text">${job.description}</p>

<a href="${job.url}" class="btn btn-primary">Apply</a>

</div>

</div>

</div>` );

});

});

</script>

</body>

</html>

Now, when you open your browser to http://localhost:8080, you should see a web page populated with job openings.

Dockerfile

Let’s prepare this app for containerization. Once again, we only need to configure it, not create the image ourselves, because the Azure DevOps pipeline creates the image for us.

Create a Dockerfile that copies the package configuration, installs the dependencies, copies the remaining files, opens port 8080, and starts the process like this:

FROM node:8

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm ci --only=production && npm install typescript@latest -g

COPY . .

EXPOSE 8080

CMD [ "npm", "start" ]

Add a .dockerignore file here as well containing the following:

node_modules

npm-debug.log

We’re now ready to deploy.

Be sure to commit and push these changes to your repository before we move forward.

Setting up Azure Kubernetes Service

In the next steps, we set up an Azure resource group and a new Kubernetes cluster where we’ll deploy this project. If you already have an AKS cluster configured with an Azure Container Registry for your images, you can skip this section.

Install the Azure CLI if you do not have it already.

Log in to your Azure account using the console command az login. This opens a browser window to the Azure login web page.

Create an Azure resource group for this project:

az group create -n [NAME] -l [REGION]

For example:

az group create -n nodejsonaks -l westus

After that, create the Azure Container Registry for this resource group, to host your images:

az acr create --resource-group [NAME] --name [ACR-NAME] --sku Basic

For example:

az acr create --resource-group nodejsonaks --name nodejsonaksContainer --sku Basic

Now, create the AKS cluster with the following command. (This step takes several minutes, so it could be a good time for a quick coffee break.)

az aks create --resource-group [NAME] --name [CLUSTER-NAME] --node-count 1 --generate-ssh-keys

For example:

az aks create --resource-group nodejsonaks --name nodejsonaksapp --node-count 1 --generate-ssh-keys

Note: We’ll look at monitoring and scaling in the next part of this series, but if you like, you can enable monitoring here with the additional options: --enable-addons monitoring

Automating Continuous Integration and Deployment

It’s time to deploy code to your Kubernetes cluster on AKS.

Let’s look at how to automate this entire process using Azure DevOps. Then, whenever we push code changes to the repository, the code deploys to the cluster.

First, sign in to Azure DevOps and create a new project.

Create a new pipeline and select where your code resides, in this case, GitHub.

Select your repository. You may need to authorize access to the GitHub repository.

Then, select Deploy to Azure Kubernetes Service and select your Azure Subscription. The subscription needs to match the account you used to log into the console with the Azure CLI.

Select the AKS cluster and ACR you created. Set the port to 8080 to match the Main Web Server app, then press Validate and configure.

Azure DevOps generates an azure-pipelines.yml configuration file for you. Because we are deploying multiple containers, we need to make a few changes. Let’s walk through them.

Update the main dockerfilePath to:

'**/main/Dockerfile'

Add two variables to correspond to the Jobs Cache image and Dockerfile:

jobsRepository: 'instafluffnodejsonazuremulticontainerpods-jobs'

jobsDockerfile: '**/jobs/Dockerfile'

Add a second Docker@2 task in the build stage to containerize the Jobs Cache app:

- task: Docker@2
  displayName: Build and push jobs container image
  inputs:
    command: buildAndPush
    repository: $(jobsRepository)
    dockerfile: $(jobsDockerfile)
    containerRegistry: $(dockerRegistryServiceConnection)
    tags: |
      $(tag)

Finally, add the Jobs Cache image to the containers list of the deploy task at the bottom of the configuration:

$(containerRegistry)/$(jobsRepository):$(tag)

When you save and run this new pipeline, it commits the configuration files for you in the GitHub repository. You need one last change to deploy the Jobs Cache to your cluster, so don’t be alarmed if your application isn’t working properly yet.

Once the pipeline has committed the configuration files, pull the latest changes to your computer and edit manifests/deployment.yml, adding the Jobs Cache image to the list of containers:

- name: instafluffnodejsonazuremulticontainerpods-jobs
  image: nodejsonakscontainer.azurecr.io/instafluffnodejsonazuremulticontainerpods-jobs
  ports:
  - containerPort: 8081
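
Containers in the same pod share one network namespace, which is why the main server can reach the Jobs Cache on localhost and why the two apps must listen on different ports. The sketch below mirrors the container list as a TypeScript object and checks it for port collisions. The image names and the ContainerSpec type are placeholders for illustration, not values from the generated manifest.

```typescript
// Hypothetical, trimmed-down view of a container entry in a pod spec.
interface ContainerSpec {
    name: string;
    image: string;
    ports: { containerPort: number }[];
}

// Placeholder image names; substitute your own registry and repositories.
const containers: ContainerSpec[] = [
    { name: "main", image: "example.azurecr.io/main", ports: [ { containerPort: 8080 } ] },
    { name: "jobs", image: "example.azurecr.io/jobs", ports: [ { containerPort: 8081 } ] },
];

// Return every port claimed by more than one container in the pod.
function findPortConflicts( specs: ContainerSpec[] ): number[] {
    const seen = new Set<number>();
    const conflicts = new Set<number>();
    for( const spec of specs ) {
        for( const { containerPort } of spec.ports ) {
            if( seen.has( containerPort ) ) {
                conflicts.add( containerPort );
            }
            seen.add( containerPort );
        }
    }
    return Array.from( conflicts );
}

console.log( findPortConflicts( containers ) ); // prints []
```

If both apps were configured to listen on 8080, the function would report that port, and the second process would fail to bind it inside the pod.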

Commit and push this change so that the pipeline runs again and deploys both containers. Then, it’s time to check out our cloud-based application in action. You can find the IP address of the AKS cluster load balancer by going to your Azure DevOps Pipeline and clicking on the Environments tab, going to View Environment, clicking on the cluster, and then opening the Services tab.

In this case, it is http://40.83.163.48:8080. You might also notice that the Jobs Cache process is protected and unavailable at http://40.83.163.48:8081.

Cleaning Up

Now that we’ve seen our app in action, here are the steps to delete the project when you are ready to clean up the resources. This way, you don’t run out of free build minutes.

The easiest way to delete your cluster and resources is to delete the resource group like this:

az group delete --name [NAME]

For example:

az group delete --name nodejsonaks

To confirm your resource group is deleted, use this command:

az group list --output table

Finally, open Project settings in Azure DevOps and delete the project.

Next Steps

In this part of the cloud native for Node.js series, you learned how to configure and automatically deploy a multi-container Node.js application on Azure. Continue with this series to discover how Azure helps you easily monitor and scale your microservices on AKS.

Raphael Mun is a tech entrepreneur and educator who has been developing software professionally for over 20 years. He currently runs Lemmino, Inc and teaches and entertains through his Instafluff livestreams on Twitch building open source projects with his community.