JSON (C++) is a streaming JSON processor that introduces a navigation and extraction paradigm to efficiently search and pick data out of JSON documents of virtually any size, even on tiny machines with 8-bit processors and kilobytes of RAM.

Introduction

JSON libraries typically use an in-memory model to store their data, but this quickly breaks down when you're dealing with huge data, very little RAM, or both.

Here, we'll endeavor to put JSON on a diet, and stream everything so we can handle huge data on any machine regardless of how much or little RAM it has. Now you can supersize your JSON without the guilt.

The library provides something innovative - a streaming search and retrieve, called an "extraction". This allows you to front load several requests for related fields, array elements or values out of your document and queue them for retrieval in one highly efficient operation. It's not transactionally atomic because that's impossible for a pure streaming pull parser on a forward only stream, but it's as close as you're likely to get. When performing extractions, the reader automatically knows how to skip forward over data it doesn't need without loading it into RAM so using these extractions allows you to tightly control what you receive and what you don't without having to load field names or values into RAM just to compare or skip past them. You only need to reserve space enough to hold what you actually want to look at.

My last JSON offering was recent, but I decided to rewrite it to clean it up, to change the way data is retrieved from the source, and to make sure it could target 8-bit processors like the ATmega2560. I no longer support whitelist and blacklist "filters". Instead, the library uses a different mechanism for efficient retrieval, again called "extraction".

This new and improved library also features more readable code and more sample code as well as fixing a number of bugs.

It can be used to process bulk JSON data of virtually any size, efficiently searching and selectively extracting data, and should compile on nearly any platform where C++ is available.

Early Release Note

Currently, this library does not support UTF-8 Unicode, only ASCII. UTF-8 support is being added, and the library will be updated soon.

Conceptualizing this Mess

Forget in-memory trees. When you're dealing with 8kB of total RAM and/or gigabytes of data, in-memory trees won't get you very far.

This library is based around a pull-parser. A pull parser is a type of parser that reads input a small step at a time. You call its read() method in a loop until it returns false, and then for each iteration you can query the parser for various bits of information about the current node you're examining in the document.
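
To make the read()-in-a-loop shape concrete, here is a toy pull reader over an in-memory string. ToyPullReader, its NodeType values and countNodes() are hypothetical names invented for this sketch. It is not the library's JsonReader, it does no real validation, and it only borrows the idea of stepping one node at a time and querying the current node between steps.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

// Toy pull reader (hypothetical, NOT the library's JsonReader).
// It steps over a string one node at a time: structural characters,
// quoted strings, and bare literals such as numbers, true, false, null.
class ToyPullReader {
public:
    enum NodeType { Initial, Object, EndObject, Array, EndArray, String, Literal, EndDocument };

    explicit ToyPullReader(const std::string& text) : m_text(text) {}

    // Advances one node. Returns false once the input is exhausted.
    bool read() {
        // Skip whitespace and the separators a real parser would validate.
        while (m_pos < m_text.size() &&
               (std::isspace(static_cast<unsigned char>(m_text[m_pos])) ||
                m_text[m_pos] == ',' || m_text[m_pos] == ':'))
            ++m_pos;
        if (m_pos >= m_text.size()) { m_type = EndDocument; return false; }
        char ch = m_text[m_pos++];
        m_value.clear();
        switch (ch) {
        case '{': m_type = Object; return true;
        case '}': m_type = EndObject; return true;
        case '[': m_type = Array; return true;
        case ']': m_type = EndArray; return true;
        case '"':
            // Capture up to the closing quote, keeping escapes undecoded.
            while (m_pos < m_text.size() && m_text[m_pos] != '"') {
                if (m_text[m_pos] == '\\' && m_pos + 1 < m_text.size())
                    m_value += m_text[m_pos++];
                m_value += m_text[m_pos++];
            }
            if (m_pos < m_text.size()) ++m_pos; // consume the closing quote
            m_type = String;
            return true;
        default:
            // Bare literal: read until a delimiter.
            m_value += ch;
            while (m_pos < m_text.size() &&
                   std::string(",:]} \t\r\n").find(m_text[m_pos]) == std::string::npos)
                m_value += m_text[m_pos++];
            m_type = Literal;
            return true;
        }
    }
    NodeType nodeType() const { return m_type; }
    const std::string& value() const { return m_value; }

private:
    std::string m_text;
    std::size_t m_pos = 0;
    NodeType m_type = Initial;
    std::string m_value;
};

// Counts nodes by pulling until read() returns false.
inline int countNodes(const std::string& json) {
    ToyPullReader reader(json);
    int count = 0;
    while (reader.read()) ++count;
    return count;
}
```

The caller drives the loop and decides what to look at, which is why only one node's worth of state is ever held at a time.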

The advantage of pull parsers is that they are very time/space efficient, meaning they're fast and don't use a lot of memory. The primary disadvantage is they can be difficult to use. However, I've taken some steps to reduce the difficulty in using it because you can "program" it to extract data at various paths within the document all at once, and it will faithfully execute your extraction before returning the parser to a known state/predictable location.

Because of this, you don't have to actually use much if any of the base pull parser reading functions. You can primarily rely on skipToField()/skipToFieldValue(), skipToIndex(), and extract().

For each section below, I will give you an overview and then cover important methods. Later on, we'll explore some actual code.

Some Terms Used in this Document

- Element - a logical unit of JSON data. It can be an array, an object, or some kind of scalar value. A document is logically composed of these. Some elements, namely arrays and objects, have children. The child elements are the values of each field or the items in an array.

- Node - a marker for some kind of JSON. A document is more or less "physically" composed of these. For example, the JsonReader::Object node type indicates we've just passed a { in the document, while the JsonReader::EndObject node type indicates we've just passed a } in the document. Elements are self-contained units whereas nodes are not. For example, for a document to be well formed, each start node (like JsonReader::Array or JsonReader::Object) must have a corresponding end node (JsonReader::EndArray or JsonReader::EndObject). Elements do not have corresponding "end elements" - a JSON element and its logical descendants span several "physical" nodes.

- Document - a logical construct indicating an entire well-formed source for JSON data, such as a file. A document starts with a JsonReader::Initial node and ends on a JsonReader::EndDocument node. A document has a single root element - either an array, an object or a scalar value. Any other elements can only exist underneath the root element or its descendants.

Initialization and Resetting

Let's start with how you create a reader over an input source, and the related method of feeding it a new input source to start over on.

JsonReader()

Parameters

lexContext - the input source to use, of type LexContext

This starts the reader out in the initial state.

The constructor takes a single argument - an instance of a LexContext derivative which it uses as an input source and a capture buffer for holding relevant data it picks out of the file. Different LexContext derivatives are used to read from different types of inputs, although the ArduinoLexContext takes an Arduino Stream which makes it pretty universal since files and network connections and serial ports all derive from it - at least on the Arduino platform. Once you choose and declare the appropriate LexContext, you can pass an instance to the JsonReader constructor.

reset()

Parameters

lexContext - the input source to use, of type LexContext

Resetting the reader will also return it to the initial state, and at that point, you can pass it a new LexContext. It's basically like calling the constructor again.

Retrieving the Associated LexContext

Sometimes, you might want to get to the attached LexContext of the reader. One particularly good reason is you can coax the current physical cursor location out of it, including line and column information. This can help you debug queries.

context()

Retrieves the lexical context information associated with this reader.

Returns

- A LexContext& referencing the currently attached input source and capture buffer.

Use this primarily for informational purposes to help you keep track of the reader during a query. It has the location information and capture buffer with it which can sometimes be useful, although you can usually get to the capture buffer simply by calling value() off the reader - see later.

The Cursor and Location Information

If you're not sure where your reader is in the document, you can check this. Keep in mind that the physical cursor is always at the start of the next node, which means it won't register a nodeType() of JsonReader::Object until after it has passed the { marker. Therefore, to track your logical position, just look one node behind where your physical cursor is currently located. If you're at the start of the first field in an object, you are on JsonReader::Object, not JsonReader::Field. Once you have passed the field name and the ":" you will be on JsonReader::Field. Once you have passed the scalar value, you will be on JsonReader::Value.

The physical cursor position isn't reported directly off the JsonReader for the simple fact that it does not match the logical cursor position reflected by nodeType(), value(), et al. While it's possible to track the start and end position for each logical node, that requires more member variables, ergo more stack to run. I didn't feel it justified itself, because that sort of feature is more suited to highlighting editors and such.

Lexing and Custom Input Sources

LexContext is the base class for all lexical input sources, whether they draw from a file, in-memory string, a network socket or some other source.

A LexContext manages two things - an input source and a capture buffer. The reason these are on the same class is that they interoperate and the two tasks of lexing and capturing are tightly intertwined. For example, you can use tryReadUntil('\"','\\',false) to read all the content between the quotes of a C style string, respecting (but not decoding) escapes. While performing this operation, LexContext is doing two things - it's reading from an input source, and as it reads, it's capturing to the capture buffer. If these concerns of capturing and reading were separated into different classes, it would greatly complicate using and extending these facilities with more tryReadXXXX() methods.

Currently FileLexContext (in FileLexContext.hpp) and SZLexContext (in LexContext.hpp) implement two custom input sources. Note that they are also abstract, since they don't implement the capturing facilities.

You typically won't need to implement the capturing facilities yourself. You can create a template class that derives from StaticLexContext<TCapacity> and pass the template parameter (size_t TCapacity) through to the StaticLexContext<TCapacity> template instantiation. You can look in FileLexContext.hpp for the class of the same name, and then the StaticFileLexContext<TCapacity> template class for an example of combining the two areas of functionality onto the final class.

You can see that the class FileLexContext derives from LexContext and then overrides read() as well as providing its own open(), attach(), and close() methods. You'll do something similar when you make your custom SocketLexContext or whatever. Implementing read(), meanwhile, is dead simple. Simply advance the cursor by one and return the character at that location, or return LexContext::EndOfInput if you're past the end of the data, or LexContext::Closed if the source is closed or an error occurred.

There's no buffering done on this read, so if you want to buffer you'll have to do it yourself. I avoided it due to this library using enough RAM as it is, and because in my tests, even loading an entire 200kB document into memory and searching off that did not improve performance versus my hard drive which led me to think that the hard drive controller's buffering is more than sufficient in this case. Your mileage may vary. Profile if you have problems and then decide where the improvements need to be, as always.
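
As a rough illustration of that read() contract, here is a stand-alone sketch of an unbuffered source over a null terminated string. ToyStringSource is a hypothetical class that does not derive from the real LexContext, and the numeric values chosen for EndOfInput and Closed are assumptions made for this example only.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for an input source. A real one would derive from
// LexContext; the sentinel values here are assumptions for this sketch.
class ToyStringSource {
public:
    enum : int { EndOfInput = -1, Closed = -2 };

    ToyStringSource() : m_sz(nullptr), m_pos(0) {}

    // Attaches a null terminated string and rewinds to its start.
    void attach(const char* sz) { m_sz = sz; m_pos = 0; }

    // Detaches the source; later reads report Closed.
    void close() { m_sz = nullptr; }

    // Returns the next character and advances by one, or a sentinel.
    // No buffering is done here, mirroring the description above.
    int read() {
        if (m_sz == nullptr) return Closed;
        if (m_sz[m_pos] == '\0') return EndOfInput;
        return static_cast<unsigned char>(m_sz[m_pos++]);
    }

private:
    const char* m_sz;
    std::size_t m_pos;
};
```

A socket or file variant would keep the same shape and swap the body of read() for a single-character fetch from its own handle.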

Navigation

The first step to an efficient query is to navigate to the node representing the start of the logical element of the data you want to extract from. While you can extract from the root fairly efficiently, extract() always moves the reader to the end of the object or array you were querying because that way it can continue from somewhere you expected, and that's consistent. However, this means if you extract() from the root, you'll wind up having to scan the entire document because it will scan until the end of the root object. Even though that's relatively efficient because it won't normalize the data, it's not as efficient as getting what you need and then aborting early, especially with network I/O.

The bottom line is navigate first, then extract. We'll cover navigation here, and then later we'll cover extraction.

To navigate efficiently, we have several skipXXXX() methods that allow you to advance logically to a particular object field, or array item, or skip over your current location in various ways.

These methods do minimal validation and no normalization/loading of values with the exception of skipToFieldValue() which can cause scalar values to be loaded. Because of this, they are very efficient, but they will not always catch document well-formedness errors. This was decided because the alternative requires a lot more memory and CPU cycles.

In fact, great pains were taken to keep the RAM usage especially low, including doing all string comparisons character by character straight from the stream rather than loading them into memory first. The code also undecorates strings (removing quotes and translating escapes) inline without loading the entire string into memory. Due to this, when you use this engine, it only allocates space for the values you actually retrieve. If you never retrieve a value, you will never load it into RAM. This makes this parser pretty unique among JSON parsers, and allows it to do complicated queries even with very little memory.
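
The character-by-character comparison can be sketched roughly like this. streamMatchesString() is a hypothetical helper written for this article, not a function from the library. It works on an in-memory cursor for simplicity, handles only the single-character escapes, and treats \u escapes as a mismatch.

```cpp
#include <cassert>
#include <cstddef>

// Compares the body of a JSON string against `target` without copying it.
// `p` must point just past the opening quote. On return it points just past
// the closing quote (or at the terminator if the string was unterminated).
// Escapes are translated inline; \uXXXX is not decoded in this sketch.
inline bool streamMatchesString(const char*& p, const char* target) {
    bool match = true;
    while (*p != '\0' && *p != '"') {
        char ch = *p++;
        if (ch == '\\' && *p != '\0') {
            char esc = *p++;
            switch (esc) {
            case 'n': ch = '\n'; break;
            case 't': ch = '\t'; break;
            case 'r': ch = '\r'; break;
            case 'b': ch = '\b'; break;
            case 'f': ch = '\f'; break;
            case 'u': match = false; continue; // unsupported here
            default:  ch = esc; break;         // covers \" \\ and \/
            }
        }
        if (match) {
            // Keep consuming even after a mismatch so the cursor
            // still ends up past the closing quote.
            if (*target != '\0' && *target == ch) ++target;
            else match = false;
        }
    }
    if (*p == '"') ++p; // consume the closing quote
    return match && *target == '\0';
}
```

Nothing is stored along the way: the only state is the cursor, a pointer into the name being searched for, and one flag.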

Navigation to where you need to go should almost always be your first recourse and consideration, since the navigation methods are the most efficient way to move through a document.

skipToField()

This method sees a lot of use. It is one of the primary ways of navigating. Basically what it does is it skips to the field with the specified name, and on the specified axis.

Parameters

field - indicates the name of the field to skip to.

axis - indicates the search axis to use. This tells the parser what "direction" to search and when to stop. You can scan forward through the document, ignoring hierarchy, scan only the member fields of the current object (siblings), or scan its descendants using JsonReader::Forward, JsonReader::Siblings, JsonReader::Descendants, respectively.

pdepth - a pointer to a "depth tracking cookie" used to return the reader to the current level of the hierarchy after using axis JsonReader::Descendants. When that axis is specified, this parameter is required. Otherwise, it is ignored. Note that all you have to do is declare an unsigned long int and pass its address in. You don't need to read or write it. It's used so that you can mark when the series of descendant calls starts, rather than having a pair of methods like skipToFirstDescendant() and skipToNextDescendant() which I felt would have been a bit uglier and harder to use, and only slightly easier to understand.

Returns

- a value indicating true if success, otherwise false if not found or error. You can check hasError() to disambiguate the false result between an error condition and simply not finding the field. Not finding the field is not an error, just a result.

It works pretty simply, given the parameters. Depending on the axis you use, you may wind up calling it in a loop, such as while(jsonReader.skipToField(...)) which is especially useful for axes other than JsonReader::Siblings. It succeeds if you're on a JsonReader::Object node, or a JsonReader::Field node when called and if the field exists. Otherwise it doesn't succeed. Note that the field under the cursor will not be examined. The next field will. This is to facilitate being able to call it in a loop properly. In other words, the first thing skipToField() does is advance the cursor, before it checks anything. Also note that when you successfully skip to a field, you cannot retrieve the field name from the reader's value() method, which will be empty. This is so the reader does not have to reserve space for the field name in RAM nor copy a string to the capture buffer. The rationale is that if you skipped to a field successfully, you already know its name, and can retrieve it from the same place you got the field argument from.

Warning About skipToField() and the JSON Specification

In JSON fields can be in any order. You cannot and must not rely on fields to be in a known order. It's perfectly acceptable for you to get fields in some order the first time you query a source and then a different order the next time - and you must handle that. YOU CANNOT AND MUST NOT RELY ON FIELD ORDER.

The problem comes when you're trying to use skipToField() with JsonReader::Siblings. If you want to retrieve multiple fields off the same object, say name and id, which do you query for first? If you query for the wrong one, you will end up skipping the other one, and you don't know what order they are in ahead of time.

Because of this, you cannot use skipToField() to retrieve multiple fields off the same object. The way to do it is to use an extraction which you declare and then pass to the reader's extract() method. In the extraction, you name multiple fields, and then the reader will fetch them all at once regardless of their order. We're going to get to that later in the article.
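
The ordering hazard is easy to reproduce with a toy model. In this sketch, ForwardFields, findNameThenId() and countWantedInOnePass() are hypothetical names, and the object is reduced to a list of its field names. The first function mimics two sequential forward-only lookups, and the second checks every field against all wanted names in one pass, which is the general idea behind an extraction rather than the library's actual implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Toy model of one object's field names behind a forward-only cursor.
// Hypothetical type for illustration; it is not the library's JsonReader.
class ForwardFields {
public:
    explicit ForwardFields(const std::vector<std::string>& names)
        : m_names(names), m_pos(0) {}

    // Scans forward for `name`. Anything passed over is gone for good.
    bool skipTo(const std::string& name) {
        while (m_pos < m_names.size()) {
            if (m_names[m_pos++] == name) return true;
        }
        return false;
    }

private:
    std::vector<std::string> m_names;
    std::size_t m_pos;
};

// Sequential lookups: succeeds only if "name" happens to precede "id".
inline bool findNameThenId(const std::vector<std::string>& order) {
    ForwardFields fields(order);
    return fields.skipTo("name") && fields.skipTo("id");
}

// Single pass: test every field against all wanted names as it streams by,
// so the order of the fields in the document no longer matters.
inline std::size_t countWantedInOnePass(const std::vector<std::string>& order,
                                        const std::vector<std::string>& wanted) {
    std::size_t found = 0;
    for (const std::string& field : order)
        for (const std::string& want : wanted)
            if (field == want) ++found;
    return found;
}
```

With the fields ordered name then id, both approaches find everything. Swap the order and the sequential version silently loses id, while the single pass still sees both.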

skipToFieldValue()

This method also sees a lot of use, but it's basically a convenience method that calls skipToField() followed by read(). It's simply a shortcut to avoid having to do that step yourself since it is so common. Note that read() will read the entire value into a buffer if it's a scalar value, like a string or number, so don't use this method with fields whose values you don't intend to retrieve, or your queries will be less efficient.

Parameters

field - indicates the name of the field whose value you want to skip to.

axis - indicates the search axis to use. This tells the parser what "direction" to search and when to stop. You can scan forward through the document, ignoring hierarchy, scan only the member fields of the current object (siblings), or scan its descendants using JsonReader::Forward, JsonReader::Siblings, JsonReader::Descendants, respectively.

pdepth - a pointer to a "depth tracking cookie" used to return the reader to the current level of the hierarchy after using axis JsonReader::Descendants. When that axis is specified, this parameter is required. Otherwise, it is ignored.

Returns

- a value indicating true if success, otherwise false if not found or error. You can check hasError() to disambiguate the false result between an error condition and simply not finding the field. Not finding the field is not an error, just a result.

This works identically to skipToField() except it also reads to the field's value. Note that it will cause scalar values to be loaded into memory.

skipToIndex()

You can advance to a particular item in an array using skipToIndex().

Parameters

index - the zero based index of the array element you wish to retrieve

Returns

true if the element was retrieved, and false if it was not found or if there was an error. Use hasError() to disambiguate negative results.

The reader must be positioned on a JsonReader::Array node when called in order to succeed, and an item with the specified index must be in the array. If not, this method will return false. Note that you cannot skipToIndex() repeatedly to retrieve multiple values from the array because it only works when you start from the beginning of the array. This wasn't meant to be called in a loop. To retrieve multiple values at different indices, you can use skipToIndex() for the first index you want to move to, and then use read() or skipSubtree() over each item to advance further along the array.

skipSubtree()

Returns

true if the subtree was skipped, and false if no subtree or if there was an error. Use hasError() to disambiguate negative results.

This method advances past the logical element at the current position (including all its subelements) to its next sibling, if there is one, or to the end of the object or array otherwise. It is useful for skipping past elements while remaining at the same depth in the hierarchy.
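
One common way to skip a whole element without loading it is to count nesting depth while ignoring anything inside strings. The sketch below shows that technique on an in-memory string. skipElement() is a hypothetical helper, not the library's skipSubtree(), and like the skip methods described above it does minimal validation.

```cpp
#include <cassert>
#include <cstddef>

// Skips one complete JSON element that starts at text[pos] and returns the
// index just past it. Containers are skipped by tracking nesting depth;
// brackets inside strings are ignored. A bare scalar ends at the next
// comma or closing bracket, which is left unconsumed.
inline std::size_t skipElement(const char* text, std::size_t pos) {
    int depth = 0;
    bool inString = false;
    for (; text[pos] != '\0'; ++pos) {
        char ch = text[pos];
        if (inString) {
            if (ch == '\\' && text[pos + 1] != '\0') {
                ++pos; // step over the escaped character
            } else if (ch == '"') {
                inString = false;
                if (depth == 0) return pos + 1; // a bare string ends here
            }
            continue;
        }
        switch (ch) {
        case '"': inString = true; break;
        case '{': case '[': ++depth; break;
        case '}': case ']':
            if (depth == 0) return pos;       // parent's end marker
            if (--depth == 0) return pos + 1; // closed the element itself
            break;
        case ',':
            if (depth == 0) return pos;       // end of a bare scalar
            break;
        default: break;
        }
    }
    return pos;
}
```

The only state carried across the whole subtree is an integer and a flag, no matter how large the skipped element is.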

skipToEndObject()

Returns

true if the end of the current object was found and moved to, and false if there wasn't one or if there was an error. Use hasError() to disambiguate negative results.

This method is useful when you're reading data out of fields in an object and then you finish, and you need to find your way to the end of it.

skipToEndArray()

Returns

true if the end of the current array was found and moved to, and false if there wasn't one or if there was an error. Use hasError() to disambiguate negative results.

This is the counterpart to the previous method, but for arrays.

Memory Reservation, Tracking and Management

The JSON reader operates with two separate memory buffers. The first one is used for capturing data when scanning off of an input source. This must be the length of the longest field name or scalar value that you actually want to examine including the trailing null terminator character. For example, if you're only looking at name fields, you might get away with a capture buffer of only 256 bytes, but if you want to retrieve the value of overview fields, you may need up to 2kB or even more for long fields. I prefer to start my code allocating more space than I need, and then reducing it once I profile. Eventually, I'll provide a way to stream scalar values (really, strings) but for now, it has to load the entire scalar value at once, which is maybe its biggest current limitation aside from lack of Unicode.

Besides the capture buffer, certain operations, such as building in-memory results require an additional buffer known as a MemoryPool. These are reserved fixed length blocks of memory that support very fast allocation. They do not support individual item deletion, but the entire pool can be recycled/freed all at once.
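
A pool with those properties is usually some form of bump allocator. Here is a minimal sketch of one, assuming nothing about the real MemoryPool beyond the behavior described above. ToyPool and its alloc() method are hypothetical names, and alignment is deliberately ignored, which is fine for character data but not for arbitrary types.

```cpp
#include <cassert>
#include <cstddef>

// Toy fixed-capacity pool (hypothetical; not the library's MemoryPool).
// Allocation just advances an offset into a fixed block, so it is very
// fast. Individual items cannot be freed; the whole pool is recycled
// at once. Alignment is ignored in this sketch.
template <std::size_t TCapacity>
class ToyPool {
public:
    ToyPool() : m_next(0) {}

    // Returns a block of `size` bytes, or nullptr if it does not fit.
    void* alloc(std::size_t size) {
        if (size > TCapacity - m_next) return nullptr;
        void* result = m_heap + m_next;
        m_next += size;
        return result;
    }

    // Invalidates every allocation and makes the full capacity available.
    void freeAll() { m_next = 0; }

    std::size_t used() const { return m_next; }
    std::size_t capacity() const { return TCapacity; }

private:
    unsigned char m_heap[TCapacity];
    std::size_t m_next;
};
```

Because there is no per-item bookkeeping, the only overhead is a single offset, which matters when the whole budget is a few hundred bytes.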

Basically, to do just about anything with this reader, you'll need to declare a LexContext derivative with a particular capacity in bytes, and you'll probably need a MemoryPool derivative with a particular capacity in bytes as well.

While I told you how to compute the LexContext capture buffer size as the length of the longest value you'll examine (not including quotes or escapes), it's similarly simple to come up with a MemoryPool size for your extractions with some profiling. It's the sum of the sizes of the values retrieved. For scalar values, computing it is as simple as I said. If, on the other hand, you're holding non-scalar elements (objects or arrays), there's additional overhead to store things like field names and to link all that data together. Furthermore, due to differing pointer sizes, different machines can require different amounts of RAM to fulfill the same query. The good news is that generally, the amount of space you'll need is mostly dictated by the combined lengths of retrieved data. For the most part, the same query will take the same amount of RAM on the same machine except for variance due to the sizes of the retrieved values like the length of the strings. Therefore, once you profile, it's easy to ballpark it and then allocate a little extra space in case the data is longer than your test data.

When you declare these, you must have the capacities available up front, usually at compile time. They cannot expand their size at runtime for performance reasons. The trade off is well worth it in terms of efficiency, but it does require some initial legwork to figure out what values to plug in. Of course, you could just declare them as 2kB apiece and you'd be safe for most situations, but that uses 4kB of RAM in total and honestly your pool could probably be much smaller at least - a quarter kB is often well more than enough depending on your query. For a PC this might not matter, but for a little ATmega2560 with a mere 8kB of SRAM you'd better believe it matters. Fortunately, 8kB of RAM is plenty for this because of the way it's written. More the worry with the little 8-bit monster is how slow the poor thing is! There's not much to be done about that however. It's a little 8-bit processor with a lot of I/O, but not a lot of horsepower.

Generally, what you do with the LexContext derivatives is you declare one as a global and give it a size, for example StaticFileLexContext<512> declares a LexContext over a file based source with a 512 byte capture buffer. Note that the size includes a null terminator, so for the above you'd actually have 511 bytes of string space, and then a null terminator. If you need sources other than file or string sources, you can easily derive your own and overload read().
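
The "capacity includes the terminator" rule can be sketched with a tiny fixed buffer. ToyCaptureBuffer and its members are hypothetical and only model the sizing behavior described above, not the real StaticLexContext<TCapacity>.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Toy capture buffer (hypothetical; models only the sizing rule).
// TCapacity counts the null terminator, so at most TCapacity - 1
// characters of actual string data fit.
template <std::size_t TCapacity>
class ToyCaptureBuffer {
    static_assert(TCapacity > 0, "need room for the terminator");

public:
    ToyCaptureBuffer() : m_size(0) { m_buffer[0] = '\0'; }

    // Appends one character, keeping the buffer terminated.
    // Returns false, leaving the buffer unchanged, when it is full.
    bool capture(char ch) {
        if (m_size + 1 >= TCapacity) return false;
        m_buffer[m_size++] = ch;
        m_buffer[m_size] = '\0';
        return true;
    }

    // Empties the buffer for the next value.
    void clear() { m_size = 0; m_buffer[0] = '\0'; }

    const char* value() const { return m_buffer; }
    std::size_t size() const { return m_size; }

private:
    char m_buffer[TCapacity];
    std::size_t m_size;
};
```

So a capacity of 512 leaves 511 characters of string space, and a capture that would overrun fails instead of growing.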

You do much the same thing if you need a MemoryPool. You'll need one if you're going to do extraction or parsing, but otherwise you might not use one at all. You'll know you need one because certain functions take a MemoryPool& pool parameter. An example declaration looks like StaticMemoryPool<1024> which declares a memory pool with a 1kB capacity known at compile time.

You will not generally use the LexContext members or the MemoryPool members directly. You simply pass them as parameters to various methods.

Since they aren't part of the JSON API directly, we won't cover their individual members here.

If you want to read more about the memory pools, see this article. The codebase has been updated since then, but the concepts and most of the functionality are the same.

If you want to track how much memory you are using, you can use a profiling LexContext from ProfilingLexContext.h and check its used() value, which tracks the maximum allocation size and can be reset with resetUsed(). Pools already have a used() accessor method, so you can simply check that. The pool's freeAll() method resets the used() value as it frees/invalidates memory.
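
Tracking a maximum like that amounts to keeping a high-water mark. The sketch below shows the idea in isolation. ToyUsageTracker, captured() and cleared() are hypothetical names. used() and resetUsed() borrow their names from the text, but their exact behavior in the library is an assumption here.

```cpp
#include <cassert>
#include <cstddef>

// Toy high-water-mark tracker (hypothetical; not the profiling LexContext).
// It remembers the largest number of characters ever held at once.
class ToyUsageTracker {
public:
    ToyUsageTracker() : m_current(0), m_peak(0) {}

    // Call once for each character added to the capture buffer.
    void captured() {
        if (++m_current > m_peak) m_peak = m_current;
    }

    // Call when the capture buffer is emptied for the next value.
    void cleared() { m_current = 0; }

    // Largest capture seen since construction or the last resetUsed().
    std::size_t used() const { return m_peak; }

    // Restarts peak tracking from the current level.
    void resetUsed() { m_peak = m_current; }

private:
    std::size_t m_current;
    std::size_t m_peak;
};
```

Run your query against representative data, read the peak, and size the real buffer a little above it.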

That's as much as I'll cover about those here, since again, they are somewhat outside the scope of the JSON functionality itself which is what this article covers.

Parsing, In-Memory Trees and the JsonElement

For certain situations, you may need a little bit of JSON to reside in memory. A good reason for that might be that the reader is engaging in an extract operation that returns multiple values from the document in a single run and it needs to hold them around so that it can return them to you.

Another reason might be ... well there really isn't one - at least a good one. Yes, you can parse objects out of the document with parseSubtree() and given the RAM, you could parse on the root and load the entire document in memory if you want, and then operate on that.

Don't.

The overhead I've found is another quarter of the file size. My 190kB+ file clocks in at over 240kB in memory, due to maintaining things like linked lists. Just because you can do something doesn't mean you should. The whole point of this library is to be efficient. These in-memory trees are not efficient compared to the pull parser. If you want an in-memory library, there are plenty of more capable offerings than this in terms of in-memory trees*. These, of course, use a lot more RAM. Almost every other library out there is an in-memory library, which is why I wrote this one. Using this library requires a different way of thinking about your JSON queries than you're probably used to, but it pays off.

* an in-memory library can often do things like JSONPath which are not possible (at least fully) with a pull parser. This library does not include JSONPath for its in-memory trees due to the memory requirements of it. This library also does not hash or pool strings so field lookups and storage aren't especially efficient for in-memory trees.

Okay, now that I've said that, small numbers of directly pertinent in-memory scalar JSON elements are fine, which is what this library is designed to give you.

When you do receive JSON elements back, they are represented by a JsonElement which is a kind of variant that can hold any sort of JSON element, be it an array, an object or some kind of scalar value. The JsonElement arrays and objects contain other JsonElements which is how the tree is formed. For example, a JSON object can have fields that contain other objects.

A JsonElement has a type, and then one of several different values depending on the type. You check the type() before determining which accessor to use, for example string() or integer().

You can edit JsonElements but you'll almost always want to treat them as read-only. Editing them is not efficient, and this library was not built for making a DOM. The creation/editing methods are there because I felt like I couldn't necessarily justify the work in making them semi-private/friend methods just because I couldn't think of a use case for building trees manually. So you can.

Now let's explore the JsonElement class:

JsonElement()

This constructor simply initializes a JsonElement with an undefined value.

JsonElement() (multiple overloads)

Parameters

value (various types) - the value to initialize the JsonElement with. These never cause allocations.

type()

Returns

- an int8_t representing the type of the JsonElement. Possible values are JsonElement::Undefined for an undefined value, JsonElement::Null for the null value, JsonElement::String for a string value, JsonElement::Real for a floating point value, JsonElement::Integer for an integer value*, JsonElement::Boolean for a boolean value, JsonElement::Array for an array, and JsonElement::Object for an object.

* an integer is not part of the JSON specification. However, limited precision floating point on less capable processors forces our hand, such that we cannot risk storing things like an integer id in a 4 byte floating point number because we risk losing precision thus corrupting the id. As such, both the reader and the in-memory trees detect and support integers as well as floating point values.
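
The precision problem is easy to demonstrate. This sketch assumes float is a 4 byte IEEE 754 value with a 24-bit significand, which holds on the platforms discussed here but is not guaranteed by the C++ standard. survivesFloatRoundTrip() is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Returns true if `id` is unchanged after being stored in a float.
// Assumes a 32-bit IEEE 754 float (24-bit significand).
inline bool survivesFloatRoundTrip(std::int32_t id) {
    // volatile forces the value through an actual float in memory.
    volatile float asFloat = static_cast<float>(id);
    // Convert back through a wider type so large values cannot overflow.
    return static_cast<std::int64_t>(asFloat) == id;
}
```

Under that assumption, 16777216 (2^24) survives the trip but 16777217 does not, so any id above roughly sixteen million is at risk if it is kept in a 4 byte float.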

Since JsonElement is a variant, it can be one of many types. This accessor indicates the type of the element we're dealing with. You'll almost always check this value before deciding to call any of the getter methods.
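
The check-the-type-then-read pattern looks roughly like this on a toy variant. ToyElement is a hypothetical, scalar-only stand-in that mimics the accessor naming described in this article. It is not the real JsonElement, and integerOr() is an invented helper.

```cpp
#include <cassert>
#include <cstdint>

// Toy scalar-only variant (hypothetical; not the library's JsonElement).
class ToyElement {
public:
    enum Type : std::int8_t { Undefined, Null, String, Real, Integer, Boolean };

    ToyElement() : m_type(Undefined) {}

    std::int8_t type() const { return m_type; }

    // Setters record the type along with the value.
    void string(const char* value) { m_type = String; m_string = value; } // pointer only, no copy
    void real(double value) { m_type = Real; m_real = value; }
    void integer(long long value) { m_type = Integer; m_integer = value; }
    void boolean(bool value) { m_type = Boolean; m_boolean = value; }

    // Getters do no conversion: calling the wrong one is undefined.
    const char* string() const { return m_string; }
    double real() const { return m_real; }
    long long integer() const { return m_integer; }
    bool boolean() const { return m_boolean; }

private:
    std::int8_t m_type;
    union {
        const char* m_string;
        double m_real;
        long long m_integer;
        bool m_boolean;
    };
};

// Reads an integer only after confirming the element holds one.
inline long long integerOr(const ToyElement& element, long long fallback) {
    return element.type() == ToyElement::Integer ? element.integer() : fallback;
}
```

Wrapping the check in a small helper like integerOr() keeps call sites short while still never touching the wrong union member.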

Simple Value Accessor Methods

Each accessor method pair has one method that takes no values and returns a specific type, and one method of the same name that takes one parameter of the same type, and returns void. None of these cause allocations, which is why I call them simple. For example, if you use string() to set the string, a copy of the string is not made. A reference to the existing string is held instead. Scalar values however, are copied. This is the same behavior as the constructor overloads. There is one accessor pair for each type except undefined() which only has a getter. The name of each method corresponds to the name of the type it represents. Setting a value automatically sets the appropriate type. Type conversion is not supported so if you try to get a particular type of value from a different type of element the result is undefined. Note that a few of the set accessors, specifically the null(), pobject() and parray() set accessors take a dummy nullptr value simply to distinguish them from the get accessors. Calling these with nullptr will set the type to the appropriate value, and that's their main purpose in this case.

Here are the accessors by name:

- undefined() (no set accessor)

- null()

- string()

- real()

- integer()

- boolean()

- parray()

- pobject()

Navigating the Tree

There are two indexer operator[] overloads, one that takes a string for objects, and one that takes a size_t (some kind of integer) for arrays. You can use these to navigate objects and arrays. Keep in mind they return pointers, and those pointers will be null if the requested field or element doesn't exist.
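
Because the indexers return pointers, every step of a chained lookup needs a null check. The sketch below shows the pattern on a toy tree whose fields live in a linked list. ToyNode, ToyFieldEntry and lookupNested() are hypothetical names, and only the string indexer is modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

struct ToyNode;

// One field in an object: a name, a value, and a link to the next field.
struct ToyFieldEntry {
    const char* name;
    ToyNode* value;
    ToyFieldEntry* next;
};

// Toy tree node (hypothetical; not the library's JsonElement).
struct ToyNode {
    long long integer;     // scalar payload, meaningful when fields is null
    ToyFieldEntry* fields; // head of the field list, or null for a scalar

    // Walks the list and returns the matching value, or null if absent.
    ToyNode* operator[](const char* name) {
        for (ToyFieldEntry* entry = fields; entry != nullptr; entry = entry->next)
            if (std::strcmp(entry->name, name) == 0) return entry->value;
        return nullptr;
    }
};

// Two-level lookup that checks for null at each step.
inline ToyNode* lookupNested(ToyNode* root, const char* outer, const char* inner) {
    if (root == nullptr) return nullptr;
    ToyNode* first = (*root)[outer];
    return first != nullptr ? (*first)[inner] : nullptr;
}
```

Lookups on a linked list are linear in the number of fields, which is one more reason to keep in-memory results small.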

Allocating Setters

Allocating setters are alternatives to the accessor methods that actually allocate memory while they set a value. In two cases - elements of type array and object, these methods are the only way to add values. All of these setters require a MemoryPool. Some of these setters have "Pooled" in the name, and those methods are for string pooling. However, I've removed the string pooling functionality for now since it didn't live up to what I had hoped - it increased memory usage as often as not, and didn't make anything faster either. I may add it back in if I can improve it somehow, but for now don't use these methods, since they basically require string pointers already allocated to a dedicated MemoryPool used to store strings.

Note again that you really shouldn't be building/editing these objects in your code. This is provided in the interest of completeness only. I don't encourage this use.

allocString()

This method sets the JsonElement's value to a newly allocated copy of the passed in string.

Parameters

pool - the MemoryPool to allocate to. You can allocate to whatever pool you like but usually you'll use the same pool for everything. If there is insufficient memory in the pool, the function will return false without allocating.

sz - the null terminated string to allocate a copy of. If this is null, the function will return false without allocating.

Returns

- A bool indicating success or failure. Failure happens if the pool has insufficient space or if a parameter is invalid.

addField() (various overloads)

These methods add a field to an object type.

Parameters

- pool - the MemoryPool to allocate to

- name - the null terminated name of the field - a copy of the name is allocated

- value/pvalue - the value for the field - a copy of the value is allocated

Returns

- A bool indicating success or failure. Failure happens if the pool has insufficient space or if a parameter is invalid.

Fields are stored in a linked list of JsonFieldEntry structs accessed from the pobject() accessor. For this method to succeed, pobject(nullptr) must have already been called on the element.

addItem() (various overloads)

These methods add an item to an array.

Parameters

- pool - the MemoryPool to allocate to

- value/pvalue - the value for the item - a copy of the value is allocated

Returns

- A bool indicating success or failure. Failure happens if the pool has insufficient space or if a parameter is invalid.

Arrays are stored in a linked list of JsonArrayEntry structs accessed from the parray() accessor. For this method to succeed, parray(nullptr) must have already been called on the element.
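If it helps to picture it, here's a rough sketch of the general technique: a fixed-capacity pool handing out memory for linked list entries, with the add returning false when the pool runs dry. SketchPool, SketchArrayEntry and SketchArray are hypothetical names invented for this sketch. They are not the library's MemoryPool or JsonArrayEntry, and the real implementation may well differ.

```cpp
#include <cstddef>
#include <new>

// Hypothetical fixed-capacity bump allocator standing in for a MemoryPool.
template <std::size_t Capacity>
class SketchPool {
public:
    // Returns nullptr when there is not enough room left.
    void* alloc(std::size_t size) {
        const std::size_t align = alignof(std::max_align_t);
        if (size > Capacity) return nullptr;
        size = (size + align - 1) / align * align; // keep later allocations aligned
        if (size > Capacity - m_used) return nullptr;
        void* p = m_data + m_used;
        m_used += size;
        return p;
    }
    void freeAll() { m_used = 0; } // recycles the whole pool at once
    std::size_t used() const { return m_used; }

private:
    alignas(std::max_align_t) unsigned char m_data[Capacity];
    std::size_t m_used = 0;
};

// Hypothetical list node, loosely analogous to an array entry.
struct SketchArrayEntry {
    long long value;
    SketchArrayEntry* pnext;
};

struct SketchArray {
    SketchArrayEntry* phead = nullptr;
    SketchArrayEntry* ptail = nullptr;

    // Appends a copy of value; returns false if the pool is exhausted.
    template <std::size_t Capacity>
    bool addItem(SketchPool<Capacity>& pool, long long value) {
        void* p = pool.alloc(sizeof(SketchArrayEntry));
        if (p == nullptr) return false;
        SketchArrayEntry* entry = new (p) SketchArrayEntry{value, nullptr};
        if (ptail != nullptr) ptail->pnext = entry; else phead = entry;
        ptail = entry;
        return true;
    }
};
```

Note that nothing is freed individually. Entries live until freeAll() is called on the pool, at which point every pointer into it is stale.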

Getting a String Representation

toString()

This method allocates a string containing a minified copy of the JSON represented by the JsonElement and returns it.

Returns

- A null terminated string with the minified JSON data

This method is mostly for query debugging, as this library is primarily geared for querying JSON, not writing it. If it were made for writing, I'd have included streaming writes with it, but doing so requires different code for the Arduino than standard C and C++ libraries provide for. You can use this for display or debugging but it's not really designed for efficiency. It might be best, if you must use it, to dedicate a special pool to it so you can recycle the pool after each allocation. Another, slightly more dangerous option is to use unalloc() on the pool immediately after you've displayed the string. You won't need this as much as you think you might. I never use it myself.

Extracting Data

Extracting data is the primary way of getting data out of JSON streams aside from raw navigation. Once we've navigated, we build an "extraction" which is a series of nested extractor structs that tell the reader what values to retrieve, relative to our current position. That last bit is important, and also useful because it means we can reuse the same extractor for each element of an array for example by simply calling extract() for each item as you're also navigating through the array.

JsonExtractor is our primary structure here, and it can operate in three different ways:

- As an object extractor where it follows one or more fields off an object

- As an array extractor where it follows one or more indexed elements off an array

- As a value extractor where it retrieves the current element as a value.

To be useful, at least one JsonExtractor in form #3 is necessary. Otherwise, no values will be retrieved. The idea here is the first two forms are basically your navigation - they get your cursor where you need it - pointing to the right value, which you then extract with a value extractor.

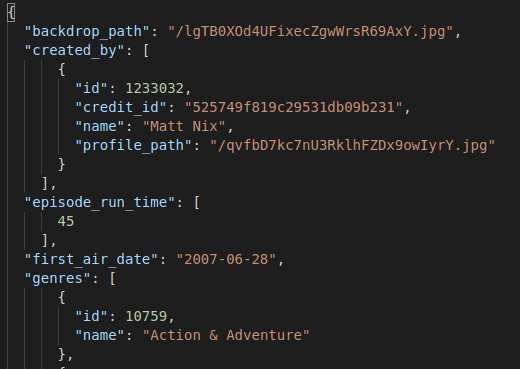

To compose extractions, you basically build paths using the pchildren member which points to an array of JsonExtractor structs. For example, the root level might have a field for created_by which will fetch that field off the object at the reader's current position. It might have a corresponding pchildren entry that fetches index zero, which itself has a pchildren entry which fetches field name before finally getting the value. The JSONPath for that mess would be $.created_by[0].name. Remember, each extractor can specify multiple fields or indices.

The JsonExtractor constructors take three basic forms:

- One that takes fields and children

- One that takes indices and children

- One that takes a pointer to a JsonElement

These correspond to the three extractor types. Because the constructors take children, you'll have to build the children beforehand, meaning you construct your extraction "bottom to top" starting at the leaves and working your way toward the root.

This is actually good, because of the value extractors. They take a pointer to your JsonElements meaning you must declare those elements first. The extraction will then fill those values any time an extract() method is called either off the reader or off an in-memory tree. Usually, I declare all of my JsonElements first, and then build the extraction that fills them.
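The bottom-to-top construction order is easier to see in miniature. The sketch below builds the equivalent of $.created_by[0].name against a tiny in-memory tree. Node, Extractor and extract() here are hypothetical stand-ins written for this illustration, the data is made up, and the field layout of the structs is an assumption. The library's JsonExtractor has its own constructors and does a lot more.

```cpp
#include <cstddef>
#include <cstring>

// Hypothetical miniature document tree; not the library's JsonElement.
struct Node {
    enum Kind { Scalar, Object, Array } kind;
    const char* text;          // Scalar: the value's text
    const char* const* names;  // Object: field names, parallel to children
    const Node* children;      // Object or Array: child nodes
    std::size_t count;         // number of children
};

// Hypothetical extractor with the three roles: fields, indices, value.
struct Extractor {
    enum Kind { Fields, Indices, Value } kind;
    const char* const* fields;   // Fields: names to follow
    const std::size_t* indices;  // Indices: positions to follow
    const Extractor* pchildren;  // one child extractor per field or index
    std::size_t count;           // number of fields or indices
    const char** presult;        // Value: where to store the found text
};

// Walks the extractor tree against the node; results not found stay untouched.
void extract(const Node& node, const Extractor& ex) {
    if (ex.kind == Extractor::Value) {
        if (node.kind == Node::Scalar) *ex.presult = node.text;
    } else if (ex.kind == Extractor::Fields && node.kind == Node::Object) {
        for (std::size_t i = 0; i < ex.count; ++i)
            for (std::size_t j = 0; j < node.count; ++j)
                if (std::strcmp(ex.fields[i], node.names[j]) == 0) {
                    extract(node.children[j], ex.pchildren[i]);
                    break;
                }
    } else if (ex.kind == Extractor::Indices && node.kind == Node::Array) {
        for (std::size_t i = 0; i < ex.count; ++i)
            if (ex.indices[i] < node.count)
                extract(node.children[ex.indices[i]], ex.pchildren[i]);
    }
}

// Made-up data shaped like {"created_by":[{"name":"Example Person"}]}.
const char* extractCreatorName() {
    const Node nameNode = {Node::Scalar, "Example Person", nullptr, nullptr, 0};
    const char* const personNames[] = {"name"};
    const Node person = {Node::Object, nullptr, personNames, &nameNode, 1};
    const Node creators = {Node::Array, nullptr, nullptr, &person, 1};
    const char* const rootNames[] = {"created_by"};
    const Node root = {Node::Object, nullptr, rootNames, &creators, 1};

    const char* name = nullptr;  // the result variable comes first
    // leaf: the value extractor pointing at the result
    const Extractor value = {Extractor::Value, nullptr, nullptr, nullptr, 0, &name};
    // then .name, then [0], then .created_by at the root
    const char* const nameFields[] = {"name"};
    const Extractor byName = {Extractor::Fields, nameFields, nullptr, &value, 1, nullptr};
    const std::size_t firstIndex[] = {0};
    const Extractor byIndex = {Extractor::Indices, nullptr, firstIndex, &byName, 1, nullptr};
    const char* const rootFields[] = {"created_by"};
    const Extractor rootEx = {Extractor::Fields, rootFields, nullptr, &byIndex, 1, nullptr};

    extract(root, rootEx);
    return name;  // points at a string literal, so it outlives this function
}
```

Each extractor needs its child to exist before it can be declared, which is why the declarations read from the leaf to the root.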

With extractions, you can only return a known quantity of values. If you must return a list, try actually returning the array you wanted to build the list from, or better yet, rework your query to use the reader to navigate the array, and then extract() on each item position instead.

So it works like this:

- Decide which values you want

- Declare your JsonElement variables that correspond to #1

- Declare your value extractors (type 3) to point to those elements from #2

- Declare your path extractors using type 1 and type 2 extractors, going from the leaves (#3) to the root, which will be a single JsonExtractor.

- Pass that single root extractor to an extract() method, usually on the reader

Both JsonElement and JsonReader support an extract() method. The methods both work the same way but the JsonReader version requires allocation so it needs a MemoryPool passed to it as well, while the JsonElement version is already dealing with in-memory objects so no further memory allocation is necessary.

Remember that every time you call extract(), one or three things happen, depending on whether you're extracting from JsonElements or from a JsonReader:

- Memory is allocated from the pool to store results (applies only for the reader)

- Your JsonElement values declared in #2 prior will be populated with the latest results of extract()

- If using the reader, the reader will be moved to the end of the current value, object, or array, depending on the kind of extractor the root is.

If it can't find values, that doesn't indicate failure. Values will simply be of type JsonElement::Undefined if it can't find it. Failure happens if there's not enough free space in the pool or if an invalid parameter was passed, or some form of scanning/parsing error occurs.

This is a lot of rote code and it begs for some kind of query code generation app but I'd need to devise my own query language, or somehow figure out a consistent workable subset of JSONPath I could use. I'm kicking around the idea.

I do not want a runtime query engine on top of this even though you might think it's begging for one. The problem is more RAM use, and lag on slower processors when transforming the query into extractor trees. There's another issue in that the extractor can navigate multiple values at once off an object or array but I'm not sure how to represent that in say, JSONPath.

extract()

Extracts values from the current position

Parameters

- pool - the MemoryPool used for allocations (JsonReader only)

- extraction - the root JsonExtractor for the extraction

Returns

- A bool value indicating success or error. If successful, any JsonElements referenced by JsonExtractors will be populated with the found and extracted values, if any.

Error Handling

This library does not use C++ exceptions because the platforms it targets do not always support them. On the reader, you can look at the nodeType() to see if it's JsonReader::Error or you can look at hasError(). If hasError() is true, lastError() will report the error code and value() will report the text of the error message. Sometimes, methods return false on error, or on failure - two different conditions. For example, skipToField() can return false if a field is not found, but that does not necessarily indicate an error occurred.

I have made some attempts to make the parser recover on "recoverable errors" - that is, after an error, you should be able to call most methods again to restart the parse, but it may not work. Intelligent error recovery in parsing can be maddening due to all the corner cases, which can be hard to find so the library is just going to have to mature for this to improve. Some errors are not recoverable, usually because the reader wound up at the end of the document due to an unterminated string, array or object.

hasError()

Indicates whether or not the reader has encountered an error.

Returns

- A bool value indicating whether or not the reader is in an error state.

lastError()

Indicates the last error code that occurred.

Returns

- A JSON_ERROR_XXXX error code, or JSON_ERROR_NO_ERROR if there was none

Again check value() for the error message if there's an error.

The Remainder: Reading and Parsing

Note that I want to discourage the use of these methods in the cases where other methods will do because as a rule, they are slower and tend to take a lot more memory.

A read is a full step of the parse, applying normalization to any encountered data, and loading it into the LexContext. This includes large field values you may want to skip. Reading can sometimes be useful to advance the parser a step, but you should use it sparingly, because while it's still relatively fast, it simply can't compare to the other methods for navigation in terms of just raw efficiency. On the other hand, it will also find badly formed data the quickest, as it does full checking unlike the skipXXXX() methods or even extract().

read()

Reads a step of the parse

Returns

- A bool indicating whether or not there is more data. If it returns false, it may be due to an error. You can call hasError() to disambiguate.

You can call read() in a loop until it returns false to read every node of the document. Once you've read a step of the parse, you can query the parser for various information about the parse.
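Here's the shape of that loop, including the check that tells "ran out of document" apart from "hit an error". Since this has to compile on its own, SketchReader is a hypothetical stand-in that just replays a prepared list of node types. It is not the library's JsonReader, and it only models read(), hasError() and nodeType() as described in this article.

```cpp
#include <cstddef>

// Hypothetical stand-in that replays a prepared node sequence; NOT the library's JsonReader.
class SketchReader {
public:
    enum NodeType : signed char { Initial, Value, Error, EndDocument };

    SketchReader(const NodeType* nodes, std::size_t count)
        : m_nodes(nodes), m_count(count), m_index(0), m_type(Initial) {}

    // Advances one step; returns false at the end of the input or on an error.
    bool read() {
        if (m_type == Error || m_type == EndDocument) return false;
        m_type = m_index < m_count ? m_nodes[m_index++] : EndDocument;
        return m_type != Error && m_type != EndDocument;
    }
    bool hasError() const { return m_type == Error; }
    NodeType nodeType() const { return m_type; }

private:
    const NodeType* m_nodes;
    std::size_t m_count;
    std::size_t m_index;
    NodeType m_type;
};

// Counts the nodes seen by the loop, or returns -1 if the loop stopped on an error.
int countNodes(SketchReader& reader) {
    int count = 0;
    while (reader.read()) ++count;
    return reader.hasError() ? -1 : count;
}
```

The point is the last line of countNodes(): a false from read() ends the loop either way, so you ask hasError() afterward to find out which case you're in.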

nodeType()

Returns a value indicating the type of node the document is on. This starts with JsonReader::Initial, and advances through the various types until it encounters JsonReader::EndDocument or JsonReader::Error.

Returns

- An int8_t value which will be either JsonReader::Initial for the initial node, JsonReader::Value for a scalar value node, JsonReader::Field for a field, JsonReader::Object for the beginning of an object, JsonReader::EndObject for the end of an object, JsonReader::Array for the beginning of an array, JsonReader::EndArray for the end of an array, JsonReader::EndDocument for the end of the document and JsonReader::Error for an error. Note that JsonReader::Initial and JsonReader::EndDocument are never reported while read() returns true, which is to say you'll never actually see them inside a while(read()) loop, which only reports the nodes between those two. Those nodes are effectively "virtual" and do not correspond to an actual position within the data.

objectDepth()

Indicates the number of nested objects that exist as a parent of the current position.

Returns

An unsigned long int value that indicates the object depth. This starts at 0 on the JsonReader::Initial node and moves to 1 upon the first read if there is a root object. At the end of the document, it will be 0.
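To illustrate what that number means, here's a small standalone sketch that tracks object nesting over a JSON string by counting braces outside of string literals. This is only my illustration of the concept, with made-up names (DepthInfo, scanObjectDepth). It does no validation and is not how the reader tracks depth internally.

```cpp
// Deepest object nesting seen, and the depth once the text is exhausted.
struct DepthInfo {
    unsigned long deepest;
    unsigned long atEnd;
};

// Tracks object nesting by counting braces that appear outside of strings.
// A simplification for illustration; it does not validate the JSON.
DepthInfo scanObjectDepth(const char* json) {
    DepthInfo info = {0, 0};
    unsigned long depth = 0;
    bool inString = false;
    for (const char* p = json; *p != '\0'; ++p) {
        if (inString) {
            if (*p == '\\' && p[1] != '\0') ++p;  // skip the escaped character
            else if (*p == '"') inString = false;
        } else if (*p == '"') {
            inString = true;
        } else if (*p == '{') {
            if (++depth > info.deepest) info.deepest = depth;
        } else if (*p == '}' && depth > 0) {
            --depth;
        }
    }
    info.atEnd = depth;
    return info;
}
```

For a well-formed document with a root object, the depth starts at 0, becomes 1 at the root's opening brace, and is back to 0 at the end. Arrays don't count toward it.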

Retrieving Scalar Values

Retrieving values depends on the type of value you're examining. There are several methods for getting typed data out of the reader, or you can use value() to get the value in lexical space (exactly as it appears in the document). The exception is JsonReader::String values, which are undecorated upon being read, so their original lexical form, including escapes and quotes, is discarded to save RAM and improve performance. The following methods operate on values.

value()

Gets the JSON value of the node as a string.

Returns

- A null terminated string indicating the value of the node. string values and field names are already undecorated, meaning all quotes and escapes have been removed or replaced with their actual values.

This only works for node types of JsonReader::Value, JsonReader::Field and JsonReader::Error. All other nodes will return null for this. For anything but strings, which get undecorated, the value comes straight out of the document, so if it's for example, a numeric value, value() will give you the opportunity to view it exactly as it appeared written in the document, so if you ever need to distinguish between say "1.0" and "1.00" you can do so using this accessor.
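The "1.0" versus "1.00" distinction is easy to demonstrate with nothing but the standard library. The two helper names below are mine, invented for this sketch. Once the text is parsed to a double the difference is gone, which is why you'd keep the lexical text if it matters to you.

```cpp
#include <cstdlib>
#include <cstring>

// True when both numeric texts parse to the same double.
bool sameBinaryValue(const char* a, const char* b) {
    return std::strtod(a, nullptr) == std::strtod(b, nullptr);
}

// True only when the two texts are character-for-character identical.
bool sameLexicalValue(const char* a, const char* b) {
    return std::strcmp(a, b) == 0;
}
```

With "1.0" and "1.00" as inputs, sameBinaryValue() reports true while sameLexicalValue() reports false.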

valueType()

Indicates the type of the value under the node.

Returns

- An int8_t value indicating the type of the value under the node, which can be JsonReader::Undefined if the node does not have a value, JsonReader::Null for null, JsonReader::String for a string, JsonReader::Real for a floating point number, JsonReader::Integer for an integer*, or JsonReader::Boolean for a boolean value.

* Once again, integers are not part of the JSON spec, but the reader supports them because doubles on small platforms are sometimes not very precise. Lossy floating point numbers are not appropriate for things like integer ids. Lexically they work because of effectively total precision in lexical space, but in binary space they don't quite pan out because you only have 4 or 8 bytes depending on the platform and an integer itself can be 4 bytes. Obviously, JSON is more of a lexical spec than anything, and binary considerations aren't really part of it. On 32-bit platforms, you might mostly get away with using doubles, which are 64-bit. On 8-bit platforms, not so much.
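You can see the problem for yourself on a PC by forcing an integer through a 4-byte float, which is roughly what happens on platforms where a double is only 4 bytes. This sketch assumes float is an IEEE 754 single precision value with a 24-bit significand, which is the usual case. The function name is mine.

```cpp
#include <cstdint>

// Converts an integer id to a 32-bit float and back again.
std::int32_t roundTripThroughFloat(std::int32_t id) {
    return static_cast<std::int32_t>(static_cast<float>(id));
}
```

Under that assumption, 16777216 (2 to the 24th power) survives the round trip but 16777217 does not, because it needs 25 bits of precision. That's a perfectly ordinary 4-byte integer id that a 4-byte floating point number can't hold.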

realValue()

Indicates the floating point value under the node.

Returns

- A double value, or NAN if no number is available at the current node. How precise it actually is depends on the platform. If you need perfect precision or round tripping, use value() to get the number in lexical space.

integerValue()

Indicates the integer value under the node.

Returns

- A long long int value, or 0 if no number is available at the current node. If the number was floating point, it will be rounded. How large it actually is depends on the platform. Sometimes, it may not be large enough to hold the integer from JSON because arbitrarily large integers are not supported. If you need perfect representation, use value() to get it in lexical space.

booleanValue()

Indicates the boolean value under the node.

Returns

- A bool value, or false if the value is not a boolean

Parsing Elements into In-Memory Trees

You can parse elements into in-memory trees using parseSubtree() which I very nearly did not expose. I recommend you do not use this because it's just inefficient to do this directly. Consider that 9 times out of 10 once you parse that in-memory object, you're going to want to navigate it. Well, why load it into memory to navigate it and extract values from it when you can just call extract()? Extractors enable you to parse only what you need which is the point. It's really not any easier to do this in memory. The only reason I decided to expose it was because I alone cannot determine the full use cases for this project, so some of this I'm leaving up to your best judgement, dear reader. Just bear in mind that it makes no sense to use an ultra low-memory impact library and then load a big in-memory tree with it!

parseSubtree()

Parses the element under the cursor and all its descendants into an in-memory tree

Parameters

- pool - the MemoryPool to use for allocations.

- pelement - the pointer to the JsonElement you want to populate. This element will be filled with the element data at the current location in the document.

Returns

- A bool that indicates true if success or false if it failed. Check lastError() if it failed.

Probably just do not use this method. There are better ways, like extract(). I'd be happy to be wrong however, so if you have some crazy neat idea that requires it, go ahead. Just don't say I didn't warn you.

What's Next?

We've covered so much ground I don't expect you to have absorbed it all yet. Treat the above as reference material. We're going to get to the code now, and all that abstract stuff will finally be concrete.

Coding this Mess

We'll be doing this on a PC for right now. I've tested it with Linux, gcc, PlatformIO and the Arduino IDE, but your mileage may vary. I haven't gotten around to testing it with Microsoft's compiler or whatever you use on Apples. I'm fairly confident they'll work though, and if you must make changes, they'll be very minor. I like to start on PCs with lots of room and use them to profile to figure out how much space I'll actually need.

Note that I've included a json.ino file with the project as well. This is for compilation targeting Arduino devices from inside the Arduino IDE. The code is basically the same as the code in main.cpp, although it was a quick and dirty port. Compile main.cpp to target platforms other than Arduino.

Setup

The first thing we should probably do is declare a proper LexContext the reader can use, and possibly, or rather probably a MemoryPool so we can run extractions with it. I like to start with big values before I construct my queries, just so I'm not dealing with out of memory errors complicating my life. Once I tune the query, I profile and trim them way down especially if I want it to run on little 8-bit Arduinos and things.

Here's some code for the global scope:

ProfilingStaticFileLexContext<2048> fileLC;

StaticMemoryPool<1024*1024> pool;

You don't really need a pool that big. I just hate out of memory errors. With this much space, it's not likely you'd ever encounter them. The whole test document I used for this article only weighs in at about 190kB, or about 240kB as an in-memory tree so it would take some doing to somehow use 1MB. It's a ridiculous value I set just because. On a typical query, each of these may be as small as 128 bytes each or even much smaller. I usually like somewhere around 2kB though with most of it going toward the LexContext, but that's for simple extractions. Profile, profile, profile!

Put thought into your final values, however. You want it to be as big as it can be, but no bigger than that for your platform. That way, it can gracefully handle longer values than you profiled for. It's a bit of art and a bit of experience, plus testing that gets you to the optimal values. The thing is, even on the little 8-bit monsters, they don't have to be optimal, just good enough, leaning toward too big if anything.

data.json: Our Test Data

It might help to download the project and extract data.json in order to follow along. It's almost 200kB of data so I won't be going over it here. If you're going to run it on the Arduino, you'll need to drop the file on an SD card in the root directory and rename it to data.jso because some Arduino SD implementations only support filenames in 8.3 format.

The data.json file contains several combined dumps from tmdb.com which have been merged into one large file for testing. It contains comprehensive information about the USA television series "Burn Notice", including information about each of its 7 seasons, 111 episodes and specials.

Many online JSON repositories, like those typically backed by mongoDB and similar, while not returning 200kB in one go, nevertheless tend to return a lot of JSON for each HTTP request. This is for performance ultimately, so that multiple requests don't need to be made to get related data as it's already included. However, these can be difficult for little devices to process. Processing data that's way too big for your device is what this library shines at, whether it's a server searching and bulk uploading massive JSON data dumps, or whether it's an IoT device querying a "chunky" online webservice like TMDb's service.

In fact, that's what this library was really designed for more than anything - hooking into JSON sources that dump big data and picking through it to get only what you need as efficiently as possible. Consider the data.json to be an example of such a dump.

"Big data" is relative. Obviously, the file is easy for anything laptop size or bigger to chew through but it's quite a feat for an ATmega2560 or even an ESP32. The file's size is what I consider to be perfect for testing - not too big so that it bogs down the development cycle on a desktop when your parse takes 2 minutes, but big enough that I can really test its mettle on an IoT gadget to see how it performs on a less capable device.

Anyway, before we get to the rest of it, know that these queries were written to work against this file. Take a look at it.

Exploring Navigation in Code

Let's start with some simple navigation. Say we wanted the show name, which happens to be on line 45 of the file. We don't even need an extractor for this, which would be JSONPath $.name:

fileLC.resetUsed();

if (!fileLC.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

JsonReader jsonReader(fileLC);

milliseconds start = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

if (jsonReader.skipToFieldValue("name",JsonReader::Siblings)) {

printf("%s\r\n",jsonReader.value());

} else if(JsonReader::Error==jsonReader.nodeType()) {

printf("\r\nError (%d): %s\r\n\r\n",jsonReader.lastError(),jsonReader.value());

return;

}

milliseconds end = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

printf("Scanned %llu characters in %d milliseconds using %d bytes of LexContext\r\n",

fileLC.position()+1,(int)(end.count()-start.count()),(int)fileLC.used());

fileLC.close();

There's a lot of fluff around it but the operative code is simply jsonReader.skipToFieldValue("name",JsonReader::Siblings).

This is fast. It doesn't even register 1ms on my rickety old desktop. It stops scanning as soon as it reads the name's value because there is no reason to go further. This is exactly how it should be. On my ESP32, it takes a little longer:

Burn Notice

Scanned 1118 characters in 15 milliseconds using 12 bytes

15ms off an SD card and 12 bytes is all it took, because "Burn Notice" is 11 characters plus the null terminator.

Now let's do something a little more complicated - navigating to season ordinal index 2 and episode ordinal index 2, and retrieving the name like the JSONPath expression $.seasons[2].episodes[2].name:

fileLC.resetUsed();

if (!fileLC.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

JsonReader jsonReader(fileLC);

milliseconds start = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

if(jsonReader.skipToFieldValue("seasons",JsonReader::Siblings) ) {

if(jsonReader.skipToIndex(2)) {

if(jsonReader.skipToFieldValue("episodes",JsonReader::Siblings) ) {

if(jsonReader.skipToIndex(2)) {

if(jsonReader.skipToFieldValue("name",JsonReader::Siblings)) {

printf("%s\r\n",jsonReader.value());

milliseconds end = duration_cast<milliseconds>

(system_clock::now().time_since_epoch());

printf("Scanned %llu characters in %d milliseconds using %d bytes of LexContext\r\n",

fileLC.position()+1,

(int)(end.count()-start.count()),(int)fileLC.used());

}

}

}

}

}

if(jsonReader.hasError()) {

printf("Error: (%d) %s\r\n",(int)jsonReader.lastError(),jsonReader.value());

}

fileLC.close();

The output from this on my desktop is:

Trust Me

Scanned 42491 characters in 1 milliseconds using 9 bytes of LexContext

Now we'll try searching descendants. We're going to get the names of the show's production companies. The equivalent JSONPath would be $.production_companies..name:

fileLC.resetUsed();

if (!fileLC.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

JsonReader jsonReader(fileLC);

size_t count = 0;

milliseconds start = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

if (jsonReader.skipToFieldValue("production_companies",JsonReader::Siblings)) {

unsigned long int depth=0;

while (jsonReader.skipToFieldValue("name", JsonReader::Descendants,&depth)) {

++count;

printf("%s\r\n",jsonReader.value());

}

}

milliseconds end = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

printf("Scanned %llu characters and found %d companies in %d milliseconds using %d bytes of LexContext\r\n",

fileLC.position()+1,(int)count,

(int)(end.count()-start.count()),(int)fileLC.used());

fileLC.close();

Notice how we pass the address of an unsigned long int depth variable to skipToFieldValue() when we use JsonReader::Descendants. This is required. We don't have to do anything with it, but it should be scoped right alongside our while loop, assuming one is being used. All this does is mark where we were in the document so we can get back to the same level in the hierarchy. It's a cookie. Its value is of no use to you and should not be written to. Consider it opaque.

Stepping it Up: Navigation With a Simple Extraction

Here, we are going to perform basically the equivalent of the following JSONPath: $..episodes[*].name,season_number,episode_number except all of our field values will be part of a tuple on the same row, instead of 3 rows for each result like JSONPath would give you.

We don't want this: (JSONPath result)

[

"The Fall of Sam Axe",

0,

1,

"Burn Notice",

1,

1,

"Identity",

1,

2,

...

What we want is more like this:

0,1,"The Fall of Sam Axe"

1,1,"Burn Notice"

1,2,"Identity"

Same data, but a better arrangement where it's 1 row per result instead of 3. I also moved the season and episode numbers to the beginning.

For each row we get back, we're essentially going to printf("S%02dE%02d %s\r\n",season_number,episode_number,name) which will give us something like "S06E18 Game Change" and then we'll advance to the next row.

This will be easier if we break it down into simple steps. First, create your JsonElement variables to hold your data. We want seasonNumber, episodeNumber, and name:

JsonElement seasonNumber;

JsonElement episodeNumber;

JsonElement name;

As per earlier, we have three types of extractors and which type is determined by how they were constructed. We have object extractors, array extractors and value extractors.

Now let's create our object extractor:

const char* fields[] = {

"season_number",

"episode_number",

"name"

};

JsonExtractor children[] = {

JsonExtractor(&seasonNumber),

JsonExtractor(&episodeNumber),

JsonExtractor(&name)

};

JsonExtractor extraction(fields,3,children);

Remember, an object extractor is any JsonExtractor that has been initialized with an array of fields. An array extractor is one that has been initialized with an array of indices and a value extractor is one that has been initialized with a pointer to a single JsonElement.

Basically all this says is grab the three named fields off the object and stuff their associated values into seasonNumber, episodeNumber and name.

Now that we have that we just need to move through the document looking for episodes - we use the JsonReader::Forward axis for that since starting from the root it's the equivalent of $..episodes and it's fast. The one downside is it will always keep going until the end of the document. There is no stopping condition other than finding a match. In this case, it's acceptable because there's really not much left of the document after the last episodes entry.

Next when we find episodes, we assume it contains an array like "episodes": [ ... ]

while(jsonReader.skipToFieldValue("episodes",JsonReader::Forward)) {

while(!jsonReader.hasError() && JsonReader::EndArray!=jsonReader.nodeType()) {

if(!jsonReader.read())

break;

...

Once we're on the array element, we simply perform the extraction:

if(!jsonReader.extract(pool,extraction))

break;

And then once our extraction is run, we have access to the values in seasonNumber, episodeNumber and name. Now we can format them and print them:

printf("S%02dE%02d %s\r\n",

(int)seasonNumber.integer(),

(int)episodeNumber.integer(),

name.string());

One thing I didn't cover here yet is the pool. We're using it in extract() but if we don't free it after (or before) every extract() call, we'll wind up adding all of the results to the pool even after we don't need them anymore.

Basically, we only need the values around long enough to print them. Once we've printed them, we can free their memory. If we don't, we'll end up using up our entire pool pretty quickly. The moral of this is to always freeAll() on your pool as soon as you don't need your data anymore. Don't wait for out of memory errors!

Putting it all together:

pool.freeAll();

fileLC.resetUsed();

if (!fileLC.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

JsonElement seasonNumber;

JsonElement episodeNumber;

JsonElement name;

const char* fields[] = {

"season_number",

"episode_number",

"name"

};

JsonExtractor children[] = {

JsonExtractor(&seasonNumber),

JsonExtractor(&episodeNumber),

JsonExtractor(&name)

};

JsonExtractor extraction(fields,3,children);

JsonReader jsonReader(fileLC);

size_t maxUsedPool = 0;

size_t episodes = 0;

milliseconds start = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

while(jsonReader.skipToFieldValue("episodes",JsonReader::Forward)) {

while(!jsonReader.hasError() && JsonReader::EndArray!=jsonReader.nodeType()) {

if(!jsonReader.read())

break;

if(pool.used()>maxUsedPool)

maxUsedPool = pool.used();

pool.freeAll();

if(!jsonReader.extract(pool,extraction))

break;

++episodes;

printf("S%02dE%02d %s\r\n",

(int)seasonNumber.integer(),

(int)episodeNumber.integer(),

name.string());

}

}

if(jsonReader.hasError()) {

printf("\r\nError (%d): %s\r\n\r\n",jsonReader.lastError(),jsonReader.value());

return;

} else {

milliseconds end = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

printf("Scanned %d episodes and %llu characters in %d milliseconds using %d bytes of LexContext and %d bytes of the pool\r\n",

(int)episodes,fileLC.position()+1,(int)(end.count()-start.count()),

(int)fileLC.used(),(int)maxUsedPool);

}

fileLC.close();

Notice we didn't use an array extractor even though we were dealing with an array. The problem is that extractors can only deal with one-to-one mappings between values in the extraction and your JsonElement variables. To return an indeterminate number of values, you'd need an indeterminate number of variables! Normally, the solution is ... using arrays! but here, we're trying to reduce memory and handle really long data, so I don't want to store arrays using this library. If you want to use an extractor with an array, it must have one or more fixed indices to map, yielding a determinate number of elements. In other words, there's no way to set indices to "*" or "all" and there never will be.

Therefore, you must navigate any array where you want to extract an arbitrary number of values yourself.

The same limitation applies to objects, but it's not something that one might typically notice unless you particularly miss having a wildcard match for the field name, because that's the only way the same problem as with arrays would be introduced.

This is by design. Think about your flow. Are you streaming? If you're storing your resultset in memory and working off of that, you are not streaming. If you're storing an array rather than moving through it, that's the same thing, and it's not streaming. Process a single result, discard it and move on to the next. The bottom line is that this means not keeping your results around in arrays.

Now, you are perfectly capable of extracting whole JSON arrays using value extractors and just getting the whole thing as a JsonElement parray() but again, there's no sense in using something with a tiny memory footprint if you use it in such a way that it requires more RAM than it was ever designed for. It's self-defeating to use it this way. If you find yourself extracting non-scalar values like arrays and objects you might want to rethink how you're performing your query. Lean on the reader more, and the in-memory elements less.

More Complicated Extraction

We're going to get several fields off the root object. Because of this, we need to do an extraction off the root. Normally, we prefer to avoid this since extracting off the root requires scanning all the way to the end of the document. However, due to the earlier issue I raised about the JSON spec and indeterminate field order, you don't have a better option. Luckily, even though we have to scan the whole document, it's still pretty efficient since at least we're not normalizing all of it.

We're also going to get field values and array elements from fields underneath the object, requiring the reader to dive deeper into the hierarchy to dig things out. This is where we can no longer write a JSONPath expression equivalent. There is no corresponding JSONPath for this query because JSONPath cannot support this type of extraction directly - it's actually several JSONPath queries:

$.id
$.name
$.number_of_episodes
$.last_episode_to_air.name
$.created_by[0].name
$.created_by[0].profile_path

Note though that below, we effectively create the above queries from right to left because of the way we must nest structures:

// variables that receive the extracted values
JsonElement creditName;
JsonElement creditProfilePath;
JsonElement id;
JsonElement name;
JsonElement numberOfEpisodes;
JsonElement lastEpisodeToAirName;

// innermost first: the "name" and "profile_path" fields of one created_by entry
const char* createdByFields[] = {
    "name",
    "profile_path"
};
JsonExtractor createdByExtractions[] = {
    JsonExtractor(&creditName),
    JsonExtractor(&creditProfilePath)
};
JsonExtractor createdByExtraction(
    createdByFields,
    2,
    createdByExtractions
);

// created_by[0]: apply the object extraction above to array index 0
JsonExtractor createdByArrayExtractions[] = {
    createdByExtraction
};
size_t createdByArrayIndices[] = {0};
JsonExtractor createdByArrayExtraction(
    createdByArrayIndices,
    1,
    createdByArrayExtractions
);

// last_episode_to_air.name
const char* lastEpisodeFields[] = {"name"};
JsonExtractor lastEpisodeExtractions[] = {
    JsonExtractor(&lastEpisodeToAirName)
};
JsonExtractor lastEpisodeExtraction(
    lastEpisodeFields,
    1,
    lastEpisodeExtractions
);

// outermost: the fields on the root object, in the same order as showExtractions
const char* showFields[] = {
    "id",
    "name",
    "created_by",
    "number_of_episodes",
    "last_episode_to_air"
};
JsonExtractor showExtractions[] = {
    JsonExtractor(&id),
    JsonExtractor(&name),
    createdByArrayExtraction,
    JsonExtractor(&numberOfEpisodes),
    lastEpisodeExtraction
};
JsonExtractor showExtraction(
    showFields,
    5,
    showExtractions
);

And now from the root, you can call jsonReader.extract(showExtraction) and it will fill all of your JsonElement variables.

Doing this for me yields:

id: 2919
name: "Burn Notice"
created_by: [0]: name: "Matt Nix"
profile_path: "/qvfbD7kc7nU3RklhFZDx9owIyrY.jpg"
number_of_episodes: 111
last_episode_to_air: name: "Reckoning"

Parsed 191288 characters in 4 milliseconds using 33 bytes of LexContext and 64 bytes of the pool.

main.cpp's dumpExtraction() routine is not exactly pretty printed but all the data is there.

Remember that extractions are relative to the current document location they are performed on. This one was relative to the root only because the root was what was under the cursor when we called extract(). This is useful because as we did with the episodes earlier, you can reexecute the same extraction from multiple places in the document by simply navigating to the desired location, extracting, then navigating and doing it again.

Hopefully, the nested structures aren't overly confusing. I will most likely end up making some kind of code generation tool for creating the extraction code for you since it's so regular, but I haven't for now.
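One way to read the nesting is to walk it from the outermost structure inward and write down the path each leaf corresponds to. The sketch below does that with a stand-in tree. PathNode, leaf, objectStep, arrayStep, listPaths and buildShowQuery are all hypothetical names invented for this illustration, not part of the library, and nothing here parses JSON. It only mirrors the shape of the extractor nest above to show how that shape relates to the list of JSONPath queries.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the nesting; this is NOT JsonExtractor.
// A node is a leaf (captures a value), an object step (named fields),
// or an array step (fixed indices), with one child per field or index.
struct PathNode {
    enum Kind { Leaf, Object, Array } kind;
    std::vector<std::string> fields;   // used when kind == Object
    std::vector<std::size_t> indices;  // used when kind == Array
    std::vector<PathNode> children;
};

inline PathNode leaf() { return PathNode{PathNode::Leaf, {}, {}, {}}; }

inline PathNode objectStep(std::vector<std::string> fields,
                           std::vector<PathNode> children) {
    return PathNode{PathNode::Object, std::move(fields), {},
                    std::move(children)};
}

inline PathNode arrayStep(std::vector<std::size_t> indices,
                          std::vector<PathNode> children) {
    return PathNode{PathNode::Array, {}, std::move(indices),
                    std::move(children)};
}

// Appends one JSONPath-style string to 'out' for every leaf under 'node'.
inline void listPaths(const PathNode& node, const std::string& prefix,
                      std::vector<std::string>& out) {
    switch (node.kind) {
    case PathNode::Leaf:
        out.push_back(prefix);
        break;
    case PathNode::Object:
        assert(node.fields.size() == node.children.size());
        for (std::size_t i = 0; i < node.fields.size(); ++i)
            listPaths(node.children[i], prefix + "." + node.fields[i], out);
        break;
    case PathNode::Array:
        assert(node.indices.size() == node.children.size());
        for (std::size_t i = 0; i < node.indices.size(); ++i)
            listPaths(node.children[i],
                      prefix + "[" + std::to_string(node.indices[i]) + "]",
                      out);
        break;
    }
}

// Same shape as the extraction above, built innermost first.
inline PathNode buildShowQuery() {
    PathNode createdBy = objectStep({"name", "profile_path"},
                                    {leaf(), leaf()});
    PathNode createdByArray = arrayStep(std::vector<std::size_t>{0},
                                        std::vector<PathNode>{createdBy});
    PathNode lastEpisode = objectStep({"name"}, {leaf()});
    return objectStep(
        {"id", "name", "created_by", "number_of_episodes",
         "last_episode_to_air"},
        {leaf(), leaf(), createdByArray, leaf(), lastEpisode});
}
```

Calling listPaths(buildShowQuery(), "$", paths) fills paths with the six queries listed earlier, in field order. The regularity of that walk is also why generating the extraction code mechanically seems plausible.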

The great thing about these extractions is that they effectively "program" the reader to skip over irrelevant parts of the document, and navigate to relevant parts, retrieving all the values for you. The structures may seem complicated, but it's a whole lot less complicated (and more efficient) to use extract() than it is to drive the reader manually. It also avoids potentially loading and normalizing data you do not need.

Conclusion

Using this library requires a significant paradigm shift compared to how JSON libraries typically parse and process their data. The hope is that it's worth it because it enables extremely efficient selective bulk loading on any machine and efficient queries even on less capable CPUs and systems with very small amounts of RAM.

In this paradigm, you build an "extraction", navigate, and then extract() using your extraction. You then free your results from memory and move on to the next part of the document you wish to extract in a streaming, forward only fashion, and repeat the extract(), continuing this process until you have all your results.

By designing your queries (which entail both navigation and extraction) appropriately, you can reap incredible performance benefits and run virtually anywhere, even when your input data is measured in gigabytes.

I hope you spend some time with this library, and you grow to appreciate this paradigm. I see it as a particularly effective method of processing JSON.

Limitations

- This library currently does not support Unicode, but UTF-8 support is planned.

- There currently is no way to stream large scalar values like base64 encoded string BLOBs out of the document. Usually, this means you'll have to skip them because you won't have enough RAM to load a BLOB all at once. I plan to add this facility eventually.

- This library does not handle poorly formed documents as well as other libraries due to the type of skipping and scanning it does. This is by design. The alternative is more normalization, more RAM use and less efficiency. If you want to make a validator, you can with this library, using read() and a stack, but you'll lose the performance. JSON is almost always machine generated so, unlike with HTML, well-formedness errors aren't terribly common in the wild. The best case is the code finds the error right away. The worst case is this code will miss some errors altogether. The usual case is it won't find the error until later, and then it may return a weird error due to the cascading effect of unbalanced nodes.

- I work and have limited time to create a full test suite for this.
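The stack-based check mentioned in the limitations can be sketched roughly as follows. This is a simplified illustration that works on raw characters from a std::istream rather than on the library's read() calls, and isNestingBalanced is a hypothetical name. It only verifies that objects and arrays open and close in matching order, which is the kind of error that otherwise surfaces late as "unbalanced nodes"; it is nowhere near a full validator.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <vector>

// Hypothetical sketch, not part of the library. Checks only that
// '{' / '}' and '[' / ']' are properly nested. The contents of strings,
// including escaped quotes, are skipped so brackets inside them are
// ignored. Everything else (commas, colons, literals) is not checked.
inline bool isNestingBalanced(std::istream& in) {
    std::vector<char> expected;  // stack of closers we still need to see
    bool inString = false;
    bool escaped = false;
    char ch = 0;
    while (in.get(ch)) {
        if (inString) {
            if (escaped) escaped = false;          // char after a backslash
            else if (ch == '\\') escaped = true;
            else if (ch == '"') inString = false;  // closing quote
            continue;
        }
        switch (ch) {
        case '"': inString = true; break;
        case '{': expected.push_back('}'); break;
        case '[': expected.push_back(']'); break;
        case '}':
        case ']':
            // a closer must match the most recently opened container
            if (expected.empty() || expected.back() != ch) return false;
            expected.pop_back();
            break;
        default:
            break;
        }
    }
    return !inString && expected.empty();
}

// Usage sketch.
inline void isNestingBalancedExample() {
    std::istringstream good(R"({"a":[1,2,{"b":"]"}]})");
    std::istringstream bad(R"({"a":[1,2})");
    assert(isNestingBalanced(good));
    assert(!isNestingBalanced(bad));
}
```

Note the trade-off the limitation describes: the stack grows with nesting depth and every character is examined, which is exactly the cost the library's skipping avoids. On a very small target, a fixed-size array with a depth limit would be a more fitting stack than std::vector.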

History

- 22nd December, 2020 - Initial submission
