Here we add webcam capabilities to our object recognition model code, using the HTML5 Webcam API with TensorFlow.js to detect face touches.

TensorFlow + JavaScript. The most popular, cutting-edge AI framework now supports the most widely used programming language on the planet, so let’s make magic happen through deep learning right in our web browser, GPU-accelerated via WebGL using TensorFlow.js!

One of the best parts of modern HTML5 web browsers is easy access to a variety of APIs, such as the webcam and audio. With COVID-19 impacting public health, a group of creative developers used this to build an app called donottouchyourface.com, which helps people reduce the risk of getting sick by learning to stop touching their faces. In this article, we will use everything we’ve learned so far about computer vision in TensorFlow.js to build our own version of this app.

Starting Point

We are going to add webcam capabilities to our object recognition model code, and will then capture frames in real time for training and predicting face touch actions. This code will look familiar if you followed along with the previous article. Here is what the resulting code will do:

- Import TensorFlow.js and TensorFlow’s tf-data.js

- Define Touch vs. Not-Touch category labels

- Add a video element for the webcam

- Run the model prediction every 200 ms after it’s been trained for the first time

- Show the prediction result

- Load a pre-trained MobileNet model and prepare for transfer learning

- Train and classify custom objects in images

- Skip disposing of image and target samples in the training process to keep them for multiple training runs

This will be our starting point for this project, before we add the real-time webcam functionality:

<html>

<head>

<title>Face Touch Detection with TensorFlow.js Part 1: Using Real-Time Webcam Data with Deep Learning</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-data@2.0.0/dist/tf-data.min.js"></script>

<style>

img, video {

object-fit: cover;

}

</style>

</head>

<body>

<video autoplay playsinline muted id="webcam" width="224" height="224"></video>

<h1 id="status">Loading...</h1>

<script>

let touch = [];

let notouch = [];

const labels = [

"Touch!",

"No Touch"

];

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

async function predictImage() {

if( !hasTrained ) { return; }

const img = await getWebcamImage();

let result = tf.tidy( () => {

const input = img.reshape( [ 1, 224, 224, 3 ] );

return model.predict( input );

});

img.dispose();

let prediction = await result.data();

result.dispose();

let id = prediction.indexOf( Math.max( ...prediction ) );

setText( labels[ id ] );

}

function createTransferModel( model ) {

const bottleneck = model.getLayer( "dropout" );

const baseModel = tf.model({

inputs: model.inputs,

outputs: bottleneck.output

});

for( const layer of baseModel.layers ) {

layer.trainable = false;

}

const newHead = tf.sequential();

newHead.add( tf.layers.flatten( {

inputShape: baseModel.outputs[ 0 ].shape.slice( 1 )

} ) );

newHead.add( tf.layers.dense( { units: 100, activation: 'relu' } ) );

newHead.add( tf.layers.dense( { units: 100, activation: 'relu' } ) );

newHead.add( tf.layers.dense( { units: 10, activation: 'relu' } ) );

newHead.add( tf.layers.dense( {

units: 2,

kernelInitializer: 'varianceScaling',

useBias: false,

activation: 'softmax'

} ) );

const newOutput = newHead.apply( baseModel.outputs[ 0 ] );

const newModel = tf.model( { inputs: baseModel.inputs, outputs: newOutput } );

return newModel;

}

async function trainModel() {

hasTrained = false;

setText( "Training..." );

const imageSamples = [];

const targetSamples = [];

for( let i = 0; i < touch.length; i++ ) {

let result = touch[ i ];

imageSamples.push( result );

targetSamples.push( tf.tensor1d( [ 1, 0 ] ) );

}

for( let i = 0; i < notouch.length; i++ ) {

let result = notouch[ i ];

imageSamples.push( result );

targetSamples.push( tf.tensor1d( [ 0, 1 ] ) );

}

const xs = tf.stack( imageSamples );

const ys = tf.stack( targetSamples );

model.compile( { loss: "meanSquaredError", optimizer: "adam", metrics: [ "acc" ] } );

await model.fit( xs, ys, {

epochs: 30,

shuffle: true,

callbacks: {

onEpochEnd: ( epoch, logs ) => {

console.log( "Epoch #", epoch, logs );

}

}

});

hasTrained = true;

}

const mobilenet = "https://storage.googleapis.com/tfjs-models/tfjs/mobilenet_v1_0.25_224/model.json";

let model = null;

let hasTrained = false;

(async () => {

model = await tf.loadLayersModel( mobilenet );

model = createTransferModel( model );

setInterval( predictImage, 200 );

})();

</script>

</body>

</html>

Using the HTML5 Webcam API With TensorFlow.js

Starting a webcam is quite simple in JavaScript once you have a code snippet for it. Here is a utility function for you to start it and request access from the user:

async function setupWebcam() {

return new Promise( ( resolve, reject ) => {

const webcamElement = document.getElementById( "webcam" );

const navigatorAny = navigator;

navigator.getUserMedia = navigator.getUserMedia ||

navigatorAny.webkitGetUserMedia || navigatorAny.mozGetUserMedia ||

navigatorAny.msGetUserMedia;

if( navigator.getUserMedia ) {

navigator.getUserMedia( { video: true },

stream => {

webcamElement.srcObject = stream;

webcamElement.addEventListener( "loadeddata", resolve, false );

},

error => reject( error ));

}

else {

reject();

}

});

}
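The callback-style `navigator.getUserMedia` used above is a legacy API; current browsers also expose a promise-based `navigator.mediaDevices.getUserMedia`. Below is a minimal sketch of the same helper written against that API. The name `setupWebcamModern` and its two parameters are hypothetical (they are not part of the original code); the video element and the media devices object are passed in so the function does not depend on browser globals.

```javascript
// Hypothetical alternative to setupWebcam(), using the promise-based API.
// `videoElement` and `mediaDevices` are parameters introduced for this
// sketch; in a browser you would pass the <video> element and
// navigator.mediaDevices.
async function setupWebcamModern( videoElement, mediaDevices ) {
    if( !mediaDevices || typeof mediaDevices.getUserMedia !== "function" ) {
        throw new Error( "getUserMedia is not available" );
    }
    // Ask the user for camera access; this rejects if permission is denied
    const stream = await mediaDevices.getUserMedia( { video: true } );
    videoElement.srcObject = stream;
    // Wait until the first video frame has loaded
    await new Promise( resolve => {
        videoElement.addEventListener( "loadeddata", resolve, { once: true } );
    });
    return stream;
}
```

In the page, this could be called as `await setupWebcamModern( document.getElementById( "webcam" ), navigator.mediaDevices );` in place of `await setupWebcam();`.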

Now call the setupWebcam() function in your code after the model has been created, and the live video feed will start playing on the webpage. Let’s also initialize a global webcam object using the tf-data library, so we can use its helper function to easily create tensors from a webcam frame.

let webcam = null;

(async () => {

model = await tf.loadLayersModel( mobilenet );

model = createTransferModel( model );

await setupWebcam();

webcam = await tf.data.webcam( document.getElementById( "webcam" ) );

setInterval( predictImage, 200 );

})();
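One thing to keep in mind with `setInterval` and an async function: if a prediction ever takes longer than 200 ms, the next call starts before the previous one has finished. A minimal sketch of one way to avoid that is to schedule the next run only after the current one completes. The helper name `startLoop` is hypothetical and not part of the original code.

```javascript
// Hypothetical helper: run an async task repeatedly, waiting `delayMs`
// after each run finishes before starting the next one, so that two
// runs never overlap. Returns a function that stops the loop.
function startLoop( task, delayMs ) {
    let running = true;
    async function tick() {
        if( !running ) { return; }
        try {
            await task();
        } catch( err ) {
            // Log the error and keep the loop alive
            console.error( "Loop task failed:", err );
        }
        if( running ) { setTimeout( tick, delayMs ); }
    }
    tick();
    return () => { running = false; };
}
```

In the code above, `startLoop( predictImage, 200 )` could stand in for `setInterval( predictImage, 200 )`. Note the timing differs slightly: the 200 ms pause is measured from the end of one prediction rather than from its start.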

Capturing a frame with the TensorFlow webcam helper and normalizing the pixels can be done in a function like this:

async function getWebcamImage() {

const img = ( await webcam.capture() ).toFloat();

const normalized = img.div( 127 ).sub( 1 );

return normalized;

}
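To see what the normalization does, here is the same arithmetic applied to plain numbers instead of tensors. This is only an illustration; `normalizePixel` and its `half` parameter are hypothetical names introduced for this sketch.

```javascript
// Same arithmetic as img.div( 127 ).sub( 1 ), on a single pixel value.
// `half` is the divisor; the article's code uses 127.
function normalizePixel( value, half = 127 ) {
    return value / half - 1;
}

console.log( normalizePixel( 0 ) );          // -1
console.log( normalizePixel( 127 ) );        // 0
console.log( normalizePixel( 255 ) );        // slightly above 1
console.log( normalizePixel( 255, 127.5 ) ); // 1
```

So pixel values from 0 to 255 end up in approximately the range -1 to 1. Dividing by 127.5 instead would map 255 to exactly 1; the small overshoot with 127 is unlikely to matter here, as long as the same normalization is used for both training and prediction.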

Then let’s use this function to capture images for training data in another function:

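async function captureSample( category ) {

if( category === 0 ) {

touch.push( await getWebcamImage() );

setText( "Captured: " + labels[ category ] + " x" + touch.length );

}

else {

notouch.push( await getWebcamImage() );

setText( "Captured: " + labels[ category ] + " x" + notouch.length );

}

}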

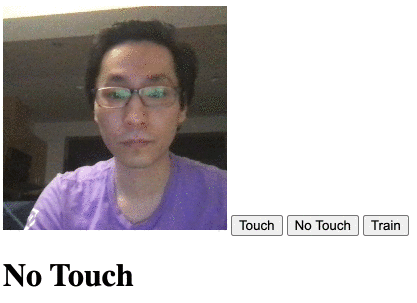

Finally, let’s add three buttons to the page, below the webcam video element, to activate the sample image capturing and model training:

<video autoplay playsinline muted id="webcam" width="224" height="224"></video>

<button onclick="captureSample(0)">Touch</button>

<button onclick="captureSample(1)">No Touch</button>

<button onclick="trainModel()">Train</button>

<h1 id="status">Loading...</h1>

Detecting Face Touches

With the webcam functionality added, we’re ready to try our face touch detection.

Open the webpage and use the Touch and No Touch buttons while you are in the camera view to capture different sample images, then click Train. Predictions start appearing once training has finished. In my tests, capturing about 10-15 samples each for Touch and No Touch was enough for detection to start working quite well.

Technical Footnotes

- Because we are likely training our model on just a small sample, without photos of many different people, the trained AI’s accuracy will probably be lower when other people try your app

- The AI may not distinguish depth very well, and could behave more like Face Obstructed Detection than Face Touch Detection

- We might have named the buttons and the corresponding categories Touch vs. No Touch, but the model does not recognize the implications; it could be trained on any two variations of captured photos, like Dog vs Cat or Circle vs Rectangle
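As the last footnote says, the model only outputs two scores, and the label text is attached by array index afterwards. The sketch below shows that lookup on plain arrays, with an optional confidence threshold added for illustration. `pickLabel` and `minConfidence` are hypothetical names and are not part of the original code, which always shows the highest-scoring label.

```javascript
// Hypothetical helper: map model scores to a label by index.
// Returns null when the best score is below `minConfidence`.
function pickLabel( scores, labels, minConfidence = 0 ) {
    let best = 0;
    for( let i = 1; i < scores.length; i++ ) {
        if( scores[ i ] > scores[ best ] ) { best = i; }
    }
    return scores[ best ] >= minConfidence ? labels[ best ] : null;
}

const exampleLabels = [ "Touch!", "No Touch" ];
console.log( pickLabel( [ 0.9, 0.1 ], exampleLabels ) );        // "Touch!"
console.log( pickLabel( [ 0.2, 0.8 ], exampleLabels ) );        // "No Touch"
console.log( pickLabel( [ 0.55, 0.45 ], exampleLabels, 0.6 ) ); // null
```

Swapping the strings in the labels array for "Dog" and "Cat" would change nothing about the model itself, which is exactly why the result depends entirely on what you capture for each button.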

Finish Line

For your reference, here is the full code:

<html>

<head>

<title>Face Touch Detection with TensorFlow.js Part 1: Using Real-Time Webcam Data with Deep Learning</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-data@2.0.0/dist/tf-data.min.js"></script>

<style>

img, video {

object-fit: cover;

}

</style>

</head>

<body>

<video autoplay playsinline muted id="webcam" width="224" height="224"></video>

<button onclick="captureSample(0)">Touch</button>

<button onclick="captureSample(1)">No Touch</button>

<button onclick="trainModel()">Train</button>

<h1 id="status">Loading...</h1>

<script>

let touch = [];

let notouch = [];

const labels = [

"Touch!",

"No Touch"

];

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

async function predictImage() {

if( !hasTrained ) { return; }

const img = await getWebcamImage();

let result = tf.tidy( () => {

const input = img.reshape( [ 1, 224, 224, 3 ] );

return model.predict( input );

});

img.dispose();

let prediction = await result.data();

result.dispose();

let id = prediction.indexOf( Math.max( ...prediction ) );

setText( labels[ id ] );

}

function createTransferModel( model ) {

const bottleneck = model.getLayer( "dropout" );

const baseModel = tf.model({

inputs: model.inputs,

outputs: bottleneck.output

});

for( const layer of baseModel.layers ) {

layer.trainable = false;

}

const newHead = tf.sequential();

newHead.add( tf.layers.flatten( {

inputShape: baseModel.outputs[ 0 ].shape.slice( 1 )

} ) );

newHead.add( tf.layers.dense( { units: 100, activation: 'relu' } ) );

newHead.add( tf.layers.dense( { units: 100, activation: 'relu' } ) );

newHead.add( tf.layers.dense( { units: 10, activation: 'relu' } ) );

newHead.add( tf.layers.dense( {

units: 2,

kernelInitializer: 'varianceScaling',

useBias: false,

activation: 'softmax'

} ) );

const newOutput = newHead.apply( baseModel.outputs[ 0 ] );

const newModel = tf.model( { inputs: baseModel.inputs, outputs: newOutput } );

return newModel;

}

async function trainModel() {

hasTrained = false;

setText( "Training..." );

const imageSamples = [];

const targetSamples = [];

for( let i = 0; i < touch.length; i++ ) {

let result = touch[ i ];

imageSamples.push( result );

targetSamples.push( tf.tensor1d( [ 1, 0 ] ) );

}

for( let i = 0; i < notouch.length; i++ ) {

let result = notouch[ i ];

imageSamples.push( result );

targetSamples.push( tf.tensor1d( [ 0, 1 ] ) );

}

const xs = tf.stack( imageSamples );

const ys = tf.stack( targetSamples );

model.compile( { loss: "meanSquaredError", optimizer: "adam", metrics: [ "acc" ] } );

await model.fit( xs, ys, {

epochs: 30,

shuffle: true,

callbacks: {

onEpochEnd: ( epoch, logs ) => {

console.log( "Epoch #", epoch, logs );

}

}

});

hasTrained = true;

}

const mobilenet = "https://storage.googleapis.com/tfjs-models/tfjs/mobilenet_v1_0.25_224/model.json";

let model = null;

let hasTrained = false;

async function setupWebcam() {

return new Promise( ( resolve, reject ) => {

const webcamElement = document.getElementById( "webcam" );

const navigatorAny = navigator;

navigator.getUserMedia = navigator.getUserMedia ||

navigatorAny.webkitGetUserMedia || navigatorAny.mozGetUserMedia ||

navigatorAny.msGetUserMedia;

if( navigator.getUserMedia ) {

navigator.getUserMedia( { video: true },

stream => {

webcamElement.srcObject = stream;

webcamElement.addEventListener( "loadeddata", resolve, false );

},

error => reject( error ));

}

else {

reject();

}

});

}

async function getWebcamImage() {

const img = ( await webcam.capture() ).toFloat();

const normalized = img.div( 127 ).sub( 1 );

return normalized;

}

async function captureSample( category ) {

if( category === 0 ) {

touch.push( await getWebcamImage() );

setText( "Captured: " + labels[ category ] + " x" + touch.length );

}

else {

notouch.push( await getWebcamImage() );

setText( "Captured: " + labels[ category ] + " x" + notouch.length );

}

}

let webcam = null;

(async () => {

model = await tf.loadLayersModel( mobilenet );

model = createTransferModel( model );

await setupWebcam();

webcam = await tf.data.webcam( document.getElementById( "webcam" ) );

setInterval( predictImage, 200 );

})();

</script>

</body>

</html>

What’s Next? Could We Detect Face Touches Without Training?

This time, we learned how to use the browser’s webcam functionality to train a model and recognize frames from real-time video, entirely in the browser. Wouldn’t it be nicer if users didn’t have to touch their faces at all to start using this app?

Follow along with the next article in this series, where we will use the pre-trained BodyPix model for detection.

Raphael Mun is a tech entrepreneur and educator who has been developing software professionally for over 20 years. He currently runs Lemmino, Inc and teaches and entertains through his Instafluff livestreams on Twitch building open source projects with his community.
